TensorFlow’s machine learning platform has a comprehensive, flexible ecosystem of tools, libraries, and community resources that lets researchers push the state of the art in ML and lets developers easily build and deploy ML-powered applications.

TensorFlow 2.0 has been redesigned with a focus on developer productivity, simplicity, and ease of use. It introduces multiple changes to make TensorFlow users more productive: it removes redundant APIs, makes APIs more consistent (unified RNNs, unified optimizers), and improves integration with the Python runtime through eager execution.

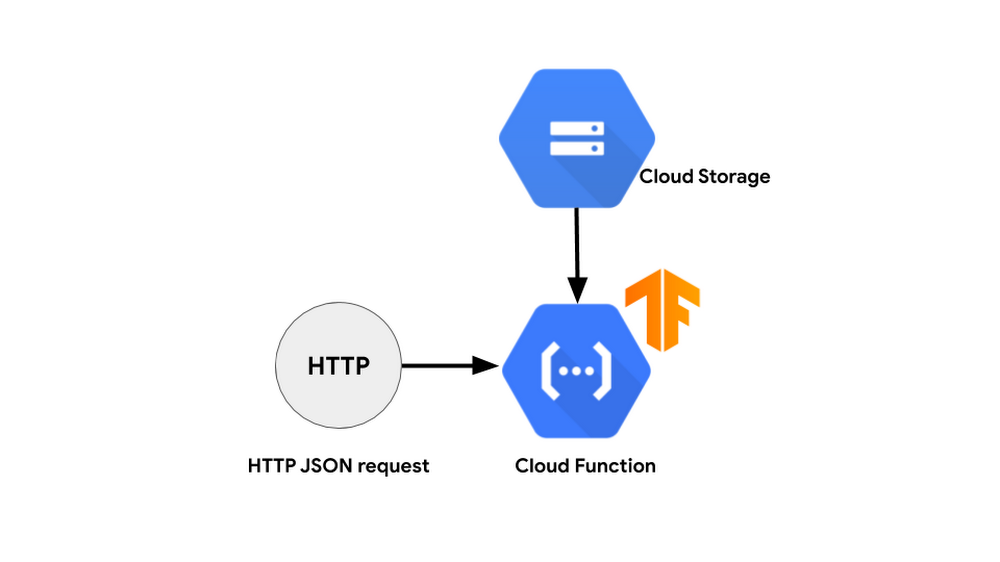

Deploying deep learning models has never been easier, and TensorFlow 2.0, when paired with Google Cloud Platform’s Cloud Functions, makes deployment even more practical.

Google Cloud Platform (GCP) provides multiple ways to deploy inference in the cloud, such as the following:

- Compute Engine cluster with TF Serving

- Cloud AI Platform Predictions

- Cloud Functions

These three options differ in fundamental features such as machine learning framework installation, infrastructure management, and scalability.

For instance, with Cloud Functions you must install the libraries yourself, whereas Compute Engine comes with TensorFlow and other frameworks preinstalled when you use Deep Learning VM Images or Deep Learning Containers. The same goes for AI Platform Predictions. In terms of scalability, Cloud Functions infrastructure is as scalable as the other two.

Cloud Functions support any machine learning framework for inference, whereas Compute Engine supports the latest machine learning frameworks. Compared with Deep Learning VMs and AI Platform Predictions, the fully serverless approach of Cloud Functions provides the following advantages:

- Simple inference code that still lets you implement custom logic.

- Great scalability: you can scale from 0 to 10k almost immediately.

- A pay-per-run cost structure, so you never pay for idle servers.

- The ability to use custom versions of different frameworks (TensorFlow 2.0 or PyTorch).

One of the main upsides of Cloud Functions is that you don’t have to build the deployment package manually. You can simply list all the libraries you use in a requirements.txt file.

Overview of Cloud Functions

The model is trained locally and then uploaded to Google Cloud Storage. The Cloud Function is then invoked through an API request; it downloads the model and a test image from Cloud Storage and runs the prediction.

Alternatively, a cluster is often used to serve inference for the model. For instance, TF Serving is a great way to organize inference on one or more VMs; all you then need to do is add a load balancer on top of the cluster. The following products can be used to deploy TF Serving in AI Platform:

This approach has the following advantages:

- Great response time, since the model stays loaded in memory.

- Economy of scale: the cost per run decreases significantly when there are many requests.
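To make the economy-of-scale point concrete, here is a minimal sketch that amortizes a fixed cluster cost over the number of requests it serves. The hourly price and request counts are hypothetical placeholders, not actual GCP prices.

```python
def cost_per_run(fixed_cost_per_hour: float, hours: float, requests: int) -> float:
    """Amortized cost of one request on an always-on cluster.

    The cluster costs the same whether it is busy or idle, so the
    per-request cost is the total fixed cost divided by the request count.
    """
    if requests <= 0:
        raise ValueError("requests must be positive")
    return fixed_cost_per_hour * hours / requests


# Hypothetical price: 2.0 currency units per hour for the whole cluster, for one day.
for n in (1_000, 100_000, 10_000_000):
    print(n, cost_per_run(2.0, hours=24, requests=n))
```

The more requests the same always-on cluster handles, the smaller each request's share of the fixed cost becomes.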

Conclusion

Cloud Functions are billed in 100 ms increments with no minimum period. This makes them a great fit for short, irregular jobs; if you need to handle a long, steady stream of jobs, Compute Engine might be the better choice.
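The billing rule can be sketched as rounding each invocation’s duration up to the next 100 ms increment and paying nothing between invocations. This is a simplified model: the per-100 ms price is a hypothetical parameter, and real prices depend on the memory allocated to the function.

```python
import math

BILLING_INCREMENT_MS = 100  # compute time is billed in 100 ms increments


def billable_ms(duration_ms: float) -> int:
    """Round one invocation's duration up to the next 100 ms increment."""
    if duration_ms < 0:
        raise ValueError("duration must be non-negative")
    return math.ceil(duration_ms / BILLING_INCREMENT_MS) * BILLING_INCREMENT_MS


def compute_cost(durations_ms, price_per_100ms: float) -> float:
    """Total compute cost of a batch of invocations; idle time costs nothing."""
    total_ms = sum(billable_ms(d) for d in durations_ms)
    return total_ms / BILLING_INCREMENT_MS * price_per_100ms


# Example with a hypothetical price of 1.0 unit per 100 ms.
print(billable_ms(120))                                   # 200
print(compute_cost([50, 150, 250], price_per_100ms=1.0))  # 6.0
```

Because only execution time is billed, a handful of short requests per hour costs very little, whereas a constant stream of long jobs pays the rounded-up rate for every invocation.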

Here is how to deploy the example Cloud Function from the command line:

```shell
git clone https://github.com/ryfeus/gcf-packs
cd gcf-packs/tensorflow2.0/example/
gcloud functions deploy handler --runtime python37 --trigger-http --memory 2048
gcloud functions call handler
```

The response should look something like this:

```
executionId: omx2o2y27sm9
result: Trouser
```
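The `Trouser` result suggests the example model classifies Fashion-MNIST images, whose ten classes match the `Dense(10, activation='softmax')` output layer in the sample code. Assuming that, here is a minimal sketch of how a handler could turn the model’s ten probabilities into a label. `label_from_probabilities` is a hypothetical helper, not part of the linked repository.

```python
# Fashion-MNIST class names in label-index order
# (assumption: the example model was trained on Fashion-MNIST).
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]


def label_from_probabilities(probabilities):
    """Return the class name with the highest softmax probability."""
    if len(probabilities) != len(CLASS_NAMES):
        raise ValueError(f"expected {len(CLASS_NAMES)} scores, got {len(probabilities)}")
    best_index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return CLASS_NAMES[best_index]


scores = [0.01, 0.9, 0.01, 0.01, 0.01, 0.01, 0.02, 0.01, 0.01, 0.01]
print(label_from_probabilities(scores))  # Trouser
```

In a real handler, `probabilities` could be one row of the model output, for example `model(batch).numpy()[0]`; the helper only needs `len()` and indexing, so a NumPy row works as well as a list.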

Sample code:

```python
import numpy
import tensorflow
from google.cloud import storage
from tensorflow.keras.layers import Dense, Flatten, Conv2D
from tensorflow.keras import Model
from PIL import Image

# Keep the model in a global variable so it does not have to be reloaded
# on warm invocations.
model = None


class CustomModel(Model):
    def __init__(self):
        super(CustomModel, self).__init__()
        self.conv1 = Conv2D(32, 3, activation='relu')
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')

    def call(self, x):
        # Forward pass: convolution -> flatten -> hidden dense -> class probabilities
        x = self.conv1(x)
        x = self.flatten(x)
        x = self.d1(x)
        return self.d2(x)
```

Check the full code here.
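The comment about keeping `model` as a global variable refers to a common Cloud Functions pattern: global variables survive between warm invocations on the same instance, so the expensive load happens only on a cold start. Here is a minimal sketch of that pattern. `load_model` is a hypothetical stand-in for downloading the weights from Cloud Storage and building `CustomModel`, and the counter exists only to make the caching visible.

```python
model = None    # cached across warm invocations of the same instance
load_count = 0  # illustration only: how many times the model was loaded


def load_model():
    """Hypothetical stand-in for fetching weights and building the model."""
    global load_count
    load_count += 1
    return lambda request: "prediction"  # placeholder predictor


def handler(request):
    """HTTP entry point; loads the model only on a cold start."""
    global model
    if model is None:  # cold start: nothing cached yet
        model = load_model()
    return model(request)


# Simulate one cold invocation followed by two warm ones.
for _ in range(3):
    handler(None)
print(load_count)  # 1
```

Only the first call pays the loading cost; later calls on the same instance reuse the cached object, which is why the sample code initializes `model = None` at module level.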