Computer vision covers a range of problems that revolve around images, such as image classification, image segmentation, and object detection. Image classification is one task where, on some benchmarks, machines now match or exceed human accuracy. Its main drawback is that it typically requires a large volume of labeled training data.

If you train a model on a large number of images, chances are high that it will classify with very good accuracy. Often, though, we do not have much data for training. What should we do in that case? One answer is data augmentation: a technique that increases the effective size of the training set without collecting new data, by applying label-preserving transformations to the images we already have. Padding, cropping, rotating, and flipping are among the most common transformations.
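To make the four transformations concrete, here is a minimal NumPy sketch applied to a tiny 3x3 array standing in for an image. The variable names are illustrative and not part of the original experiment; a real pipeline would apply the same operations to 28x28 images.

```python
import numpy as np

# A tiny 3x3 "image" so the effect of each transformation is easy to read.
image = np.array([[1, 2, 3],
                  [4, 5, 6],
                  [7, 8, 9]])

flipped = np.fliplr(image)                           # mirror left-right
rotated = np.rot90(image)                            # rotate 90 degrees counter-clockwise
padded = np.pad(image, pad_width=1, mode="constant") # add a 1-pixel border of zeros
cropped = padded[0:3, 0:3]                           # crop a 3x3 window from the padded image

# Each variant keeps the label of the original image,
# so one labeled image yields several training samples.
augmented = [flipped, rotated, cropped]
```

Note that padding followed by cropping at a different offset amounts to shifting the image, which is why the two are often used together.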

This article demonstrates how to use data augmentation to increase the size of the training data. We will first build a deep learning model without augmentation and compute its accuracy. We will then train a similar model with augmentation and compute its accuracy. Finally, we will compare the performance of both models.

The Dataset

We will use the Fashion MNIST data set for this experiment. You can download it from Kaggle or load it directly from Keras, as shown in this article. The data set contains 28x28 grayscale images of clothing items, each labeled with one of 10 classes. There are 60,000 images in the training set and 10,000 in the test set.
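The labels are stored as integers from 0 to 9. As a small convenience, the sketch below maps them to the class names given in the Fashion-MNIST documentation; the helper `label_name` is a hypothetical name introduced here for illustration.

```python
# Class names from the Fashion-MNIST documentation, indexed by integer label.
CLASS_NAMES = [
    "T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
    "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot",
]

def label_name(label):
    """Return the human-readable class name for an integer label (0-9)."""
    return CLASS_NAMES[int(label)]
```

For example, `label_name(9)` returns `"Ankle boot"`.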

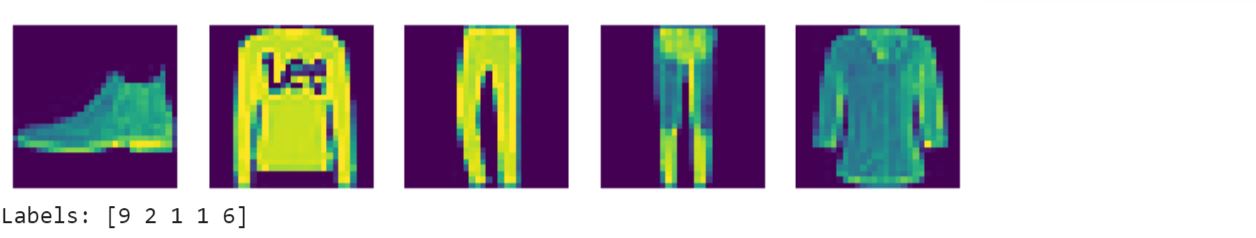

First, we load the libraries and the data set, which is already divided into training and testing sets. We then visualize the first 5 images of the test set along with their labels. Use the code below to do the same.

    import numpy as np
    from keras.datasets import fashion_mnist
    from keras.utils import to_categorical
    import matplotlib.pyplot as plt

    # Jupyter notebook magic for inline plots (remove this line in a plain script)
    %matplotlib inline

    (X_train, y_train), (X_test, y_test) = fashion_mnist.load_data()

    # Show the first 5 test images side by side
    plt.figure(figsize=(10, 10))
    for i in range(5):
        plt.subplot(1, 5, i + 1)
        plt.imshow(X_test[i])
        plt.axis('off')
    plt.show()
    print('Labels: %s' % (y_test[0:5]))

Next, we scale the pixel values of both the training and test images to the range [0, 1] and reshape them to (samples, 28, 28, 1), the channels-last layout that Keras uses by default. We then convert the integer labels to one-hot (categorical) vectors. Use the code below to do the same.

    # Scale pixel values from [0, 255] to [0, 1]
    X_train = X_train.astype('float32') / 255
    X_test = X_test.astype('float32') / 255

    # Add a trailing channel axis: (samples, 28, 28) -> (samples, 28, 28, 1)
    X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)
    X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)

    # One-hot encode the 10 class labels
    y_train = to_categorical(y_train, 10)
    y_test = to_categorical(y_test, 10)
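As a rough illustration of what this preprocessing does, the following NumPy-only sketch repeats the same three steps on toy data. The arrays `images` and `labels` are made-up stand-ins, and indexing an identity matrix is just one simple way to reproduce one-hot encoding; it is not how Keras implements it internally.

```python
import numpy as np

# Toy stand-ins: two 2x2 "images" with 8-bit pixel values and their integer labels.
images = np.array([[[0, 255], [51, 102]],
                   [[255, 255], [0, 0]]], dtype=np.uint8)
labels = np.array([3, 0])

# Step 1: scale pixel values from [0, 255] to [0, 1].
scaled = images.astype("float32") / 255

# Step 2: add a trailing channel axis, (samples, 2, 2) -> (samples, 2, 2, 1).
with_channel = scaled.reshape(scaled.shape[0], 2, 2, 1)

# Step 3: one-hot encode. Row i of the identity matrix is the vector for class i.
one_hot = np.eye(10, dtype="float32")[labels]
```

After these steps, `one_hot` has one row per sample with a single 1 in the column of its class, which is the target format that the `categorical_crossentropy` loss expects.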

Model without Data Augmentation

We first import the classes required to build the model. Use the code below to do the same.

    from keras.models import Sequential
    from keras.layers import Dense, Activation, Dropout, Flatten, Reshape
    from keras.layers import Convolution2D, MaxPooling2D

We then define the model architecture: a stack of convolutional layers followed by fully connected layers. Use the code below to define and compile the model, then fit it to the training data.

    model = Sequential()
    # Three convolution layers with 32 filters each. The kernel size is passed
    # as a tuple; in current Keras a third positional argument is the stride.
    model.add(Convolution2D(32, (3, 3), input_shape=(28, 28, 1)))
    model.add(Activation('relu'))
    model.add(Dropout(0.25))
    model.add(Convolution2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(Convolution2D(32, (3, 3)))
    model.add(Activation('relu'))
    model.add(Dropout(0.25))
    # Fully connected classifier head
    model.add(Flatten())
    model.add(Dense(128))
    model.add(Dense(128))
    model.add(Activation('relu'))
    model.add(Dense(10))
    model.add(Activation('softmax'))

    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    model.fit(X_train, y_train, batch_size=32, epochs=10, validation_data=(X_test, y_test))

We now check the test accuracy of the model using the code below.

    # evaluate() returns [loss, accuracy] for the metrics compiled above
    test_loss, test_acc = model.evaluate(X_test, y_test)
    print('Test accuracy:', test_acc)
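To see how the image shrinks as it passes through the convolution stack, here is a small sketch of the shape arithmetic. It assumes 3x3 kernels, a stride of 1, and no padding (the Keras default, `'valid'`); the helper `conv_output_size` is a hypothetical name used only for this illustration.

```python
def conv_output_size(size, kernel=3, stride=1):
    """Output width/height of an unpadded ('valid') convolution."""
    return (size - kernel) // stride + 1

size = 28
sizes = []
for _ in range(3):            # three 3x3 convolution layers
    size = conv_output_size(size)
    sizes.append(size)

# The last convolution has 32 filters, so Flatten produces this many features.
flattened = size * size * 32
```

Under these assumptions the feature maps go from 28x28 to 26x26, 24x24, and 22x22, so the first dense layer receives 22 * 22 * 32 = 15,488 inputs. With a stride of 3 instead, the first layer alone would reduce the image to 9x9, which is why the kernel size should be passed as a tuple.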

Model with Data Augmentation

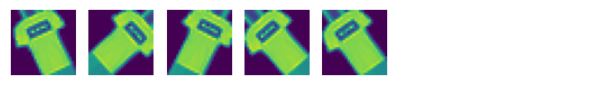

We will first generate data through data augmentation and visualize the new augmented data.

Follow the code below to apply augmentation to a training image. Here we use a random rotation of up to 50 degrees together with very small horizontal and vertical shifts; you can enable the other transformations listed in the code by changing False to the desired value.

    from keras.preprocessing.image import ImageDataGenerator
    from matplotlib import pyplot as plt

    # Data augmentation
    datagen = ImageDataGenerator(
        featurewise_center=False,
        samplewise_center=False,
        featurewise_std_normalization=False,
        samplewise_std_normalization=False,
        zca_whitening=False,
        rotation_range=50,
        width_shift_range=0.01,
        height_shift_range=0.01,
        horizontal_flip=False,
        vertical_flip=False)
    # fit() is only required when a featurewise or ZCA option is enabled
    datagen.fit(X_train)

    # Draw 5 random augmented variants of a single training image
    gen = datagen.flow(X_train[1:2], batch_size=1)
    for i in range(1, 6):
        plt.subplot(1, 5, i)
        plt.axis("off")
        plt.imshow(next(gen).squeeze())
    plt.show()
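For intuition about what a `rotation_range` setting does, the sketch below rotates an image by a random angle using plain NumPy. It is a simplified stand-in, not the Keras implementation: it uses nearest-neighbor sampling and fills uncovered pixels with zeros, and the function names `rotate_nearest` and `random_rotation` are hypothetical.

```python
import numpy as np

def rotate_nearest(image, angle_deg):
    """Rotate a 2-D image about its center, filling uncovered pixels with 0."""
    h, w = image.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    ys, xs = np.mgrid[0:h, 0:w]
    # Inverse mapping: for every output pixel, find the source pixel it came from.
    src_x = np.cos(theta) * (xs - cx) + np.sin(theta) * (ys - cy) + cx
    src_y = -np.sin(theta) * (xs - cx) + np.cos(theta) * (ys - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    # Keep only source coordinates that fall inside the image.
    inside = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(image)
    out[inside] = image[src_y[inside], src_x[inside]]
    return out

def random_rotation(image, rotation_range, rng):
    """Rotate by an angle drawn uniformly from [-rotation_range, rotation_range]."""
    angle = rng.uniform(-rotation_range, rotation_range)
    return rotate_nearest(image, angle)

rng = np.random.default_rng(0)
image = np.arange(16, dtype="float32").reshape(4, 4)
variants = [random_rotation(image, 50, rng) for _ in range(5)]
```

Each call draws a fresh angle, so the five entries in `variants` are different views of the same image, much like the five plots produced by the generator.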

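We now train the network again, this time on augmented batches produced by the generator. Note that calling fit on the same model object continues from the weights learned above; for a like-for-like comparison, rebuild and compile the model first so that training starts from fresh weights. Follow the code below to do the same.

    # Train on randomly augmented batches instead of the raw arrays
    # (older Keras releases use model.fit_generator for generator input)
    model.fit(datagen.flow(X_train, y_train, batch_size=32), epochs=10, validation_data=(X_test, y_test))

    test_loss, test_acc = model.evaluate(X_test, y_test)
    print('Test accuracy:', test_acc)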
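Training on augmented data usually means generating new variants on the fly for every batch rather than storing an enlarged data set. The sketch below shows that idea with a plain Python generator; it is a simplified illustration under stated assumptions, not the Keras implementation, and `augmented_batches` and `random_flip` are hypothetical names.

```python
import numpy as np

def augmented_batches(X, y, batch_size, augment, rng):
    """Yield shuffled (images, labels) batches forever, augmenting each image on the fly."""
    n = len(X)
    while True:
        order = rng.permutation(n)            # reshuffle at the start of every epoch
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            batch = np.stack([augment(img, rng) for img in X[idx]])
            yield batch, y[idx]               # labels are unchanged by augmentation

def random_flip(img, rng):
    """Example augmentation: mirror the image left-right half of the time."""
    return img[:, ::-1] if rng.random() < 0.5 else img

rng = np.random.default_rng(0)
X = np.arange(10 * 4 * 4, dtype="float32").reshape(10, 4, 4, 1)  # 10 toy images
y = np.arange(10)                                                # toy labels
batches = augmented_batches(X, y, batch_size=4, augment=random_flip, rng=rng)
images, labels = next(batches)
```

Because the random transformation is redrawn every time an image is served, the model rarely sees exactly the same input twice, even though the number of stored images stays the same.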

Conclusion

Finally, comparing the two models, the training and validation losses differ noticeably, while the accuracy changes only slightly. Here is a summary of the training results:

| | Without Augmentation | With Augmentation |
|---|---|---|
| Training Loss | 0.0306 | 0.1659 |
| Validation Loss | 0.3215 | 0.2981 |
| Training Accuracy | 87.4% | 93.77% |
| Validation Accuracy | 88% | 90% |
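One way to read the loss rows is through the gap between validation and training loss, a common rough indicator of overfitting. The short calculation below uses only the figures reported in the table; the dictionary layout is an illustrative choice.

```python
# Loss values copied from the summary table above.
results = {
    "without_augmentation": {"train_loss": 0.0306, "val_loss": 0.3215},
    "with_augmentation": {"train_loss": 0.1659, "val_loss": 0.2981},
}

# Gap between validation and training loss for each run.
gaps = {
    name: round(r["val_loss"] - r["train_loss"], 4)
    for name, r in results.items()
}
```

The gap is 0.2909 without augmentation and 0.1322 with it, so the augmented run shows a smaller difference between how the model fits the training data and how it performs on unseen data.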

The difference is small because the training set is already large (60,000 images). Data augmentation tends to be more effective when less data is available: you can expect a more significant difference in accuracy when you apply it to a small data set with few training samples. For further reading, see the article “How Unsupervised Data Augmentation can improve NLP and vision tasks” and the paper “Understanding Data Augmentation for Classification”.
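If you want to try the small-data scenario yourself, one option is to train on a class-balanced subset of the training set. The sketch below selects such a subset with NumPy; the function `stratified_subset` and the choice of 5 samples per class are assumptions for illustration, and the toy `labels` array stands in for the integer labels (before one-hot encoding).

```python
import numpy as np

def stratified_subset(labels, per_class, rng):
    """Return indices selecting `per_class` random samples from every class."""
    chosen = []
    for cls in np.unique(labels):
        candidates = np.flatnonzero(labels == cls)
        chosen.append(rng.choice(candidates, size=per_class, replace=False))
    return np.concatenate(chosen)

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(10), 50)   # stand-in: 10 classes, 50 samples each
subset = stratified_subset(labels, per_class=5, rng=rng)
```

The resulting indices can be used to slice both the image array and the label array, after which the two training runs can be repeated on the reduced data.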