The aim of this blog post is to introduce open source business intelligence technologies and to explore data using tools such as D3.js, DC.js, Node.js and MongoDB.

Over the span of this post we will see the importance of the various components that we are using and we will do some code based customization as well.

The Need for Visualization:

Visualization is the so-called front end of modern business intelligence systems. I have sat in on quite a few big data architecture discussions, and to my surprise most of them focused on the backend components: the repository, the ingestion framework, the data mart, the ETL engine and the data pipelines, with visualization tacked on at the end.

I might be biased in favor of the visualization technologies as I have been working on them for a long time. Needless to say visualization is as important as any other component of a system. I hope most of you will agree with me on that. Visualization is instrumental in inferring the trends from the data, spotting outliers and making sense of the data-points.

What they say is right: a picture is indeed worth a thousand words.

The components of our analysis and their functions:

D3.js: A JavaScript based visualization engine which will render interactive charts and graphs based on the data.

Dc.js: A JavaScript based wrapper library for D3.js which makes plotting the charts a lot easier.

Crossfilter.js: A JavaScript based data manipulation library. Works splendidly with dc.js. Lets a filter applied in one chart update every other chart.

Node JS: Our powerful server which serves data to the visualization engine and also hosts the webpages and javascript libraries.

MongoDB: The resident NoSQL database which will serve as a fantastic data repository for our project.

The Steps to Success:

- Identifying what our analysis will do

- Fetching the data and storing it in MongoDB

- Creating a Node.js server to get data from MongoDB and host it as an API

- Building our frontend using D3.js, Dc.js and some good old JavaScript.

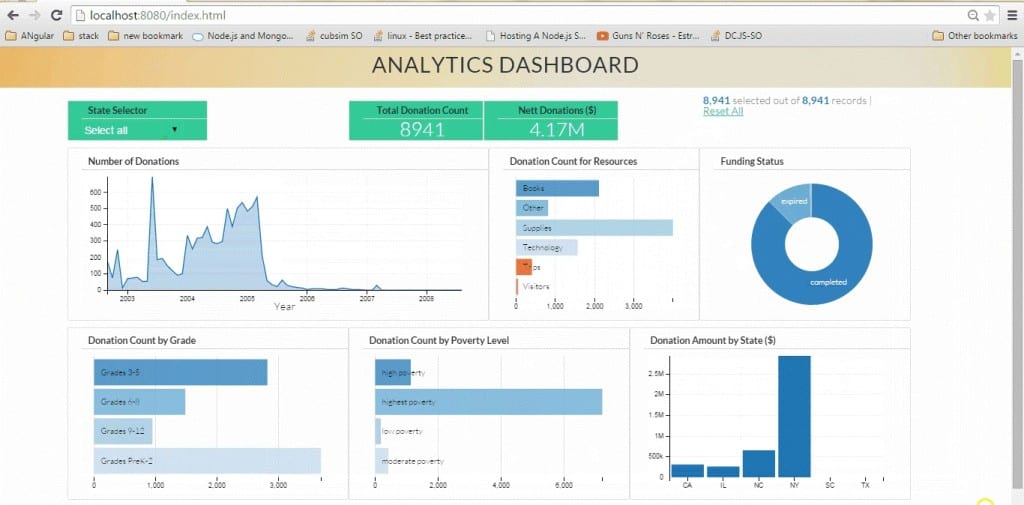

Here’s what the end result will look like:

Step 1: Identifying our Analysis

We will be analyzing data from DonorsChoose.org, a US-based nonprofit organization that allows individuals to donate money directly to public school classroom projects. We will get the dataset and try to create an informative analysis on the basis of the data attributes. We will have more clarity on this once we actually see the dataset. I have taken a subset of the original dataset for our analysis purposes. This subset contains nearly 9,000 rows.

Step 2: Fetching the data and storing it in MongoDB

The original dataset from DonorsChoose.org is available here. For our project we will be using a portion of the “Project” dataset. I have chosen this data as I am somewhat familiar with it. The “Project” dataset contains data points for the classroom projects listed on DonorsChoose.org. I have intentionally used a small subset of the data so that we can focus on getting the charts done quickly rather than waiting for the data to be fetched each time we refresh. That said, you can always ingest the original dataset once you have mastered this post. To download the required dataset click here.

Unzip the rar file to your desktop or a suitable directory.

After unzipping the downloaded file, we get a file named sampledata.csv with a size of around 3 megabytes. The data is in CSV (comma separated value) format. CSV files are a popular option for storing data in a tabular format, and they are easy to interpret and parse. The file contains nearly 9,000 records and 44 attributes which we can use for our analysis.
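To see why CSV is easy to parse, here is a minimal sketch in plain JavaScript that turns a header line plus data lines into objects keyed by column name. The rows are made up for illustration (only the column names come from our dataset), and the parser is deliberately naive: it assumes no quoted fields or embedded commas, which a real CSV library would handle.

```javascript
// Naive CSV parsing: assumes no quoted fields and no commas inside values.
function parseCsv(text) {
  var lines = text.trim().split(/\r?\n/);
  var header = lines[0].split(",");
  return lines.slice(1).map(function (line) {
    var cells = line.split(",");
    var record = {};
    // Pair each cell with the column name at the same position.
    header.forEach(function (name, i) {
      record[name] = cells[i];
    });
    return record;
  });
}

// Made-up rows using three of the dataset's column names.
var sample = [
  "school_state,resource_type,total_donations",
  "NY,Books,250.50",
  "CA,Technology,0"
].join("\n");

var records = parseCsv(sample);
console.log(records.length);
console.log(records[0]);
```

Note that every value comes out as a string here; numeric columns still need converting before any arithmetic.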

Let’s install MongoDB. I am using a Windows environment, but the process is similar on all platforms. The installation manuals can be found here. On Windows, open your command prompt, go to the folder where you installed MongoDB and locate the bin folder. In my case the path is:

C:\Program Files\MongoDB\Server\3.0\bin>

Start MongoDB by running mongod.exe. Leave that prompt running, open another command prompt and navigate to the bin directory again. Enter this command:

C:\Program Files\MongoDB\Server\3.0\bin>mongoimport -d donorschoose -c projects --type csv --headerline --file C:\Users\Anmol\Desktop\sampledata\sampledata.csv

You will see mongodb importing the data points from our data set into the database. This process might take some time.

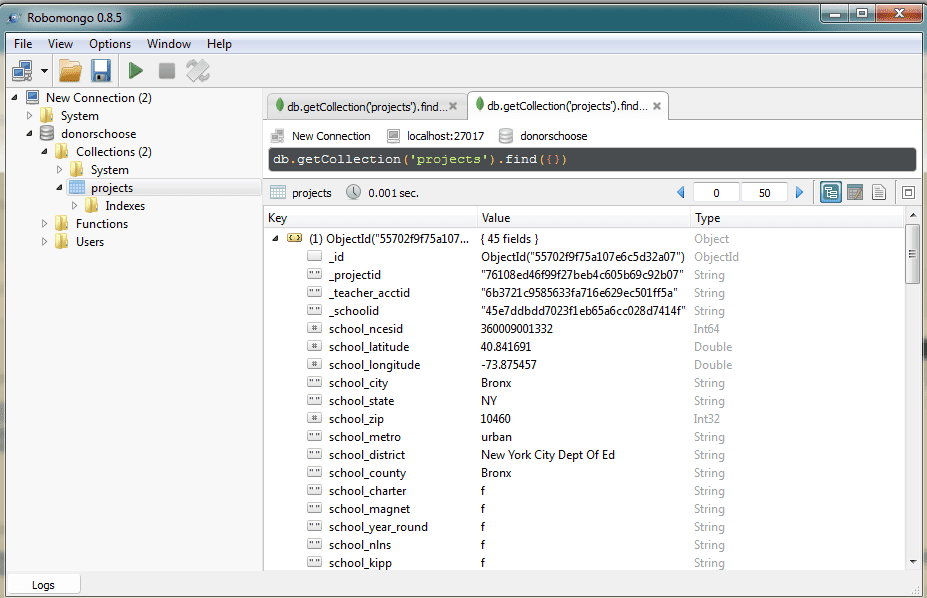

While we are at it, I would strongly recommend using Robomongo if you are going to work with MongoDB on a regular basis. Robomongo is a GUI-based MongoDB management tool. Install Robomongo and open it. Create a new connection, enter localhost as the address and 27017 as the port, and click save. Connect to this instance in the next screen that you get. The Robomongo shell will look something like this:

Navigate to the projects collection and double click on it to see a list of the datapoints. Click on the tiny expand arrow on the data points list to see the full list of attributes for the document. These are the attributes (columns) that we just imported from our dataset. We will be using the following attributes for our analysis:

- school_state

- resource_type

- poverty_level

- date_posted

- total_donations

- funding_status

- grade_level

Step 3: Setting Up the Node.js Server

Now to one of the most happening server platforms: Node.js, Node JS or Nodejs. Whatever you call it, you cannot ignore it!

I am quoting their website here:

Node.js® is a platform built on Chrome’s JavaScript runtime for easily building fast, scalable network applications. Node.js uses an event-driven, non-blocking I/O model that makes it lightweight and efficient, perfect for data-intensive real-time applications that run across distributed devices.

I find Node.js to be very fast and it works really well as far as connecting to mongodb is concerned.

To begin with we need to install Node.js and npm. npm is a package manager for node and helps us in easily deploying modules for added functionality.

Here is a cool guide on installing node and npm for windows.

Our Node.js platform is broadly classified into the following folders:

App Folder: Contains the models for data connections and api serving. Includes the files:

SubjectViews.js: Holds the data model. Specifies any custom queries and the name of the collection to fetch data from

routes.js: Fetches data from the collection and serves it as an api

Config Folder:

DB.js: Contains the database information i.e. the address and the port to connect to

node_modules folder: Contains various node modules which are installed to enhance functionality of the node server

Public Folder: Will contain our html, javascript and css files. We will use these three to code our charts utilizing the visualization and aggregation libraries.

package.json file: Contains a list of all the modules which need to be installed for the server to run

server.js file: The file utilizes the node modules to initialize data fetch from mongoDB and host our webpages over the network.
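To make the division of labor concrete, here is a stripped-down sketch of what the server and the route do together: answer a GET on /api/data with JSON. This is not the code from the repository. It uses only Node's built-in http module, and a hard-coded array (made-up values) stands in for the MongoDB projects collection, so the names handleRequest and start are purely illustrative.

```javascript
var http = require("http");

// Hypothetical in-memory stand-in for the MongoDB "projects" collection.
var projects = [
  { school_state: "NY", total_donations: 250.5 },
  { school_state: "CA", total_donations: 0 }
];

// Request handler: JSON at /api/data, a 404 for everything else.
function handleRequest(req, res) {
  if (req.method === "GET" && req.url === "/api/data") {
    res.writeHead(200, { "Content-Type": "application/json" });
    res.end(JSON.stringify(projects));
  } else {
    res.writeHead(404, { "Content-Type": "text/plain" });
    res.end("Not found");
  }
}

// Creates the server and starts listening on the given port.
function start(port) {
  return http.createServer(handleRequest).listen(port, function () {
    console.log("Listening on port " + port);
  });
}
```

Calling start(8080) and opening localhost:8080/api/data would return the array as JSON. In the real project the handler would query MongoDB instead of reading a fixed array.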

The folder structure for our project will look like this:

You can copy the full code and folder structure from the github repository here:

Navigate to the home folder and run the npm install command:

C:\Users\Anmol\Desktop\blog>npm install

NPM will read the dependencies from the package.json file and install them.

Tip for Ubuntu users installing Node.js:

On Ubuntu the binary may be installed as nodejs, so apps that call “node” will not find it. A symbolic link fixes that:

sudo ln -s /usr/bin/nodejs /usr/bin/node

We now have our folder structure ready. Run this command:

npm start

You will see a message from node saying that the magic is happening on port 8080. (You can change the port number from the server.js file)

Open your browser and go to localhost:8080/api/data (Defined in the routes.js file)

Awesome. Our API is all set! Now for the final part: creating visualizations for the data.

Step 4: Building our frontend using D3.js, Dc.js and some good old JavaScript

A lot of great Business Intelligence (BI) tools exist in the current landscape: Qlik, Spotfire, Tableau and MicroStrategy, to name a few. Along came D3.js, an awesome open source visualization library which utilizes the power of the omnipresent JavaScript to make charting cool and puts control of the visualization design in the user’s hands.

We will be using a responsive html template for our design needs as our main aim is to get the charts up and running rather than code the responsiveness and the style of the various divs. I have used a nice synchronous template for this project.

If you take a look at the Node.js code you will observe that the static content is served from the public folder. Hence we place our HTML files there.

We will be utilizing the following libraries for visualization:

D3.js: Which will render our charts. D3 creates SVG-based charts which are easily embedded into our HTML blocks

Dc.js: Which we will use as a wrapper for D3.js, meaning we don’t need to code each and every thing about the charts, just the basic parameters

Crossfilter.js: Which is used for exploring large multivariate datasets in the browser. Really great for slicing and dicing data. Enables drill-down analysis

queue.js: An asynchronous helper library for data ingestion involving multiple APIs

Dc.css : Contains the styling directives for our dc charts

Dashboard.js: Will contain the code for our charts and graphs

You can always refer to the code repository for the placement of these libraries. We need to include these libraries in our html page (index.html). Now, to the main task at hand: Coding the charts!

In our Dashboard.js file we have the following :

A queue() function which utilizes the queue library for asynchronous loading. It is helpful when you are trying to get data from multiple APIs for a single analysis. In our current project we don’t need the queue functionality, but it’s good to have code that can be reused as needed. The queue function fetches the data hosted at the API and passes it to makeGraphs as the apiData argument.

queue()

.defer(d3.json, "/api/data")

.await(makeGraphs);
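The defer/await idea is easier to appreciate with more than one data source. Below is a minimal sketch of the pattern in plain JavaScript: start every task, keep the results in task order, and call a single callback once all have finished or one has failed. It is a stand-in written for this post, not the queue.js implementation; runAll, loadProjects and loadStates are hypothetical names, and the results arrive as one array rather than as separate arguments.

```javascript
// Minimal stand-in for the defer/await idea; not the queue.js implementation.
function runAll(tasks, done) {
  var results = new Array(tasks.length);
  var remaining = tasks.length;
  var failed = false;

  if (remaining === 0) {
    done(null, results);
    return;
  }

  tasks.forEach(function (task, i) {
    // Each task receives a Node-style callback: callback(error, value).
    task(function (error, value) {
      if (failed) return;          // ignore late callbacks after a failure
      if (error) {
        failed = true;
        done(error);
        return;
      }
      results[i] = value;          // keep results in task order
      remaining -= 1;
      if (remaining === 0) done(null, results);
    });
  });
}

// Hypothetical loaders standing in for d3.json calls against two endpoints.
function loadProjects(callback) { callback(null, [{ school_state: "NY" }]); }
function loadStates(callback) { callback(null, ["NY", "CA"]); }

var loaded = null;
runAll([loadProjects, loadStates], function (error, results) {
  if (error) throw error;
  loaded = results;
});
console.log(loaded);
```

With real network requests the loaders would call back later, but the bookkeeping stays the same: the charts are built only once every source has answered.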

function makeGraphs(error, apiData) {

Then we do some basic transformations on our data using the d3 functions. We pass the data inside the apiData variable into our dataSet variable. We then parse the date data type to suit our charting needs and set the data type of total_donations as a number using the + operator.

var dataSet = apiData;

var dateFormat = d3.time.format("%m/%d/%Y");

dataSet.forEach(function(d) {

d.date_posted = dateFormat.parse(d.date_posted);

d.date_posted.setDate(1);

d.total_donations = +d.total_donations;

});
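Why set the day to 1? It snaps every date to the first of its month, so all projects posted in the same month share one x value and the line chart shows monthly totals. Here is a plain JavaScript sketch of the same two transformations without d3. The helper toMonthBucket is hypothetical and the date strings are made up; only the month/day/year layout matches the format string used above.

```javascript
// Parse "MM/DD/YYYY" by hand and snap the result to the first of the month.
function toMonthBucket(text) {
  var parts = text.split("/");
  // JavaScript months are 0-based, hence the "- 1".
  var date = new Date(+parts[2], +parts[0] - 1, +parts[1]);
  date.setDate(1);
  return date;
}

// Two made-up posting dates in the same month land in the same bucket.
var first = toMonthBucket("09/05/2012");
var second = toMonthBucket("09/27/2012");
console.log(first.getTime() === second.getTime());

// The unary + operator converts a numeric string to a number.
var donation = +"250.50";
console.log(typeof donation, donation);
```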

The next steps are ingesting the data into a crossfilter instance and creating dimensions on it. Crossfilter acts as a shared filtering layer between the charts: whenever you make a selection in one chart, the filter is automatically applied to the other charts as well, which enables our drill-down functionality.

var ndx = crossfilter(dataSet);

var datePosted = ndx.dimension(function(d) { return d.date_posted; });

var gradeLevel = ndx.dimension(function(d) { return d.grade_level; });

var resourceType = ndx.dimension(function(d) { return d.resource_type; });

var fundingStatus = ndx.dimension(function(d) { return d.funding_status; });

var povertyLevel = ndx.dimension(function(d) { return d.poverty_level; });

var state = ndx.dimension(function(d) { return d.school_state; });

var totalDonations = ndx.dimension(function(d) { return d.total_donations; });
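To build some intuition for what the dimensions buy us, here is a plain-array sketch of coordinated filtering. It is an illustration only and does not use crossfilter's API or its indexing tricks; the rows, the filters object and countBy are all made up. The one behavior it copies is that a chart's own counts ignore the filter set on its own dimension, so the chart you clicked on keeps showing every category.

```javascript
// Illustration only: a plain-array stand-in for coordinated filtering.
var rows = [
  { school_state: "NY", grade_level: "Grades 3-5" },
  { school_state: "NY", grade_level: "Grades 6-8" },
  { school_state: "CA", grade_level: "Grades 3-5" }
];

// Active filters, one slot per "dimension" (null means no filter).
var filters = { school_state: null, grade_level: null };

// Count rows per value of `field`, honoring the filters on every OTHER field.
function countBy(field) {
  var counts = {};
  rows.forEach(function (row) {
    var passes = Object.keys(filters).every(function (key) {
      return key === field || filters[key] === null || row[key] === filters[key];
    });
    if (passes) counts[row[field]] = (counts[row[field]] || 0) + 1;
  });
  return counts;
}

var before = countBy("grade_level");
filters.school_state = "NY";            // like selecting NY in the state chart
var after = countBy("grade_level");     // grade counts now reflect NY only
var states = countBy("school_state");   // the state chart ignores its own filter
console.log(before, after, states);
```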

Now we calculate metrics and groups for grouping and counting our data.

var projectsByDate = datePosted.group();

var projectsByGrade = gradeLevel.group();

var projectsByResourceType = resourceType.group();

var projectsByFundingStatus = fundingStatus.group();

var projectsByPovertyLevel = povertyLevel.group();

var stateGroup = state.group();

var all = ndx.groupAll();

//Calculate Groups

var totalDonationsState = state.group().reduceSum(function(d) {

return d.total_donations;

});

var totalDonationsGrade = gradeLevel.group().reduceSum(function(d) {

return d.total_donations;

});

var totalDonationsFundingStatus = fundingStatus.group().reduceSum(function(d) {

return d.total_donations;

});

var netTotalDonations = ndx.groupAll().reduceSum(function(d) {return d.total_donations;});
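The difference between a plain group and a reduceSum group is simply what gets added up per key: 1 for each record, or a value taken from the record. A plain JavaScript sketch of that idea follows. The groupBy helper and the rows are hypothetical; the output mimics the key/value pairs that the charts consume.

```javascript
// Add up valueOf(row) per key and return sorted { key, value } pairs.
function groupBy(rows, keyOf, valueOf) {
  var totals = {};
  rows.forEach(function (row) {
    var key = keyOf(row);
    totals[key] = (totals[key] || 0) + valueOf(row);
  });
  return Object.keys(totals).sort().map(function (key) {
    return { key: key, value: totals[key] };
  });
}

// Made-up rows for illustration.
var rows = [
  { school_state: "NY", total_donations: 100 },
  { school_state: "CA", total_donations: 40 },
  { school_state: "NY", total_donations: 60 }
];

var stateOf = function (d) { return d.school_state; };

// Counting: every record contributes 1.
var projectCounts = groupBy(rows, stateOf, function () { return 1; });

// Summing: every record contributes its donation amount.
var donationSums = groupBy(rows, stateOf, function (d) { return d.total_donations; });

console.log(projectCounts);
console.log(donationSums);
```

This is also why the summed field must be numeric: adding up a text field such as the grade level would produce meaningless results.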

Now we define the charts using the DC.js library. Dc.js makes it easy to code good-looking charts. Plus, the dc library has a lot of chart types to suit the majority of analyses. Check out the GitHub page for dc here.

var dateChart = dc.lineChart("#date-chart");

var gradeLevelChart = dc.rowChart("#grade-chart");

var resourceTypeChart = dc.rowChart("#resource-chart");

var fundingStatusChart = dc.pieChart("#funding-chart");

var povertyLevelChart = dc.rowChart("#poverty-chart");

var totalProjects = dc.numberDisplay("#total-projects");

var netDonations = dc.numberDisplay("#net-donations");

var stateDonations = dc.barChart("#state-donations");

And now the final part, where we configure our charts. We are using a combination of charts and widgets here. You may notice that we are essentially supplying basic information to the chart definitions, like the dimension, the group, axis properties etc.

// A dropdown widget

var selectField = dc.selectMenu('#menuselect')

.dimension(state)

.group(stateGroup);

// Widget for seeing the rows selected and rows available in the dataset

dc.dataCount("#row-selection")

.dimension(ndx)

.group(all);

//A number chart

totalProjects

.formatNumber(d3.format("d"))

.valueAccessor(function(d){return d; })

.group(all);

//Another number chart

netDonations

.valueAccessor(function(d){return d; })

.group(netTotalDonations)

.formatNumber(d3.format(".3s"));

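// Earliest and latest posting dates, used as the x-axis domain below

var minDate = datePosted.bottom(1)[0].date_posted;

var maxDate = datePosted.top(1)[0].date_posted;

//A line chart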

dateChart

//.width(600)

.height(220)

.margins({top: 10, right: 50, bottom: 30, left: 50})

.dimension(datePosted)

.group(projectsByDate)

.renderArea(true)

.transitionDuration(500)

.x(d3.time.scale().domain([minDate, maxDate]))

.elasticY(true)

.renderHorizontalGridLines(true)

.renderVerticalGridLines(true)

.xAxisLabel("Year")

.yAxis().ticks(6);
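The x scale of the line chart needs the earliest and latest posting dates as its domain, which is what minDate and maxDate stand for. Here is a plain JavaScript sketch of how such bounds can be derived from a list of dates. The dateExtent helper and the dates are hypothetical; in the dashboard the dates would come from the date_posted field.

```javascript
// Return [earliest, latest] from an array of Date objects.
function dateExtent(dates) {
  var times = dates.map(function (d) { return d.getTime(); });
  return [
    new Date(Math.min.apply(null, times)),
    new Date(Math.max.apply(null, times))
  ];
}

// Made-up month buckets (the month argument is 0-based).
var dates = [
  new Date(2012, 8, 1),
  new Date(2011, 0, 1),
  new Date(2013, 4, 1)
];

var extent = dateExtent(dates);
console.log(extent[0].getFullYear(), extent[1].getFullYear());
```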

//A row chart

resourceTypeChart

//.width(300)

.height(220)

.dimension(resourceType)

.group(projectsByResourceType)

.elasticX(true)

.xAxis().ticks(5);

//Another row chart

povertyLevelChart

//.width(300)

.height(220)

.dimension(povertyLevel)

.group(projectsByPovertyLevel)

.xAxis().ticks(4);

//Another row chart

gradeLevelChart

//.width(300)

.height(220)

.dimension(gradeLevel)

.group(projectsByGrade)

.xAxis().ticks(4);

//A pie chart

fundingStatusChart

.height(220)

//.width(350)

.radius(90)

.innerRadius(40)

.transitionDuration(1000)

.dimension(fundingStatus)

.group(projectsByFundingStatus);

//A bar chart

stateDonations

//.width(800)

.height(220)

.transitionDuration(1000)

.dimension(state)

.group(totalDonationsState)

.margins({top: 10, right: 50, bottom: 30, left: 50})

.centerBar(false)

.gap(5)

.elasticY(true)

.x(d3.scale.ordinal().domain(state))

.xUnits(dc.units.ordinal)

.renderHorizontalGridLines(true)

.renderVerticalGridLines(true)

.ordering(function(d){return d.value;})

.yAxis().tickFormat(d3.format("s"));

And finally we call the dc render function which renders our charts.

dc.renderAll();

} // closes makeGraphs

Mission Accomplished!

Open your browser and go to localhost:8080/index.html to see your dashboard in action.

There is a lot of customization that can be done to the charts, which I did not delve into at this stage. We can format the axes, the colors, the labels, the titles and a whole lot more using dc.js, d3.js and CSS. Going forward, I will take up one chart at a time and provide additional examples of what we can customize.

By now we have some working knowledge of MongoDB, Node.js and D3.js. You can use this project as a boilerplate for exploring and analyzing new datasets. All the source code can be found in this github repository.

I will be most happy to answer your questions and queries. Please leave them in the comments.

Do share the post and spread the good word. It may help folks out there get up to speed on this open source visualization stack.