|

Listen to this story

|

Since the birth of text-to-image DALL-E by OpenAI, the AI world has been working towards similar models, for example, Midjourney, and Imagen, to name a few. Soon came text-to-video models like Transframer, NUWA Infinity, CogVideo, etc. Even text-to-voice models like VALL-E were recently unveiled by Microsoft.

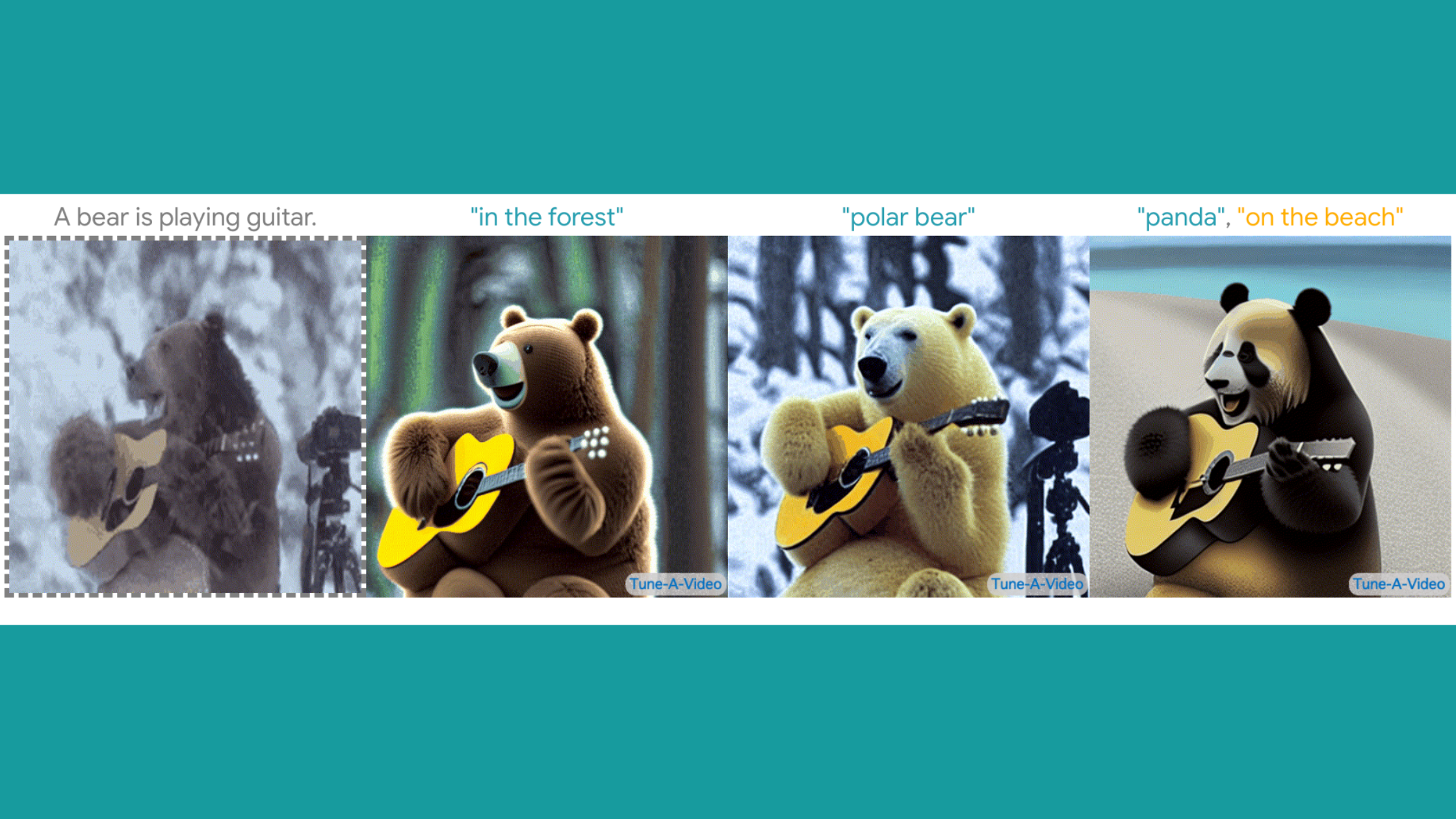

Last month, researchers from Show Lab, National University of Singapore came up with a text-to-video generator called Tune-A-Video (TTV) to address the issue of One-Shot Video Generation, where only a single text-video pair is provided for training an open-domain text-to-video generator. With customised Sparse-Causal Attention, Tune-A-Video expands spatial self-attention to the spatiotemporal domain using pretrained text-to-image (TTI) diffusion models.

Check the unofficial implementation of Tune-A-Video here.

In one training sample, the projection matrices in the attention block are modified to include the relevant motion information. Tune-A-Video can create temporally coherent videos for various applications, including changing the subject or background, modifying attributes, and transferring styles.

It was discovered that TTI models could produce images that match verb terms well and that expanding TTI models to generate different images at once demonstrates unexpectedly strong content consistency.

Fine-Tuning: TTI models are expanded to TTV models using TTI model weights that have already been pretrained. The text-video pair is then subjected to one-shot tuning in order to create a one-shot TTV model.

Inference: A modified text prompt is used to generate new videos.

After receiving a video and text pair as input, it modifies the projection matrices in attention blocks.

Read the full paper here.