In today’s era, a huge part of our life involves going on different applications and searching for our requirements. One of the unique things about it is autocompleting, i.e., before putting a whole word in a search bar, we get some recommendations. Sometimes the search bar recommends exactly what we wanted to search, or sometimes it recommends some other options that we don’t know. To perform this on any platform requires a perfect way to find frequent itemset efficiently. This works like a recommendation system that works with frequent pairs of words and sets of items that occur together frequently. This all comes under the subject of data mining. FP-tree is a special data structure that helps the whole algorithm in finding out the best recommendation.

Introduction

FP-tree(Frequent Pattern tree) is the data structure of the FP-growth algorithm for mining frequent itemsets from a database by using association rules. It’s a perfect alternative to the apriori algorithm.

Mining patterns from a database have been a research subject; most previous studies

suggested an Apriori-like candidate set generation and test approach. But it is pretty slow, and it becomes slower when there are many patterns available in mining. Therefore, FP-tree is proposed. The alternative of the apriori-like algorithm, the frequent-pattern tree(FP-tree) structure, is a tree data structure for storing frequent patterns.

The algorithm is designed to operate on databases containing transactions, such as customers’ purchase history on the Amazon website. The purchased item is considered ‘frequent’. The similar frequent will share the similar branch of the tree, and when they differ, the nodes will split them. The node identifies a single item from the branch(set of items), and the branch (path) shows the number of occurrences—links between the items called node-link.

This structure helps to find the required frequent set rapidly. Internally FP-growth is an algorithm that does not require candidate generation. It uses an FP-tree data structure that does not require the generation of candidate sets explicitly, making the algorithm work better with large databases.

Support and confidence

FP-tree finds the algorithm of frequent sets. Support is the frequency of an item or set in a database where the confidence is the probability of an item set occurrence with its set of items. For more explanation, let’s just make a data set.

Let’s consider A, B, C, D, E, F, G are the items in a database, and they have accrued four times in database like this:

1. B E F G 2. G D B E 3. A B E G 4. C D B E G

So if the transactions are as above, the support degree of B, E, G is 4, and support can be 4/4 = 1

Where D, B has the support degree of 2 and support will be 2/4 = ½

And confidence of {E, G} => {B} is the support degree of B, E, G divided by support degree of {E,G}.

FP-TREE Algorithm

Let’s look into the example to have a complete explanation of the FP-TREE algorithm.

['Milk', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'], ['Dill', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'], ['Milk', 'Apple', 'Kidney Beans', 'Eggs'], ['Milk', 'Unicorn', 'Corn', 'Kidney Beans', 'Yogurt'], ['Corn', 'Onion', 'Onion', 'Ice cream', 'Eggs']

Consider this is a dataset of supermarkets in which we are having details of 5 transactions of items.

Step 1: Each item has a frequency of occurring; the number of occurrences will increase the item’s support. Here we are talking minimum support 3 or 0.6 of any item for the whole data set.

Step 2: We can see in the transactions that kidney beans are occurring in every transaction, so, at the end of the structure, its support will be 5, but for the first transaction, its support will be 1. So in this step, we reset the item’s order by their support degree for the whole data set.

['Kidney Beans','Eggs', 'Yogurt', 'Onion','Milk',], ['Kidney Beans','Eggs', 'Yogurt', 'Onion'], ['Kidney Beans','Eggs','Milk'], ['Kidney Beans', 'Yogurt', 'Milk'], ['Eggs', 'Onion']

As we have discussed support degree will be 3 or 0.6 for this data set

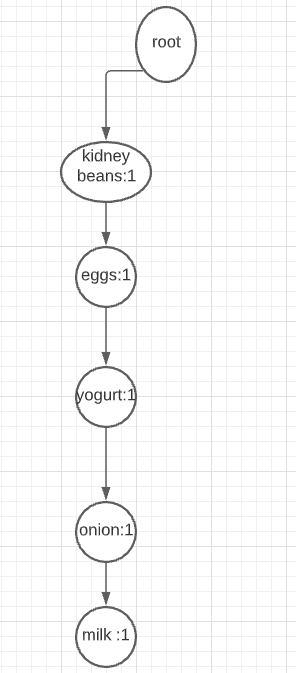

Step 3: Insert the first transaction of the data in a chart like in the picture:

From step 2, we can see in the first transaction we have records for these items and items having support degree 3 and more than three in the whole dataset.

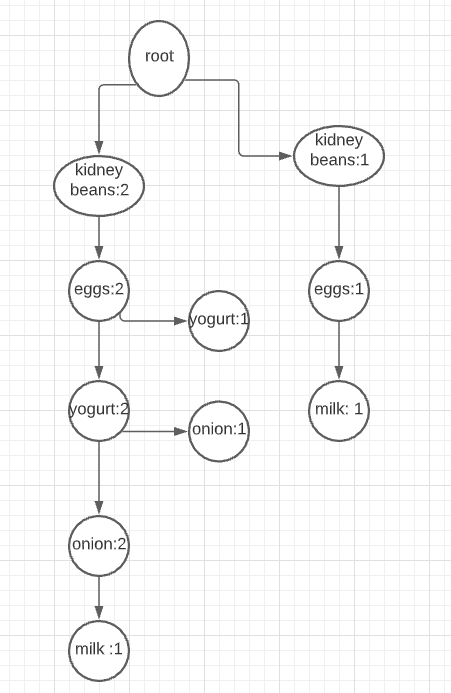

For the second transaction, we can improve our tree (below image). In this, we can see that the all-over support is increased by one value for every item, excluding milk, because in the second transaction, milk was not available.

We can also improve the tree for all transactions; here below image is for the third transaction.

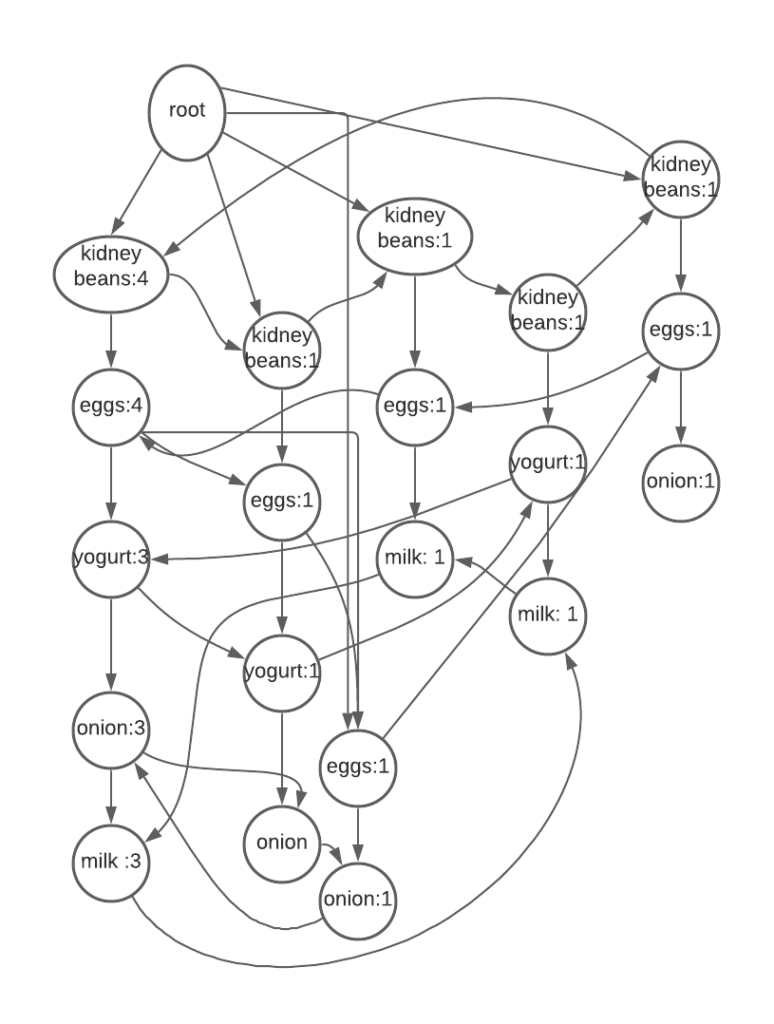

Here in the final, the visualized structure of the FP-tree will look like this.

In the final step, we can see how the FP-tree algorithm counts the support degree. All the items having less than three support degrees are removed, and frequent sets are represented by the curve line and straight lines representing the start of any transaction or call it the branch in terms of a tree.

Next, we will try to make an FP-tree data structure and try to fit an FP-growth algorithm to make decisions or give recommendations of related items.

Code Implementation of FP-Growth algorithm

Defining functions

Input:

class nodes:

def __init__(self, nameValue, numOccur, parentNode):

self.name = nameValue

self.count = numOccur

self.nodeLink = None

self.parents = parentNode #needs to be updated

self.childrens = {}

#increments the count variable with a given amount

def inc(self, numOccur):

self.count += numOccur

#display tree in text. Useful for debugging

def disp(self, ind=1):

print (' '*ind, self.name, ' ', self.count)

for child in self.childrens.values():

child.disp(ind+1)

The above class provides the node, node-link, parentnode and children for the parent nodes.

Node is counted in the class for the node’s name, and node-link will link a similar item. The parentnode is the parent of the nodes, and children contain a blank dictionary in the node.

Verifying the class.

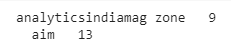

root = nodes('analyticsindiamag zone',9,None)

root.childrens['aim'] = nodes('aim',13,None)

root.disp()

Output:

Constructing the FP-tree

def createTree(dataSet, minSup=1):

headerTable = {}

for trans in dataSet:

for item in trans:

headerTable[item] = headerTable.get(item, 0) + dataSet[trans]

for k in list(headerTable):

if headerTable[k] < minSup:

del(headerTable[k])

freqItemSet = set(headerTable.keys())

if len(freqItemSet) == 0: return None, None

for k in headerTable:

headerTable[k] = [headerTable[k], None]

retTree = nodes('Null Set', 1, None)

for tranSet, count in dataSet.items():

localD = {}

for item in tranSet:

if item in freqItemSet:

localD[item] = headerTable[item][0]

if len(localD) > 0:

orderedItems = [v[0] for v in sorted(localD.items(), key=lambda p: p[1], reverse=True)]

updateTree(orderedItems, retTree, headerTable, count)

return retTree, headerTable #return tree and header table

CreateTree will ask for the dataset, In the CreateTree function and count the items according to the given minimum support. Doing this will generate an FP-tree; this function will go to every transaction of the dataset, and in the end, it will count the frequency of the items, and information will be stored in the header table.

Growing the FP-tree with a dataset

Input:

def updateTree(items, inTree, headerTable, count): if items[0] in inTree.childrens: inTree.childrens[items[0]].inc(count) else: inTree.childrens[items[0]] = nodes(items[0], count, inTree) if headerTable[items[0]][1] == None: headerTable[items[0]][1] = inTree.childrens[items[0]] else: updateHeader(headerTable[items[0]][1], inTree.childrens[items[0]]) if len(items) > 1: updateTree(items[1::], inTree.childrens[items[0]], headerTable, count) def updateHeader(nodeToTest, targetNode): while (nodeToTest.nodeLink != None): nodeToTest = nodeToTest.nodeLink nodeToTest.nodeLink = nodes

Creating a data set for FP-growth algorithm

The function CreatTree does not take the data in list format. Instead, it needs the data in dictionary format and frequency of the dictionary as value.

def loadSimpDat():

simpDat = [['Milk', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'],

['Dill', 'Onion', 'Nutmeg', 'Kidney Beans', 'Eggs', 'Yogurt'],

['Milk', 'Apple', 'Kidney Beans', 'Eggs'],

['Milk', 'Unicorn', 'Corn', 'Kidney Beans', 'Yogurt'],

['Corn', 'Onion', 'Onion', 'Kidney Beans', 'Ice cream', 'Eggs']]

return simpDat

def createInitSet(dataSet):

retDict = {}

for trans in dataSet:

retDict[frozenset(trans)] = 1

return retDict

simpDat = loadSimpDat()

initSet = createInitSet(simpDat)

initSet

Output:

{frozenset({'Eggs', 'Kidney Beans', 'Milk', 'Nutmeg', 'Onion', 'Yogurt'}): 1,

frozenset({'Dill', 'Eggs', 'Kidney Beans', 'Nutmeg', 'Onion', 'Yogurt'}): 1,

frozenset({'Apple', 'Eggs', 'Kidney Beans', 'Milk'}): 1,

frozenset({'Corn', 'Kidney Beans', 'Milk', 'Unicorn', 'Yogurt'}): 1,

frozenset({'Corn', 'Eggs', 'Ice cream', 'Kidney Beans', 'Onion'}): 1}

Making dictionary as FP-Tree

Input:

#The FP-tree myFPtree, myHeaderTab = createTree(initSet, 3) myFPtree.disp()

Output:

In the data set, we can see the FP-tree structure of our data set. The most occurring item in the sets has a count of 5. After that, eggs have a score of 4. It means kidney beans and eggs occurred together in a transaction at least four times where yoghurt eggs and kidney beans came together twice.

Extracting frequent items from FP-tree.

To perform this in the next codes, I am going to get conditional patterns of FP-tree.

For this, we need to go from leaf nodes or last nodes to the roof node which null set in our FP-tree.

def ascendTree(leafNode, prefixPath): #ascends from leaf node to root if leafNode.parents != None: prefixPath.append(leafNode.name) ascendTree(leafNode.parents, prefixPath)

This function gives a list of links from leaves to the root, which will be returned to our next function findprefixpath() and appended to a list named condpats which will help get the results using nodes class, node count and node-link functions.

Input:

def findPrefixPath(basePat, nodes): #treeNode comes from header table

condPats = {}

while nodes != None:

prefixPath = []

ascendTree(nodes, prefixPath)

if len(prefixPath) > 1:

condPats[frozenset(prefixPath[1:])] = nodes.count

nodes = nodes.nodelink

return condPats

Let’s check for the commonly occurred item with eggs:

Input:

findPrefixPath('Eggs', myHeaderTab['Eggs'][1])

Output:

Here in the above output, we can see that when we asked to function about the occurrence of eggs, it appeared four times in the transaction, and the most occurring item with eggs is kidney beans.

In the above article, we have seen that how an FP-tree structured data set looks and how it works with the FP-growth algorithm, and how it is much faster than the apriori algorithm; there are some differences between the Apriori and FP-tree algorithm which makes FP-growth algorithm better as it need only two scans of database where apriori algorithm requires multiple scans to generate candidate set.