In computer vision and image processing, a feature is a piece of information that describes the content of an image, for example whether a certain region of the image has certain properties. Features help us recognize the parts or patterns of an object that let us identify it. A feature may describe specific structures in the image such as points, edges or objects, motion in an image sequence, or shapes defined in terms of curves or boundaries between image regions. Features usually correspond to local regions of the image and are fundamental to many areas of image analysis, including recognition, matching and reconstruction. They help with two different problems in computer vision: detecting areas of interest in an image, typically contours, and describing local regions of an image, typically so that they can be matched across different images.

When we look at an image, our brain processes it by analysing its sides and points, recognising the important aspects and working out what they mean. Similarly, to solve computer vision problems, a machine needs to understand the important aspects of an image. To do so, it relies on computational processing power, mathematical algorithms and machine learning models that help it understand, identify and recognise image content.

Mathematical representations of key areas in an image are what make features usable. In mathematical terms, features are vector representations of an image’s visual content, on which ordinary mathematical operations can be performed. Three techniques work on these representations: detection identifies points of interest in the image; description captures the appearance around those points so that it can still be recognised under changes in illumination, scale or rotation; and matching identifies similar features across a pair of images. In this article, we will be talking more about feature matching and its different aspects.

What is Feature Matching?

Feature matching refers to finding corresponding features in two similar images based on a search distance. One of the images is considered the source and the other the target, and feature matching is used either to find corresponding features or to derive and transfer attributes from the source image to the target image. The process generally analyses the topology of the source and target images, detects feature patterns, matches the patterns, and then matches the features within the discovered patterns. The accuracy of feature matching depends on image similarity, complexity and quality. With a suitable method, a high percentage of features can normally be matched successfully, but uncertainty and errors may still occur and require post-inspection and correction.
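To make distance-based matching concrete, here is a minimal sketch, assuming every feature has already been described by a fixed-length vector. The function name `match_descriptors`, the `max_distance` parameter and the toy values are illustrative assumptions, not part of any library.

```python
import numpy as np

def match_descriptors(source, target, max_distance=0.5):
    """Match each source descriptor to its nearest target descriptor.

    source: (m, d) array, target: (n, d) array.
    Returns (source_index, target_index, distance) tuples for the pairs
    whose Euclidean distance is at most max_distance.
    """
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    # Pairwise Euclidean distances, shape (m, n).
    diff = source[:, None, :] - target[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    nearest = dist.argmin(axis=1)
    matches = []
    for i, j in enumerate(nearest):
        if dist[i, j] <= max_distance:
            matches.append((i, int(j), float(dist[i, j])))
    return matches

# Toy 2-D descriptors (made-up values for illustration only).
src = np.array([[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]])
tgt = np.array([[1.1, 0.9], [0.1, 0.0], [9.0, 9.0]])
matches = match_descriptors(src, tgt, max_distance=0.5)
print([(i, j) for i, j, _ in matches])
```

The third source descriptor has no target within the search distance, so it stays unmatched; choosing that distance is the main trade-off between missing true matches and accepting false ones.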

Analysing feature attributes can help determine the right match. When one or more match fields are specified through the algorithm or model, spatially matched features are checked against those fields. If one source feature spatially matches two or more candidate target features, but only one of the candidates has matching attribute values, that candidate is chosen as the final match. Whether the attributes match affects the level of confidence in the feature matching. Within each match group produced by the analysis, the match relationship is defined as the number of source features (m) versus the number of target features (n).
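The attribute check and the m:n relationship can be sketched in a few lines. This is only an illustration under assumed conventions: features are plain dictionaries, and the helper names `pick_by_attributes` and `match_relationship` and the `kind` field are hypothetical.

```python
def pick_by_attributes(source, candidates, match_fields):
    """Among spatially matched candidates, prefer those whose match fields
    agree with the source feature; fall back to all candidates if none agree."""
    agreeing = [
        c for c in candidates
        if all(c.get(f) == source.get(f) for f in match_fields)
    ]
    return agreeing if agreeing else list(candidates)

def match_relationship(source_ids, target_ids):
    """Describe a match group as 'm:n' (source count versus target count)."""
    return f"{len(set(source_ids))}:{len(set(target_ids))}"

# One source feature with two spatial candidates (hypothetical values).
source = {"id": "s1", "kind": "corner"}
candidates = [
    {"id": "t1", "kind": "corner"},
    {"id": "t2", "kind": "edge"},
]
chosen = pick_by_attributes(source, candidates, match_fields=["kind"])
print([c["id"] for c in chosen])
print(match_relationship(["s1"], [c["id"] for c in chosen]))
```

Here the attribute check turns an ambiguous 1:2 spatial match into a 1:1 match.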

LoFTR for Feature Matching

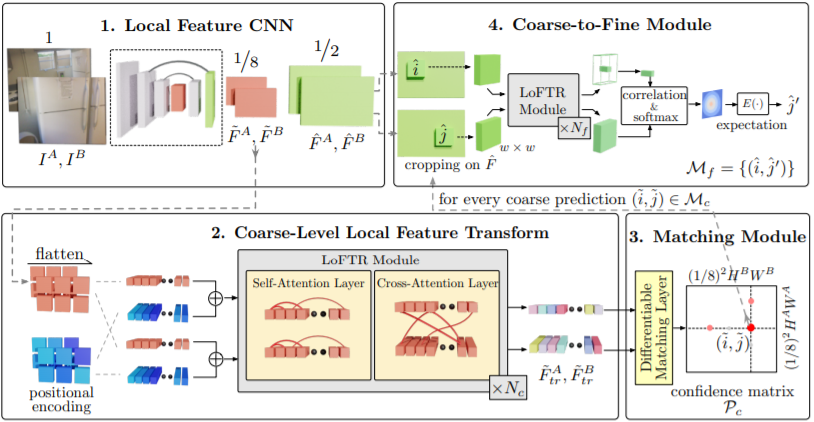

LoFTR, or Local Feature TRansformer, is a framework for image feature matching. Instead of performing image feature detection, description and matching sequentially, it first establishes pixel-wise dense matches at a coarse level and later refines the good matches at a fine level. In contrast to dense methods that use a cost volume to search for correspondences, the framework uses the self- and cross-attention layers of its Transformer to obtain feature descriptors that are conditioned on both images. The global receptive field provided by the Transformer enables LoFTR to produce dense matches even in low-texture areas, where traditional feature detectors usually struggle to produce repeatable interest points. Furthermore, the framework comes with weights pre-trained on indoor and on outdoor datasets, and you choose the set that fits the kind of image being analyzed. The authors report that LoFTR outperforms other state-of-the-art methods by a large margin.

LoFTR works in the following way:

- First, a CNN extracts the coarse-level feature maps (Feature A and Feature B), together with the fine-level feature maps, from the image pair A and B.

- Then, the coarse feature maps are flattened into 1-D vectors and added to a positional encoding, which records where each feature lies in the input image. The resulting features are then processed by the Local Feature Transformer (LoFTR) module.

- Further, a differentiable matching layer is used to match the transformed features, which produces a confidence matrix. The matches are then selected according to a confidence threshold and the mutual nearest-neighbor criterion, yielding a coarse-level match prediction.

- For every selected coarse prediction made, a local window with size w × w is cropped from the fine-level feature map. Coarse matches are then refined from this local window to a sub-pixel level and considered as the final match prediction.
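The coarse matching step in the list above can be sketched with NumPy. This is a simplified illustration, not the authors' code: it assumes the transformed coarse features are already available as flat arrays, it leaves out the CNN, positional encoding and Transformer, and the `temperature` and `threshold` values are placeholders.

```python
import numpy as np

def softmax(x, axis):
    # Subtract the max along the axis for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def coarse_match(feat_a, feat_b, temperature=0.1, threshold=0.2):
    """feat_a: (N, C), feat_b: (M, C) flattened coarse features.
    Returns (indices_a, indices_b, confidence) for the selected matches."""
    sim = feat_a @ feat_b.T / temperature
    # Dual-softmax: softmax over both dimensions, multiplied elementwise.
    conf = softmax(sim, axis=1) * softmax(sim, axis=0)
    # Mutual nearest neighbour: an entry must be the max of its row and column.
    row_best = conf == conf.max(axis=1, keepdims=True)
    col_best = conf == conf.max(axis=0, keepdims=True)
    keep = (conf > threshold) & row_best & col_best
    idx_a, idx_b = np.nonzero(keep)
    return idx_a, idx_b, conf[idx_a, idx_b]

# Toy features: rows 0-2 of feat_b are a permutation of feat_a's rows,
# and row 3 is a low-similarity distractor.
feat_a = np.eye(3)
feat_b = np.array([[0.0, 1.0, 0.0],
                   [1.0, 0.0, 0.0],
                   [0.0, 0.0, 1.0],
                   [0.3, 0.3, 0.3]])
idx_a, idx_b, conf = coarse_match(feat_a, feat_b)
print(list(zip(idx_a.tolist(), idx_b.tolist())))
```

The distractor row never passes the threshold, so only the three permuted pairs survive as coarse matches.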

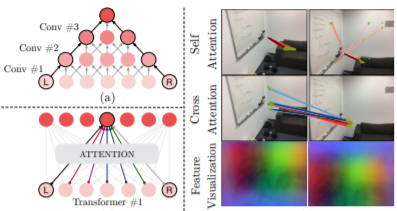

Suppose the objective is to establish a connection between a left (L) and a right (R) element in order to extract their joint feature representation. Because convolutions are only locally connected, many convolution layers have to be stacked to achieve such a connection. The global receptive field of Transformers enables this connection to be established through only one attention layer. To visualise the attention weights and the transformed dense features, Principal Component Analysis (PCA) is used to reduce the dimension of the transformed features A and B, and the result is displayed with RGB colors. The attention-weight visualization shows that features in indistinctive or low-texture regions can aggregate local and global context information through self-attention and cross-attention. The PCA visualization further suggests that LoFTR learns a position-dependent feature representation.
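The PCA-to-RGB idea can be sketched as follows. This is not the authors' visualization code: the helper name `pca_to_rgb` is hypothetical, and a random array stands in for a real transformed feature map.

```python
import numpy as np

def pca_to_rgb(features):
    """Project (H, W, C) features onto their first three principal
    components and rescale each component to [0, 1] for display as RGB."""
    h, w, c = features.shape
    flat = features.reshape(-1, c).astype(float)
    centered = flat - flat.mean(axis=0, keepdims=True)
    # Rows of vt are the principal directions, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    projected = centered @ vt[:3].T
    lo = projected.min(axis=0, keepdims=True)
    hi = projected.max(axis=0, keepdims=True)
    rgb = (projected - lo) / np.maximum(hi - lo, 1e-12)
    return rgb.reshape(h, w, 3)

# Random stand-in for a transformed feature map (not real LoFTR output).
rng = np.random.default_rng(0)
features = rng.normal(size=(6, 8, 16))
rgb = pca_to_rgb(features)
print(rgb.shape)
```

The returned array can be passed directly to an image display function such as matplotlib's `imshow`; pixels with similar colors then have similar feature vectors.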

Getting Started with LoFTR

Now, we will run the pre-trained LoFTR model on two images of a similar room space taken from different angles, detect matching features between them and count the matches. We will use the official implementation from the creators of LoFTR, whose GitHub repository can be accessed from the link here.

First Steps

First, we will upload the images that we want to compare using Feature Matching. I am using the image upload method; you can also set a path to your images from the directory.

# Uploading images (Google Colab)
# Create the folder that the image paths below point to, and upload into it.
%cd /content/
!mkdir -p uploaded
%cd uploaded
from google.colab import files
uploaded = files.upload()
for file_name in uploaded.keys():
    print('User uploaded file "{name}" with length {length} bytes'.format(
        name=file_name, length=len(uploaded[file_name])))
image_pair = ['/content/uploaded/' + f for f in list(uploaded.keys())]
%cd ..

Or

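# Sample images from the LoFTR repository (available once the repository is cloned).
img0_pth = "assets/scannet_sample_images/scene0711_00_frame-001680.jpg"
img1_pth = "assets/scannet_sample_images/scene0711_00_frame-001995.jpg"
image_pair = [img0_pth, img1_pth]  # the later steps read both paths from image_pair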

I’ll be comparing two images of the same indoor room space taken from two different perspectives. You can also use images of outdoor spaces together with the outdoor pre-trained weights. However, it is generally recommended to use wide-angle images for better feature detection results.

# Mentioning the image type.
image_type = 'indoor'
# image_type = 'outdoor'

Calling the LoFTR Framework

Now, we will set up the LoFTR framework: install the required packages, clone the repository and download the pre-trained weights for our neural network.

# Configure LoFTR environment.
!rm -rf sample_data
!pip install torch einops yacs kornia
!git clone https://github.com/zju3dv/LoFTR --depth 1
!mv LoFTR/* . && rm -rf LoFTR
# Download pretrained weights
!mkdir weights
%cd weights/
!gdown --id 1w1Qhea3WLRMS81Vod_k5rxS_GNRgIi-O  # indoor-ds
!gdown --id 1M-VD35-qdB5Iw-AtbDBCKC7hPolFW9UY  # outdoor-ds
%cd ..

Importing the dependencies

Next, we import the libraries and the LoFTR modules that our model needs:

import torch
import cv2
import numpy as np
import matplotlib.cm as cm
from src.utils.plotting import make_matching_figure
from src.loftr import LoFTR, default_cfg

Next, we set up the network for matching. The default configuration uses dual-softmax in the differentiable matching layer to turn feature similarities into the confidence matrix.

# The default config uses dual-softmax.
# You can change the default values like thr and coarse_match_type.
matcher = LoFTR(config=default_cfg)
if image_type == 'indoor':
    matcher.load_state_dict(torch.load("weights/indoor_ds.ckpt")['state_dict'])
elif image_type == 'outdoor':
    matcher.load_state_dict(torch.load("weights/outdoor_ds.ckpt")['state_dict'])
else:
    raise ValueError("Wrong image_type is given.")
matcher = matcher.eval().cuda()

Next, we load both images in grayscale, resize them, build the input batch and run inference to get the predictions:

img0_raw = cv2.imread(image_pair[0], cv2.IMREAD_GRAYSCALE)
img1_raw = cv2.imread(image_pair[1], cv2.IMREAD_GRAYSCALE)
img0_raw = cv2.resize(img0_raw, (640, 480))
img1_raw = cv2.resize(img1_raw, (640, 480))

img0 = torch.from_numpy(img0_raw)[None][None].cuda() / 255.
img1 = torch.from_numpy(img1_raw)[None][None].cuda() / 255.
batch = {'image0': img0, 'image1': img1}

# Inference with LoFTR and get prediction
with torch.no_grad():
    matcher(batch)
    mkpts0 = batch['mkpts0_f'].cpu().numpy()
    mkpts1 = batch['mkpts1_f'].cpu().numpy()
    mconf = batch['mconf'].cpu().numpy()
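If you only want the most reliable matches, the predictions can be filtered by confidence before plotting. The sketch below is an optional extra that is not part of the official notebook; the helper `filter_matches` and the `min_conf` value are assumptions, and small stand-in arrays are used so that it runs without a GPU. It assumes keypoints come as (N, 2) arrays and confidences as an (N,) array.

```python
import numpy as np

def filter_matches(mkpts0, mkpts1, mconf, min_conf=0.5):
    """Keep only matches whose confidence is at least min_conf,
    sorted from most to least confident."""
    mkpts0, mkpts1, mconf = map(np.asarray, (mkpts0, mkpts1, mconf))
    keep = mconf >= min_conf
    order = np.argsort(-mconf[keep])
    return mkpts0[keep][order], mkpts1[keep][order], mconf[keep][order]

# Stand-in arrays: (N, 2) pixel coordinates per image and (N,) confidences.
demo_kpts0 = np.array([[10.0, 20.0], [30.0, 40.0], [50.0, 60.0]])
demo_kpts1 = np.array([[12.0, 21.0], [33.0, 41.0], [55.0, 64.0]])
demo_conf = np.array([0.9, 0.3, 0.7])
k0, k1, c = filter_matches(demo_kpts0, demo_kpts1, demo_conf, min_conf=0.5)
print(len(c), c.tolist())
```

With real predictions you would call `filter_matches(mkpts0, mkpts1, mconf)` and pass the filtered arrays on to the plotting step.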

Plotting the results

The final step is to plot the matches obtained from LoFTR. The code also adds a text label that shows the number of matches found between the two images.

# Drawing the plot
color = cm.jet(mconf, alpha=0.7)
text = [
    'LoFTR',
    'Matches: {}'.format(len(mkpts0)),
]
fig = make_matching_figure(img0_raw, img1_raw, mkpts0, mkpts1, color, mkpts0, mkpts1, text)

# A high-res PDF will also be downloaded automatically.
make_matching_figure(img0_raw, img1_raw, mkpts0, mkpts1, color, mkpts0, mkpts1, text, path="LoFTR-colab-demo.pdf")
files.download("LoFTR-colab-demo.pdf")

EndNotes

In this article, we explored feature matching and the LoFTR (Local Feature TRansformer) framework for it. I suggest trying a variety of complex indoor and outdoor images to compare the model’s accuracy and efficiency. This implementation can be found as a Colab notebook from the link here.

Happy Learning!