LiDAR systems are one of the key components of many self-driving vehicles around the world.

LiDAR works much like radar, but instead of sending out radio waves, it emits pulses of infrared light, which is invisible to the naked eye. It then measures how long each pulse takes to return after hitting nearby objects. Though LiDAR is popular in modern autonomous systems, it has come under scrutiny as some experts have begun to back cheaper camera-based systems that rely on stereoscopic vision.
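The ranging step is simple arithmetic: a pulse that returns after a round-trip time t has travelled to the object and back, so the object lies at d = c·t/2. The minimal Python sketch below assumes the vacuum speed of light and ignores the timing and calibration corrections a real sensor applies; the function name is hypothetical.

```python
# Speed of light in vacuum, in metres per second (an approximation for air).
SPEED_OF_LIGHT = 299_792_458.0


def tof_to_range(round_trip_seconds: float) -> float:
    """Convert a pulse's round-trip time of flight into a one-way range in metres.

    The pulse travels to the object and back, so the distance to the
    object is half of the total path length c * t.
    """
    if round_trip_seconds < 0:
        raise ValueError("time of flight must be non-negative")
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0


# A return arriving 200 ns after emission corresponds to roughly 30 m.
print(round(tof_to_range(200e-9), 2))  # → 29.98
```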

“LiDAR is a fool’s errand,” Tesla’s Elon Musk said in April. “Anyone relying on lidar is doomed.”

Recent studies have shown that a “physically adversarial Stop Sign” can be synthesized so that autonomous cars misidentify it.

However, these image-based adversarial examples cannot easily affect the 3D scans produced by the LiDAR or radar sensors widely fitted on autonomous vehicles.

In this paper, a team from the University of Michigan, working with Baidu Research and the University of Illinois, attempts to expose the shortcomings of LiDAR-based detection systems for autonomous driving.

The authors propose LiDAR-Adv, an optimization-based approach for generating real-world adversarial objects that can evade LiDAR-based detection systems under various conditions.

To probe weaknesses in LiDAR-based systems, the researchers first use an evolution-based black-box attack algorithm. They then propose a stronger attack strategy, LiDAR-Adv, which takes a gradient-based approach.
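To make the difference between the two attack settings concrete, here is a toy sketch. It is not the authors' code: the "detector" is a hypothetical sigmoid-of-linear score (`toy_score`), the object is just the eight vertices of a 50 cm cube, and both the (1+λ) evolution strategy and the signed-gradient (PGD-style) update are generic stand-ins chosen for illustration. The black-box attacker can only query the score, the white-box attacker also sees its gradient, and both keep every vertex offset within ±`eps`.

```python
import numpy as np

# Hypothetical stand-in for a LiDAR detector: a fixed random linear model
# squashed through a sigmoid, giving a "detection confidence" for a vertex set.
_rng = np.random.default_rng(0)
N_VERTS = 8
W = _rng.normal(size=N_VERTS * 3)
B = 2.0


def toy_score(vertices: np.ndarray) -> float:
    z = W @ vertices.ravel() + B
    return float(1.0 / (1.0 + np.exp(-z)))


def toy_score_grad(vertices: np.ndarray) -> np.ndarray:
    # d sigmoid(z) / d vertices = s * (1 - s) * W, reshaped to the vertex layout.
    s = toy_score(vertices)
    return (s * (1.0 - s) * W).reshape(vertices.shape)


def evolution_attack(base, eps=0.05, pop=20, sigma=0.02, iters=200, seed=1):
    """Black-box (1+lambda) evolution strategy: it only ever queries toy_score."""
    rng = np.random.default_rng(seed)
    best, best_score = np.zeros_like(base), toy_score(base)
    for _ in range(iters):
        # Mutate the current best perturbation and keep every offset within +/- eps.
        children = np.clip(best + sigma * rng.normal(size=(pop,) + base.shape), -eps, eps)
        scores = [toy_score(base + child) for child in children]
        i = int(np.argmin(scores))
        if scores[i] < best_score:
            best, best_score = children[i], scores[i]
    return best, best_score


def gradient_attack(base, eps=0.05, step=0.01, iters=50):
    """White-box projected (signed) gradient descent on the same toy score."""
    delta = np.zeros_like(base)
    for _ in range(iters):
        delta = np.clip(delta - step * np.sign(toy_score_grad(base + delta)), -eps, eps)
    return delta, toy_score(base + delta)


# Eight vertices of a 50 cm cube stand in for the adversarial object.
cube = 0.5 * np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float)
clean = toy_score(cube)
_, es_score = evolution_attack(cube)
_, pgd_score = gradient_attack(cube)
print(f"clean {clean:.3f}  black-box {es_score:.3f}  gradient {pgd_score:.3f}")
```

On this deliberately simple score the gradient attack reaches the best corner of the ±`eps` box in a few steps, while the evolution strategy needs thousands of queries; that gap in query efficiency is the general motivation for moving from black-box to gradient-based attacks, though real detectors are far less well behaved than this toy.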

Checking For Adversities

As can be seen in the picture above, the sensor first fires off an array of laser beams in consecutive horizontal and vertical directions.

The sensor then captures the intensity of the light reflected back from the surfaces the beams hit, and calculates the time the photons took to travel along each beam.
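Each return is essentially a range measured along a known beam direction, so the 3D point follows from a spherical-to-Cartesian conversion. The sketch below assumes the sensor reports azimuth, elevation, range and intensity per beam in a sensor-centred frame with x forward, y left and z up; real sensors add per-channel calibration offsets that are ignored here, and the scan pattern in the example is hypothetical.

```python
import numpy as np


def beams_to_point_cloud(azimuth_deg, elevation_deg, ranges, intensities):
    """Turn per-beam returns into an (N, 4) array of x, y, z, intensity.

    Azimuth is measured in the x-y plane from the x-axis; elevation is
    measured up from that plane.
    """
    az = np.deg2rad(np.asarray(azimuth_deg, dtype=float))
    el = np.deg2rad(np.asarray(elevation_deg, dtype=float))
    r = np.asarray(ranges, dtype=float)
    x = r * np.cos(el) * np.cos(az)
    y = r * np.cos(el) * np.sin(az)
    z = r * np.sin(el)
    return np.stack([x, y, z, np.asarray(intensities, dtype=float)], axis=1)


# Hypothetical scan pattern: 4 vertical channels x 8 horizontal steps, all at 10 m.
elev, azim = np.meshgrid([-15.0, -5.0, 5.0, 15.0], np.arange(0.0, 360.0, 45.0), indexing="ij")
cloud = beams_to_point_cloud(azim.ravel(), elev.ravel(), np.full(elev.size, 10.0), np.ones(elev.size))
print(cloud.shape)  # → (32, 4)
```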

Because LiDAR systems measure the intensity of their own reflected laser light rather than relying on natural illumination, the authors note that it is unclear how adversarial algorithms designed for natural lighting in image space can be adapted to the invisible laser beams used as the light source.

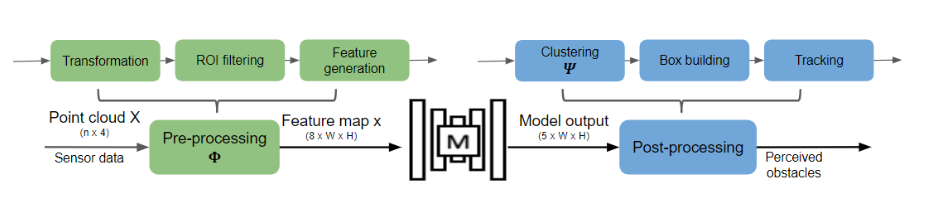

A LiDAR sensor scans the surrounding environment and generates a point cloud of 3D coordinates. The raw point cloud X is first transformed and filtered based on a High Definition (HD) Map, then goes through a preprocessing phase that turns it into an H × W × 8 feature map. Deep Neural Networks (DNNs) process this feature map and output metrics for each of the H × W cells.
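The preprocessing step can be pictured as rasterising the point cloud into a bird's-eye-view grid. The sketch below is a simplified, hypothetical version: the 64 × 64 grid, the 30 m extent and the channel order are assumptions, the eight channels are modelled on the per-cell features used by Apollo's CNN-based LiDAR segmentation, and the HD-map filtering step is omitted.

```python
import numpy as np


def bev_feature_map(points, H=64, W=64, extent=30.0):
    """Rasterise an (N, 4) x, y, z, intensity cloud into an H x W x 8 grid.

    Channel order (assumed, Apollo-style): 0 max height, 1 intensity of the
    highest point, 2 mean height, 3 mean intensity, 4 point count,
    5 direction of the cell centre, 6 distance of the cell centre, 7 non-empty flag.
    """
    feat = np.zeros((H, W, 8), dtype=np.float32)
    feat[..., 0] = -np.inf  # so the first point in a cell always sets the max height
    # Map x, y in [-extent, extent) to integer row/column indices.
    rows = np.floor((points[:, 0] + extent) / (2 * extent) * H).astype(int)
    cols = np.floor((points[:, 1] + extent) / (2 * extent) * W).astype(int)
    keep = (rows >= 0) & (rows < H) & (cols >= 0) & (cols < W)
    for r, c, (_, _, z, inten) in zip(rows[keep], cols[keep], points[keep]):
        if z > feat[r, c, 0]:
            feat[r, c, 0], feat[r, c, 1] = z, inten
        feat[r, c, 2] += z
        feat[r, c, 3] += inten
        feat[r, c, 4] += 1
    occupied = feat[..., 4] > 0
    feat[occupied, 2] /= feat[occupied, 4]  # sums -> means
    feat[occupied, 3] /= feat[occupied, 4]
    feat[~occupied, 0] = 0.0
    # Geometry of each cell centre relative to the sensor at the grid centre.
    cx = (np.arange(H) + 0.5) / H * 2 * extent - extent
    cy = (np.arange(W) + 0.5) / W * 2 * extent - extent
    gx, gy = np.meshgrid(cx, cy, indexing="ij")
    feat[..., 5] = np.arctan2(gy, gx)
    feat[..., 6] = np.hypot(gx, gy)
    feat[..., 7] = occupied
    return feat


# Two nearby points fall into the same cell; a DNN would consume this H x W x 8 tensor.
demo = np.array([[1.0, 1.0, 0.5, 0.2], [1.1, 1.05, 1.5, 0.8]])
print(bev_feature_map(demo)[..., 4].sum())
```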

The team also 3D-printed the adversarial objects and performed physical experiments with LiDAR-equipped cars to illustrate the effectiveness of LiDAR-Adv.

The generated adversarial objects were tested on the Baidu Apollo autonomous driving platform to determine whether physical systems are vulnerable to the proposed attacks.

Black-Box Attack And LiDAR-Adv

The adversarial object, which is meant to stay hidden from the detector, is initialized as a cube-shaped 3D CAD model resampled using MeshLab.

For rendering, the authors implement a fully differentiable LiDAR simulator that uses predefined laser-beam ray directions extracted from a real scene.
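A LiDAR simulator of this kind boils down to casting each predefined beam against the object's triangle mesh and keeping the nearest hit. The sketch below shows only that forward pass, using the standard Möller-Trumbore ray-triangle test and a plain 12-triangle cube in place of the resampled MeshLab model. Unlike the authors' simulator it is not differentiable (that would require expressing the same computation in an autodiff framework), and all helper names are hypothetical.

```python
import numpy as np


def cube_mesh(edge=0.5):
    """Vertices and triangle faces of an axis-aligned cube centred at the origin."""
    h = edge / 2.0
    v = np.array([[x, y, z] for x in (-h, h) for y in (-h, h) for z in (-h, h)])
    # Each face of the cube is split into two triangles (indices into v).
    f = np.array([
        [0, 1, 3], [0, 3, 2],  # x = -h
        [4, 6, 7], [4, 7, 5],  # x = +h
        [0, 4, 5], [0, 5, 1],  # y = -h
        [2, 3, 7], [2, 7, 6],  # y = +h
        [0, 2, 6], [0, 6, 4],  # z = -h
        [1, 5, 7], [1, 7, 3],  # z = +h
    ])
    return v, f


def ray_triangle_distance(origin, direction, a, b, c, eps=1e-9):
    """Moller-Trumbore test: distance along a unit ray to triangle abc, or inf."""
    e1, e2 = b - a, c - a
    p = np.cross(direction, e2)
    det = e1 @ p
    if abs(det) < eps:  # ray parallel to the triangle plane
        return np.inf
    inv = 1.0 / det
    s = origin - a
    u = (s @ p) * inv
    if u < 0.0 or u > 1.0:
        return np.inf
    q = np.cross(s, e1)
    v = (direction @ q) * inv
    if v < 0.0 or u + v > 1.0:
        return np.inf
    t = (e2 @ q) * inv
    return t if t > eps else np.inf


def simulate_lidar(vertices, faces, origin, directions):
    """Range of the first hit for each predefined beam direction (inf = no return)."""
    ranges = np.full(len(directions), np.inf)
    for i, d in enumerate(directions):
        d = d / np.linalg.norm(d)
        for face in faces:
            a, b, c = vertices[face]
            ranges[i] = min(ranges[i], ray_triangle_distance(origin, d, a, b, c))
    return ranges


verts, faces = cube_mesh(0.5)
verts = verts + np.array([5.0, 0.0, 0.0])  # place the cube 5 m ahead of the sensor
beams = np.array([[1.0, 0.01, 0.02], [0.0, 1.0, 0.0]])
print(simulate_lidar(verts, faces, np.zeros(3), beams))  # first beam ≈ 4.751 m, second beam inf
```

The brute-force loop over every triangle keeps the sketch short; a practical simulator would vectorise the test or use an acceleration structure.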

The researchers generated adversarial objects in two sizes (50 cm and 75 cm edge length). For each object, 45 different position-and-orientation pairs were selected for evaluation. This is meant to mimic a scenario in which an object that is well classified in one pose can still confuse the detection system when presented at a different angle.
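Evaluating an object across many poses amounts to applying a rigid transform to its vertices before each detection query. In this hedged sketch, the pose grid (9 positions × 5 yaw angles = 45 poses), the yaw-only rotation and the `is_detected` callback are illustrative assumptions rather than the paper's exact protocol, and `toy_detector` is a placeholder for a real detection model.

```python
import numpy as np


def place_object(vertices, x, y, yaw_deg):
    """Rotate vertices about the vertical z-axis, then translate them in the x-y plane."""
    t = np.deg2rad(yaw_deg)
    rot = np.array([[np.cos(t), -np.sin(t), 0.0],
                    [np.sin(t), np.cos(t), 0.0],
                    [0.0, 0.0, 1.0]])
    return vertices @ rot.T + np.array([x, y, 0.0])


def attack_success_rate(vertices, poses, is_detected):
    """Fraction of poses at which the (hypothetical) detector callback misses the object."""
    misses = sum(not is_detected(place_object(vertices, *pose)) for pose in poses)
    return misses / len(poses)


# Hypothetical 45-pose grid: 9 positions x 5 yaw angles (the paper's split may differ).
positions = [(x, y) for x in (4.0, 6.0, 8.0) for y in (-1.0, 0.0, 1.0)]
poses = [(x, y, yaw) for (x, y) in positions for yaw in (0, 30, 45, 60, 90)]

# Vertices of a 50 cm cube centred at the origin.
cube = 0.5 * (np.array([[x, y, z] for x in (0, 1) for y in (0, 1) for z in (0, 1)], dtype=float) - 0.5)


def toy_detector(v):
    # Placeholder only: "detects" any object whose centre is closer than 6.5 m.
    return v[:, 0].mean() < 6.5


print(len(poses), attack_success_rate(cube, poses, toy_detector))
```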

The experimental results show that the attacks succeeded, and that they can even change the label assigned to the object.

The picture above shows a car mounted with a LiDAR system, with the adversarial object on the right. This object is inserted into the simulated environment in which the detection system was tested.

That these adversarial objects transfer successfully to a deployed detection model raises concerns about the large-scale deployment of such systems. LiDAR-based systems already face stiff competition from camera-based systems such as Tesla's, and these adversarial experiments could be another nail in the coffin, not to mention how expensive and bulky LiDAR units are for an autonomous vehicle.
