The National Highway Traffic Safety Administration (NHTSA) reports that in 2009 driver drowsiness caused over 70,000 car accidents, 700 of which resulted in fatalities and 36,000 in injuries. These figures exclude crashes that were not reported. Researchers have now found a way to reduce these numbers with machine learning: algorithms that detect the symptoms of drowsiness early from facial characteristics such as eye blinks, head movements and yawns.

In this article, we discuss the key findings of the research titled Driver Drowsiness Detection Using Behavioral Measures And Machine Learning Techniques: A Review Of State-of-art Techniques by Mkhuseli Ngxande, Jules-Raymond Tapamo and Michael Burke.

Factors That Can Be Studied For Drowsiness Detection

- Biological Indicators: Indicators such as how often the eyes close and how often the driver yawns can be recorded, along with facial landmarks such as the inner and outer eye corners, the eye centres, the tip of the nose, the inner and outer mouth corners and the centre of the mouth. Physiological signals such as heart rate, pulse rate and EEG are also indicators. When a person is drowsy, the power in the alpha and theta bands of the EEG increases, so a rise in alpha and theta band power indicates drowsiness.

- Vehicle behaviour: Measures such as speed, acceleration, steering angle and the position of the vehicle also play a role in detecting driver fatigue, but their accuracy is limited by factors such as the type of car, road conditions and weather conditions.

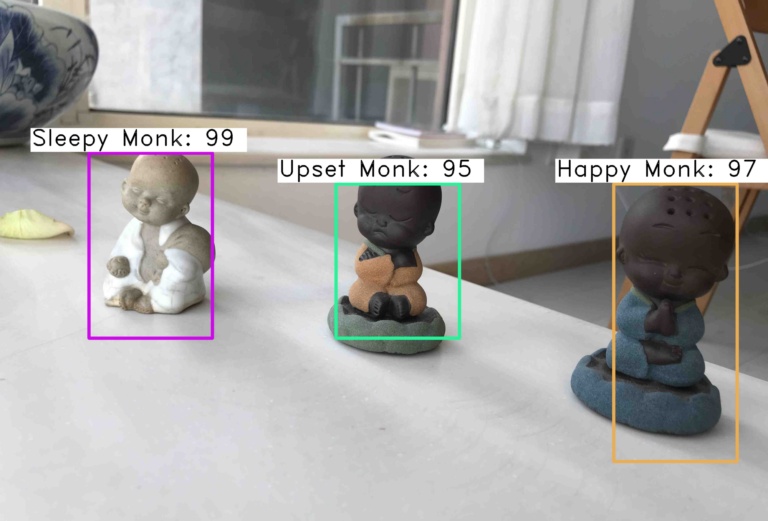

- Computer vision analysis: Facial expressions are detected using computer vision, which extracts useful information from each image and discards irrelevant information.
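To make the EEG indicator concrete, the sketch below estimates theta and alpha band power from a window of samples with a plain discrete Fourier transform and compares it against beta band power. The band edges (theta 4 to 8 Hz, alpha 8 to 13 Hz, beta 13 to 30 Hz), the use of beta as a reference band, the sampling rate and the function names are all assumptions for illustration, not the review's method; a real system would filter the raw EEG and use an FFT-based estimate such as Welch's method.

```python
import cmath
import math

# Band edges in Hz (common conventions, assumed here rather than taken from the review)
THETA = (4, 8)
ALPHA = (8, 13)
BETA = (13, 30)


def band_power(signal, fs, low, high):
    """Sum of squared DFT magnitudes over frequency bins in [low, high) Hz.

    A naive O(n^2) DFT keeps the sketch dependency-free.
    """
    n = len(signal)
    power = 0.0
    for k in range(n // 2 + 1):  # non-negative frequencies only
        freq = k * fs / n
        if low <= freq < high:
            coeff = sum(
                signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)
            )
            power += abs(coeff) ** 2
    return power


def slow_wave_ratio(signal, fs):
    """(theta + alpha) power relative to beta power; higher values suggest drowsiness.

    Using beta as the reference band is an illustrative assumption.
    """
    slow = band_power(signal, fs, *THETA) + band_power(signal, fs, *ALPHA)
    return slow / band_power(signal, fs, *BETA)


if __name__ == "__main__":
    fs = 128  # hypothetical sampling rate in Hz; the window below is one second long
    times = [i / fs for i in range(fs)]
    drowsy_like = [math.sin(2 * math.pi * 10 * t) + 0.2 * math.sin(2 * math.pi * 20 * t) for t in times]
    alert_like = [0.2 * math.sin(2 * math.pi * 10 * t) + math.sin(2 * math.pi * 20 * t) for t in times]
    print(slow_wave_ratio(drowsy_like, fs), slow_wave_ratio(alert_like, fs))
```

With a synthetic window in which a 10 Hz (alpha) rhythm dominates, the ratio comes out well above 1; when a 20 Hz (beta) rhythm dominates, it falls well below 1.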

ML Methods To Detect Drowsiness

Facial Action Coding System (FACS):

FACS is one of the most popular systems for coding facial expressions. It decomposes facial expressions into 46 component movements, which correspond to the movements of individual facial muscles. Head motions can be detected through automatic eye tracking and an accelerometer. FACS is also capable of discovering new patterns based on emotional states.

Support Vector Machines (SVM):

For face detection, a Haar-feature classifier is used: it takes the captured image as input and outputs the detected face. The eyes are then located within the detected face, and the eye image is passed on to the ML algorithm. An SVM is trained to decide whether the eyes are open or closed, and this decision determines whether the alarm is triggered. The training set contains images of closed eyes and images of open eyes. Once the model is built, it is used to classify each new pre-processed eye image.

SVMs can efficiently solve both linear and, through kernel functions, non-linear classification problems. An SVM finds an optimal classifier by maximizing the margin around the separating hyperplane.
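The margin-maximizing idea can be sketched without any library as a linear SVM trained by sub-gradient descent on the hinge loss with an L2 penalty (the Pegasos method, swapped in here for the solvers that SVM libraries normally use). Each eye image is assumed to be already reduced to a short feature vector; the two features in the example (hypothetical "eye openness" and "sclera brightness" scores), the label encoding and all parameter values are illustrative assumptions. The kernel variant used for non-linear problems is not shown.

```python
import random


def train_linear_svm(samples, labels, lam=0.01, iterations=5000, seed=0):
    """Pegasos-style training of a linear SVM (hinge loss + L2 regularisation).

    samples: feature vectors, e.g. summaries of pre-processed eye images (hypothetical)
    labels:  +1 for "open", -1 for "closed" (assumed encoding)
    A constant 1.0 is appended to every sample so the bias is learned as a weight.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in samples]
    w = [0.0] * len(data[0])
    for t in range(1, iterations + 1):
        i = rng.randrange(len(data))  # pick one training example at random
        x, y = data[i], labels[i]
        eta = 1.0 / (lam * t)  # decreasing step size
        margin = y * sum(wj * xj for wj, xj in zip(w, x))
        # Shrink the weights (gradient of the L2 penalty) ...
        w = [(1 - eta * lam) * wj for wj in w]
        # ... and, if the example is inside the margin, step towards classifying it correctly
        if margin < 1:
            w = [wj + eta * y * xj for wj, xj in zip(w, x)]
    return w


def predict(w, sample):
    """Return +1 (open) or -1 (closed) from the sign of the decision function."""
    score = sum(wj * xj for wj, xj in zip(w, list(sample) + [1.0]))
    return 1 if score >= 0 else -1


if __name__ == "__main__":
    open_eyes = [[0.9, 0.8], [0.8, 0.9], [0.85, 0.95]]
    closed_eyes = [[0.1, 0.2], [0.2, 0.1], [0.15, 0.05]]
    weights = train_linear_svm(open_eyes + closed_eyes, [1, 1, 1, -1, -1, -1])
    print(predict(weights, [0.95, 0.9]), predict(weights, [0.05, 0.1]))
```

In practice a library implementation such as scikit-learn's `SVC` would replace this hand-written trainer, and the features would come from the pre-processed eye images themselves.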

Hidden Markov Model (HMM):

An HMM is a statistical model that makes predictions about hidden states based on observations. HMM-based techniques have been used for eye tracking based on colour and geometrical features.
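An HMM can be illustrated with two hidden states (alert and drowsy) and one observation per video frame (the eye is seen open or closed). The sketch runs the forward algorithm, normalising at each step, to estimate how likely the driver is to be drowsy given the frames seen so far. The states, observations and all probabilities below are hypothetical; in a real system they would be learned from data, for example with the Baum-Welch algorithm.

```python
# Hypothetical model parameters, for illustration only
START = {"alert": 0.9, "drowsy": 0.1}
TRANS = {
    "alert": {"alert": 0.95, "drowsy": 0.05},
    "drowsy": {"alert": 0.10, "drowsy": 0.90},
}
EMIT = {
    "alert": {"open": 0.9, "closed": 0.1},
    "drowsy": {"open": 0.5, "closed": 0.5},
}


def hmm_filter(observations, start, trans, emit):
    """Forward algorithm: P(current hidden state | all observations so far).

    start[s]     = P(first state is s)
    trans[s][s2] = P(next state is s2 | current state is s)
    emit[s][o]   = P(observation o | state s)
    Beliefs are renormalised every step to avoid underflow on long videos.
    """
    states = list(start)
    belief = {s: start[s] * emit[s][observations[0]] for s in states}
    total = sum(belief.values())
    belief = {s: p / total for s, p in belief.items()}
    for obs in observations[1:]:
        # Predict the next state from the transition model, then weight by the observation
        belief = {
            s2: emit[s2][obs] * sum(belief[s] * trans[s][s2] for s in states)
            for s2 in states
        }
        total = sum(belief.values())
        belief = {s: p / total for s, p in belief.items()}
    return belief


if __name__ == "__main__":
    frames = ["open"] * 10 + ["closed"] * 10
    print(hmm_filter(frames, START, TRANS, EMIT))
```

A long run of closed-eye frames pushes the belief towards the drowsy state, while mostly open frames keep it on alert.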

Convolutional Neural Network (CNN):

This method uses layers of spatial convolutions, which are well suited to images because images exhibit strong spatial correlations. According to the review, CNNs produce more accurate results than SVMs and HMMs. The Viola and Jones algorithm can be used to detect faces. The detected face images are cropped to squares and fed to the first layer of the network, which applies a set of filters, and the output is passed on for classification.
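The first layer of such a network slides small filters over the image. The dependency-free sketch below shows one convolution (implemented, as in most deep learning libraries, as cross-correlation without flipping the kernel), a ReLU activation and 2x2 max pooling. The hand-picked horizontal-edge kernel and the toy image are illustrative assumptions; in a trained CNN the filter weights are learned, and a framework such as PyTorch or TensorFlow would be used instead of nested lists.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution as used in CNN layers (cross-correlation, kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Zero out negative responses."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool2x2(feature_map):
    """Keep the strongest response in each non-overlapping 2x2 block."""
    return [
        [
            max(feature_map[i][j], feature_map[i][j + 1],
                feature_map[i + 1][j], feature_map[i + 1][j + 1])
            for j in range(0, len(feature_map[0]) - 1, 2)
        ]
        for i in range(0, len(feature_map) - 1, 2)
    ]


if __name__ == "__main__":
    # Toy 6x6 greyscale patch: dark top half, bright bottom half (a horizontal edge)
    image = [[0] * 6] * 3 + [[1] * 6] * 3
    # Hypothetical hand-set filter that responds to dark-above-bright edges
    edge_kernel = [[-1, -1, -1], [0, 0, 0], [1, 1, 1]]
    print(max_pool2x2(relu(conv2d(image, edge_kernel))))
```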

Blinking is detected for only one eye, either right or left, which saves the memory and computation needed to track both eyes. Information from one eye is sufficient because both eyes blink together.
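Tracking a single eye reduces blink detection to a one-dimensional signal. Assuming an upstream classifier (not shown, hypothetical here) has already labelled each frame as eye open or closed, blinks can be counted as runs of closed frames that end with the eye reopening, and turned into a rate using the frame rate. The `min_frames` parameter for ignoring single-frame noise is an illustrative assumption.

```python
def count_blinks(eye_closed, min_frames=1):
    """Count blinks in a per-frame sequence for one eye (True = closed).

    A blink is a run of at least `min_frames` closed frames followed by the eye reopening;
    a closed run still in progress at the end of the sequence is not counted.
    """
    blinks = 0
    closed_run = 0
    for closed in eye_closed:
        if closed:
            closed_run += 1
        else:
            # The eye has reopened: a long enough closed run counts as one blink
            if closed_run >= min_frames:
                blinks += 1
            closed_run = 0
    return blinks


def blinks_per_minute(eye_closed, fps, min_frames=1):
    """Blink rate over the whole sequence, given the camera frame rate."""
    minutes = len(eye_closed) / fps / 60
    return count_blinks(eye_closed, min_frames) / minutes


if __name__ == "__main__":
    frames = [False, False, True, True, False, False, True, False, True, True, True, False]
    print(count_blinks(frames), count_blinks(frames, min_frames=2))
```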

All the methods are programmed in Python using the OpenCV library, which has built-in, pre-trained classifiers for features such as faces, eyes and smiles.
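OpenCV's pre-trained face and eye detectors are Haar cascade classifiers in the Viola and Jones style; in OpenCV they are loaded with `cv2.CascadeClassifier` and applied with its `detectMultiScale` method. The core trick behind them can be sketched in plain Python: an integral image lets the sum over any rectangle be computed with four lookups, so a Haar-like feature (the difference between adjacent rectangle sums) costs the same at any size. The function names and the toy image are illustrative assumptions, not OpenCV internals.

```python
def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded first row and column)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), using four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_vertical_feature(ii, x, y, w, h):
    """Haar-like feature: top rectangle sum minus the bottom rectangle sum (window w by 2h).

    Strongly negative values mean a dark band above a bright one, as with an eye above a cheek.
    """
    return rect_sum(ii, x, y, w, h) - rect_sum(ii, x, y + h, w, h)


if __name__ == "__main__":
    # Toy 4x4 patch: dark rows (10) above bright rows (200)
    patch = [[10] * 4, [10] * 4, [200] * 4, [200] * 4]
    ii = integral_image(patch)
    print(two_rect_vertical_feature(ii, 0, 0, 4, 2))
```

A cascade combines many such features, each thresholded by a weak classifier, and rejects non-face windows early so that only promising regions reach the later stages.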

Typical Steps Involved In Drowsiness Detection

- Video Capture: Video from a camera is broken down into a series of image frames.

- Face Detection: The face is detected first in each image frame. A Convolutional Neural Network (CNN) can take the whole image as input and pass it through multiple filters to extract the face features. The Viola and Jones algorithm is another way to extract the driver's face from the image frames.

- Face Feature Extraction: Landmark localization, Histogram of Oriented Gradients (HOG) and Local Binary Patterns (LBP) are some of the methods used to extract face features. This step simplifies the image by keeping useful information and discarding irrelevant information. Eyes can be detected by pixel differences or by using the Sobel vertical edge operator.

- Feature Analysis: The extracted face features can then be processed further, for example with PERCLOS (percentage of eyelid closure) or EAR (eye aspect ratio) for eye analysis, or with mouth-based methods for yawning detection. Some of these algorithms convert the RGB images to grey-level images using skin colour segmentation, detect the eyes and then calculate the speed at which the eyes close. An algorithm for real-time eye detection under infrared illumination has been developed for calculating PERCLOS, which is the most popular and most reliable measure for drowsiness detection. EAR is calculated from the ratio of distances between landmarks around the eye: the vertical eyelid distances relative to the horizontal distance between the eye corners.

- Classification: This stage uses classifiers that decide, with the aid of weighted parameters, whether the driver is drowsy. For example, the eye image is pre-processed (converted to greyscale) and then classified by a machine learning classifier as open or closed. The normal blink rate is roughly 10 blinks per minute.
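The EAR and PERCLOS measures from the feature-analysis step can be sketched as follows. The EAR function uses the common six-landmark layout (p1 and p4 at the eye corners, p2 and p3 on the upper eyelid, p6 and p5 on the lower eyelid), which is an assumption about how the landmarks are ordered. The PERCLOS function approximates "percentage of eyelid closure" as the fraction of frames in a window whose EAR falls below a threshold; the 0.2 threshold is a hypothetical value that would need tuning for each camera and driver.

```python
import math


def eye_aspect_ratio(p1, p2, p3, p4, p5, p6):
    """EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|).

    The ratio stays roughly constant while the eye is open and drops towards 0 as it closes.
    """
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def perclos(ear_values, closed_threshold=0.2):
    """Fraction of frames in the window where the eye counts as closed (EAR below threshold).

    closed_threshold is a hypothetical value, not one taken from the review.
    """
    closed = sum(1 for ear in ear_values if ear < closed_threshold)
    return closed / len(ear_values)


if __name__ == "__main__":
    open_eye = [(0, 0), (2, 1.5), (4, 1.5), (6, 0), (4, -1.5), (2, -1.5)]
    closed_eye = [(0, 0), (2, 0.3), (4, 0.3), (6, 0), (4, -0.3), (2, -0.3)]
    window = [eye_aspect_ratio(*open_eye), eye_aspect_ratio(*closed_eye)] * 5
    print(perclos(window))
```

A classifier in the final stage could then combine such per-window values (PERCLOS, blink rate, yawn frequency) with weights to decide whether to raise an alarm.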

Conclusion

Machine learning techniques have wide applications in driver drowsiness detection and could help avoid many accidents. The same methods could also be extended to aeroplane pilots.