The pandemic forced the corporate world to abandon their offices and work from home. As a matter of fact, the in-person meetings were replaced by virtual meetups. Thanks to this new tradition, video conferencing companies like Zoom benefited tremendously. The sudden rush to embrace virtual worlds led to various challenges. Users were annoyed by interruptions, noise, monotonous walls and more. So, companies that offer video call services allowed users to change their background and made changes so that the noise in audio is reduced.

These tweaks are usually the result of machine learning models running in the background. Loading these models and running them for inference can be slow. So, models need to be small along with the imagery. More so, if you are launching these services on your browser. Google Workspace (formerly G-Suite) has done well in this regard by making the voice calls more clear and the backgrounds more aesthetic for its users. In a recent blog post, Google discussed how they had achieved such high quality for their video services.

The engineers at Google diligently crafted a pipeline that leverages many ML innovations that Google has developed over the years. One of them is MediaPipe, an open-source, cross-platform framework for building pipelines to process perceptual data of different modalities. More about it in the next section.

About MediaPipe

Most of the object detection usually addresses two dimensional or 2D objects. The bounding boxes are always rectangles and squares but never a cube. By extending prediction to 3D, one can capture an object’s size, position and orientation in the world, leading to a variety of applications in robotics, self-driving vehicles, image retrieval, and augmented reality.

Google AI released MediaPipe Objectron, a mobile real-time 3D object detection pipeline for everyday objects. This pipeline detects objects in 2D images, and estimates their poses and sizes through a machine learning (ML) model, trained on a newly created 3D dataset. Objectron computes oriented 3D bounding boxes of objects in real-time on mobile devices.

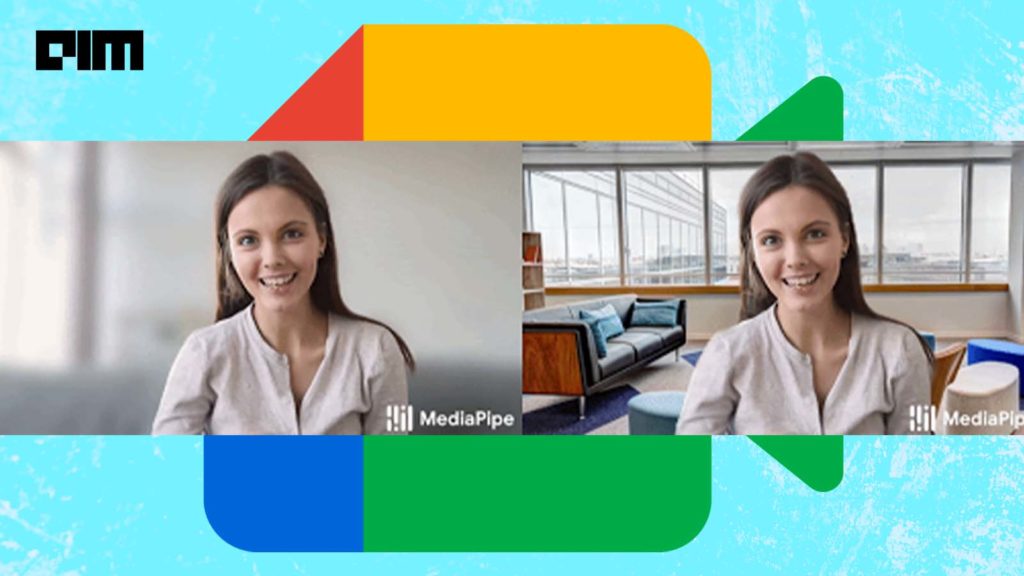

Using MediaPipe, Google introduced a new in-browser ML solution for blurring and background replacement in Google Meet. With the help of MediaPipe, ML models and OpenGL shaders run efficiently on the browser. Google claims that it has achieved real-time performance with low power consumption, even on low-power devices.

How Did Google ‘Meet’ The Challenge

“…other solutions require installing additional software, Meet’s features are powered by cutting-edge web ML technologies built with MediaPipe that work directly in your browser — no extra steps necessary.”

To provide real-time, in-browser performance, Google combined efficient on-device ML models, WebGL-based rendering, and web-based ML inference via XNNPACK and TensorFlow Lite.

MediaPipe leverages WebAssembly, a low-level binary code format designed specifically for web browsers. This helps improve speed for compute-heavy tasks. During a video call, the browser converts WebAssembly instructions into native machine code that executes much faster than traditional JavaScript code.

The procedure can be summarised as follows:

- Each video frame is processed by segmenting a user from their background.

- ML inference is used to compute a low-resolution mask.

- The mask is further refined to align it with the image boundaries.

- This mask is then used to render the video output via WebGL2, with the background blurred or replaced.

The Google Meet team has built a Segmentation model for the smooth functioning of on-device ML models. These models need to be ultra-lightweight for fast inference, low power consumption, and small download size. Mount these models onto a browser, and the resolution takes a toll on the number of FLOPs necessary to process each frame. So, smaller images are mandatory for a better experience.

So, the images are downsampled to a smaller size before fed to the model. The team has brought the encoder-decoder model size down to 400KB by modifying the exporting the model to TensorFlow Lite using float16 quantization. This, said Google, resulted in a slight loss in weight precision with no drop in quality. The resulting model has 193K parameters and is only 400KB in size. For the encoder model, they have used MobileNetV3-small.

For background replacement, the team adopted a compositing technique, known as light wrapping. Light wrapping softens the segmentation edges by allowing background light to spill over onto foreground elements and will enable users to blend well with their backgrounds. Also removes the halo effects.

Read more about it here.