The primary task of a Deep Neural Network, especially in image recognition, video processing and similar applications, is to extract features in a systematic way: the early layers identify edges and gradients, and later layers build textures on top of them. Taken as a whole, the convolutional layers then form parts of objects and finally whole objects, which summarize the features in an input image.

In this process, maintaining the same image size throughout the Neural Network means that many layers must be stacked, each operating on full-resolution feature maps. This is not sustainable due to the huge computing resources it demands. At the same time, we need enough convolutions to extract meaningful features.

Suppose we are trying to identify a cat. For this, we need to perform convolutions on the image by passing Kernels over it.

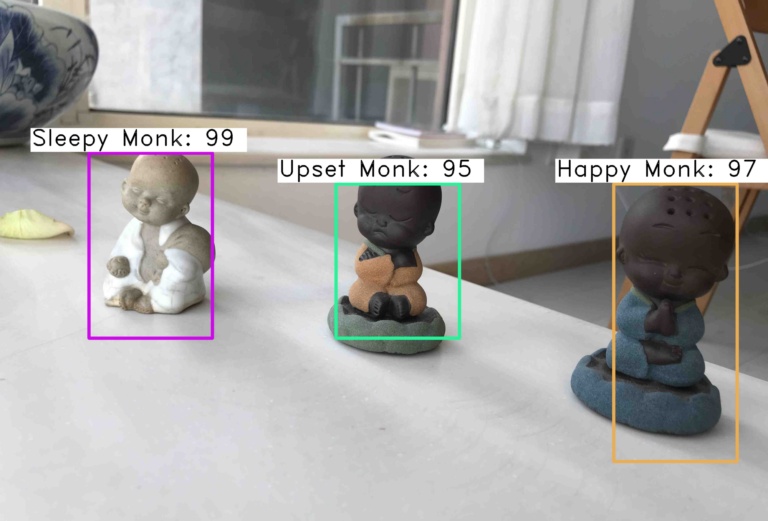

To gain a better understanding of this, let us split the image into multiple parts. Consider the two images below, which are subsets of the original image: one contains the head of the cat along with the background, and the other contains only the head of the cat. In the first image, the head alone is enough for us to identify the cat, and we don’t need the background. In addition, we need predominant features to be extracted, such as the eye of the cat, which acts as a differentiator for identifying the image.

If we observe the feature maps produced by the convolution layers, they are sensitive to the location of the features in the input. This can be addressed by downsampling the feature maps. So, to maintain a balance between computing resources and extracting meaningful features, downsampling should be done at proper intervals.

In order to achieve this, we use a concept called Pooling. Pooling provides an approach to downsample feature maps by summarizing the presence of features in the feature maps.

The most commonly used Pooling methods are “Max Pooling” and “Average Pooling”.

Here we shall discuss Max Pooling.

Max Pooling is a sliding-window operation, similar to convolution, in which the Kernel outputs the maximum value of the area it covers. In effect, Max Pooling tells the Convolutional Neural Network to carry forward only the information with the largest amplitude in each area.

Consider Max Pooling on a 4*4 channel using a 2*2 Kernel and a stride of 2. Because the stride equals the Kernel size, the windows do not overlap and the output is a 2*2 channel. In the first 2*2 window the Kernel covers, the channel has the four values 8, 3, 4 and 7. Max Pooling picks the maximum value from that set, which is “8”.
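The same example can be sketched in a few lines of plain Python. This is only a minimal illustration, not an optimized implementation: the function name `max_pool_2d` is our own, and apart from the first window (8, 3, 4, 7) the values in the 4*4 channel are made up for the demonstration.

```python
def max_pool_2d(channel, kernel=2, stride=2):
    """Slide a kernel*kernel window over a 2-D list and keep each window's maximum."""
    out_h = (len(channel) - kernel) // stride + 1
    out_w = (len(channel[0]) - kernel) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Collect every value the kernel currently covers.
            window = [
                channel[i * stride + di][j * stride + dj]
                for di in range(kernel)
                for dj in range(kernel)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled


# The top-left 2*2 window (8, 3, 4, 7) comes from the text above;
# all other values are hypothetical, chosen only for illustration.
channel = [
    [8, 3, 5, 1],
    [4, 7, 2, 6],
    [1, 0, 9, 2],
    [3, 2, 4, 5],
]

pooled = max_pool_2d(channel)
print(pooled)  # [[8, 6], [3, 9]]
```

The 16 input values are reduced to 4, one maximum per window.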

In our context, we want a Kernel that amplifies the cat’s eye to such an extent that the predominant information is not lost even after Max Pooling. When Max Pooling discards pixels, the 25% of pixels that remain are enough to carry the information about the cat. So there will be one channel, or feature map, that retains the information about the cat’s eye, while we gain the benefit of dropping 75% of the pixels. Put another way, we filter out information we don’t want by building Kernels that let the required information pass through Max Pooling.

When Should You Perform Max Pooling in Your Network?

Analyze your image. Say your image is of size 28*28 pixels. If some features become visible in this image once the receptive field reaches 5*5, that is a good point to perform Max Pooling: once you can extract some features, pooling is advisable. Max Pooling is not advised in the initial stages of the Convolutional Neural Network, as the Kernels there are still extracting edges and gradients.
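To see when a 5*5 receptive field is reached, the receptive field can be tracked layer by layer. The sketch below uses the standard recurrence (the receptive field grows by `(kernel - 1)` times the current spacing between neighbouring outputs). The helper name `receptive_field` and the layer list are our own assumptions: two 3*3 convolutions with stride 1, followed by a 2*2 Max Pooling with stride 2.

```python
def receptive_field(layers):
    """Return the receptive field (in input pixels) after each layer.

    layers: list of (kernel_size, stride) tuples, applied in order.
    """
    rf = 1    # one input pixel "sees" only itself
    jump = 1  # distance, in input pixels, between neighbouring outputs
    history = []
    for kernel, stride in layers:
        rf = rf + (kernel - 1) * jump
        jump = jump * stride
        history.append(rf)
    return history


# Assumed layout: conv 3*3 (stride 1), conv 3*3 (stride 1), max pool 2*2 (stride 2).
layers = [(3, 1), (3, 1), (2, 2)]
rf_history = receptive_field(layers)
print(rf_history)  # [3, 5, 6]
```

Under these assumptions the receptive field is 5*5 after the second convolution, which is the point at which pooling is applied.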

Code Illustration (in PyTorch)

We take an image of size 28*28. A convolution operation (Layer 1) is performed on it with a 3*3 Kernel, resulting in a receptive field of 3*3. A second convolution operation (Layer 2) is then performed, and the receptive field becomes 5*5. As a 5*5 receptive field is enough to identify features on a 28*28 image, Max Pooling is performed, as shown in the Transition block highlighted in “Yellow” below.
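A minimal PyTorch sketch of such a network might look like the following. It is not the original code from this article: the class name `TinyNet`, the channel counts (8 and 16), the single-channel (grayscale) input, the ReLU activations and the absence of padding are all assumptions made for illustration.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    """Two 3*3 convolutions followed by a Max Pooling transition block (illustrative only)."""

    def __init__(self):
        super().__init__()
        # Channel counts are assumed; the text specifies only the 3*3 Kernel.
        self.conv1 = nn.Conv2d(1, 8, kernel_size=3)   # Layer 1: receptive field 3*3
        self.conv2 = nn.Conv2d(8, 16, kernel_size=3)  # Layer 2: receptive field 5*5
        # Transition block: downsample once features are visible.
        self.pool = nn.MaxPool2d(kernel_size=2, stride=2)

    def forward(self, x):
        x = torch.relu(self.conv1(x))  # 28*28 -> 26*26 (no padding)
        x = torch.relu(self.conv2(x))  # 26*26 -> 24*24
        return self.pool(x)            # 24*24 -> 12*12


model = TinyNet()
dummy = torch.randn(1, 1, 28, 28)  # batch of one grayscale 28*28 image
out = model(dummy)
print(out.shape)  # torch.Size([1, 16, 12, 12])
```

Without padding, each 3*3 convolution trims two pixels from the height and width, and the pooling layer then halves what remains.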

Features of Max Pooling

Max Pooling adds a slight degree of shift invariance, rotational invariance and scale invariance.

A slight change or shift in the input does not change the pooled output, as we take the max value from each 2*2 region. This is called shift invariance. Similarly, Max Pooling is slightly rotation-invariant and scale-invariant.
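A small plain-Python experiment can illustrate why this invariance is only slight. The helpers `max_pool_2x2` and `single_activation` and the activation value 9 are hypothetical, introduced only for this sketch. A feature that moves by one pixel inside the same 2*2 window leaves the pooled output unchanged, while a move into the neighbouring window changes it.

```python
def max_pool_2x2(fmap):
    """2*2 Max Pooling with stride 2 on a 2-D list with even height and width."""
    return [
        [
            max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
            for j in range(0, len(fmap[0]), 2)
        ]
        for i in range(0, len(fmap), 2)
    ]


def single_activation(row, col, size=4):
    """A size*size map of zeros with one strong activation (9) at (row, col)."""
    fmap = [[0] * size for _ in range(size)]
    fmap[row][col] = 9
    return fmap


original = max_pool_2x2(single_activation(0, 0))
shifted_by_one = max_pool_2x2(single_activation(0, 1))  # still inside the first window
shifted_by_two = max_pool_2x2(single_activation(0, 2))  # now in the next window

print(original == shifted_by_one)  # True
print(original == shifted_by_two)  # False
```

Whether a one-pixel shift is absorbed depends on where the feature sits relative to the window boundaries, which is why the invariance is described as slight.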

This article is presented by AIM Expert Network (AEN), an invite-only thought leadership platform for tech experts.