Recently, researchers from Facebook AI introduced a Transformer architecture, that is known to be with more memory as well as time-efficient, called Linformer. According to the researchers, Linformer is the first theoretically proven linear-time Transformer architecture.

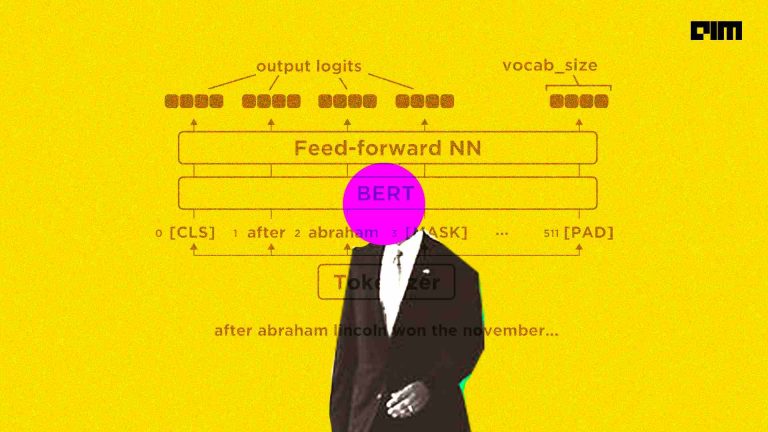

For a few years now, the number of parameters in Natural Language Processing (NLP) transformers has grown drastically, from the original 340 million parameters introduced in BERT-Large to 175 billion parameters in GPT-3 models. The Transformer models have been applied into a wide variety of applications such as machine translation, question answering, text classification and other such.

Why Linformer?

Training and deploying large Transformer models can be prohibitively costly for long sequences. The key efficiency bottleneck in standard Transformer models is the model’s self-attention mechanism.

In the self-attention mechanism, the representation of each token is updated by visiting all the other tokens that are present in the previous layer. This operation is essential for retaining long-term information. It also provides Transformers with the edge over recurrent models on long sequences. However, attending to all the tokens at each layer provokes a complexity of O(n2) with respect to the sequence length.

This is the reason why the researchers introduced the Linformer architecture and demonstrated that the self-attention mechanism could be approximated by a low-rank matrix. The Linformer architecture reduces the overall self-attention complexity from O(n2) to O(n) in both time and space.

The Tech Behind Linformer

Linformer is a Transformer architecture for tackling the self-attention bottleneck in Transformers. It reduces self-attention to an O(n) operation in both space- and time complexity. It is a new self-attention mechanism which allows the researchers to compute the contextual mapping in linear time and memory complexity with respect to the sequence length.

The researchers first compared the pretraining performance of the proposed architecture against RoBERTa, a popular Transformer model by Facebook AI. For pretraining, they used BookCorpus dataset along with English Wikipedia, which includes 3300 million words. Also, all the models are pre-trained with the masked-language-modelling (MLM) objective, and the training for all experiments are parallelised across 64 Tesla V100 GPUs with 250k updates.

Furthermore, the researchers fine-tuned the Linformer architecture on popular datasets like IMDB and SST-2(sentiment classification), as well as QNLI (natural language inference) and QQP (textual similarity).

The researchers observed that the Linformer Transformer model with values n = 512 and k = 128 has the comparable downstream performance to the popular RoBERTa model. It is even noticed that Linformer slightly outperform it at k = 256. Moreover, although the layerwise sharing strategy of the Linformer Transformer shares a single projection matrix across the entire model, it actually performs the best accuracy outcome of all the three-parameter sharing strategies.

They also added that the Linformer pretrained with longer sequence length has similar results to the one pretrained with the shorter length. As a result, it empirically establishes the notion that the performance of the Linformer model is largely determined by the projected dimension k instead of the ratio n/k.

Applications of Linformer

Currently, Facebook AI Research (FAIR) lab is using Linformer to analyse billions of pieces of content on Facebook as well as on Instagram in different regions around the globe. In a blog post, developers stated that this Transformer architecture had assisted them in making steady progress in catching hate speech and content that incites violence.

The researchers developed the Linformer architecture with an aim to unlock the capabilities of powerful AI models, such as RoBERTa, BERT, XLM-R, among others. The architecture makes it possible to use them efficiently at scale.

According to the researchers, the main reason to use Linformer is that, with the standard Transformers, the volume of required processing power rises at a geometric rate as the input length increases. However, with Linformer, the number of computations increases only at a linear rate. This, in result, makes it possible to utilise the larger pieces of text to train models, thus achieve better performance.