Yann LeCun is on the defensive. The deep learning pioneer and chief of Meta AI has been warning users against ChatGPT, the AI chatbot sensation of the moment. According to LeCun, the chatbot is ‘nothing revolutionary’, even though he admits that the public perceives it that way. The best compliment the French scientist managed to pay the chatbot was to call it “well put together and nicely done”.

As ChatGPT became omnipresent, LeCun took it upon himself to give the world a reality check. He repeatedly emphasised that realistically ChatGPT could be “useful and fun” but it was nowhere close to human-level intelligence and couldn’t be relied on for consequential tasks. To most of Twitter, LeCun appeared plain jealous.

Absolutely.

— Grady Booch (@Grady_Booch) November 17, 2022

Galactica is little more than statistical nonsense at scale.

Amusing. Dangerous. And IMHO unethical. https://t.co/15DAFJCzIb

Galactica’s public failure

Shortly before ChatGPT’s release on November 30, Meta had unveiled its own LLM, Galactica, which was built to assist scientists. The LLM was trained on 48 million examples of scientific research papers, websites, textbooks, lectures, encyclopaedias and articles. What could have been Meta’s big moment ended when the model was pulled down after three days of flak from the public. Galactica was a much bigger risk than ChatGPT: although it came with a warning that it was prone to ‘hallucinating’ like most LLMs, it claimed to take on serious scientific work, which ChatGPT did not.

Galactica cited papers that did not exist, produced racist output and wrote articles that presented fictitious events as real. The criticism did not come from just anybody; it came from experts in the scientific community.

LeCun was understandably angry about the withdrawal of Galactica and has since continued to defend it, pointing out how little heat ChatGPT got in comparison. Calling the criticism against Galactica a ‘torrent of vitriol’, he noted, ‘No such vitriol against ChatGPT it seems, though it makes sh*t up just as often’.

While LeCun may have become more vocal in denouncing LLMs, he has always been candid about their limitations. In August last year, he co-authored a piece with Jacob Browning for Noema titled ‘AI and the Limits of Language’, which called LLMs ‘shallow’.

LeCun’s final word on LLMs

After much name-calling, and assumptions that the Meta AI head simply resented the attention OpenAI was receiving, LeCun yesterday laid out in a tweet everything he felt about LLMs.

LeCun stated that LLMs like Galactica or even the GPT-3.5-based ChatGPT could be used as writing aids but couldn’t be expected to reason or react like a human. He also believes that there “will be better systems that are factual, non toxic and controllable. They just won’t be auto-regressive LLMs”.

To LeCun, autoregressive LLMs like ChatGPT were doomed to only do basic tasks. An autoregressive model predicts each new value from the values that came before it, which is why the method is widely used in statistics to study economics, nature and other processes. An autoregressive language model applies the same idea to text, generating one token at a time based on the tokens already produced. The models in the GPT family, all by OpenAI, are autoregressive language models. (T5, sometimes mentioned alongside them, is a Google model and not part of the GPT family.)
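To make the autoregressive idea concrete, here is a minimal sketch in Python. It is not how ChatGPT works internally: it assumes a toy bigram model that looks only at the single previous word, whereas GPT-style models use a neural network conditioned on the whole preceding context. The corpus and the function names `train_bigram` and `generate` are invented for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_new_tokens):
    """Greedy autoregressive decoding: every new token is picked from
    what has already been generated (here, only the last token)."""
    output = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(output[-1])
        if not followers:
            break  # the last token was never followed by anything
        # Append the most frequent follower, then feed it back in.
        output.append(followers.most_common(1)[0][0])
    return output


# Toy corpus, chosen purely for illustration.
corpus = "the cat sat on the mat and the cat sat on the rug".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 5)))
```

The loop is the point: the model’s own output becomes its next input, so an early mistake carries forward into everything generated after it. That compounding of errors is one reason critics such as LeCun doubt that this family of models can be made reliably factual.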

This is LeCun’s main grouse with autoregressive LLMs: in his view, scaling them up will never be the route to AGI in machines. He has tweeted on the subject saying sarcastically, “Scaling up auto-regressive LLMs will make them ascend to human-level AI as much scaling up parachutes will make them climb to the stratosphere”. In other words, he doesn’t expect ChatGPT to do much more than correct grammar, finish sentences or summarise an essay.

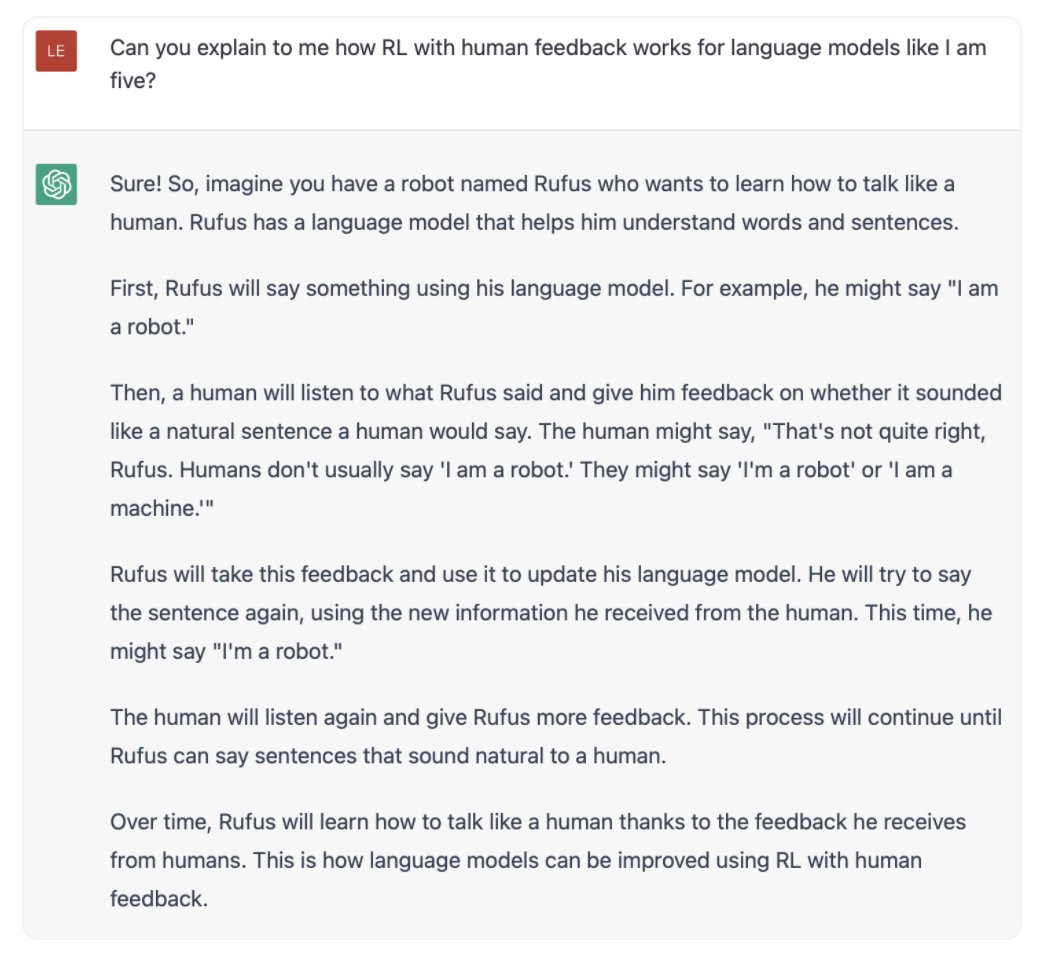

LeCun also dismissed reinforcement learning from human feedback (RLHF), the technique that ChatGPT relies on, once even tweeting, “RLHF is even more inefficient on trolls than it is on auto-regressive LLMs.”

LeCun’s dismissal of ChatGPT’s ways

OpenAI had banked on RLHF to optimise its LLM and align it more closely with human values by incorporating human feedback. But to LeCun this meant that the team would keep applying patches to a wound that would keep reopening and possibly even get worse. In his post LeCun simply stated, “LLMs can be mitigated but not fixed by human feedback.”

He’s not wrong. Users of a ChatGPT subreddit have already come up with a sly method that gets the chatbot to violate its own rules by creating an alter ego called ‘DAN’, short for ‘Do Anything Now’. In its DAN form, ChatGPT openly responded to far more controversial questions and to requests involving illegal activities, happily describing how to make a bomb or start a fire.

While I agree with Yann that LLMs are not on the direct path to general AI (despite their upcoming practical applications)… I must point out that LLMs are the first big success story of self-supervised learning, something that Yann (among others) has talked about for years https://t.co/LBbHjqF4fE

— François Chollet (@fchollet) February 4, 2023

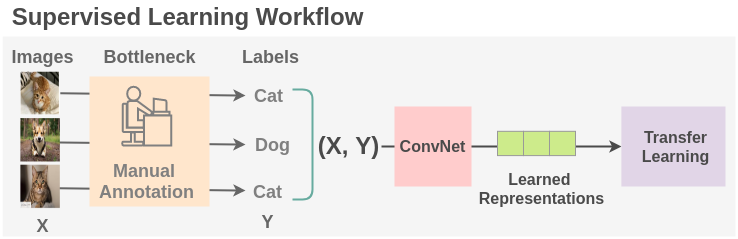

To LeCun, the only route to human-level intelligence was self-supervised learning. A model trained with self-supervised learning could be adapted to several downstream tasks, which made such models more scalable and more reliable on the way to the AGI dream.

LeCun also hinted, while responding to a commenter on LinkedIn, that Meta might release its own model very soon. And if there were any doubts, Meta CEO Mark Zuckerberg himself reiterated how much the company was relying on AI. His statement may answer all questions: “One of my goals for Meta is to build on our research to become a leader in generative A.I.”