
The accidental open sourcing of ‘LLaMA’, Meta’s LLM, acted as a spark to rejuvenate the open-source AI community. Now, it seems that Microsoft wants to replicate Meta’s accidental success with the launch of ‘HuggingGPT’, also known as ‘JARVIS’. This system, built on ChatGPT, leverages Hugging Face, one of the biggest pillars of open-source AI research, to take a new approach to solving complex AI problems.

Researchers from Microsoft detailed a way to use LLMs as the user-facing part of the system, using their natural language capabilities to interface with other models. This seems to be somewhat of a spiritual successor to ‘Visual ChatGPT’, which used a similar approach to plug LLMs into text-to-image models.

JARVIS explained

Named after Iron Man’s personal AI assistant, ‘JARVIS’ aims to bring together the power of the open-source community and ChatGPT. Just as JARVIS taps into Tony Stark’s vast arsenal of services and serves as an AI butler of sorts, HuggingGPT sits between the user and the models, calling on specialised models for specific use cases.

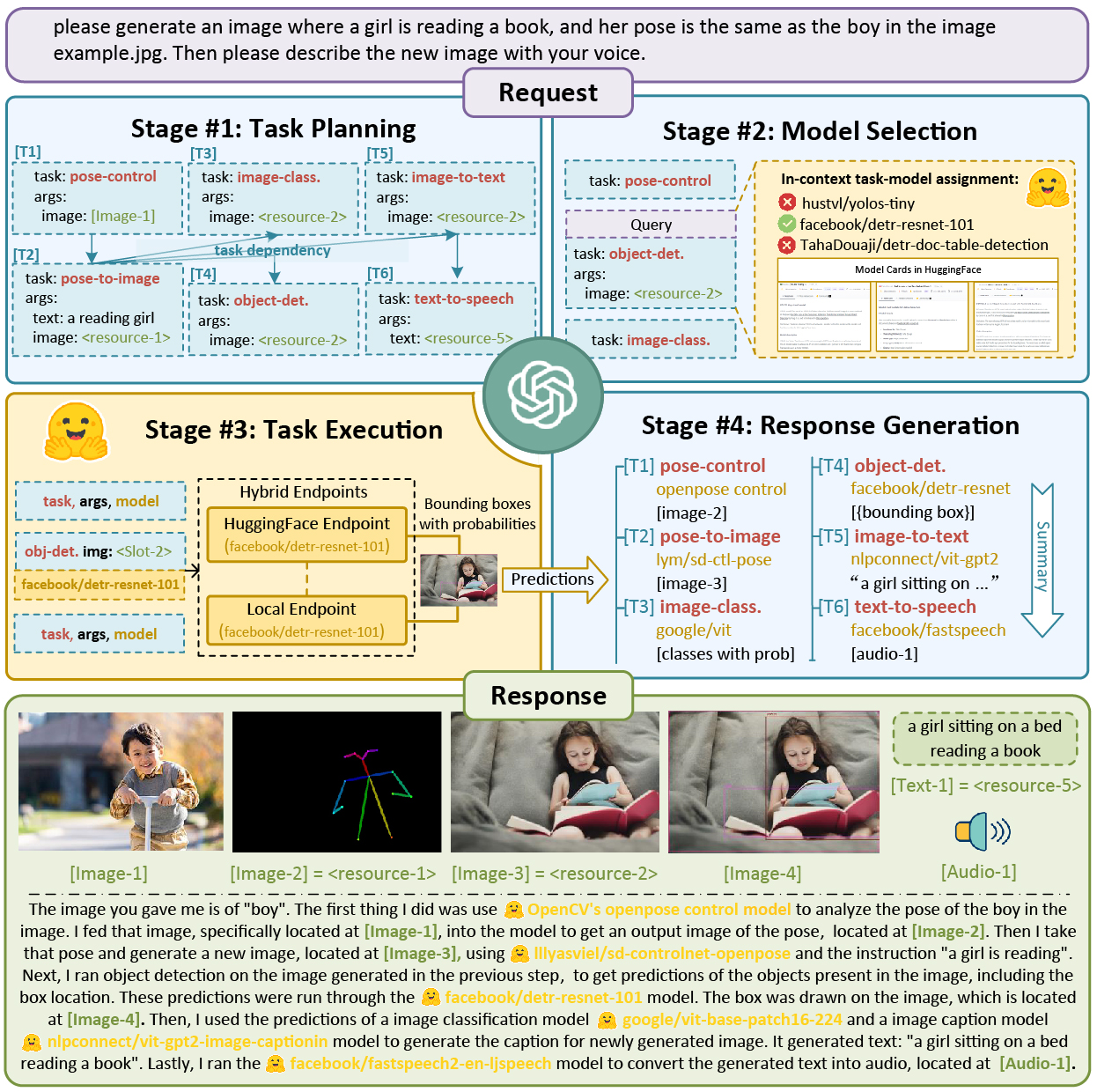

The architecture of HuggingGPT is made up of two main components. The first is the LLM, which acts as the controller: it plans out tasks, selects the model to handle each one, and generates the final response. The second is the Hugging Face platform, whose models carry out task execution.
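To make this division of labour concrete, here is a minimal Python sketch of the four stages (task planning, model selection, task execution and response generation). It is not Microsoft’s code: the function names, the model registry and the model ids are hypothetical, and the LLM and Hugging Face calls are replaced with simple stubs so the example runs offline.

```python
from dataclasses import dataclass


@dataclass
class Task:
    task_type: str   # e.g. "image-to-text"
    args: dict       # inputs for the task
    result: str = "" # filled in during execution


def plan_tasks(request: str) -> list[Task]:
    """Stage 1 (controller LLM): split a request into tasks.
    Stubbed with keyword matching instead of a real LLM call."""
    tasks = []
    if "describe" in request:
        tasks.append(Task("image-to-text", {"image": "example.jpg"}))
    if "read it aloud" in request:
        tasks.append(Task("text-to-speech", {"text": "<caption>"}))
    return tasks


# Stage 2 (controller LLM): pick a model per task. Hypothetical registry.
MODEL_REGISTRY = {
    "image-to-text": "hypothetical/captioning-model",
    "text-to-speech": "hypothetical/tts-model",
}


def select_model(task: Task) -> str:
    return MODEL_REGISTRY[task.task_type]


def execute_task(task: Task, model_id: str) -> str:
    """Stage 3 (Hugging Face side): run the chosen model. Stubbed."""
    return f"{model_id} ran {task.task_type}"


def generate_response(tasks: list[Task]) -> str:
    """Stage 4 (controller LLM): summarise results for the user. Stubbed."""
    return "; ".join(t.result for t in tasks)


def handle(request: str) -> str:
    tasks = plan_tasks(request)
    for task in tasks:
        task.result = execute_task(task, select_model(task))
    return generate_response(tasks)


print(handle("describe this photo and read it aloud"))
```

In a real system, stages 1, 2 and 4 would be prompts sent to the LLM, and only stage 3 would touch the specialised models.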

The standout feature of JARVIS is the idea behind it, which can be summed up as ‘language-as-an-interface’. By using language as a general interface and putting the LLM in the ‘brain’ position, many different specialised AI models can work together.
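One way to picture ‘language-as-an-interface’ is that each model is presented to the controller only as a short text description, and the LLM chooses among them by reading that text. The sketch below assumes a hypothetical catalogue with made-up model ids and download counts. Filtering candidates by task type and ranking them by downloads is an illustrative assumption, and the final choice, which a real system would leave to the LLM, is not shown.

```python
from dataclasses import dataclass


@dataclass
class ModelCard:
    model_id: str
    task: str
    description: str
    downloads: int


# Hypothetical catalogue: each model is described to the controller in plain text.
CATALOGUE = [
    ModelCard("hypothetical/pose-a", "pose-detection",
              "Detects human body keypoints in photos.", 5_000),
    ModelCard("hypothetical/caption-a", "image-to-text",
              "Writes one-sentence captions for images.", 12_000),
    ModelCard("hypothetical/caption-b", "image-to-text",
              "Generates detailed image descriptions.", 3_000),
]


def candidates(task: str, top_k: int = 2) -> list[ModelCard]:
    """Keep models for the task, most-downloaded first (an assumed heuristic)."""
    matching = [m for m in CATALOGUE if m.task == task]
    return sorted(matching, key=lambda m: m.downloads, reverse=True)[:top_k]


def selection_prompt(user_request: str, task: str) -> str:
    """Render the candidates as text: language is the only interface the LLM sees."""
    lines = [f"User request: {user_request}", f"Task: {task}", "Candidate models:"]
    for m in candidates(task):
        lines.append(f"- {m.model_id}: {m.description}")
    lines.append("Reply with the id of the best model.")
    return "\n".join(lines)


print(selection_prompt("Caption this photo", "image-to-text"))
```

Because every model is reduced to a description, adding a new model means adding a new entry to the catalogue rather than changing the controller.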

HuggingGPT/JARVIS Architecture

The researchers provided many examples to illustrate the potential use-cases of JARVIS. By giving a single prompt containing multiple instructions, HuggingGPT was able to call on a pose detection model, image generation model, image classification model, image captioning model, and a text-to-speech model.
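A single prompt with several instructions effectively becomes a small dependency graph: a caption has to exist before it can be read aloud. The sketch below uses Python’s standard `graphlib.TopologicalSorter` to run a hypothetical plan in dependency order. The task names, ids and dependency structure are assumptions loosely modelled on the example above, and `run_model` is a stub rather than a real model call.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for a multi-step request, written as task dicts with
# explicit dependencies ("dep") on earlier task ids.
PLAN = [
    {"id": 0, "task": "pose-detection", "dep": []},
    {"id": 1, "task": "pose-to-image", "dep": [0]},
    {"id": 2, "task": "image-classification", "dep": [1]},
    {"id": 3, "task": "image-to-text", "dep": [1]},
    {"id": 4, "task": "text-to-speech", "dep": [3]},
]


def run_model(task: str, inputs: list[str]) -> str:
    """Stand-in for calling a real model; records what it consumed."""
    return f"{task}({', '.join(inputs)})"


def execute(plan: list[dict]) -> dict[int, str]:
    by_id = {t["id"]: t for t in plan}
    # TopologicalSorter expects a mapping of node -> set of predecessors.
    graph = {t["id"]: set(t["dep"]) for t in plan}
    results: dict[int, str] = {}
    # static_order() yields each task only after all of its dependencies.
    for task_id in TopologicalSorter(graph).static_order():
        task = by_id[task_id]
        inputs = [results[d] for d in task["dep"]]
        results[task_id] = run_model(task["task"], inputs)
    return results


for task_id, output in sorted(execute(PLAN).items()):
    print(task_id, output)
```

Tasks 2 and 3 depend only on task 1, so a scheduler could run them in parallel; `TopologicalSorter` also offers `prepare()`, `get_ready()` and `done()` for that style of execution.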

Request flow in HuggingGPT/JARVIS

While the models JARVIS calls on are not novel and have been a mainstay of the open-source community for years, bringing them together is a novel approach to solving complex problems. Even though the example prompt involved multiple stages of execution, with a different task at each step, the architecture handled it without a hitch in the researchers’ demonstration.

Microsoft’s newfound attitude towards leveraging open-source research shouldn’t come as a surprise, especially considering the waves that LLaMA has been making over the past few weeks. Open source is the next big multiplier for AI, and it seems that Microsoft is on board with it.

Open source for AGI

While Microsoft is beholden to Sam Altman and OpenAI’s policy of closed AI research, it seems to be pursuing a different path towards AGI. The research paper carefully avoids this loaded term, but its abstract describes solving complicated AI tasks across different domains and modalities, the problem HuggingGPT tackles, as a “key step” towards “advanced artificial intelligence”.

For all its talk of creating AGI and of where humanity is on the “path to AGI”, OpenAI has become increasingly closed about its research. Many scientists and researchers have criticised this approach of treating AI as proprietary technology, while other organisations have built up a strong reputation in the AI community for open-sourcing their models.

Last month, the release of LLaMA sparked the open-source community into action by giving it a state-of-the-art LLM (with leaked weights). This has resulted in a spate of LLaMA-based projects being released into the world, a formula Microsoft seems eager to replicate.

Indeed, tapping into the open-source community’s vast library of algorithms might just be the path towards AGI. By bringing together various domain-specific AI systems, also termed ‘narrow AI’, it may be possible to move towards a type of artificial general intelligence known as self-organising complex adaptive systems.

In his musings on AGI, Ben Goertzel, the CEO of SingularityNET, put forward the idea of a ‘narrow AGI’, which sounds strikingly similar to Microsoft’s JARVIS. He stated,

“There is a path from today’s Narrow AIs to tomorrow’s AGIs that passes through intermediate systems that are best thought of as Narrow AGIs.”

These so-called intermediate systems are the precursors to SCADS, which are AI systems composed of smaller AI algorithms. The ‘intelligent’ part of a SCADS decides which algorithm performs which function, similar to ChatGPT’s role in HuggingGPT. Goertzel elaborates,

“A Narrow AGI for biomedical analytics might leverage a small army of Narrow AI tools carrying out specific intelligent functions—but it would figure out how to combine these on its own.”

According to Goertzel, combining these narrow AIs into a bigger system would create a SCADS AI, which, in turn, would pave the way for a human-like AGI. With HuggingGPT, researchers have begun making concrete progress towards AGI, far removed from OpenAI’s empty promises of an AGI future.