Thanks to self-supervised learning, few-shot, zero-shot, and fine-tuning techniques, language models are growing significantly larger with each passing day, calling for high-performance hardware, software, and algorithms to train them.

Taking a collaborative approach, Microsoft and NVIDIA have joined hands to train Megatron-Turing NLG (MT-NLG), one of the largest monolithic transformer-based language models, with 530 billion parameters. The duo claims state-of-the-art accuracy on natural language processing (NLP) tasks, achieved by adapting the model to downstream tasks via few-shot, zero-shot, and fine-tuning techniques.

In the research paper “Using DeepSpeed and Megatron to Train Megatron-Turing NLG 530B, A Large-Scale Generative Language Model,” researchers from NVIDIA and Microsoft discussed the challenges of training neural networks at scale. They presented the 3D parallelism strategies and hardware infrastructure that enabled efficient training of MT-NLG.
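For readers unfamiliar with the term, 3D parallelism generally means combining tensor (intra-layer), pipeline (inter-layer), and data parallelism, so that the total GPU count is the product of the three degrees. The sketch below illustrates only that bookkeeping. The `ParallelLayout` class, the `rank_to_coords` helper, the rank ordering, and the degrees in the usage lines are hypothetical choices made for illustration; they are not taken from this article and are not the DeepSpeed or Megatron API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelLayout:
    """Hypothetical description of a 3D-parallel training layout."""
    tensor: int    # GPUs that split each layer's weights
    pipeline: int  # stages that split the stack of layers
    data: int      # full model replicas fed different batches

    @property
    def gpus_per_replica(self) -> int:
        # One model replica spans every tensor shard of every pipeline stage.
        return self.tensor * self.pipeline

    @property
    def total_gpus(self) -> int:
        return self.gpus_per_replica * self.data


def rank_to_coords(rank: int, layout: ParallelLayout) -> tuple[int, int, int]:
    """Map a flat GPU rank to (data, pipeline, tensor) indices.

    Assumes tensor-parallel ranks vary fastest, then pipeline, then data.
    This ordering is an illustrative assumption, not a library convention.
    """
    if not 0 <= rank < layout.total_gpus:
        raise ValueError(f"rank {rank} is outside 0..{layout.total_gpus - 1}")
    t = rank % layout.tensor
    p = (rank // layout.tensor) % layout.pipeline
    d = rank // layout.gpus_per_replica
    return d, p, t


# Illustrative degrees only: 8-way tensor, 35-way pipeline, 2 replicas.
layout = ParallelLayout(tensor=8, pipeline=35, data=2)
print(layout.gpus_per_replica, layout.total_gpus)
print(rank_to_coords(280, layout))
```

Keeping the tensor index fastest-varying reflects a common design preference: tensor parallelism is the most communication-heavy of the three, so its ranks are usually placed on GPUs within the same server.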

“Large language model training is challenging to stabilise, and experimentation can be costly, therefore, we documented our training configurations and datasets extensively to facilitate future research,” shared the researchers.

They also analysed the social biases exhibited by MT-NLG and examined various factors that can affect in-context learning, drawing attention to certain limitations of the current generation of large language models. “We believe that our results and findings can help, shape, and facilitate future research in foundational, large-scale pretraining,” added the researchers.

Unleashing the power of large-scale language models

In October last year, the duo introduced MT-NLG. The SOTA language model is trained using Microsoft’s DeepSpeed library and NVIDIA’s Megatron framework. It has about 3x the parameters of GPT-3 (175 billion parameters), the largest existing model of its kind, and far more than Turing NLG (17 billion parameters), Megatron-LM (8 billion parameters), and EleutherAI’s more recent GPT-NeoX (20 billion parameters), which was trained on CoreWeave GPUs.
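The size comparison can be checked with a few lines of arithmetic. The snippet below is a minimal sketch that uses only the parameter counts quoted in this article (in billions); the variable and function names are made up for illustration.

```python
# Parameter counts in billions, as quoted in the article.
MT_NLG_PARAMS_B = 530
OTHER_MODELS_B = {
    "GPT-3": 175,
    "Turing NLG": 17,
    "Megatron-LM": 8,
    "GPT-NeoX": 20,
}


def size_ratios(reference_b: float, others_b: dict[str, float]) -> dict[str, float]:
    """Return how many times larger the reference model is than each other model."""
    return {name: reference_b / size for name, size in others_b.items()}


ratios = size_ratios(MT_NLG_PARAMS_B, OTHER_MODELS_B)
for name, ratio in sorted(ratios.items(), key=lambda item: item[1]):
    print(f"MT-NLG is about {ratio:.1f}x the size of {name}")
```

Only the GPT-3 ratio comes out close to 3x; the other models are smaller by a much wider margin.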

As part of Microsoft’s AI at Scale effort, the DeepSpeed team has investigated model applications and optimisations for mixture of experts (MoE) models. These models are said to reduce the cost of training and inference for large models while allowing the next generation of models to be trained and served on today’s technology.

In comparison, Google’s Switch Transformer (1.6 trillion parameters) and China’s Wu Dao 2.0 (1.75 trillion parameters) are the largest transformer language models in the space. However, when it comes to large-scale language models and their use cases, Microsoft has been upping its game. It has partnered with OpenAI, acquiring the exclusive right to use its GPT-3 language models for commercial use cases.

Microsoft-owned GitHub released GitHub Copilot last year. It is powered by Codex, an AI system created by OpenAI that has been trained on a selection of English-language text and source code from publicly available sources, including code in public repositories on GitHub.

There is more

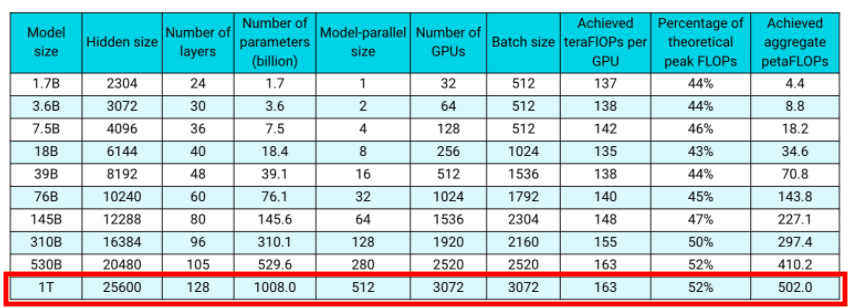

MT-NLG with 530 billion parameters is not the largest language model developed by NVIDIA and Microsoft. Last year, Microsoft announced a bigger and more powerful model with one trillion (1T) parameters. This 1T model posts the highest numbers for every performance figure, including teraFLOPs achieved, batch size, and number of GPUs.

This brings us to the question: if the language model with one trillion parameters is bigger by every measure, how can MT-NLG with 530 billion parameters be the biggest?

To this, NVIDIA said that the one-trillion-parameter model was never ‘trained to convergence’, a term for a model that has been fully trained and can be used for inference, the stage where predictions are made. Instead, this particular model went through only a limited number of passes over the training data, also known as epochs, which is not enough to reach convergence.

MT-NLG with 530 billion parameters is still a research project between NVIDIA and Microsoft and has yet to see the light of day as a commercial product. Check out NVIDIA’s catalogue page for other popular models that have been made available. It includes transformer-based language models and other neural networks for classification, language translation, text-to-speech, object detection, recommender engines, sentiment analysis, and more.