
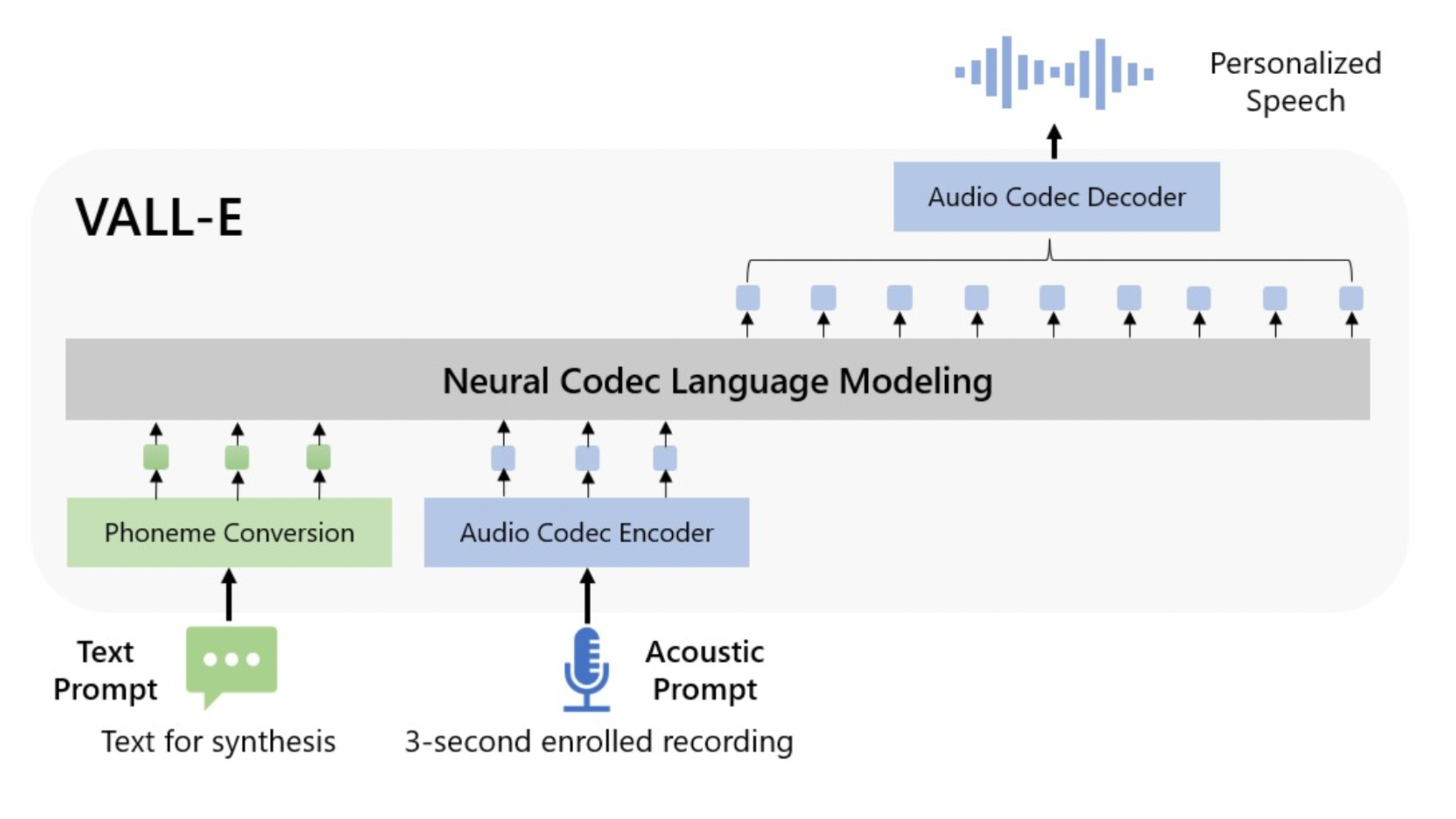

Microsoft recently released VALL-E, a new language model approach for text-to-speech synthesis (TTS) that uses audio codec codes as intermediate representations. It demonstrated in-context learning capabilities in zero-shot scenarios after being pre-trained on 60,000 hours of English speech data.

With just a three-second enrolled recording of an unseen speaker serving as an acoustic prompt, VALL-E can create high-quality personalised speech. It supports in-context learning and prompt-based zero-shot TTS without additional structural engineering, pre-designed acoustic features or fine-tuning. Microsoft has leveraged a large amount of semi-supervised data to build a TTS system that generalises across speakers, which suggests that scaling up semi-supervised data for TTS has so far been underutilised.

Read the paper here.

VALL-E can generate varied outputs from the same input text while preserving both the speaker’s emotion and the acoustic environment of the prompt. In the zero-shot scenario, prompting alone lets VALL-E synthesise natural speech with high speaker similarity. According to the evaluation results, VALL-E significantly outperforms the most advanced existing zero-shot TTS system on LibriSpeech and VCTK, achieving new state-of-the-art zero-shot TTS results on both datasets.

It is interesting to note that people who have lost their voice could ‘talk’ again through this text-to-speech method if they have earlier recordings of themselves. Two years ago, Stanford University professor Maneesh Agrawala also told AIM that his team was working on something similar: they planned to record a patient’s voice before surgery and then use that recording to convert the patient’s electrolarynx voice back into their pre-surgery voice.

Features of VALL-E:

- Synthesis Diversity: Because VALL-E generates discrete tokens with a sampling-based method, its output varies for the same input text. By using different random seeds, it can synthesise different personalised speech samples.

- Acoustic Environment Maintenance: VALL-E can generate personalised speech while retaining the acoustic environment of the speaker prompt. It is trained on a large-scale dataset with more acoustic variation than the data used to train the baseline. The audio prompts and transcriptions for these samples were taken from the Fisher dataset.

- Speaker’s Emotion Maintenance: Using audio prompts sampled from the Emotional Voices Database, VALL-E can build personalised speech that preserves the emotional tone of the speaker prompt. Traditional approaches train a model on a supervised emotional TTS dataset, in which each utterance is paired with a transcription and an emotion label. VALL-E, by contrast, preserves the emotion of the prompt in a zero-shot setting.
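The synthesis-diversity point comes down to how tokens are decoded. The toy Python sketch below is not VALL-E’s code: `toy_next_token_logits` is a hypothetical stand-in for a trained model over an assumed 8-entry codebook, and the prompt tokens are made up. It only illustrates the general idea that sampling from a predicted distribution with different random seeds yields different token sequences from the same prompt, whereas greedy decoding always returns the same one.

```python
import math
import random

def toy_next_token_logits(prefix, vocab_size=8):
    """Hypothetical stand-in for a trained model: deterministic logits
    over a small codebook that depend only on the last token."""
    last = prefix[-1] if prefix else 0
    return [2.0 * math.cos(0.7 * (last + 1) * (i + 1)) for i in range(vocab_size)]

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities (temperature-scaled, numerically stable)."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_tokens(prompt, length, seed, temperature=1.0):
    """Sampling-based decoding: draw each next token from the distribution.
    The seed fixes the random generator, so one seed gives one output."""
    rng = random.Random(seed)
    tokens = list(prompt)
    for _ in range(length):
        probs = softmax(toy_next_token_logits(tokens), temperature)
        tokens.append(rng.choices(range(len(probs)), weights=probs, k=1)[0])
    return tokens[len(prompt):]

def greedy_tokens(prompt, length):
    """Greedy decoding: always pick the most likely token, so no diversity."""
    tokens = list(prompt)
    for _ in range(length):
        logits = toy_next_token_logits(tokens)
        tokens.append(max(range(len(logits)), key=logits.__getitem__))
    return tokens[len(prompt):]

prompt = [3, 1, 4]  # hypothetical "acoustic prompt" tokens
for seed in (0, 1, 2):
    print("seed", seed, sample_tokens(prompt, 10, seed))
print("greedy", greedy_tokens(prompt, 10))
```

Fixing the seed makes a sampled output reproducible, while changing it explores other plausible continuations of the same prompt, which is the behaviour the feature list describes.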

VALL-E still has shortcomings to overcome, including synthesis robustness, data coverage and model structure.

Last year, the Microsoft-backed AI research lab OpenAI released Point-E, a method for generating 3D point clouds from complex text prompts. Point-E aims to do for 3D generation what DALL-E did for text-to-image generation.