In 2020, an estimated 1.7 MB of data was created every second for every person on Earth. This seems like an early Christmas for data scientists because there are so many possibilities and concepts to investigate, verify, discover, and model. But if we want such models to address actual business issues and real user queries, we need to take care of the following:

- Gathering and cleaning up data;

- Logging model training runs for experiment tracking and versioning;

- Establishing pipelines for deploying and monitoring the models that reach production.

We need a way to scale ML operations to meet customers' needs. Similar problems arose in the past when traditional software systems had to be scaled up to accommodate more users. The answer provided by DevOps was a set of practices for building, testing, deploying, and managing large software systems. That is how MLOps came into existence. It shares its core idea with DevOps but is implemented differently, and it was born at the junction of DevOps, data engineering, and machine learning.

How does MLOps help customer insights?

Businesses are using machine learning to rationalise their processes, while MLOps accelerates delivery and makes it feasible to ship ML products quickly, with teams cooperating in real time. Continuous, automated monitoring of ML models through MLOps yields high-impact business insights and opens up new opportunities to improve customer experience. The following are some of the ways in which MLOps can be deployed for better organisational functionality:

- Business analysis and customer service: ML models use data analytics to deliver insights that refine decision-making, improve services and goods, and predict future trends and the demand for a given product. This is made possible by the amount of data that is collected every day.

- Internal cooperation and communication: Like DevOps, MLOps places a high importance on collaboration and communication, so it encourages internal teamwork for the best output. Keeping key stakeholders updated while outlining the organisation’s goals and expectations gives more clarity about the future of the business.

- Customer satisfaction: To better understand customers and their perspectives, and so enhance customer experience, businesses can concentrate on studying customer feedback. Furthermore, ML models can help forecast consumer requirements and expectations, giving the company a competitive edge in the market.

- Bias: All stakeholders can be shielded from unintentional bias through a decision-making process that is informed by facts and perceptive values. Data-driven evaluation and reporting are the cornerstones of all successful organisational outcomes.

MLOps helping organisations with AI transformation

MLOps helps enterprises rest easy knowing that ML will be a transformative technology. Through faster, more effective data and analytics operations and improved accuracy of findings for data-dependent activities like business intelligence development, predictive analytics, and process automation, MLOps helps ensure that investments in machine learning tools yield demonstrable results.

Organisations will discover that their ROI is accelerated when they adopt MLOps as the framework for installing, integrating, and employing ML products. Operational economies and competitive advantages linked to better, more prompt decision-making based on more accurate BI will enable such results to be realised. Additionally, as new ML tools are added, companies will be able to incorporate them more quickly as a result of the lessons learnt through using and experimenting with new tools and procedures as part of an MLOps plan.

Challenges

ML projects come with their own set of challenges and complexities once they are implemented, often stemming from:

- Ever-changing data.

- A model that was designed and tuned for one set of parameters and must now handle different ones.

- Code that needs to adapt to the latest technological milieu.

It is vital that ML is applied to the right business problems. The predictions offered are not always 100% accurate, so organisations must be prepared for every possibility and accept that not all experiments will succeed. ML may also not be the solution to every business problem, so a deliberate decision should be made on whether ML is indeed the right choice for a given task.

Scalability

Data pipelines for machine learning must be adaptable enough to manage evolving data needs. Machine learning pipelines can be established quickly on a cloud data platform designed for data science operations, and they can then be modified as needed to keep producing high-quality outputs. An overall MLOps framework for what has been termed "reasonable scale" is made up of three main components: the pre-processing, training, and evaluation pipeline; the model deployment pipeline; and the configuration deployment pipeline.

- Pre-processing, training, and evaluation pipeline: This pipeline is the actual foundation of the MLOps architecture. Its output is a trained model that is made available for a human decision, assisted by automated evaluation, on whether it should be used. This part of the framework is fully dynamic and flexible enough to handle any model that must be processed through it. Thanks to the configuration deployment pipeline, it can choose, without any hard-coding, which operations to carry out for data processing, which parameters to use for training the model, and how to evaluate it. Avoiding hard-coding makes the process repeatable, minimises resource duplication, and ultimately streamlines the system as a whole. A data scientist can specify everything that goes into this pipeline in the ‘model’ repository, which lets them govern what happens inside the pipeline without having to worry about coordinating all the moving components.

- Model deployment pipeline: The model deployment pipeline is separated from the previous pipeline so that older models can be re-deployed without retraining. This decoupling also draws a clear line between a model’s assessment and its deployment, which makes future approval processes easier to implement. Deployment is only the start, not the end: once a model is deployed, the end user can use it to obtain predictions on real-time data.

- Configuration deployment pipeline: Empowering those who develop the models, without overloading them with the management of infrastructure and pipelines, is a fundamental principle of MLOps. This approach gives the model’s data scientists complete control over the scripts that are executed to prepare the data and to assess the trained model. The resource needs of the various pipeline stages can also be set in the configuration file. This means that a data scientist who changes the way data is processed for training, and as a result needs a compute system with more resources, can set that up without consulting an operations team.
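The idea of a pipeline that reads its steps, training parameters, evaluation metric, and resource requests from a configuration file can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework's actual implementation: the config keys, the `PREPROCESSORS` and `EVALUATORS` registries, `train_slope`, and `run_pipeline` are all hypothetical names invented for this example, and the "model" is a single weight fitted by gradient descent.

```python
import json
from statistics import mean

# Hypothetical registries: names a config file may reference, mapped to code.
PREPROCESSORS = {
    "drop_missing": lambda rows: [r for r in rows if None not in (r["x"], r["y"])],
    "clip_x": lambda rows: [{**r, "x": max(0.0, min(r["x"], 10.0))} for r in rows],
}
EVALUATORS = {
    "mae": lambda w, rows: mean(abs(w * r["x"] - r["y"]) for r in rows),
}


def train_slope(rows, learning_rate, epochs):
    """Fit y ~ w * x by gradient descent on mean squared error."""
    w = 0.0
    for _ in range(epochs):
        gradient = mean(2 * (w * r["x"] - r["y"]) * r["x"] for r in rows)
        w -= learning_rate * gradient
    return w


def run_pipeline(config, rows):
    """Pre-process, train, and evaluate using only what the config names."""
    for step in config["preprocessing"]:
        rows = PREPROCESSORS[step](rows)
    w = train_slope(rows, **config["training"])
    metric = config["evaluation"]["metric"]
    # For brevity the score is computed on the training rows; a real
    # pipeline would evaluate on held-out data.
    score = EVALUATORS[metric](w, rows)
    return {
        "weight": w,
        "metric": metric,
        "score": score,
        # Automated evaluation only assists; a human still decides on use.
        "passes_threshold": score <= config["evaluation"]["max_score"],
        # Passed through unchanged for whatever system allocates compute.
        "resources": config["resources"],
    }


# Assumed config layout, as a data scientist might keep it in the model repository.
CONFIG = json.loads("""
{
  "preprocessing": ["drop_missing", "clip_x"],
  "training": {"learning_rate": 0.05, "epochs": 200},
  "evaluation": {"metric": "mae", "max_score": 0.1},
  "resources": {"preprocessing": {"cpus": 1}, "training": {"cpus": 4}}
}
""")

ROWS = [
    {"x": 1.0, "y": 2.0},
    {"x": 2.0, "y": 4.0},
    {"x": None, "y": 1.0},
    {"x": 3.0, "y": 6.0},
]

result = run_pipeline(CONFIG, ROWS)
print(result["metric"], result["passes_threshold"], result["resources"]["training"])
```

Changing the pre-processing steps, the learning rate, or the CPUs requested for training means editing the configuration only; `run_pipeline` itself stays untouched, which is the repeatability benefit described above.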

Evaluate computational constraints

Lack of computational power is one of the main obstacles to using MLOps. Running machine learning algorithms requires a lot of resources, and on-premises systems frequently struggle to meet the intense demands of ML while still serving the computational needs of other parts of the business. By contrast, a cloud data platform’s computational capacity scales elastically and offers dedicated resources for every machine learning operation, from data preparation to model training and model inference. Cloud-native solutions will be necessary for the majority of MLOps endeavours.

Absence of a unified data store

For machine learning algorithms to produce accurate results, vast volumes of data must be fed into the system. Siloed data kept in diverse formats causes problems for many organisations. You will therefore need a cloud data platform that lets you store any data, regardless of structure, in a single location, with reliable data security and governance.

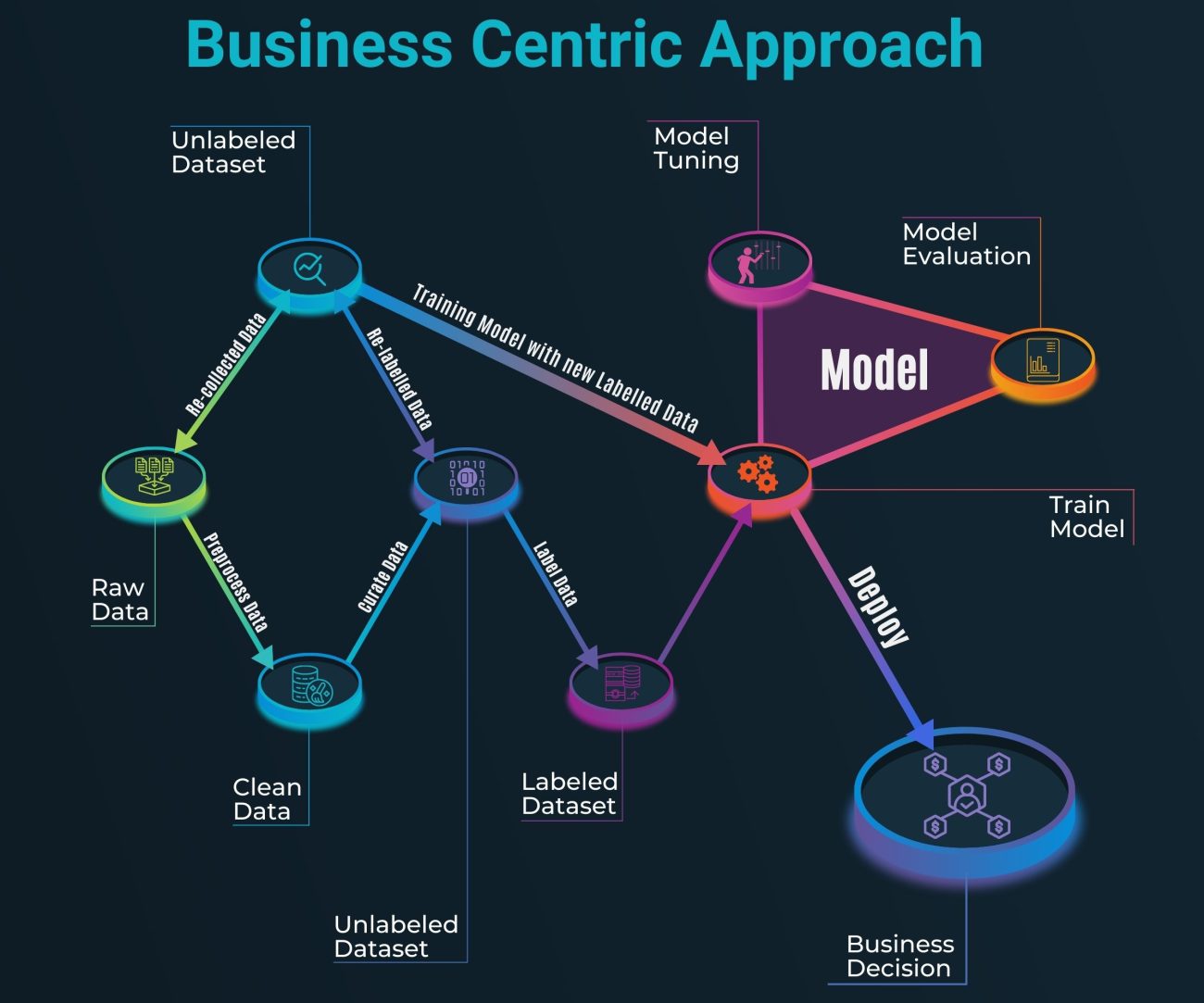

Evolution to a business-centric approach (model-centric + data-centric) to identify business challenges

Data and model are the two fundamental elements of every AI system, and they work together to deliver the desired outcomes.

The model-centric approach entails conducting experimental research to enhance the performance of the ML model. In order to do this, the best model architecture and training method must be chosen from a wide variety of options.

With this strategy, you keep the data constant while improving the code or model design.

The main goal of this strategy is to work on code.

In an era where data is at the centre of every decision-making process, a data-centric organisation can better align its strategy with the interests of its stakeholders by harnessing the information generated by its operations. More accurate, organised, and transparent data can make an organisation work more efficiently. The data-centric approach entails methodically modifying and improving datasets to raise the accuracy of your ML applications.

The main goal of this strategy is to work with data.
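The difference between the two strategies can be made concrete with a toy example. The sketch below is an illustration under assumptions, not a benchmark: the dataset, the corrupted label, the two candidate models, and the `remove_ratio_outliers` cleaning rule are all invented for this example. The model-centric step keeps the data fixed and tries different models; the data-centric step keeps the model fixed and repairs the data.

```python
from statistics import mean, median

# Toy training set where y = 2x, except one corrupted label at x = 5.
TRAIN = [(1, 2), (2, 4), (3, 6), (4, 8), (5, 30)]
# Clean held-out points used to compare the approaches.
HOLDOUT = [(6, 12), (7, 14)]


def fit_through_origin(points):
    """Least-squares fit of y = w * x."""
    w = sum(x * y for x, y in points) / sum(x * x for x, _ in points)
    return lambda x: w * x


def fit_with_intercept(points):
    """Least-squares fit of y = a * x + b."""
    x_bar = mean(x for x, _ in points)
    y_bar = mean(y for _, y in points)
    a = (sum((x - x_bar) * (y - y_bar) for x, y in points)
         / sum((x - x_bar) ** 2 for x, _ in points))
    b = y_bar - a * x_bar
    return lambda x: a * x + b


def holdout_mae(model, points):
    """Mean absolute error of a fitted model on the given points."""
    return mean(abs(model(x) - y) for x, y in points)


def remove_ratio_outliers(points, tolerance=1.0):
    """Hypothetical cleaning rule: drop points whose y/x ratio is far from the median."""
    typical = median(y / x for x, y in points)
    return [(x, y) for x, y in points if abs(y / x - typical) <= tolerance]


# Model-centric: data held constant, model design varied.
model_centric_errors = {
    "through_origin": holdout_mae(fit_through_origin(TRAIN), HOLDOUT),
    "with_intercept": holdout_mae(fit_with_intercept(TRAIN), HOLDOUT),
}

# Data-centric: model held constant, dataset improved.
cleaned = remove_ratio_outliers(TRAIN)
data_centric_error = holdout_mae(fit_through_origin(cleaned), HOLDOUT)

print(model_centric_errors)
print(data_centric_error)
```

In this contrived case, swapping the model cannot compensate for the bad label, while cleaning the data lets even the simplest model fit the held-out points. Real projects rarely separate this cleanly, which is why the two approaches are usually combined.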

Both of these approaches are required to face business challenges that are increasing day by day. What is needed is a business-centric approach that combines the model-centric and data-centric approaches.

Business centric approach = Model Centric approach + Data Centric approach

When using ML in the real world, integrating data-centric decisions into the mix is ultimately the optimal course of action, even though being model-centric has its advantages. Adopting MLOps follows logically from this, and most businesses now find that investing in it is essential. MLOps makes it possible to put models into production quickly and keep them dependable. When it comes to huge and complicated data sets, models, pipelines, and infrastructure, MLOps helps enterprises of all sizes streamline and automate their activities across the data science life cycle. It also helps them address scalability concerns. For a company that is serious about repeatable machine learning in production and long-term maintainability, the time investment in setting up MLOps might be significant, but the advantages are equally significant.

As machine learning grows as a field, new solutions are becoming available that make it simple to set up MLOps for your company. MLOps is likely to become as popular and significant as DevOps. As Chip Huyen tweeted, “Machine learning engineering is 10% machine learning and 90% engineering.”

This article is written by a member of the AIM Leaders Council. AIM Leaders Council is an invitation-only forum of senior executives in the Data Science and Analytics industry.