Machine learning problems deal with a variety of new data if it is stretched to a broader context. For example, consider the case of image recognition for lions and cats. The data sometimes not only spans images of these animals, but also may have animals with similar resemblance. This will present problems in training algorithms. They may recognise the animals wrongly and the learning accuracy goes down significantly.

In order to overcome this, a class of algorithms called unsupervised learning algorithms are used. These algorithms work with data that are relatively new and unknown data in order to learn more. This class is again subdivided into two categories, clustering and association (also called Apriori). This article discusses clustering algorithms and its types frequently used in unsupervised machine learning.

What Is Clustering?

Clustering is the process of organising objects (data) into groups based on similar features within the members (data points) of the group. To understand this, consider the example mentioned earlier. The lions can be segregated into groups based on the species (Indian lion, Barbary lion, Congo lion and so on). The attributes (species, in the above case) ascertain the lion type.

Similarly, this is applicable to other ML problems which show similarities in data. This is the goal of unsupervised learning. Grouping a set of new data based on similarities amongst them depends on the requirements specified by the user for ML.

Types Of Clustering Algorithms

K-means Algorithm

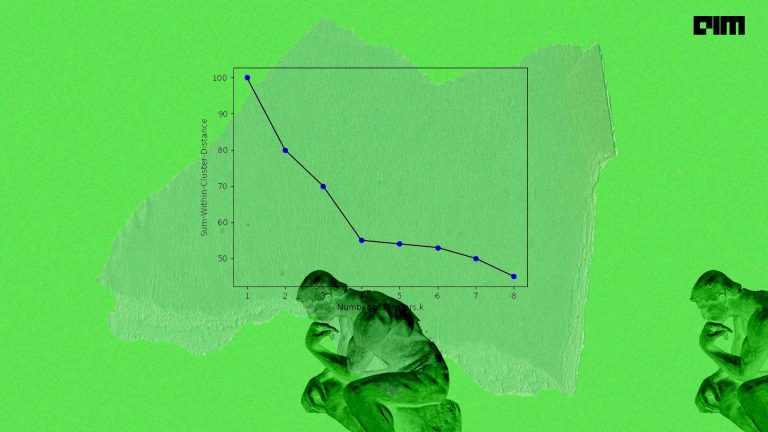

The simplest among unsupervised learning algorithms. This works on the principle of k-means clustering. This actually means that the clustered groups (clusters) for a given set of data are represented by a variable ‘k’. For each cluster, a centroid is defined. The centroid is a data point present at the centre of each cluster (considering Euclidean distance). The trick is to define the centroids far away from each other so that the variation is less. After this, each data point in the cluster is assigned to the nearest centroid.

All data points are now assigned. The k centroids (centroids of the cluster) are again calculated as barycenters of the clusters. These new centroids need to assign to each data point in every data point in clusters as mentioned earlier. This process is repeated until the centroids no longer move from their positions. This provides the configuration for minimising the measure using an objective function (should be defined earlier).

K-means clustering algorithm has found to be very useful in grouping new data. Some practical applications which use k-means clustering are sensor measurements, activity monitoring in a manufacturing process, audio detection and image segmentation.

Fuzzy C-means (FCM) Algorithm

In this algorithm, each data point in a cluster has the probability of belonging to the other. Therefore, the data point does not have an absolute membership over a particular cluster. This is the reason the algorithm is named ‘fuzzy’. The centroids are found out based on the fuzzy coefficient which assesses the strength of membership of data in a cluster. Fuzzy c-means clustering follows a similar approach to that of k-means except that it differs in the calculation of fuzzy coefficients and gives out a probability distribution result.

In addition, the accuracy of the training data can be tweaked to compensate the loss in training. It works on the objective function given below.

J = N(∑)i=1 C(∑)j=1ẟij ||xi – cj||2

Where N is number of data points, C is number of clusters, cj is cluster centre vector and ẟij defines the membership of ith data point xi of cluster j, ||xi – cj|| measures similarity.

This equation is even used in k-means but does not always act as the standard. The objective function equation is derived based on the context of training requirements.

Expectation-Maximisation (EM) Algorithm

This algorithm is based on the Gaussian distribution in statistics. It considers a collection of Gaussian distributions for the data set in an ML problem. So, this means the data is pictured as a model to solve the problem. Generally, this algorithm chooses a Gaussian distribution component for a data cluster and assigns a probability value. After this, a point sample is calculated based on that Gaussian component. This is done by using the expectation and maximisation equations given below.

Expectation (E) → P(Ꞷj| xk,ƛt) = P(xk | Ꞷi, μi(t), 2)pi(t) / ∑k P(xk | Ꞷi, μi(t)2)pj(t)

Maximisation (M) → μi(t+1) = ∑k P(Ꞷj| xk,ƛt) xk / ∑k P(Ꞷj| xk,ƛt)

Hierarchical Clustering Algorithms

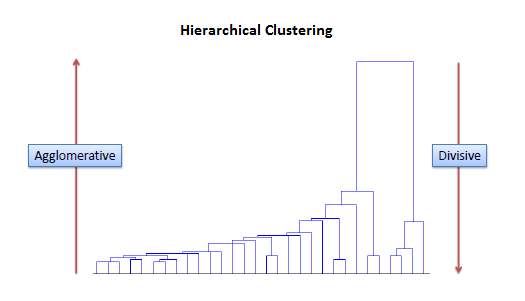

Last but not the least are the hierarchical clustering algorithms. These algorithms have clusters sorted in an order based on the hierarchy in data similarity observations. Hierarchical clustering is categorised into two types, divisive(top-down) clustering and agglomerative (bottom-up) clustering. The former type groups all data points/observations in a single cluster and divides it into two clusters on least similarity between them, while the latter type assigns every data point as a cluster itself and aggregates the most similar clusters. This basically means bringing the right data together.

Most of the hierarchical algorithms such as single linkage, complete linkage, median linkage, Ward’s method, among others, follow the agglomerative approach. (More information on hierarchical clustering can be found here).

Comments

Clustering algorithms described in this article works with multivariate data(except for k-means). Therefore, it is suggested that the ML problem, as well as data, are defined correctly before choosing an algorithm. All of these algorithms have their respective advantages in terms of accuracy and performance. Ultimately, data is what makes the algorithms perform better.