The performance of deep learning applications has improved alongside the rise of automation techniques such as Neural Architecture Search (NAS). Today, hierarchical feature extractors are learned end-to-end from data rather than designed by hand.

NAS is the process of automating architecture engineering. It can be seen as a subfield of AutoML and has significant overlap with hyperparameter optimization and meta-learning.

Researchers exploit the transferability of architectures so that the search can be performed on data and labels different from those of the target task. However, automated search and traditional manual design share one requirement: both need images and their (semantic) labels to arrive at an architecture. In other words, NAS flourishes in the supervised learning regime, whereas real-world data remains largely unlabeled.

To probe the unsupervised angle in NAS methods, researchers from Johns Hopkins University and Facebook AI investigated how much labels matter for the quality of the architectures that NAS finds.

They asked: can we find high-quality neural architectures using only images, without human-annotated labels?

To formalize this question, the authors introduced Unsupervised Neural Architecture Search (UnNAS).

Overview of Unsupervised NAS

Given a predefined search space, a typical NAS algorithm explores the space and estimates the performance of architectures sampled from it.

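UnNAS follows the same principle as these NAS algorithms, except that it does not require human-annotated labels to estimate the performance of the architectures. Instead, it relies on self-supervised pretext tasks such as image rotation prediction, colorization, and solving jigsaw puzzles.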
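To see how an unsupervised objective can stand in for labels, consider rotation prediction, one of the pretext tasks used in UnNAS: each image is rotated by 0, 90, 180, or 270 degrees, and the network must predict which rotation was applied, so the training targets come from the images themselves. The sketch below is a minimal NumPy illustration, not the authors' code; the helper name `make_rotation_batch` is hypothetical, and it only shows how such a labeled batch is built from unlabeled, square images.

```python
import numpy as np


def make_rotation_batch(images: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Build a self-supervised rotation-prediction batch from unlabeled images.

    Hypothetical helper for illustration. `images` has shape (N, H, W, C)
    with H == W, so every rotated copy keeps the same shape.
    Returns (rotated_images, rotation_labels); label k means the image was
    rotated by k * 90 degrees (np.rot90 rotates from axis 0 toward axis 1).
    """
    rotated, labels = [], []
    for img in images:
        for k in range(4):  # 0, 90, 180, 270 degrees
            rotated.append(np.rot90(img, k=k, axes=(0, 1)))
            labels.append(k)
    return np.stack(rotated), np.array(labels)


# Usage: 8 unlabeled 32x32 RGB images become 32 "labeled" training examples.
unlabeled = np.random.rand(8, 32, 32, 3)
x, y = make_rotation_batch(unlabeled)
print(x.shape, y[:8])  # (32, 32, 32, 3) [0 1 2 3 0 1 2 3]
```

No human annotation is involved at any point, which is what lets an architecture search run on images alone.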

In traditional unsupervised learning, the model learns the weights of a fixed architecture without labels during training. The quality of those weights is then evaluated by training a classifier (e.g., by fine-tuning the weights) with supervision from the target dataset.

UnNAS follows an analogous procedure. In the search phase, the algorithm searches for an architecture without using any labels; the quality of the architecture it finds is then measured by training that architecture's weights with supervision from the target dataset.
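The two-phase protocol can be sketched as follows. This is a minimal illustration under loud assumptions: the search space is a toy list of hypothetical architecture names, the search is plain random sampling rather than a real NAS algorithm, and the score dictionaries are made-up stand-ins for actual training runs (pretext-task training for the search, supervised training on the target dataset for the evaluation). The point is structural: the search phase only ever calls a label-free scoring function, and labels enter only in the final evaluation.

```python
import random
from typing import Callable, Sequence

Arch = str  # hypothetical: an architecture identified by its name


def search(space: Sequence[Arch], score: Callable[[Arch], float],
           num_samples: int, seed: int = 0) -> Arch:
    """Random-search NAS: sample architectures and keep the best-scoring one.

    In the unsupervised setting, `score` must not use human-annotated labels.
    """
    rng = random.Random(seed)
    candidates = rng.sample(list(space), k=min(num_samples, len(space)))
    return max(candidates, key=score)


def unnas_pipeline(space, proxy_score, target_accuracy, num_samples=10):
    # Phase 1 (search): label-free score, e.g. accuracy on a pretext task.
    best = search(space, proxy_score, num_samples)
    # Phase 2 (evaluation): train the chosen architecture with target labels.
    return best, target_accuracy(best)


# Made-up toy scores standing in for real training runs (not paper results).
pretext_acc = {"arch_a": 0.61, "arch_b": 0.74, "arch_c": 0.68}
target_acc = {"arch_a": 0.70, "arch_b": 0.79, "arch_c": 0.75}

best, acc = unnas_pipeline(list(pretext_acc), pretext_acc.get, target_acc.get)
print(best, acc)  # arch_b 0.79
```

Swapping `proxy_score` for a supervised validation accuracy turns the same pipeline back into ordinary NAS, which is exactly the comparison UnNAS sets up.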

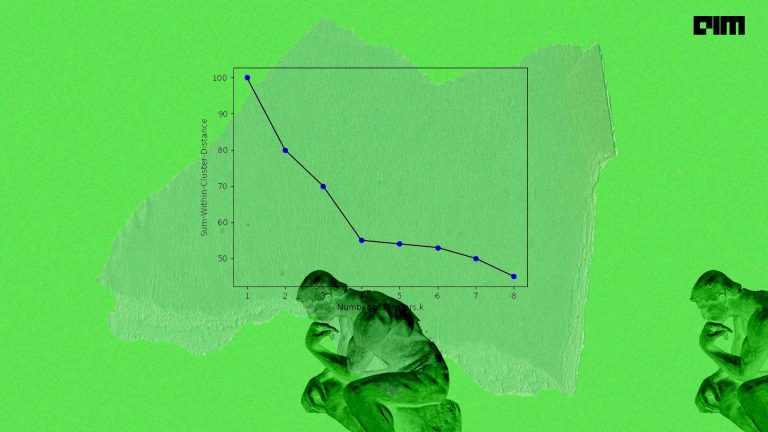

To demonstrate the performance of UnNAS, the researchers conducted two kinds of experiments:

- In sample-based experiments, they trained a large number (500) of diverse architectures with either supervised or unsupervised objectives and compared how the architectures ranked under each objective.

- In search-based experiments, they ran a well-established NAS algorithm (DARTS) with either a supervised or an unsupervised objective.
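DARTS makes the search differentiable by relaxing the discrete choice of operation on each edge into a softmax-weighted mixture of all candidate operations; the mixing weights (architecture parameters α) are learned by gradient descent, and after the search each edge keeps its strongest non-zero operation. The NumPy sketch below shows only this forward mixture on a single edge, with three toy operations chosen for illustration (the real DARTS space uses convolutions, pooling, skip connections, and a zero op), and it omits the bilevel optimization entirely. In UnNAS, the loss that updates α would come from a pretext task rather than from labels.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()


# Toy candidate operations for one edge (illustrative, not the DARTS op set).
OPS = {
    "identity": lambda x: x,
    "zero": lambda x: np.zeros_like(x),
    "double": lambda x: 2.0 * x,
}


def mixed_op(x: np.ndarray, alpha: np.ndarray) -> np.ndarray:
    """Continuous relaxation: softmax(alpha)-weighted sum of all candidate ops."""
    weights = softmax(alpha)
    return sum(w * op(x) for w, op in zip(weights, OPS.values()))


def discretize(alpha: np.ndarray) -> str:
    """After search, keep the strongest non-zero operation on this edge."""
    names = list(OPS)
    ranked = sorted(range(len(names)), key=lambda i: alpha[i], reverse=True)
    return next(names[i] for i in ranked if names[i] != "zero")


x = np.array([1.0, -2.0, 3.0])
# Uniform alpha: each op gets weight 1/3, so the output is (x + 0 + 2x) / 3 = x.
print(mixed_op(x, np.zeros(3)))
print(discretize(np.array([0.1, -1.0, 2.0])))  # double
```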

The results from the various experiments can be summarized as follows:

- UnNAS architectures perform competitively with their supervised counterparts

- NAS and UnNAS show reliable results across a variety of datasets and tasks

- UnNAS outperforms previous methods
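One way to quantify whether a label-free objective ranks architectures the same way a supervised one does (the kind of comparison the sample-based experiments make) is a rank correlation. The sketch below computes Spearman's ρ from scratch for two lists of per-architecture scores, assuming no tied values; the accuracies are made-up toy numbers, not results from the paper. A ρ close to 1 means that choosing architectures by the unsupervised score would largely agree with choosing them by the supervised one.

```python
from typing import Sequence


def ranks(values: Sequence[float]) -> list[int]:
    """Rank positions (1 = smallest); assumes all values are distinct."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        r[i] = rank
    return r


def spearman_rho(a: Sequence[float], b: Sequence[float]) -> float:
    """Spearman's rank correlation for untied data: 1 - 6*sum(d^2) / (n(n^2-1))."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    n = len(a)
    d2 = sum((ra - rb) ** 2 for ra, rb in zip(ranks(a), ranks(b)))
    return 1 - 6 * d2 / (n * (n * n - 1))


# Toy accuracies for five hypothetical architectures (not paper results).
supervised = [0.71, 0.75, 0.68, 0.80, 0.77]
pretext = [0.52, 0.58, 0.50, 0.63, 0.55]
print(spearman_rho(supervised, pretext))  # 0.9
```

With ties, the simple formula no longer applies; a library routine that averages tied ranks is the safer choice for real measurements.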

Overall, the findings in this paper indicate that labels are NOT necessary for neural architecture search.

Read more about UnNAS here.

Future Direction

NAS methods have already outperformed many manually designed architectures on image classification tasks. However, their full potential can be realized only when they are applied to other tasks as well. Researchers argue that it is crucial to go beyond image classification by applying NAS to less explored domains.

One interesting direction is using NAS algorithms to search for architectures that are robust to adversarial attacks.

While most works report results on the CIFAR-10 dataset, their experiments often differ in search space, computational budget, data augmentation, training procedures, regularization, and other factors, which makes fair comparisons between methods difficult.

There is no doubt that NAS has demonstrated impressive results so far. However, it provides little insight into why specific architectures work well and how similar the architectures derived in independent runs would be.

While unsupervised search techniques look promising, many areas of NAS remain unexplored. For instance, why NAS performs the way it does is still puzzling. Machine learning is already known for its lack of interpretability, and NAS methods, both supervised and unsupervised, also need to be evaluated for fairness, which could itself open up new avenues in AutoML.

Learn more about the current state of NAS in this survey.