Recently, researchers from MIT introduced Timecraft, an AI system that can synthesise time-lapse videos depicting how a given painting might have been created. According to the researchers, a painting can be produced through many different combinations of brushes, strokes, colours and other choices, and the goal of this research is to learn to capture this rich range of possibilities.

Recreating a famous painting exactly can take even skilled artists days. Meanwhile, advances in AI and ML have given rise to a number of AI artists over the past few years. One of the best-known AI artworks is the Portrait of Edmond Belamy, which was created with a Generative Adversarial Network (GAN) and sold for an incredible $432,500.

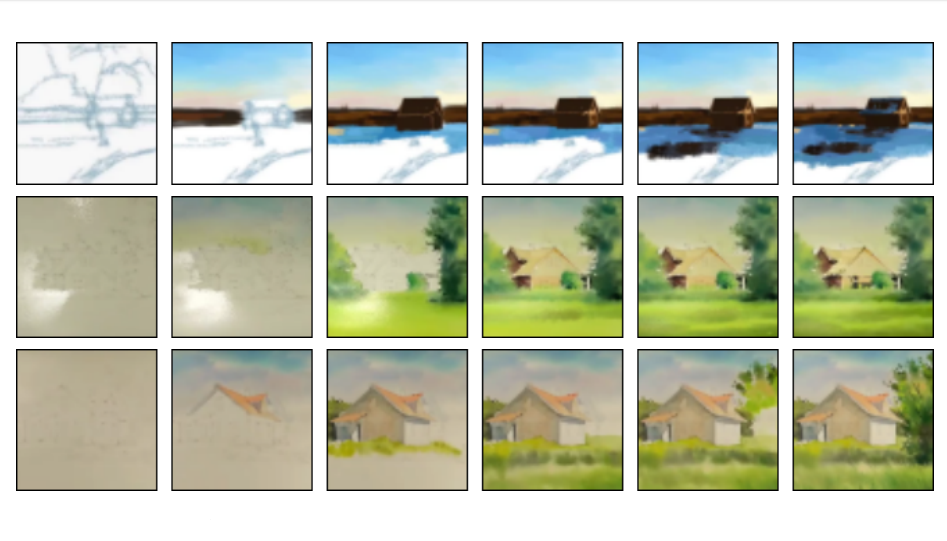

In this work, the researchers present a recurrent probabilistic model that takes an image of a finished painting and creates a time-lapse video depicting how it was most likely painted by the original artist. The system was trained on more than 200 existing time-lapse videos of digital and watercolour paintings that people had posted online.

Behind The Model

The model is implemented as a convolutional neural network (CNN), and the researchers introduced a training scheme that enables it to learn from a limited and noisy dataset of painting time-lapses. They worked with recordings of digital and watercolour painting time-lapses collected from video websites. Given a new painting that it has not been trained on, the model can work out the most likely way it was created.

In the process, they faced a number of challenges, such as high variability in painting trajectories and painting rates, limited data availability, and medium-specific effects such as erasing and non-paint effects. To overcome these issues, the researchers designed a learning-based model capable of handling them.

The neural network is built on a conditional variational autoencoder (CVAE) framework, and its parameters are learned from short sequences drawn from the training video dataset. With this sequential CVAE, the model is trained to reconstruct a real frame while building upon its own previous predictions.
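To make the CVAE idea more concrete, here is a minimal sketch of a standard CVAE training objective: a reconstruction term plus a KL divergence that pulls the encoder's Gaussian posterior towards the prior. It is written in plain Python rather than the Keras/TensorFlow stack the researchers used, and it assumes flattened frames, a squared-error reconstruction term and a unit-Gaussian prior. The conditioning (for example on the previous frame and the finished painting) would happen inside the encoder and decoder networks that produce `predicted`, `mu` and `logvar`, which are omitted here. The function names are hypothetical, and Timecraft's actual loss may define or weight these terms differently.

```python
import math
from typing import Sequence


def gaussian_kl(mu: Sequence[float], logvar: Sequence[float]) -> float:
    """KL(N(mu, exp(logvar)) || N(0, 1)), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv for m, lv in zip(mu, logvar))


def reconstruction_error(real: Sequence[float], predicted: Sequence[float]) -> float:
    """Sum of squared pixel differences between a real and a predicted frame."""
    return sum((r - p) ** 2 for r, p in zip(real, predicted))


def cvae_loss(real, predicted, mu, logvar, beta: float = 1.0) -> float:
    """Negative ELBO up to constants: reconstruction error + beta * KL term."""
    return reconstruction_error(real, predicted) + beta * gaussian_kl(mu, logvar)


# Toy example: a 4-pixel "frame" and a 2-dimensional latent code.
real_frame = [0.2, 0.4, 0.6, 0.8]
predicted_frame = [0.25, 0.4, 0.5, 0.8]
print(cvae_loss(real_frame, predicted_frame, mu=[0.1, -0.2], logvar=[0.0, 0.0]))
```

Here the reconstruction term is 0.0125 and the KL term is 0.025, so the printed loss is approximately 0.0375. The `beta` weight is a common knob for trading reconstruction quality against how closely the posterior follows the prior.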

Sequential CVAE training encourages sequences of frames to be well captured by the learned distribution, which reduces the compounding of errors over time. The researchers implemented the model using the popular machine learning libraries Keras and TensorFlow.
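Why conditioning on the model's own predictions matters can be shown with a toy example. This is a sketch under simplifying assumptions: frames are lists of floats, `biased_model` is a hypothetical deterministic stand-in for the CVAE decoder, and only the per-step losses are computed (no gradient updates). It does not attempt to reproduce the researchers' exact training schedule.

```python
from typing import Callable, List, Sequence

Frame = List[float]


def sse(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of squared differences between two frames."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rollout_losses(
    model: Callable[[Frame], Frame],
    real_frames: Sequence[Frame],
    sequential: bool,
) -> List[float]:
    """Per-step reconstruction losses over a short clip.

    sequential=False: each step is conditioned on the *real* previous frame
    (teacher forcing), so errors never accumulate.
    sequential=True: each step is conditioned on the model's own previous
    prediction, so compounding errors show up in the loss and can be penalised.
    """
    losses = []
    prev = list(real_frames[0])
    for target in real_frames[1:]:
        pred = model(prev)
        losses.append(sse(pred, target))
        prev = pred if sequential else list(target)
    return losses


# Hypothetical model: adds paint at a slightly wrong rate (0.12 instead of 0.1).
def biased_model(frame: Frame) -> Frame:
    return [min(1.0, x + 0.12) for x in frame]


# Ground-truth clip: a single "pixel" filling in by 0.1 per step.
clip = [[0.0], [0.1], [0.2], [0.3]]
print(rollout_losses(biased_model, clip, sequential=False))
print(rollout_losses(biased_model, clip, sequential=True))
```

With teacher forcing, every step shows the same small error (about 0.0004). In the sequential rollout, the error grows at each step (about 0.0004, 0.0016, 0.0036), which is the drift that training on the model's own predictions exposes.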

Dataset Used

To train the model, the researchers collected time-lapse recordings of paintings from YouTube and Vimeo. They selected digital and watercolour paintings and focused mainly on landscapes, a common subject in both media.

Each video was downloaded at 360×640 resolution and cropped temporally and spatially to include only the painting process. The researchers collected 117 digital painting time-lapses with an average duration of 4 minutes and 116 watercolour time-lapses with an average duration of 20 minutes.
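Temporal and spatial cropping amounts to slicing a frame sequence in time and then slicing each frame in space. The sketch below assumes frames have already been decoded into single-channel H×W grids of pixel values; the function name `crop_clip` and all crop parameters are hypothetical, since the researchers' actual crop boundaries are not given in this article.

```python
from typing import List

Frame = List[List[int]]  # H x W grid of pixel values (single channel, for brevity)


def crop_clip(
    frames: List[Frame],
    start: int,
    end: int,
    top: int,
    left: int,
    height: int,
    width: int,
) -> List[Frame]:
    """Keep frames[start:end] and crop each to a height x width window at (top, left)."""
    if not 0 <= start < end <= len(frames):
        raise ValueError("invalid temporal range")
    h, w = len(frames[0]), len(frames[0][0])
    if not (0 <= top and top + height <= h and 0 <= left and left + width <= w):
        raise ValueError("crop window falls outside the frame")
    return [
        [row[left:left + width] for row in frame[top:top + height]]
        for frame in frames[start:end]
    ]


# Ten synthetic 360x640 frames, each filled with its own frame index.
video = [[[t] * 640 for _ in range(360)] for t in range(10)]
clip = crop_clip(video, start=2, end=8, top=40, left=100, height=256, width=256)
print(len(clip), len(clip[0]), len(clip[0][0]))  # 6 256 256
```

In practice the temporal range would drop intros, outros and other non-painting footage, and the spatial window would isolate the canvas from the rest of the screen or desk.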

Contributions

The researchers' main contributions are listed below:

- The researchers used a probabilistic model to capture the stochastic decisions made by artists, and thereby a distribution of plausible ways to create a painting.

- Unlike work in future frame prediction or frame interpolation, the researchers synthesised long-term videos spanning dozens of time steps and many real-time minutes.

- They demonstrated a model that successfully learns from painting time-lapses “from the wild.” According to the researchers, this data is small and noisy because it was collected from uncontrolled environments with variable lighting, spatial resolution and video capture rates.

- According to the researchers, this is the first work that models and synthesises distributions of videos of the past, given a single final frame.

Wrapping Up

This research introduces a new video synthesis method that generates time-lapse videos depicting the creation of paintings. The researchers proposed a recurrent probabilistic model that captures the stochastic decisions of human artists, along with an alternating sequential training scheme that encourages the model to make realistic predictions over several time steps.

According to a blog post, Timecraft's videos outperformed existing benchmarks more than 90 per cent of the time and were mistaken for real videos nearly half of the time. The model can also be sampled over many time steps, enabling long-term stochastic video synthesis.
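In a sequential CVAE, the stochasticity typically comes from drawing a latent sample at each time step, so one finished painting can yield many different time-lapses. The toy sketch below mimics only that idea and is not the learned model: the seeded random number generator stands in for the sampled latent, "painting" a pixel simply copies its final value, and the function name and canvas representation are hypothetical.

```python
import random
from typing import List

Canvas = List[float]


def sample_time_lapse(final: Canvas, steps: int, seed: int) -> List[Canvas]:
    """Toy stochastic 'painting' rollout that ends at `final`.

    Starts from a blank canvas (all zeros) and, at each step, paints a random
    subset of the pixels that still differ from the finished painting.
    Different seeds give different orderings that all end at the same frame.
    """
    rng = random.Random(seed)
    canvas = [0.0] * len(final)
    frames = [list(canvas)]
    for t in range(steps):
        remaining = [i for i, v in enumerate(final) if canvas[i] != v]
        if not remaining:
            break
        steps_left = steps - t
        # Paint an equal share of what is left (ceiling division),
        # so the final step always completes the canvas.
        k = max(1, -(-len(remaining) // steps_left))
        for i in rng.sample(remaining, k):
            canvas[i] = final[i]
        frames.append(list(canvas))
    return frames


painting = [0.9, 0.1, 0.5, 0.7, 0.3, 0.8, 0.2, 0.6]
video_a = sample_time_lapse(painting, steps=4, seed=0)
video_b = sample_time_lapse(painting, steps=4, seed=1)
print(video_a[-1] == painting, video_b[-1] == painting)  # True True
```

Every sampled video ends at the given painting, while the intermediate frames depend on the sampled randomness, which is the sense in which the model produces a distribution of plausible pasts rather than a single one.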
