Earlier this week, NVIDIA announced a new line of data processing units (DPUs) at its GPU Technology Conference (GTC) 2020. Together with DOCA (data-centre-infrastructure-on-a-chip architecture), the software framework for programming them, DPUs are expected to transform networking, storage and security performance.

DPUs are processors with flexible, programmable acceleration engines that suit AI and machine learning applications. They are quickly gaining traction: tech giants such as Asus, Atos, Dell Technologies, Fujitsu, Lenovo, Quanta and Supermicro have announced plans to integrate NVIDIA DPUs into their offerings.

DPU and Its Features

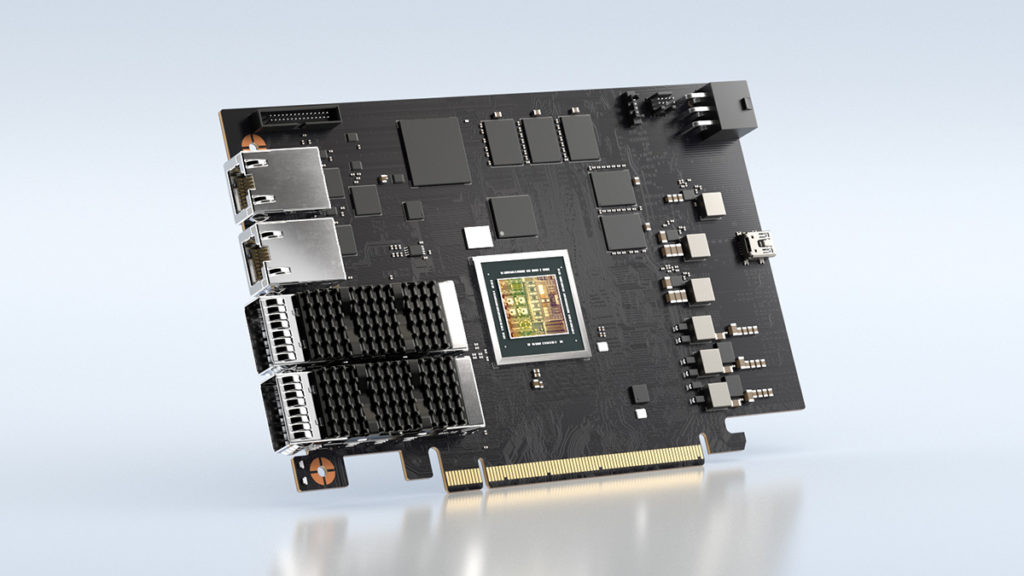

A DPU is a system on a chip, a new class of programmable processor that combines three elements: a high-performance, software-programmable, multi-core CPU; a network interface that can parse and process data and transfer it quickly and efficiently; and programmable acceleration engines that offload networking tasks and optimise performance for AI and machine learning.

DPUs offer several features:

- The DPU architecture builds a zero-trust data centre domain at the edge of every server, thus isolating data centre security policies from the host CPU

- The NVIDIA BlueField-2 DPU, combined with NVMe over Fabrics (NVMe-oF), provides a high-performance storage network whose remote-storage latencies rival those of direct-attached storage.

- It delivers up to 200 gigabits per second of Ethernet and InfiniBand line-rate performance for both traditional and modern GPU-accelerated AI workloads, freeing the host CPU cores that would otherwise handle this traffic.

- The DOCA software development kit (SDK) enables developers to create software-defined and cloud-native DPU-accelerated services that leverage industry-standard APIs.
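To give a sense of why processing traffic at 200 Gb/s line rate ties up host CPU cores, the short calculation below estimates the per-packet time budget at that rate. It is a back-of-the-envelope sketch, not an NVIDIA figure: it assumes standard Ethernet framing with 20 bytes of extra wire overhead per frame (preamble, start-of-frame delimiter and inter-frame gap), and the 3 GHz core clock used to convert nanoseconds into cycles is a hypothetical value chosen for illustration.

```python
from typing import Tuple

# Assumption: each Ethernet frame also occupies 20 bytes of wire overhead
# (7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap).
WIRE_OVERHEAD_BYTES = 20


def packet_budget(line_rate_gbps: float, frame_bytes: int) -> Tuple[float, float]:
    """Return (packets per second, nanoseconds per packet) at full line rate."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    pps = line_rate_gbps * 1e9 / bits_on_wire
    ns_per_packet = 1e9 / pps
    return pps, ns_per_packet


if __name__ == "__main__":
    # 64 and 1518 bytes are the minimum and standard maximum untagged Ethernet frame sizes.
    for frame in (64, 1518):
        pps, ns = packet_budget(200, frame)
        # Hypothetical 3 GHz host core: 3 clock cycles per nanosecond.
        cycles = ns * 3.0
        print(f"{frame:>5}-byte frames: {pps / 1e6:7.1f} Mpps, "
              f"{ns:6.2f} ns/packet, ~{cycles:.0f} cycles at 3 GHz")
```

With minimum-size frames, the budget works out to roughly 3.4 ns, or about ten cycles on such a core, per packet, which is far too little for a general-purpose CPU to parse, filter and steer traffic while also running applications. That gap is what dedicated offload hardware is meant to close.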

DPUs were initially popularised by Mellanox, which NVIDIA agreed to acquire in 2019 (the deal was completed in 2020). Before the acquisition, Mellanox had already deployed the first generation of BlueField DPUs, helping make it a leader in networking for high-performance computing and deep learning, with products built for scale, efficiency and operational agility.

NVIDIA’s two main DPU offerings are the NVIDIA BlueField-2 and the NVIDIA BlueField-2X.

NVIDIA BlueField-2 is a new kind of DPU that brings acceleration, security and efficiency to every host. This data-centre-infrastructure-on-a-chip combines the power of the ConnectX-6 Dx network adapter with programmable Arm cores and hardware offloads for software-defined storage, networking, security and management workloads. The BlueField-2X, on the other hand, adds the AI capabilities of an NVIDIA Ampere GPU, which it can use for real-time security analytics, dynamic security orchestration and automated responses.

DPU’s Advantage Over Traditional Systems

Industry stakeholders are eager to use DPUs for a range of reasons, some of which are listed below:

- It separates the control plane from the data plane, both within a single system and across a cluster of systems. This isolation makes it more secure than relying on the host CPU alone.

- It also frees up server capacity for application computing, increasing throughput by as much as two times.

- DPUs can cut costs. Because they free up CPU cores, memory space and I/O, the cost of a server can be reduced by at least half compared with servers that carry these workloads themselves.

- A DPU differs from a CPU in offering a high degree of parallelism, and from a GPU in using a MIMD (multiple instruction, multiple data) architecture rather than a SIMD (single instruction, multiple data) one.

- Together, these capabilities enable isolated, cloud-native computing that will define the next generation of cloud-scale computing.
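The SIMD versus MIMD distinction in the list above can be illustrated with a conceptual Python sketch. Python does not, of course, execute either model in hardware; the two functions below only mirror the idea. In the SIMD model, one instruction is applied across every data lane; in the MIMD model, independent workers each run their own instruction stream on their own data. The per-worker tasks (a CRC32 checksum, a truncated SHA-256 digest and a length count) are hypothetical stand-ins for the kind of mixed integrity, security and accounting work a DPU might handle, not a description of how BlueField schedules work.

```python
import hashlib
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def simd(instruction: Callable[[int], int], lanes: List[int]) -> List[int]:
    """SIMD model: a single instruction is applied to every data lane."""
    return [instruction(x) for x in lanes]


def mimd(programs: List[Callable[[bytes], object]], inputs: List[bytes]) -> List[object]:
    """MIMD model: each worker runs its own instruction stream on its own data."""
    with ThreadPoolExecutor(max_workers=max(1, len(programs))) as pool:
        futures = [pool.submit(prog, data) for prog, data in zip(programs, inputs)]
        # Results are collected in submission order, one per (program, data) pair.
        return [f.result() for f in futures]


if __name__ == "__main__":
    # Same operation ("double it") on every lane.
    print(simd(lambda x: x * 2, [1, 2, 3, 4]))  # [2, 4, 6, 8]
    # Different operations on different inputs, running independently.
    print(mimd(
        [zlib.crc32, lambda b: hashlib.sha256(b).hexdigest()[:8], len],
        [b"storage block", b"tls record", b"telemetry"],
    ))
```

The point of the contrast is that SIMD hardware is most efficient when every element needs identical treatment, whereas network, storage and security traffic often needs different processing per flow, which favours independent instruction streams.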

In a traditional data centre, the network is just a web of cables linked by switches that deliver data to and from the CPU. DPUs, by contrast, process data on the fly to reduce the load on the CPU, a role that can be likened to a team of professional interpreters who handle the translation work so the CPU does not have to.

Even with GPUs available to crunch the data, the CPU remains burdened, because it has to access and share information between computers to feed data to the GPU. With DPUs, processing moves closer to the data itself, and the network acts as a co-processor that offloads work from the central engine.
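The control-plane/data-plane split and the offloading idea described in this article can be sketched as a toy model. Everything here is hypothetical and illustrative: `ToyDPU`, `Packet`, `install_rule` and the port-based rules are invented names, not part of DOCA or any NVIDIA API. The host (the control plane) installs policy once; the "DPU" (the data plane) then classifies every packet itself, with a default-deny rule in the spirit of the zero-trust model, so only permitted payloads ever reach the host.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Packet:
    dst_port: int
    payload: bytes


@dataclass
class ToyDPU:
    """Hypothetical model of a DPU data plane that applies rules per packet."""
    flow_table: Dict[int, str] = field(default_factory=dict)  # dst_port -> action
    dropped: int = 0

    # Control plane: the host (or an orchestrator) installs policy once.
    def install_rule(self, dst_port: int, action: str) -> None:
        if action not in ("deliver", "drop"):
            raise ValueError(f"unknown action: {action}")
        self.flow_table[dst_port] = action

    # Data plane: every packet is classified here, not on the host CPU.
    def process(self, packets: List[Packet]) -> List[bytes]:
        delivered = []
        for pkt in packets:
            if self.flow_table.get(pkt.dst_port, "drop") == "deliver":
                delivered.append(pkt.payload)
            else:
                self.dropped += 1  # default-deny: unknown flows never reach the host
        return delivered


if __name__ == "__main__":
    dpu = ToyDPU()
    dpu.install_rule(443, "deliver")
    traffic = [Packet(443, b"GET /"), Packet(22, b"ssh probe"),
               Packet(443, b"POST /"), Packet(9999, b"scan")]
    host_inbox = dpu.process(traffic)
    print(host_inbox, dpu.dropped)  # [b'GET /', b'POST /'] 2
```

In this model the host touches only two of the four packets; the other two are handled entirely by the offload device. A real DPU performs this kind of classification in hardware at line rate, but the division of labour is the same: policy is set from the control plane, and per-packet work stays off the host CPU.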