Ever since its introduction in 2017, in the seminal paper “Attention Is All You Need,” transformers have revised and renewed the interest in the way we deal with language models. Transformers have led to groundbreaking works such as BERT, which is now widely used by Google for its search engine autocomplete and smart compose on Gmail.

Transformers have been widely applied in tasks as diverse as speech recognition, symbolic mathematics, and even reinforcement learning. However, computer vision, so far, has been immune to the advent of transformers so far.

DETR can be implemented in less than 50 lines with PyTorch

Now for the time, object detection is being looked at through the lens of transformers. A new model by the name Detection Transformers (DETR) was recently released by the AI wing of Facebook.

DE⫶TR: End-to-End Object Detection with Transformers https://t.co/4W27PVx6Js (+paper https://t.co/xmxnSOBjBa) awesome to see a solid swing at (non-autoregressive) end-to-end detection. Anchor boxes + nms is a mess. (I was hoping detection would go end-to-end back in ~2013) pic.twitter.com/cN4hK2ZFY0

— Andrej Karpathy (@karpathy) May 27, 2020

Overview Of DETR

The overall DETR architecture contains three main components: a CNN backbone to extract a compact feature representation, an encoder-decoder transformer, and a simple feed-forward network (FFN) that makes the final detection prediction. Unlike many modern detectors, stated the researchers, DETR can be implemented in any deep learning framework that provides a common CNN backbone and a transformer architecture implementation with just a few hundred lines. Inference code for DETR can be implemented in less than 50 lines with PyTorch.

The working of DETR can be distilled as follows:

- Consider the object detection task as an image-to-set problem.

- Add a convolutional neural network (CNN) that extracts the local information from the image with a transformer encoder-decoder architecture, which then reasons about the image as a whole and then generates the predictions.

DETR uses a convolutional neural network (CNN) as a fundamental component of learning a 2D representation of an input image. The model flattens it and supplements it with a positional encoding before passing it into a transformer encoder. A transformer decoder then takes as input a small fixed number of learned positional embeddings or object queries and additionally attends to the encoder output. Then output embedding of the Decoder is passed to a shared feed forward network (FFN) that predicts either a detection (class and bounding box) or a “no object” class.

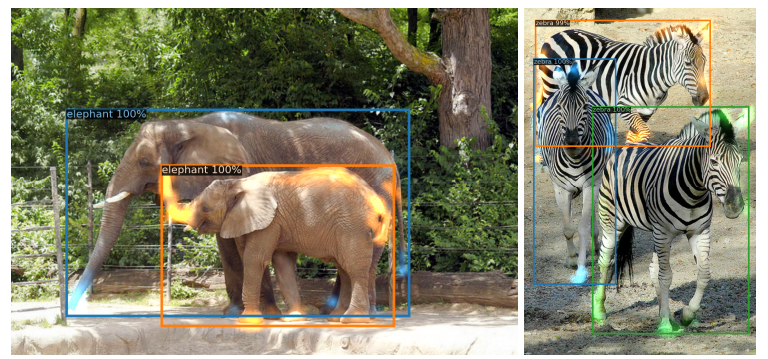

Given an image, the DETR model successfully identifies all the objects present in an image, each represented by its class, along with a tight bounding box surrounding each one.

To evaluate the model, the researchers performed experiments on COCO 2017 detection and panoptic segmentation datasets containing 118k training images and 5k validation images.

The above table compares the performance of DETR with state-of-the-art models.

Key Takeaways

To understand how Transformers make an end-to-end object detection simpler, the researchers pitted it against the state-of-the-art Faster R-CNN, a traditional two-stage detection system. In case of Faster R-CNN, as shown above, object bounding boxes are predicted by filtering over a large number of coarse candidate regions, which are generally a function of the CNN features. Then each selected region is used in a refinement step. The refinement step consists of identifying the features of a certain area in the image and then classifying it independently (if it is a bird, then its beak is a feature). After refinement, deduplication is carried out where a non-maximum suppression step is applied to remove duplicate boxes.

DETR, on the other hand, gets rid of the whole refinement and deduplication process by placing a transformer architecture. It simplifies the detection pipeline as the transformer architecture performs the operations that are traditionally specific to object detection.

The key takeaways from this work are as follows:

- DETR, a new design for object detection systems based on transformers and bipartite matching loss for direct set prediction

- DETR is the first object detection framework to successfully integrate transformers as a central building block in the detection pipeline

- DETR matches the performance of state-of-the-art highly optimised Faster R-CNN

Know more about DETR here.