“You put a car on the road which may be driving by the letter of the law, but compared to the surrounding road users, it’s acting very conservatively. This can lead to situations where the autonomous car is a bit of a fish out of water,” said Motional’s Karl Iagnemma.

Autonomous vehicles have control systems that learn how to emulate safe steering controls in a variety of situations based on real-world datasets of human driving trajectories. However, it is extremely hard to program the decision-making process given the infinite possible scenarios on real roads. Meanwhile, real-world data on “edge cases” (such as nearly crashing or being forced off the road) are hard to come by.

In March 2020, researchers from the MIT Computer Science & Artificial Intelligence Laboratory (CSAIL), in collaboration with the Toyota Research Institute, unveiled a simulation system for training driverless cars called Virtual Image Synthesis and Transformation for Autonomy (VISTA).

VISTA essentially creates a photorealistic world with infinite steering possibilities, allowing the cars to practise navigating a variety of worst-case scenarios. It uses a small dataset captured by humans driving on a road to synthesise a nearly infinite number of new viewpoints from real-world trajectories. The controller is rewarded for the distance it travels without crashing, so it must learn on its own how to travel safely. As a result, the vehicle learns to navigate different situations safely, such as regaining control after swerving between lanes or recovering from near-collisions.
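The reward described above can be illustrated with a minimal sketch. The `StepResult` type and `episode_reward` function are hypothetical names invented for this example and are not taken from the VISTA code; the sketch only assumes that each simulation step reports the distance covered and whether a crash occurred.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class StepResult:
    """One simulation step (hypothetical structure, for illustration only)."""
    distance_m: float  # distance covered during this step, in metres
    crashed: bool      # True if the vehicle crashed or left the road


def episode_reward(steps: Iterable[StepResult]) -> float:
    """Sum the distance travelled up to, but not including, the first crash."""
    total = 0.0
    for step in steps:
        if step.crashed:
            break  # the episode ends at the first crash
        total += step.distance_m
    return total
```

Because no human labels enter this signal, a controller trained against it has to discover for itself which steering choices keep the episode going.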

How data-driven simulation works

VISTA starts by ingesting video data of human driving and, for each frame, projecting every pixel into a 3D point cloud. When users place a virtual vehicle in that world and have it issue a steering command, the engine generates a new trajectory through the point cloud based on the steering curve and the vehicle’s orientation and velocity.
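As a rough illustration of how a steering curve, orientation and velocity determine a new trajectory, the sketch below advances a 2D pose along an arc of constant curvature. This is a generic kinematic update, not VISTA's actual vehicle model; the function name `step_pose` and its parameters are assumptions made for this example.

```python
import math


def step_pose(x, y, heading, curvature, speed, dt):
    """Advance a 2D pose along an arc of constant curvature for dt seconds.

    heading is in radians, curvature in 1/metres (positive turns left),
    speed in metres per second.
    """
    arc = speed * dt  # distance travelled along the path
    if abs(curvature) < 1e-9:
        # Straight-line motion when the steering curve is (almost) flat.
        return (x + arc * math.cos(heading),
                y + arc * math.sin(heading),
                heading)
    new_heading = heading + arc * curvature
    radius = 1.0 / curvature
    # Closed-form integration of (cos, sin) of the heading along the arc.
    new_x = x + radius * (math.sin(new_heading) - math.sin(heading))
    new_y = y - radius * (math.cos(new_heading) - math.cos(heading))
    return new_x, new_y, new_heading
```

Calling `step_pose` repeatedly with the controller's latest steering command yields the sequence of virtual poses from which new viewpoints would be rendered.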

The engine then uses that new trajectory to render a photorealistic scene. It does so by using a convolutional neural network to estimate a depth map, which contains information about the distance of objects from the controller’s viewpoint. The depth map is then combined with a technique that estimates the camera’s orientation within a 3D scene. All of this contributes to determining the vehicle’s location and relative distance from everything in the virtual simulator. Based on that data, it reorients the original pixels to create a 3D representation of the world from the vehicle’s new perspective. It also follows the motion of the pixels to capture the movement of the cars, people, and other moving objects in the scene.
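The reorientation of pixels can be sketched with a standard pinhole camera model: lift a pixel into 3D using its depth, express it in the virtual camera's frame, and project it back to the image. This is a simplified illustration under stated assumptions, not VISTA's renderer. The depth is passed in directly rather than estimated by a neural network, the virtual camera differs from the original only by a shift in the ground plane and a yaw, and all function names and intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical.

```python
import math


def backproject(u, v, depth, fx, fy, cx, cy):
    """Lift pixel (u, v) with known depth to a 3D point in the camera frame
    (x right, y down, z forward)."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)


def to_virtual_camera(point, yaw, tx, tz):
    """Express a point in a camera shifted by (tx, 0, tz) and rotated by yaw
    about the vertical axis (positive yaw turns the camera to the right)."""
    x, y, z = point
    dx, dz = x - tx, z - tz
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * dx - s * dz, y, s * dx + c * dz)


def project(point, fx, fy, cx, cy):
    """Project a 3D point to pixel coordinates; None if it is behind the camera."""
    x, y, z = point
    if z <= 0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)
```

For example, a point seen 1.6 m to the right of the optical axis lands at the image centre column once the virtual camera is shifted 1.6 m to the right. Pixels that become visible only from the new viewpoint have no source data, which is one reason a real system needs more than this geometric step.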

MIT researchers deployed the learned controller in a full-scale autonomous vehicle in the real world after it had successfully driven 10,000 kilometres in simulation. According to MIT, this was the first time a controller trained in simulation using end-to-end reinforcement learning was successfully deployed onto a full-scale autonomous car.

An open-source play

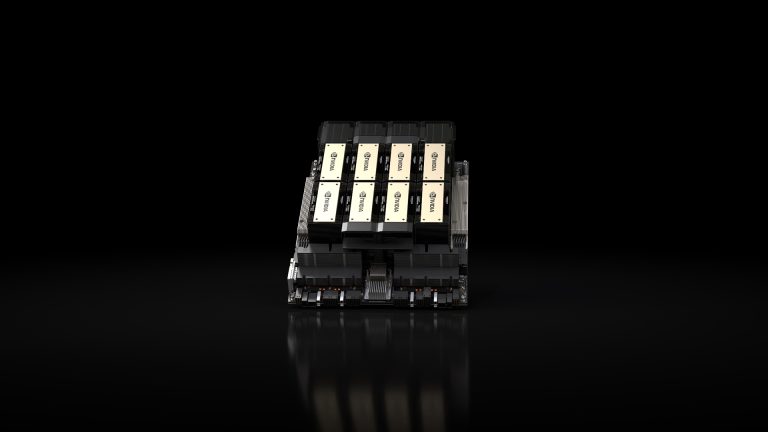

MIT CSAIL had been rather quiet about VISTA since its initial launch in 2020. However, this changed when the lab launched VISTA 2.0 and open-sourced the code. The work was supported by the National Science Foundation and the Toyota Research Institute, and by NVIDIA through the donation of a Drive AGX Pegasus.

VISTA 1 supported only single-car lane-following with one camera sensor. But to achieve high-fidelity data-driven simulation, MIT researchers had to rethink the foundations of how different sensors and behavioural interactions can be synthesised.

VISTA 2.0 is a data-driven system that can simulate complex sensor types and massively interactive scenarios and intersections at scale. With less data than previous models, the team was able to train autonomous vehicles that could be substantially more robust than those trained on large amounts of real-world data. “VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles as well,” said CSAIL PhD student Alexander Amini.

In focus: Lidar sensor data

In a data-driven world, Lidar sensor data is much more difficult to interpret: you’re effectively trying to generate brand-new 3D point clouds with millions of points from sparse views of the world. MIT researchers projected the Lidar data collected by the car into 3D space and then let a new virtual vehicle drive around locally from where the original vehicle was. Finally, they used neural networks to project all of that sensory data back into the frame of view of this new virtual vehicle.
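The geometric part of that process can be sketched as a rigid transform of the recorded points into the virtual vehicle's frame. The code below covers only this step; the neural networks that the researchers use to produce the final view are not modelled. The function names and the frame convention (x forward, y left, z up) are assumptions made for this example.

```python
import math


def to_virtual_lidar_frame(points, dx, dy, yaw):
    """Re-express (x, y, z) points in the frame of a virtual vehicle that is
    offset by (dx, dy) and rotated by yaw (counter-clockwise) about the z axis."""
    c, s = math.cos(yaw), math.sin(yaw)
    out = []
    for x, y, z in points:
        px, py = x - dx, y - dy
        out.append((c * px + s * py, -s * px + c * py, z))
    return out


def range_and_azimuth(point):
    """Return the distance to a point and its horizontal bearing in radians."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z), math.atan2(y, x)
```

After the transform, the returns no longer line up with a regular scan pattern as seen from the new pose, so the result is sparse and uneven. That gap is consistent with the article's point that learned models are needed to complete the view.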

The simulator also handles event-based cameras, which operate at speeds of thousands of events per second, and it was able to simulate all of this multimodal information in real time, allowing neural nets to be trained offline and tested online in augmented reality setups for safety evaluations.

As a result, you can move around in the simulation, use different types of controllers, simulate various types of events, create interactive scenarios, and simply drop in brand new vehicles that weren’t even in the original data. The researchers tested for lane following, lane turning, car following, and more dangerous scenarios such as static and dynamic overtaking (seeing obstacles and moving around to avoid collisions). With multi-agent support, real and simulated agents can interact, and new agents can be dropped into the scene and controlled in any way.

VISTA 2.0 essentially allows the community to collect their own datasets and convert them into virtual worlds where they can directly simulate their own virtual autonomous vehicles, drive around these virtual terrains, train autonomous vehicles in these worlds, and then directly transfer them to full-sized, real self-driving cars.

Value add

“The usefulness depends on the quality of data collected and would be interesting to see as researchers use this tool. Typically radar is also a sensor data source, and it would be interesting to see the differences in simulation world quality with and without it. Generally speaking, this model serves as a good simulation environment to train and test various AI models deployed. Modelling of actors in the simulation environment is always challenging to the deployment of AVs and remains a challenge not clearly addressed in this tool,” said an autonomous driving expert.

The simulation environment is grounded in real-world data and serves as a strong platform to build on. Releasing this dataset and environment for researchers to train and test on removes a lot of overhead in building AV platforms. Autonomous vehicle firms like Waymo and Cruise have also open-sourced data sets and tools. “As most mature AV companies have invested heavily in this effort and have developed homegrown tools and rely on them being tightly integrated into their development process. Additionally, the dependence on their own data source, sensors, and HW is critical and reduces the value of such a tool to a mature company. On the other hand, early-stage startups could benefit from showing value using this framework, but there are limitations. There are also companies like Applied Intuition that offer a lot more stability and confidence and provide compelling price points, which directly reduces the value of such a data set to AV makers. However, the biggest advantage for AV makers from this framework is the ability to separate good talent from the best as students display their technical adeptness on these platforms and can show tangible value upfront,” he added.