
Last week, OpenAI released an open-source automatic speech recognition system called ‘Whisper’ that can transcribe audio into text in multiple languages including Japanese, Italian and Spanish. The company said that the neural network “approaches human level robustness and accuracy on English speech recognition” and if the reaction on Twitter is to be believed, OpenAI’s claims aren’t too far from the truth.

This is not to say that Whisper will put competitors such as Otter.ai, which is comparatively easier to use, out of business, but first-hand accounts say Whisper is far more accurate. The more common approach to training these models is to use smaller audio-text datasets that are more closely paired, or to rely on unsupervised audio pretraining.

Mere transcription tool?

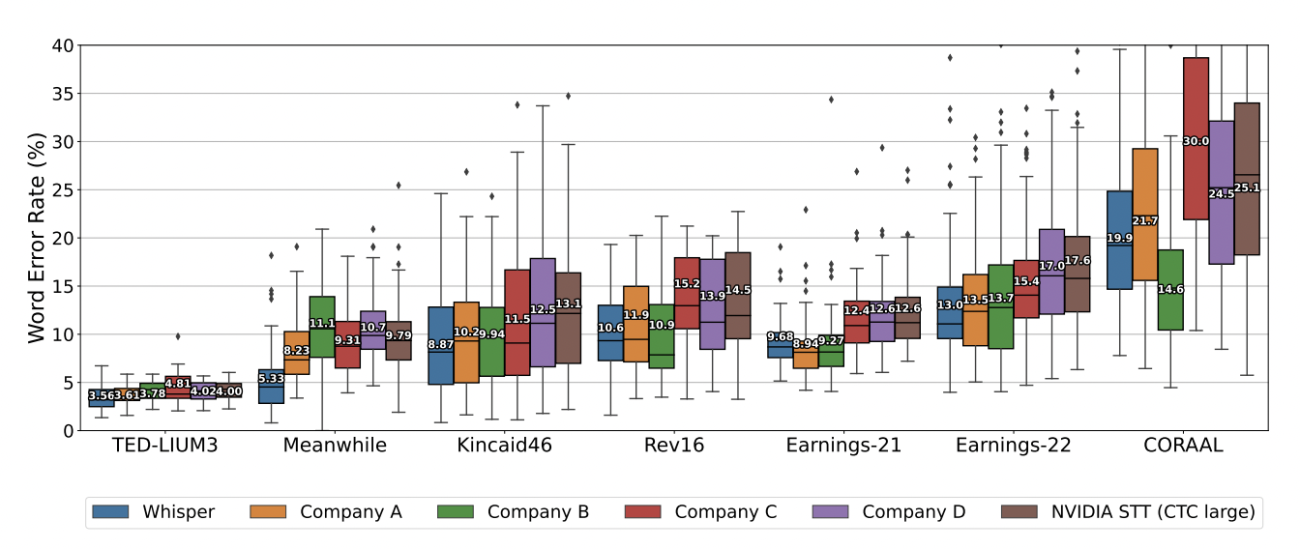

Whisper, instead, was trained on a much larger and more diverse dataset and was not fine-tuned to any specific one. As a result, it does not beat models that specialise in LibriSpeech, one of the best-known benchmarks for judging speech recognition.

Playing with Whisper. Fed in a 1m25s audio snippet from one of my lectures. I speak fast. I correct myself and backtrack a bit. I use technical terms (MLP, RNN, GRU). ~10 seconds later the (292 word) transcription is perfect except "Benjio et al. 2003" should be Bengio. Impressed pic.twitter.com/HDvaxZO37v

— Andrej Karpathy (@karpathy) September 23, 2022

Several users who tested Whisper, including Andrej Karpathy, Tesla's former director of AI, described its accuracy as "through the roof", calling the translations "almost perfect". Transcription tools aren't exactly revolutionary, but accurate ones are rare. As one commenter noted, "I spend more time correcting the transcribed text than transcribing it on my own."
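Accuracy claims like these are usually quantified with word error rate (WER): the number of word substitutions, deletions and insertions needed to turn a system's transcript into a reference transcript, divided by the number of words in the reference. The minimal Python sketch below computes it with a word-level edit distance. The function name `word_error_rate` is hypothetical, and unlike real benchmark scoring it does no normalisation of case or punctuation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by reference length (no text normalisation)."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the minimum edits to turn the first i-1 reference words
    # into the first j hypothesis words (one DP row at a time).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # substitution (or exact match when cost == 0)
            )
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # Karpathy's single error, scored on just the four-word fragment
    print(word_error_rate("Bengio et al. 2003", "Benjio et al. 2003"))
```

On the four-word fragment alone, the one misspelled name gives a WER of 0.25. Across his full 292-word transcript, that same single error would be roughly 0.3%.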

Transcription itself has great utility across industries. One user said he was able to subtitle the trailer for a Telugu film without making any corrections, an engineer built an app that makes Whisper accessible to non-technical users, and another user transcribed all of their audio courses into text.
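Subtitling of this kind is straightforward to script. The sketch below assumes the open-source `openai-whisper` Python package (`pip install openai-whisper`), whose `transcribe()` result includes timestamped `segments` with `start`, `end` and `text` fields, and turns them into an SRT subtitle file. The helper names `srt_timestamp`, `segments_to_srt` and `transcribe_to_srt` are hypothetical, and the `"base"` model size is an arbitrary choice.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    millis = int(round(seconds * 1000))
    hours, millis = divmod(millis, 3_600_000)
    minutes, millis = divmod(millis, 60_000)
    secs, millis = divmod(millis, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments: list[dict]) -> str:
    """Build numbered SRT cues from segments shaped like Whisper's output."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        start = srt_timestamp(seg["start"])
        end = srt_timestamp(seg["end"])
        cues.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # A blank line separates consecutive cues
    return "\n".join(cues)


def transcribe_to_srt(audio_path: str, model_name: str = "base", task: str = "transcribe") -> str:
    """Run Whisper on an audio file and return SRT text (assumes openai-whisper is installed)."""
    import whisper  # imported lazily so the formatting helpers work without it

    model = whisper.load_model(model_name)
    result = model.transcribe(audio_path, task=task)
    return segments_to_srt(result["segments"])
```

Passing `task="translate"` asks Whisper for English output from non-English speech, which is one way to produce English subtitles for a film trailer. Same-language subtitles need only the default `task="transcribe"`. Keeping the SRT formatting separate from the model call lets it be checked without downloading a model.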

Made an app called Buzz that transcribes live audio recordings from your computer to text using @OpenAI's Whisper model: https://t.co/G7M3bFfmGE

— chidi (@chidiwilliams__) September 28, 2022

Here's a short demo: pic.twitter.com/fbJKDtSmpZ

Diverse and massive audio dataset, but private

But a portion of the AI community speculated that transcription wasn't OpenAI's final destination for Whisper. In the blog post announcing the tool's release, the company said it hoped the code would "serve as a foundation for building useful applications and for further research on robust speech processing". More significantly, Whisper's training dataset has been kept private. The model was trained on a massive "680,000 hours of multilingual and multitask supervised data collected from the web".

For OpenAI, which has its hands in so many pies, from the game-changing text-to-image model DALL·E 2 to the long-awaited GPT4, there is a lot that could be done with this much audio data. As one commenter put it, "There's more information in audio than in text alone. Using audio just to extract the transcripts seems like throwing away lots of good data."

As GPT4's anticipated release date draws closer, some expect that Whisper's training dataset could be used to train it. Experts believe that GPT4, the successor to the 175-billion-parameter GPT3, will mark a turn away from OpenAI's tenet of 'the bigger the model, the better it is'. In fact, most companies have been slowly moving away from this principle.

That principle began to change when DeepMind released 'Chinchilla'. A much smaller model with 70 billion parameters, Chinchilla outperformed far larger LLMs such as GPT3 (175 billion parameters) and Gopher (280 billion parameters). The model was built on the idea that, for a fixed compute budget, more efficient 'compute-optimal' LLMs can be made by training a smaller model on more data: Chinchilla used the same compute budget as Gopher, but with four times fewer parameters and about four times as much training data.

The accompanying paper, 'Training Compute-Optimal Large Language Models' by Hoffmann et al., concluded that scaling model size is only part of the story. Scaling the number of training tokens (the amount of text data the model is fed) is just as vital, and for compute-optimal training the two should grow in equal proportion: for every doubling of model size, the number of training tokens should also be doubled.
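The relationship can be written compactly. The worked equations below use the standard approximation that training compute C is about six floating-point operations per parameter (N) per training token (D), together with the paper's finding that the compute-optimal N and D both grow roughly as the square root of C. Doubling both N and D therefore means roughly four times the compute. Plugging in Chinchilla's own figures, 70 billion parameters and about 1.4 trillion tokens, gives the ratio of about 20 tokens per parameter that is often quoted as a rule of thumb.

```latex
C \approx 6\,N\,D, \qquad
N_{\text{opt}} \propto C^{a}, \qquad
D_{\text{opt}} \propto C^{b}, \qquad
a \approx b \approx 0.5

C_{\text{Chinchilla}} \approx 6 \times (70 \times 10^{9}) \times (1.4 \times 10^{12})
  \approx 5.9 \times 10^{23}\ \text{FLOPs}, \qquad
\frac{D}{N} = \frac{1.4 \times 10^{12}}{70 \times 10^{9}} = 20
```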

Speculation around GPT4

About a year ago, OpenAI CEO and co-founder Sam Altman answered questions about GPT4 that seemed to indicate its training might move in this direction. Altman said that, contrary to the assumptions made at the time, GPT4 would not be bigger than GPT3 but would need more computational resources.

If this proves true, a compute-optimal GPT4 would require far more training data than Chinchilla did. The speculation is that Whisper's audio datasets could be used to create the textual data needed to train GPT4.
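A back-of-the-envelope calculation shows why data becomes the bottleneck. The Python sketch below assumes the approximation C ≈ 6·N·D and the Chinchilla rule of thumb of roughly 20 training tokens per parameter. Neither is a published plan for GPT4, and the helper names are hypothetical. It compares GPT3's published training run, about 175 billion parameters and 300 billion tokens, with what a compute-optimal model of the same size would need. The GPT3-sized model is an illustrative assumption based on Altman's remark, not a known GPT4 specification.

```python
import math

# Rule of thumb from the Chinchilla results (an approximation, not an exact law)
TOKENS_PER_PARAM = 20


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute: C ≈ 6 * N * D."""
    return 6 * params * tokens


def compute_optimal_tokens(params: float) -> float:
    """Training tokens a compute-optimal model of this size would use."""
    return TOKENS_PER_PARAM * params


def compute_optimal_split(flops: float) -> tuple[float, float]:
    """Solve C = 6 * N * (20 * N) for N, then return (N, D)."""
    params = math.sqrt(flops / (6 * TOKENS_PER_PARAM))
    return params, compute_optimal_tokens(params)


if __name__ == "__main__":
    gpt3_params, gpt3_tokens = 175e9, 300e9  # published GPT3 figures
    print(f"GPT3 tokens per parameter: {gpt3_tokens / gpt3_params:.1f}")
    print(f"Compute-optimal tokens at 175B parameters: {compute_optimal_tokens(gpt3_params):.2e}")
    # Sanity check: Chinchilla's own budget should map back to ~70B params / ~1.4T tokens
    print(compute_optimal_split(training_flops(70e9, 1.4e12)))
```

Under these assumptions, a compute-optimal model the size of GPT3 would want about 3.5 trillion training tokens. That is more than ten times GPT3's 300 billion and about two and a half times Chinchilla's 1.4 trillion.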

These datasets could also be used to generate automatic video captions for the next batch of generative models. While research in computer vision and natural language processing has evolved, much of the recent work has focused on automatically generating natural language descriptions of videos. Among such tasks, video captioning has proven difficult because of the wide range of content that videos contain.