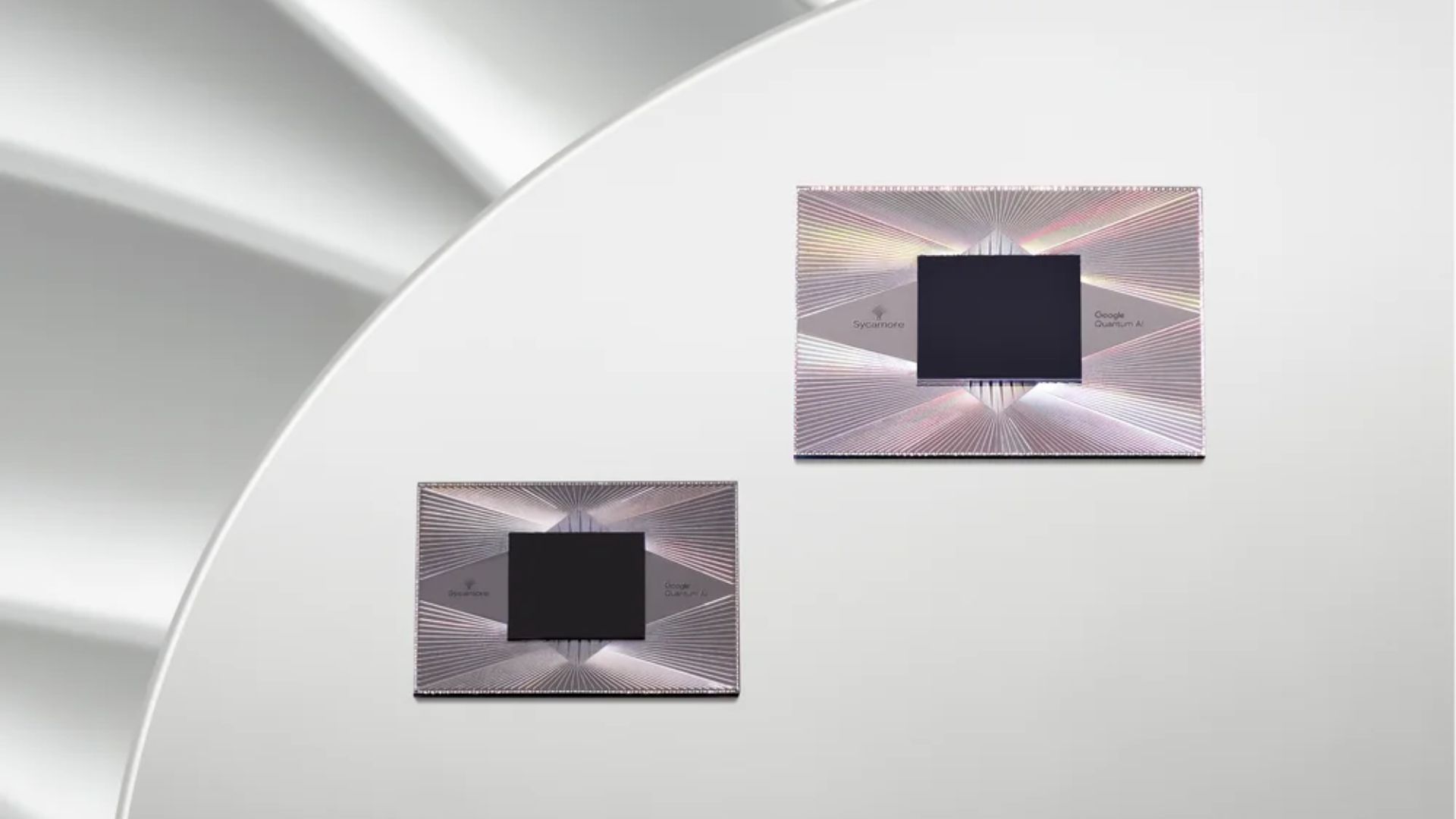

Google’s Quantum Division Reaches A New Milestone, Reduces Error Rate

Qubits are so sensitive that even stray light can cause calculation errors.


YOLO models are among the most important algorithms in terms of the ratio between mean average precision and inference time.

The FTC itself has also undergone a marked change since antitrust pioneer Lina Khan was appointed chairperson in June last year.

From Tesla, NVIDIA, and Intel to India’s PARAM, 2022 witnesses many developments in supercomputers

In just two years, our team has made incredible progress on our existing quantum roadmap.

Graphcore has dubbed the ultra-intelligence AI computer Good after the computer science pioneer Jack Good.

Two homegrown startups, DeepVisionTech and TensorGo Technologies, won awards at the Oracle APAC Startup Idol 2022.

Vision Transformers (ViTs) are emerging as an alternative to convolutional neural networks (CNNs) for visual recognition.

A vision transformer (ViT) is a transformer for computer vision that works on the same principles as the transformers used in natural language processing. Internally, the transformer learns by measuring the relationships between pairs of input tokens. In computer vision, patches of an image serve as the tokens.
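
To make the idea of measuring relationships between token pairs concrete, here is a minimal sketch of scaled dot-product self-attention over a few toy patch tokens. It is an illustration under simplifying assumptions, not the ViT implementation: the learned query, key, and value projections are omitted, and the names `softmax`, `self_attention`, and `patch_tokens` are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Compare every token with every other token.

    tokens: list of equal-length float vectors (one per image patch).
    Returns (weights, outputs), where weights[i][j] says how strongly
    token i attends to token j. Simplification: tokens are used directly
    as queries, keys, and values, with no learned projections.
    """
    dim = len(tokens[0])
    scale = math.sqrt(dim)
    weights = []
    for query in tokens:
        # Scaled dot product between this token and every token (itself included).
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights.append(softmax(scores))
    # Each output is a weighted average of all token vectors.
    outputs = [
        [sum(w * value[d] for w, value in zip(row, tokens)) for d in range(dim)]
        for row in weights
    ]
    return weights, outputs


# Three toy "patch" tokens; the first and third are identical.
patch_tokens = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 1.0, 0.0, 1.0],
    [1.0, 0.0, 1.0, 0.0],
]
weights, outputs = self_attention(patch_tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

In this toy input the first token attends equally to itself and to the identical third token, and less to the dissimilar second one, which is the pairwise relationship the attention weights encode.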

Meta has released the AI Research SuperCluster (RSC), calling it one of the fastest AI supercomputers currently running in the world.

These seemingly similar models can be confusing, making it hard to decide which one is the right choice for a particular setting.

They have applied it separately to speech, text and images where it outperformed the previous best single-purpose algorithms for computer vision and speech.

In this article, we will talk about how to segment images using the image-level supervision approach.

Hand gestures can be used for input in place of keyboards and a mouse to make computers accessible to stroke patients with partial paralysis.

The new Multi-Weight API allows loading different pre-trained weights on the same model variant, keeps track of vital metadata such as the classification labels, and includes the preprocessing transforms necessary for using the models.

IceVision is a framework for object detection which allows us to perform object detection in a variety of ways using various pre-trained models provided by

Built on the NVIDIA Ampere architecture, Jetson AGX Orin provides 6x the processing power and maintains form factor and pin compatibility with its predecessor, Jetson AGX Xavier.

VOLO is a simple yet powerful attention-based model architecture for visual recognition that helps achieve fine-level token representation.

Starting as a small local enterprise in 1927, Volvo has grown into a major player in the commercial transport and infrastructure solutions market. In May

Andrej Karpathy, Senior Director of AI at Tesla, unveiled a supercomputer at the Computer Vision and Pattern Recognition Conference 2021. It is the world’s fifth

The most common approaches in machine learning are supervised and unsupervised learning.

As has happened with almost every area of our lives, technology has changed education forever. Being one of the most rigid industries in

DDN is the first NVIDIA storage partner to receive certification to support the DGX SuperPOD with BlueField-2.

T2T-ViT employs progressive tokenization that takes patches of an image and converts them into overlapping tokens over a few iterations.

For this week’s data science career series, Analytics India Magazine got in touch with Neil Heffernan, the computer science professor at Worcester Polytechnic Institute. Known

Transformers are all geared up to rule the world of computer vision. The runaway success of OpenAI’s CLIP and DALL.E had a lot to do

VISSL is a computer VIsion library for state-of-the-art Self-Supervised Learning research. This framework is based on PyTorch. The key idea of this library is to

Apple has introduced a new method for generating 3D scenes called Equivariant Neural Rendering. This method requires no 3D supervision but only images and their

A recent work by Microsoft Research introduces a new framework that addresses unforeseen corruptions and distribution shifts in data by creating “unadversarial objects,” inputs that are optimized specifically for more robust model performance.

ViT breaks an input image into a sequence of 16×16 patches, just like the series of word embeddings fed to an NLP Transformer. Each patch is flattened into a single vector containing the channels of all pixels in the patch, and is then projected to the desired input dimension.
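
The patch-and-project step can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions rather than the ViT reference code: `image_to_patches` and `project` are hypothetical helper names, the 32×32 RGB image is synthetic, and the random `weight` matrix stands in for a projection that would be learned during training. Position embeddings and the class token are left out.

```python
import random


def image_to_patches(image, patch_size):
    """Split an H x W x C image (nested lists) into flattened patch vectors.

    Patches are returned in row-major order; each vector holds the channel
    values of every pixel in the patch.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be a multiple of patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            vector = []
            for r in range(top, top + patch_size):
                for c in range(left, left + patch_size):
                    vector.extend(image[r][c])  # append all channels of this pixel
            patches.append(vector)
    return patches


def project(patches, weight):
    """Linearly project each flattened patch with a (patch_dim x embed_dim) matrix."""
    embed_dim = len(weight[0])
    return [
        [sum(p[i] * weight[i][j] for i in range(len(p))) for j in range(embed_dim)]
        for p in patches
    ]


# Synthetic 32x32 RGB image: 16x16 patches give 4 patches of 16*16*3 = 768 values.
image = [[[float((r + c + ch) % 7) for ch in range(3)] for c in range(32)]
         for r in range(32)]
patches = image_to_patches(image, 16)

# Stand-in for the learned projection (assumption: random values, embed_dim = 8).
random.seed(0)
embed_dim = 8
weight = [[random.uniform(-0.1, 0.1) for _ in range(embed_dim)]
          for _ in range(len(patches[0]))]
tokens = project(patches, weight)
print(len(patches), len(patches[0]), len(tokens), len(tokens[0]))
```

The resulting `tokens` list plays the same role as a sequence of word embeddings: one fixed-length vector per patch, ready to be fed to a transformer encoder.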


© Analytics India Magazine Pvt Ltd & AIM Media House LLC 2024
