The internet has become such a large pool of data that many businesses are adopting web scraping to stay competitive. Until recently, web scraping relied on coding skills and hours of work to achieve even small results, and whenever a website changed its markup slightly, developers had to update their scraper to keep it working.

That’s why no-code development platforms (NCDPs) are trending: they save companies time, money, and resources, and they can be used by anyone, even with zero coding experience. Forrester predicted the no-code market would reach $21 billion by 2022. As the number of internet users grows day by day, the big data market grows with it, which in turn pushes web scraping tools to become sharper and more capable.

ParseHub was built to remove these hours of tedious coding work. It is a powerful visual web scraping tool that lets anyone create their own data extraction workflows without writing code, because ParseHub handles the selection of source code elements and the prediction of neighboring elements on its own.

Use-Cases

- Used by Data Scientists for research.

- Used by sales teams to scrape new leads from directories, communities and social media.

- Used for competitor, marketing and industry analysis.

- Used to combine millions of records from multiple websites into one dataset.

- Used to scrape news, product pricing, reviews, profiles, jobs and more.

Installation

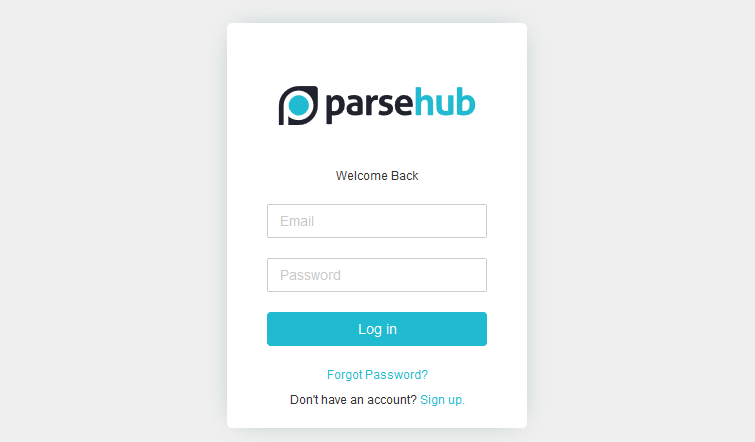

Installing ParseHub is easy: go to the website, sign up, and download the desktop app. The free plan includes 200 pages per run, five public projects and some other features.

They have documented guides for installing ParseHub on different operating systems.

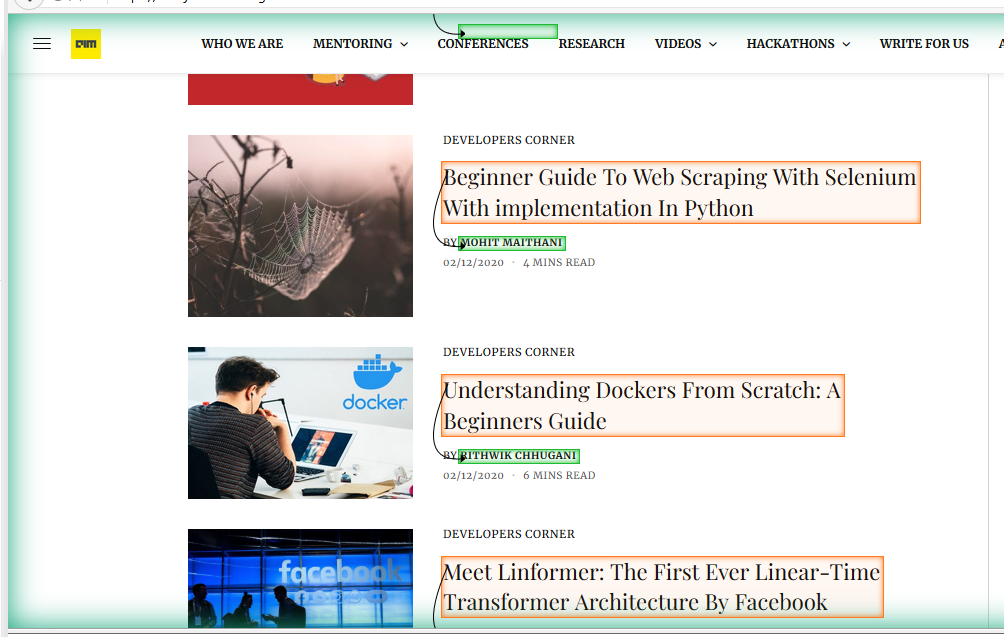

In an earlier article, we used Beautiful Soup and a fair amount of code to extract the article titles from the Analytics India Magazine homepage.

This time we are doing the same, and actually more, without any coding, and the result will be available in the spreadsheet format of your choice.
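For contrast, here is a rough sketch of what the code-based approach involves. It is not the code from the earlier article: it uses Python's standard-library `html.parser` instead of Beautiful Soup so that it runs without extra installs, and the markup, the `entry-title` class name and the helper names are all hypothetical stand-ins for whatever the real homepage uses.

```python
from html.parser import HTMLParser

# Hypothetical markup: the real homepage's tags and class names will differ.
SAMPLE_HTML = """
<div class="post">
  <h3 class="entry-title"><a href="/a1">First article</a></h3>
  <span class="author">Alice</span>
</div>
<div class="post">
  <h3 class="entry-title"><a href="/a2">Second article</a></h3>
  <span class="author">Bob</span>
</div>
"""


class TitleParser(HTMLParser):
    """Collect the text inside every <h3 class="entry-title"> element."""

    def __init__(self):
        super().__init__()
        self.titles = []
        self._in_title = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs for the opening tag.
        if tag == "h3" and ("class", "entry-title") in attrs:
            self._in_title = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_title:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "h3" and self._in_title:
            self.titles.append("".join(self._buffer).strip())
            self._in_title = False


def extract_titles(html):
    """Return the list of article titles found in an HTML string."""
    parser = TitleParser()
    parser.feed(html)
    return parser.titles


titles = extract_titles(SAMPLE_HTML)
print(titles)
```

Note that the selector is hard-coded: if the site renamed the `entry-title` class, `extract_titles` would return an empty list until someone updated the code, which is the maintenance burden a visual tool tries to remove.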

Let’s start

After installing, on first launch you need to sign in with your ParseHub account. ParseHub comes with its own built-in browser, which handles all the web requests as well as the extraction.

Let’s dive into the user interface (UI). It opens in the built-in web browser environment with a tutorial and a demo project; skip that part for now.

- Click on New Project to start web scraping.

- Load the Analytics India Magazine website in the work environment by searching inside the browser tab, or simply enter the website’s URL in the upper-left box as shown in the picture.

- Click Start project on this URL and a new window will pop up.

- Now let’s understand the UI. There are three main sections:

- The panel on the left is where you can see your attributes, rename them and modify them.

- The upper-right section works like a simple browser where you can interact with the page and select the relevant elements for scraping.

- The output is shown in the third section, the results tab, from where we can download the dataset for further analysis after fetching and cleaning.

- To begin extraction, click on the webpage text or image you need. In this case, we click on an article title.

- Remember to click the Yes tick on any titles that were not selected; this improves the scraper’s accuracy.

- Rename this attribute from selection2 to Title.

- Now that you have some data, you can preview it in the output tab at the bottom.

- On the left side, click on the plus (+) sign next to Title to add related attributes such as the author name.

- Using the Relative Select command, click on the first article title and then on its author name to extract the related author names.

- Repeat the previous two steps to extract the published date, reading time and more using Relative Select.

These steps are shown in the video below.

- Click on Get Data to export your data.

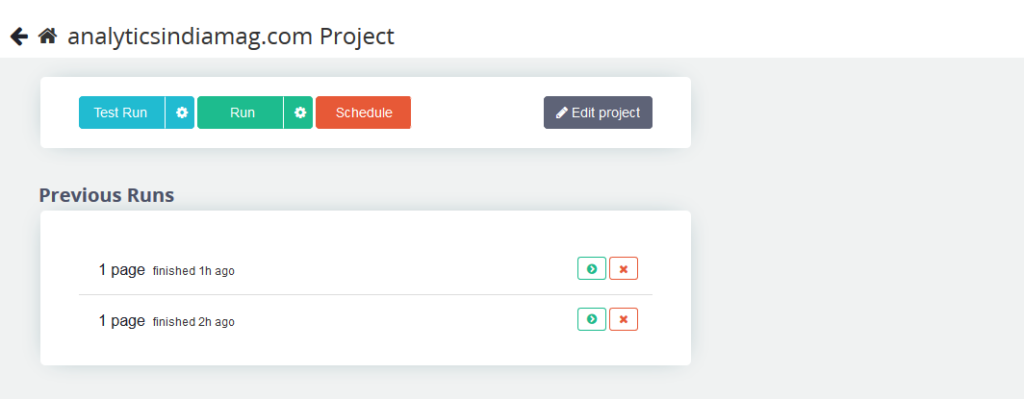

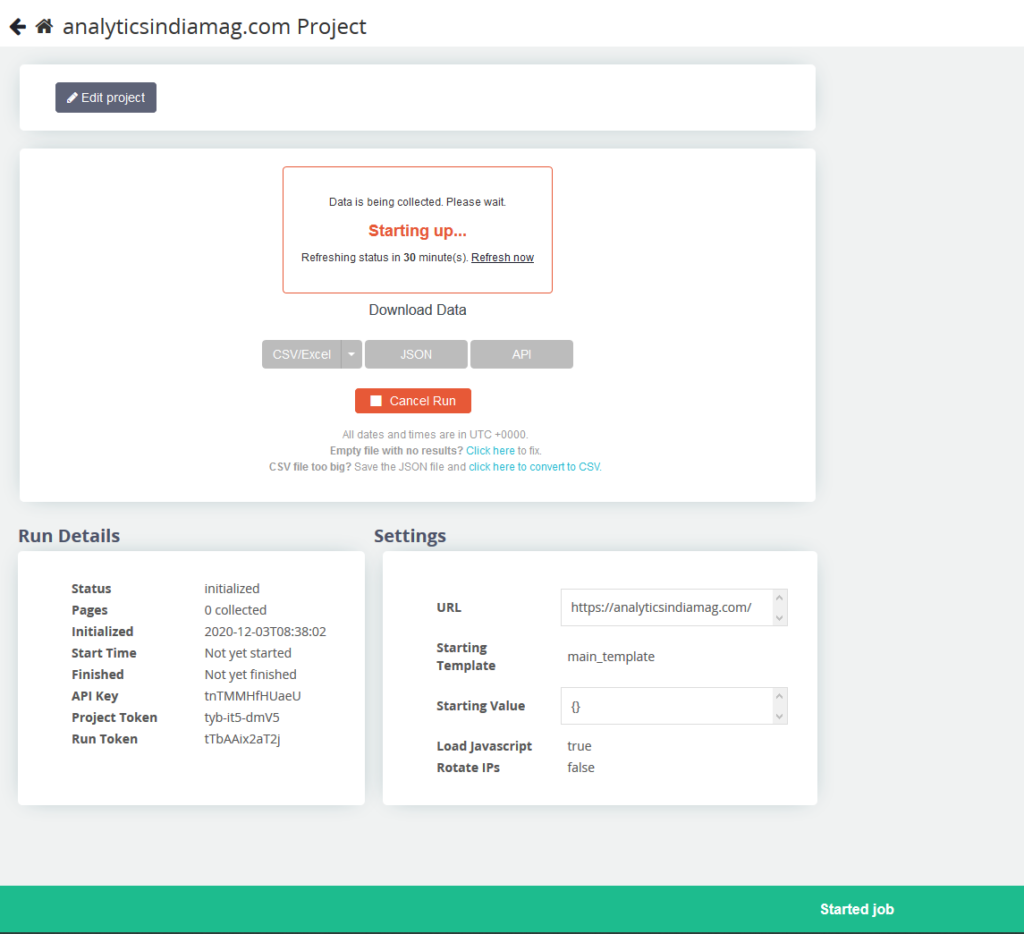

- You have three options to choose from, depending on the data you are scraping: Test Run to check that everything works, Schedule to run the extraction on a schedule (useful for large extractions), and Run. In our case, we click Run.

- ParseHub will start the data collection process, which we like to call ParseHub magic, and within about a minute we’ll have our data.

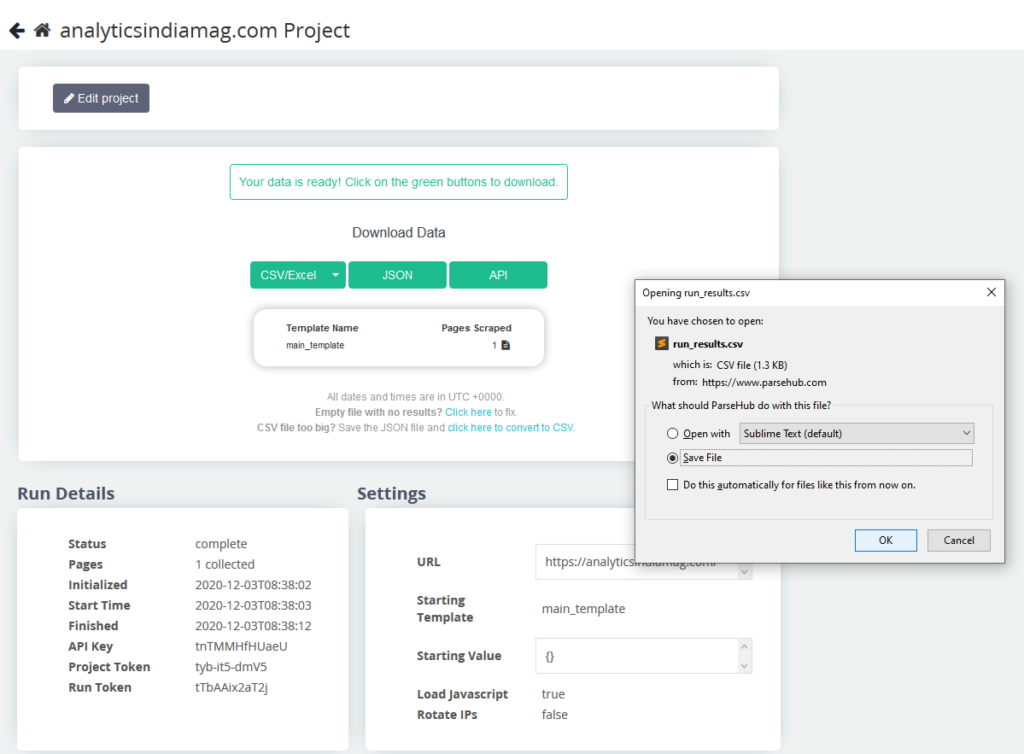

- Now download the data in a format such as CSV/Excel or JSON, or access it through the API, as needed. For data science work, we can download it as CSV and then build a word cloud or other data visualization from it.
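As a small illustration of that follow-up analysis, the sketch below counts the most frequent words in the title column, which is the kind of input a word cloud is built from. It assumes a `Title` column (matching the attribute renamed earlier) and uses an inline sample in place of the real export; the sample rows, the stopword list and the `word_counts` helper are hypothetical.

```python
import csv
import io
import re
from collections import Counter

# Hypothetical sample standing in for the exported file; the real column
# names depend on how the attributes were renamed in the project.
SAMPLE_CSV = """Title,Author,Date
Guide to web scraping with no code,Alice,2021-01-05
Web scraping for data science,Bob,2021-01-06
"""

# A tiny illustrative stopword list, not a complete one.
STOPWORDS = {"to", "with", "for", "the", "a", "of"}


def word_counts(csv_file, column="Title"):
    """Count the words in one column of an open CSV file object."""
    counts = Counter()
    for row in csv.DictReader(csv_file):
        words = re.findall(r"[a-z]+", row[column].lower())
        counts.update(w for w in words if w not in STOPWORDS)
    return counts


counts = word_counts(io.StringIO(SAMPLE_CSV))
print(counts.most_common(3))
```

To run it on a real export, pass an open file instead of the sample, for example `word_counts(open("results.csv", newline="", encoding="utf-8"))`, where `results.csv` is a placeholder for whatever name you saved the download under.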

Output

And there you have it! Fully structured, clean data for your further research, with article name, author name, date published, article URL and reading time.

Conclusion

We learned how no-code web scraping tools can extract data quickly, easily and accurately.

We also saw a full demonstration of scraping data from the Analytics India Magazine website, with the output exported to a spreadsheet, ready for your data science work or any other research.

ParseHub has also published its API documentation. The API is designed around REST and can be used to manage and run projects programmatically.
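As a rough idea of what programmatic use might look like, the sketch below builds (without sending) a request that starts a run and a URL for downloading the latest finished run's data. The base URL, the `/run` and `/last_ready_run/data` paths, and the `api_key` and `format` parameters are written from memory of the public API and should be treated as assumptions to confirm against the official documentation; the helper names and the placeholder credentials are hypothetical.

```python
import urllib.parse
import urllib.request

# Assumed base URL and paths; confirm them in ParseHub's API documentation.
API_BASE = "https://www.parsehub.com/api/v2"


def run_project_request(project_token, api_key):
    """Build (but do not send) a POST request that starts a project run."""
    url = f"{API_BASE}/projects/{project_token}/run"
    body = urllib.parse.urlencode({"api_key": api_key}).encode("utf-8")
    return urllib.request.Request(url, data=body, method="POST")


def last_run_data_url(project_token, api_key, fmt="csv"):
    """Build the URL for downloading the most recent finished run's data."""
    query = urllib.parse.urlencode({"api_key": api_key, "format": fmt})
    return f"{API_BASE}/projects/{project_token}/last_ready_run/data?{query}"


# Placeholder credentials, not real values.
req = run_project_request("PROJECT_TOKEN", "API_KEY")
print(req.get_method(), req.full_url)
print(last_run_data_url("PROJECT_TOKEN", "API_KEY"))
```

Nothing here touches the network. Once the endpoints are verified and real credentials are filled in, the request could be sent with `urllib.request.urlopen(req)`.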