According to a report from the AAA Foundation for Traffic Safety, advanced driver assistance systems (ADAS) could reduce the number of injuries and deaths by a whopping 37% and 29%, respectively. ADAS include collision warning, automatic emergency braking, lane-keeping assistance, lane-departure warning, and blind-spot monitoring systems, which can improve safety by relying on perception algorithms to sense and interpret the world around the vehicle.

The biological perception algorithm in humans took millions of years to evolve. Engineers, however, did not have to wait millions of years to develop and test perception algorithms for autonomous driving, thanks to simulation and digital testing.

Perception algorithm and sensor types

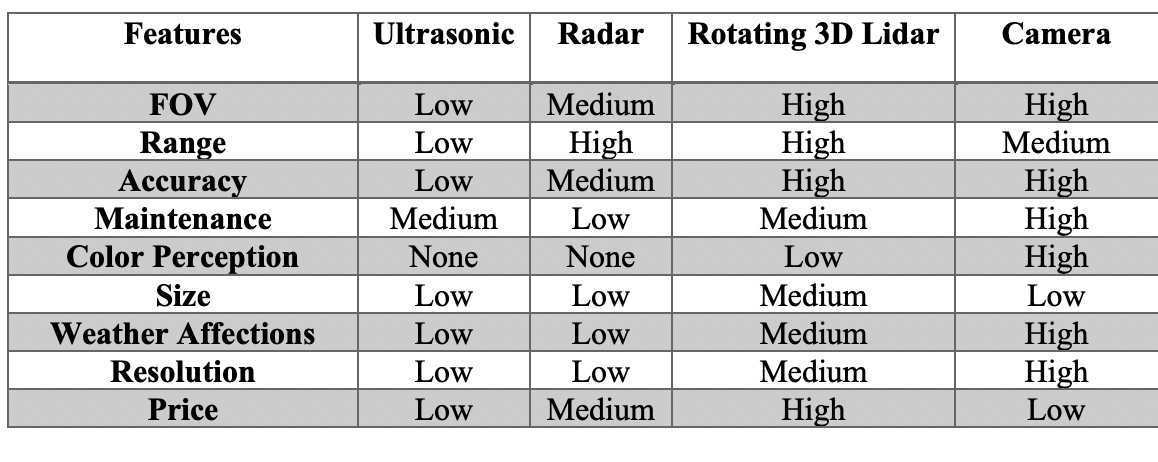

Crowley defines perception as the process of maintaining an internal description of the external environment. ADAS systems are designed to sense their surroundings, process that information, and carry out feature-specific tasks. Modern ADAS systems rely heavily on sensing components to determine what is around the vehicle. The three major types of sensors are:

Proprioceptive sensors: These sensors measure the vehicle's own internal state, such as wheel speed, acceleration, and steering angle.

Exteroceptive sensors: These sense the vehicle's external environment. They include lidars, radars, ultrasonic sensors, and cameras.

Sensor networks: These provide road information through multisensor platforms and traffic sensor networks.

Components from these sensor types are combined into distinct sensor suites, which are then fitted to ADAS-equipped vehicles. Each suite is evaluated against factors such as consumer appeal, cost, and computing power before it is integrated into the vehicle.

Why does sensor fusion work best in the automotive industry?

Sensor fusion is the process of combining data from lidar, radar, cameras, and ultrasonic sensors to analyze environmental conditions. It would be difficult for a single sensor to deliver all the information needed to operate an autonomous vehicle, so multiple sensors are used together to produce a better result and to offset one another's weaknesses. Autonomous vehicles act on fused sensor data processed by programmed algorithms, which enable them to make correct decisions and act on them.

For complex ADAS applications that rely on more than one sensor, such as any feature involving lateral or longitudinal control, the sensors' information can be combined so that each sensor fills the others' gaps. As a result, the error in the fused output is typically lower than the errors of the individual sensors. Sensor fusion serves three main purposes:
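To illustrate why a fused estimate can have lower error than either sensor alone, here is a minimal sketch of one common technique, inverse-variance weighting of two independent measurements of the same quantity. The readings and noise variances are made up, and the article does not say which fusion method any particular ADAS uses.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    value: float     # measured quantity, e.g. distance to the obstacle ahead in metres
    variance: float  # measurement noise variance, assumed known for each sensor


def fuse(a: Estimate, b: Estimate) -> Estimate:
    """Inverse-variance weighted average of two independent estimates."""
    wa = 1.0 / a.variance  # a less noisy sensor gets a larger weight
    wb = 1.0 / b.variance
    value = (wa * a.value + wb * b.value) / (wa + wb)
    variance = 1.0 / (wa + wb)  # always smaller than both input variances
    return Estimate(value, variance)


radar = Estimate(value=42.0, variance=0.25)  # hypothetical radar range reading
camera = Estimate(value=43.0, variance=1.0)  # hypothetical camera range estimate
fused = fuse(radar, camera)
print(f"{fused.value:.2f} m, variance {fused.variance:.2f}")  # 42.20 m, variance 0.20
```

The fused variance, 1 / (1/var_a + 1/var_b), is lower than either input variance as long as the two sensors' errors are independent, which is the sense in which the combined output is more accurate than each sensor on its own.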

Complementary sensors: Different sensors deliver different types of information needed by the system. For example, radar provides better estimates of an obstacle's position, while the camera provides information about what the object is. When the two are combined, the system gets a more complete picture of the obstacle, which improves the quality of the overall system.

Computation: Fusion reduces the burden of acquiring and processing data from each sensor separately. In addition, the combined information can be stored for a longer period.

Cost: Rather than covering every inch of the vehicle with sensors, designers can plan sensor suites that use the fewest sensors needed for a 360-degree view by overlapping their fields of view and fusing the data.
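The coverage trade-off can be checked with a short script. This is a minimal sketch that assumes a simplified 2D model in which each sensor is described only by a hypothetical mounting bearing and horizontal field of view; it counts how many sensors see each 1-degree bin around the vehicle. Bins seen by two sensors are where fused data is available. The four-corner layout is invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Sensor:
    name: str
    bearing_deg: float  # mounting direction: 0 = straight ahead, counter-clockwise positive
    fov_deg: float      # horizontal field of view


def angular_distance(a, b):
    """Smallest absolute difference between two bearings, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def coverage(sensors):
    """Number of sensors that see the centre of each 1-degree bin around the vehicle."""
    return [
        sum(1 for s in sensors if angular_distance(d + 0.5, s.bearing_deg) <= s.fov_deg / 2)
        for d in range(360)
    ]


# Hypothetical suite: four corner sensors, each with a 100-degree field of view.
suite = [
    Sensor("front-left", 45, 100),
    Sensor("rear-left", 135, 100),
    Sensor("rear-right", 225, 100),
    Sensor("front-right", 315, 100),
]
counts = coverage(suite)
print("blind bins:", counts.count(0))                  # 0: full 360-degree view
print("overlap bins:", sum(c >= 2 for c in counts))    # 40: four 10-degree overlaps
```

In this layout, removing any one of the four sensors opens an 80-degree blind spot, so four is the minimum for this particular field of view.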

How autonomous perception works in a CAV system

A connected automated vehicle (CAV) system seeks to improve urban traffic efficiency and focuses primarily on vehicle self-perception and environment perception. The CAV perception system typically consists of algorithms responsible for three primary functions: tracking, sensor fusion, and lane assignment. Using the front radar and the front camera, these algorithms determine each vehicle's lateral and longitudinal position, relative lateral and longitudinal velocity, relative longitudinal acceleration, and lane information, so that every vehicle within view of the front sensors can be tracked. The sensors must clearly cover a region extending 100 m longitudinally and 12 m laterally, and all information within that region is provided as input to the perception algorithm.
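As a minimal sketch of the perception region, the filter below keeps only detections inside a window 100 m long and 12 m wide ahead of the vehicle. The `Detection` type is hypothetical, and the code assumes the 12 m lateral extent is centred on the ego vehicle (6 m to each side), which the text does not state.

```python
from dataclasses import dataclass

ROI_LENGTH_M = 100.0  # longitudinal extent ahead of the vehicle
ROI_WIDTH_M = 12.0    # lateral extent, assumed centred on the ego vehicle


@dataclass
class Detection:
    x: float  # longitudinal distance ahead of the ego vehicle, m
    y: float  # lateral offset from the ego vehicle's centreline, m


def in_region(d: Detection) -> bool:
    """True if the detection lies inside the assumed 100 m x 12 m perception region."""
    return 0.0 <= d.x <= ROI_LENGTH_M and abs(d.y) <= ROI_WIDTH_M / 2


detections = [Detection(35.0, 1.2), Detection(120.0, 0.0), Detection(60.0, -7.5)]
perception_inputs = [d for d in detections if in_region(d)]
print(perception_inputs)  # [Detection(x=35.0, y=1.2)]
```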

Figure: CAV perception system

The sensor fusion algorithm takes in the radar position information, performs data association, and merges the radar and camera points for each vehicle using weighted averages to generate sensor-fused tracks. The tracking algorithm uses data from the previous and current timesteps to assign track IDs and calculate velocity and acceleration, producing each tracked object's position, velocity, acceleration, and track ID. The lane assignment algorithm then assigns a number from 0 to 7 to each tracked object based on its lateral position.
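The three stages can be sketched as a small Python pipeline. This is a minimal sketch under stated assumptions, not the CAV system's actual implementation: nearest-neighbour gating stands in for the unspecified data association method, velocity and acceleration come from finite differences between timesteps, and the radar weight, gate distance, and equal-width lane bands are all hypothetical values chosen for illustration.

```python
import math
from dataclasses import dataclass

# Hypothetical tuning values, not taken from the article.
RADAR_WEIGHT = 0.7       # weight on the radar position in the weighted average
GATE_M = 2.0             # max distance for two points to count as the same vehicle
LANE_COUNT = 8           # lane numbers 0-7, as in the text
REGION_HALF_WIDTH = 6.0  # assumes the 12 m lateral region is centred on the ego vehicle


@dataclass
class Point:
    x: float  # longitudinal position, m
    y: float  # lateral position, m (positive = left)


@dataclass
class Track:
    track_id: int
    x: float
    y: float
    vx: float = 0.0  # relative longitudinal velocity, m/s
    ax: float = 0.0  # relative longitudinal acceleration, m/s^2
    age: int = 1     # number of timesteps this track has been seen


def fuse(radar, camera):
    """Associate each radar point with the nearest unused camera point inside the gate,
    then merge the pair with a weighted average."""
    fused, used = [], set()
    for r in radar:
        best, best_d = None, GATE_M
        for j, c in enumerate(camera):
            d = math.hypot(r.x - c.x, r.y - c.y)
            if j not in used and d <= best_d:
                best, best_d = j, d
        if best is None:
            fused.append(r)  # no camera match: keep the radar point on its own
            continue
        used.add(best)
        c, w = camera[best], RADAR_WEIGHT
        fused.append(Point(w * r.x + (1 - w) * c.x, w * r.y + (1 - w) * c.y))
    return fused


def update_tracks(prev_tracks, points, dt, next_id):
    """Match fused points to the previous timestep's tracks, keep their IDs, and
    finite-difference position into velocity and velocity into acceleration."""
    tracks, used = [], set()
    for p in points:
        match, best_d = None, GATE_M
        for t in prev_tracks:
            d = math.hypot(p.x - t.x, p.y - t.y)
            if t.track_id not in used and d <= best_d:
                match, best_d = t, d
        if match is None:
            tracks.append(Track(next_id, p.x, p.y))  # new vehicle: new track ID
            next_id += 1
            continue
        used.add(match.track_id)
        vx = (p.x - match.x) / dt
        # Acceleration needs a valid previous velocity, i.e. a track seen at least twice.
        ax = (vx - match.vx) / dt if match.age >= 2 else 0.0
        tracks.append(Track(match.track_id, p.x, p.y, vx, ax, match.age + 1))
    return tracks, next_id


def assign_lane(y):
    """Hypothetical lane numbering: split the region into eight equal bands, 0 = rightmost."""
    band = 2 * REGION_HALF_WIDTH / LANE_COUNT  # 1.5 m per band
    return min(max(int((y + REGION_HALF_WIDTH) // band), 0), LANE_COUNT - 1)


# Two timesteps of made-up radar and camera points, 0.1 s apart.
frames = [
    ([Point(30.0, 0.2), Point(55.0, -3.5)], [Point(31.0, 0.0), Point(54.0, -3.4)]),
    ([Point(29.9, 0.2), Point(55.2, -3.5)], [Point(30.9, 0.0), Point(54.2, -3.4)]),
]
tracks, next_id = [], 0
for radar, camera in frames:
    tracks, next_id = update_tracks(tracks, fuse(radar, camera), dt=0.1, next_id=next_id)

for t in tracks:
    print(t.track_id, round(t.vx, 2), assign_lane(t.y))
```

A production tracker would usually replace the raw finite differences with a filter (for example a Kalman filter) to smooth sensor noise; the sketch keeps differences only to mirror the "previous and current timestep" description.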

Real-time data for autonomous vehicles

Developing perception algorithms from data is an iterative process. To build more advanced features with new AI algorithms, automakers must collect large amounts of data from real-world driving, which is why real-world data matters. Fitting perception radar at an early stage gives automakers access to high-quality data and makes it easier to develop and deploy these features for both current and new customers. Each cycle of data collection, development, and software rollout lets the perception system keep improving and gain new inputs throughout the vehicle's lifespan. In other words, a customer's vehicle can get better over time.

Data collection is not only about rolling out autonomy features and ADAS, however. As automakers keep adding to their stores of real-world driving data, the experience they gain will advance the entire autonomous vehicle industry and lead to better algorithms for Level 4 autonomy. Ultimately, this process could let drivers become passengers in their own cars, with vehicles that drive better by learning from accumulated driving data.