Technological developments in this decade have led to some of the most awe-inspiring discoveries. With rapidly changing technology, and the systems that support it with back-end processing power, the world seems to become a better place to live day by day. Technology has reached such heights that nothing our ingenious minds conceive today seems impossible to accomplish. The driving force behind these advancements in this new era of technological and computational superiority seems to center on two of the most widely debated domains: Machine Learning and Artificial Intelligence.

The scope these two domains offer is vast. Breakthrough discoveries in both Machine Learning and Artificial Intelligence have pushed the boundaries of technology even further and shown us what both can do. They have also driven industrial development and advances in system automation and digitization. Robotics, healthcare, fintech, telecommunications, and imaging and videography, to name a few, have all benefited. Hence, established players such as Google, Amazon and Facebook keep investing in research and development in both Machine Learning and Artificial Intelligence, trying to answer the big question of today's era: "What next?".

Machines are being made ever more intelligent and self-sufficient. Loaded with appropriate software and algorithms, they can be made capable of performing tasks that humans generally cannot. One such task, which has been generating buzz on social media lately, is restoring old images and making them look brand new. During image acquisition or storage, images can become degraded for various reasons, and the degradation may take various forms, such as motion blur, noise, and camera misfocus. Image restoration aims to compensate for, or in other words "undo", the defects that have degraded an image.

Image restoration is a complex and challenging task in the field of image processing. The restoration process improves the image's appearance, with the main goal of restoring it to how it looked when it was first captured. The degraded image can be modeled as the original image convolved with a degradation function, plus additive noise. Restoration deconvolves the degraded image to obtain a noise-free, deblurred estimate of the original image. To do this, the degradation and how it occurs must be known or estimated. When restoration is performed with artificial intelligence and high computational processing power, this knowledge of the degradation is taught to a machine through algorithmic models and machine learning and deep learning techniques.
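As a classical (non-deep-learning) illustration of the degradation model g = h * f + η, where f is the original image, h the degradation function, * convolution, and η additive noise, the sketch below simulates a blurred, noisy image with NumPy and restores it with a Wiener filter, a standard frequency-domain deconvolution technique. The toy image, the 5×5 box blur, the noise level, and the noise-to-signal ratio `nsr` are assumptions chosen for illustration; GFP-GAN itself does not work this way.

```python
import numpy as np

def degrade(f, h, noise_sigma, rng):
    """Simulate g = h * f + eta: circular convolution via the FFT, plus Gaussian noise."""
    H = np.fft.fft2(h, s=f.shape)  # zero-pad the kernel to the image size
    blurred = np.real(np.fft.ifft2(np.fft.fft2(f) * H))
    return blurred + rng.normal(0.0, noise_sigma, f.shape)

def wiener_deconvolve(g, h, nsr):
    """Estimate f from g, given the degradation kernel h and a noise-to-signal ratio nsr."""
    H = np.fft.fft2(h, s=g.shape)
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)  # Wiener filter in the frequency domain
    return np.real(np.fft.ifft2(W * np.fft.fft2(g)))

def mse(a, b):
    return float(np.mean((a - b) ** 2))

rng = np.random.default_rng(0)
f = np.zeros((64, 64))
f[20:44, 20:44] = 1.0             # toy "original image": a bright square
h = np.full((5, 5), 1.0 / 25.0)   # assumed degradation function: 5x5 box blur
g = degrade(f, h, noise_sigma=0.01, rng=rng)
f_hat = wiener_deconvolve(g, h, nsr=1e-2)

print("MSE degraded:", mse(g, f))
print("MSE restored:", mse(f_hat, f))
```

Because the kernel sits at the array origin, the circular convolution also shifts the image by two pixels; deconvolving with the same kernel undoes that shift. Larger `nsr` values suppress noise amplification at the cost of a blurrier estimate.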

Image restoration should not be confused with image enhancement: the two are related but distinct. Restoration is an objective operation, whereas enhancement is subjective. Image enhancement cannot be precisely represented by a mathematical function, whereas image restoration is tied more closely to recovering the original features from the imperfect image. Image restoration assumes a degradation model that is known or can be estimated. Image enhancement aims to improve bad images so that they "look" better, while restoration aims to invert known degradation operations applied to images.
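A toy NumPy sketch can make the distinction concrete. Here the degradation is assumed to be known exactly (contrast halved and a constant offset added), so restoration can invert it, while enhancement just applies a gamma curve that makes the image look brighter without recovering the original. The gain, offset, and gamma values are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
original = rng.random((32, 32))  # toy image with values in [0, 1)

# Known degradation (assumed for illustration): contrast halved, constant offset added.
GAIN, OFFSET = 0.5, 0.1
degraded = GAIN * original + OFFSET

# Restoration: invert the known degradation model (an objective operation).
restored = (degraded - OFFSET) / GAIN

# Enhancement: a gamma curve chosen because the result "looks" better (a subjective choice).
enhanced = degraded ** 0.5

print("restoration error:", np.abs(restored - original).max())
print("enhancement error:", np.abs(enhanced - original).max())
```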

About Blind Face Restoration with Generative Facial Prior

Blind face restoration aims to recover high-quality faces from low-quality counterparts that suffer from unknown degradation, such as low resolution, noise, blur, and compression artefacts. In real-world scenarios, restoration becomes even more challenging because of more complicated and severe degradation and diverse poses and expressions. Blind face restoration therefore relies on facial priors, such as facial geometry priors or reference priors, to help restore realistic and faithful details. GFP-GAN uses a Generative Facial Prior (GFP) for real-world blind face restoration; this prior is implicitly encapsulated in a pretrained face Generative Adversarial Network (GAN) such as StyleGAN. Because such face GANs can generate faithful faces with a high degree of variability, they provide rich and diverse priors, including geometry, facial textures, and colors, making it possible to restore facial details and enhance colors jointly.

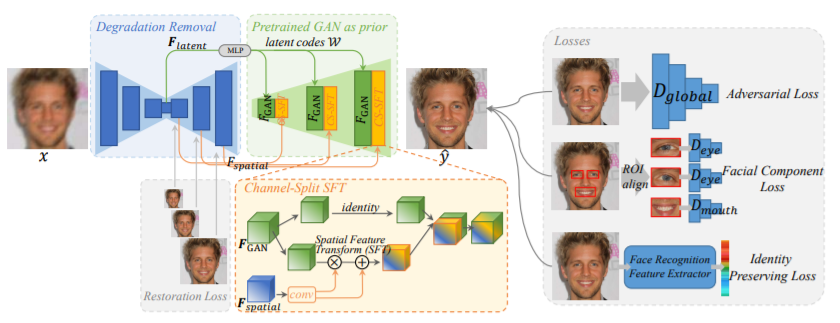

Earlier approaches typically relied on GAN inversion: they first "invert" the degraded image back to a latent code of the pretrained GAN and then perform image-specific optimization to reconstruct the input. In contrast, GFP-GAN uses delicate designs to achieve a good balance of realness and fidelity in a single forward pass. GFP-GAN consists of a degradation removal module and a pretrained face GAN that acts as the facial prior, connected by a direct latent code mapping and several Channel-Split Spatial Feature Transform (CS-SFT) layers applied in a coarse-to-fine manner. The CS-SFT layers perform spatial modulation on one split of the features and let the remaining features pass through directly for better information preservation, allowing the model to incorporate generative features effectively while retaining the high fidelity of the input image. The model also uses a facial component loss with local discriminators to further enhance perceptual facial details, and an identity-preserving loss to further improve fidelity.
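The channel-split idea behind the CS-SFT layers can be sketched in a few lines of NumPy. This is a minimal illustration, not the GFP-GAN implementation: it assumes a (C, H, W) feature map split in half along the channel axis, with the first half passed through unchanged and the second half modulated by spatial scale (`alpha`) and shift (`beta`) maps, which in GFP-GAN would be predicted from the degradation removal module's spatial features.

```python
import numpy as np

def cs_sft(features, alpha, beta):
    """Channel-Split Spatial Feature Transform (illustrative sketch).

    features: (C, H, W) feature map from the face GAN.
    alpha, beta: (C - C // 2, H, W) spatial scale and shift maps.
    The first C // 2 channels pass through unchanged; the rest are modulated.
    """
    split = features.shape[0] // 2
    identity_part, modulated_part = features[:split], features[split:]
    modulated_part = alpha * modulated_part + beta  # element-wise spatial modulation
    return np.concatenate([identity_part, modulated_part], axis=0)

# Usage: 8 channels on a 4x4 grid, scale 2 and shift 1 on the modulated half.
x = np.arange(8 * 4 * 4, dtype=float).reshape(8, 4, 4)
out = cs_sft(x, alpha=np.full((4, 4, 4), 2.0), beta=np.ones((4, 4, 4)))
print(out.shape)
```

Leaving half of the channels untouched is what lets the layer preserve information from the input while the other half absorbs the generative prior.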

Architecture of GFP-GAN Framework

The architecture consists of a degradation removal module (a U-Net) and a pretrained face GAN (such as StyleGAN2) that serves as the facial prior. The two are connected by a latent code mapping and several Channel-Split Spatial Feature Transform (CS-SFT) layers. Specifically, the degradation removal module is designed to remove the complicated degradation in the input image and extract two kinds of features:

- latent features F_latent, which map the input image to the closest latent code in StyleGAN2;

- multi-resolution spatial features, which modulate the StyleGAN2 features.

During training, the model is optimized with the following losses:

- intermediate restoration losses to remove complex degradation;

- a facial component loss with discriminators to enhance facial details;

- an identity-preserving loss to retain face identity.

Similar to a perceptual loss, the identity-preserving loss is based on face feature embeddings: it compares the embeddings of the restored face and the ground-truth face. The embeddings come from the pretrained ArcFace face recognition model, which captures the most prominent features for identity discrimination.
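The identity-preserving loss can be sketched as an L1 distance between the embeddings of the restored and ground-truth faces. In this NumPy sketch, the embedding network is a hypothetical stand-in for ArcFace (a fixed random projection followed by L2 normalization), and the `weight` parameter is a hypothetical stand-in for the loss weight; neither reflects the actual GFP-GAN code.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical stand-in for ArcFace: a fixed random projection of a flattened 64x64
# grayscale face to a 512-d embedding. The real model is a deep face recognition network.
PROJECTION = rng.normal(size=(512, 64 * 64))

def embed(face):
    """Map a (64, 64) face to an L2-normalized identity embedding."""
    v = PROJECTION @ face.reshape(-1)
    return v / np.linalg.norm(v)

def identity_loss(restored, ground_truth, weight=1.0):
    """Weighted L1 distance between identity embeddings of the two faces."""
    return weight * float(np.sum(np.abs(embed(restored) - embed(ground_truth))))

face = rng.random((64, 64))
slightly_off = face + 0.1 * rng.normal(size=face.shape)
print(identity_loss(face, face), identity_loss(slightly_off, face))
```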

GFP-GAN comes pre-trained on the FFHQ dataset, which consists of around 70,000 high-quality images. All the images were resized to 512 × 512 during training. GFP-GAN is trained on synthetic data that approximates real low-quality images, and it generalizes to real-world images at inference time.
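The GFP-GAN paper describes synthesizing training inputs by blurring a high-quality image with a Gaussian kernel, downsampling it, adding Gaussian noise, and applying JPEG compression, roughly x = [(y ⊛ k_σ)↓_r + n_δ]_JPEG_q. The sketch below follows those steps in NumPy for a grayscale image in [0, 1]. The default σ, scale factor, and noise level are illustrative assumptions (the paper samples them randomly), and the JPEG step is omitted to avoid extra dependencies.

```python
import numpy as np

def gaussian_kernel_1d(sigma):
    """Normalized 1-D Gaussian kernel with radius 3 * sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()

def synthesize_low_quality(hq, sigma=2.0, scale=4, noise_std=0.02, rng=None):
    """Blur, downsample, and add noise to a (H, W) image in [0, 1] (JPEG step omitted)."""
    rng = rng if rng is not None else np.random.default_rng()
    k = gaussian_kernel_1d(sigma)
    # Separable Gaussian blur: along rows, then along columns ("same" keeps the size).
    blurred = np.apply_along_axis(lambda r: np.convolve(r, k, mode="same"), 1, hq)
    blurred = np.apply_along_axis(lambda c: np.convolve(c, k, mode="same"), 0, blurred)
    # Downsample by an integer factor (simple decimation for illustration).
    small = blurred[::scale, ::scale]
    # Additive Gaussian noise, then clip back to the valid range.
    noisy = small + rng.normal(0.0, noise_std, small.shape)
    return np.clip(noisy, 0.0, 1.0)

rng = np.random.default_rng(0)
hq = rng.random((64, 64))
lq = synthesize_low_quality(hq, rng=rng)
print(hq.shape, "->", lq.shape)
```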

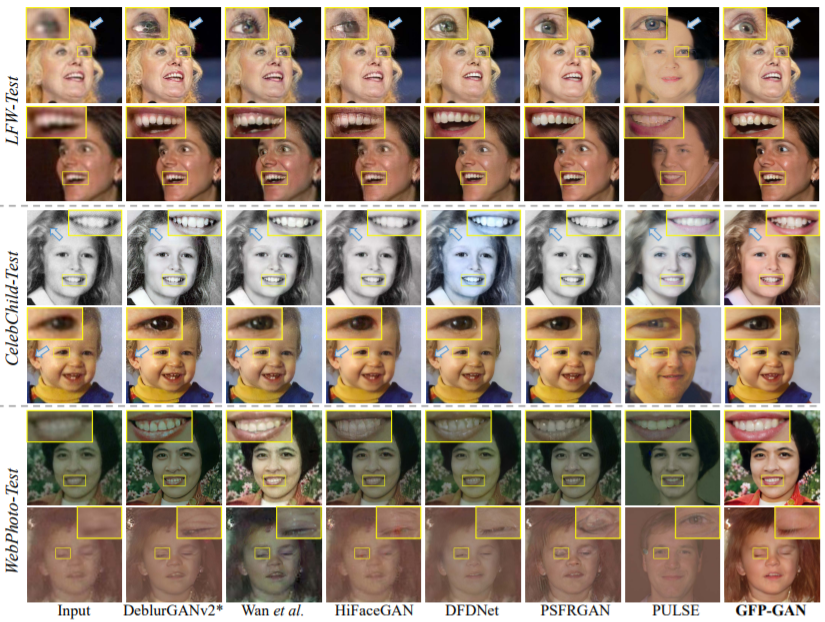

A Comparison Of GFP-GAN To Other Models

As the comparison shows, GFP-GAN preserves image quality and restores the facial features in the image better than the other, more traditional models it is compared against.

Getting Started

In this article, we will implement a face restoration model using the Generative Facial Prior and restore degraded images that contain noise and blur. The following implementation is inspired by the creators of GFP-GAN, and the link to their official repository can be found here.

Setting Up The Environment

To start building our image restoration model, we first install all the dependencies required to set up the environment.

```python
# Install the dependencies
# Install PyTorch
!pip install torch torchvision

# Check the Torch and CUDA versions
import torch

print('Torch Version: ', torch.__version__)
print('CUDA Version: ', torch.version.cuda)
print('CUDNN Version: ', torch.backends.cudnn.version())
print('CUDA Available:', torch.cuda.is_available())
```

Next up, we will install BasicSR, an open-source image and video restoration library based on PyTorch.

```python
!BASICSR_EXT=True pip install basicsr
```

We will also be using FaceXLib, a PyTorch-based library for face-related functions such as detection, alignment, recognition, and tracking, along with utilities for face restoration.

```python
!pip install facexlib
!mkdir -p /usr/local/lib/python3.7/dist-packages/facexlib/weights  # for pre-trained models
```

Next, clone the GFP-GAN repository:

```python
!rm -rf GFPGAN
!git clone https://github.com/TencentARC/GFPGAN.git
%cd GFPGAN

# Install the extra requirements
!pip install -r requirements.txt
```

Then download the pretrained GFP-GAN model:

```python
# Download the pretrained GFP-GAN model weights
!wget https://github.com/TencentARC/GFPGAN/releases/download/v0.1.0/GFPGANv1.pth -P experiments/pretrained_models
```

Performing Operations

We start by loading and visualizing the low-quality, noisy input images that are to be restored.

```python
# Visualize the cropped low-quality faces
import cv2
import matplotlib.pyplot as plt

# Helper: read an image with OpenCV and convert BGR to RGB for matplotlib
def imread(img_path):
    img = cv2.imread(img_path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    return img

# read images
img1 = imread('inputs/cropped_faces/Adele_crop.png')
img2 = imread('inputs/cropped_faces/Julia_Roberts_crop.png')
img3 = imread('inputs/cropped_faces/Justin_Timberlake_crop.png')
img4 = imread('inputs/cropped_faces/Paris_Hilton_crop.png')

# show images side by side in a 1x4 grid
fig = plt.figure(figsize=(25, 10))
ax1 = fig.add_subplot(1, 4, 1)
ax1.imshow(img1)
ax1.axis('off')
ax2 = fig.add_subplot(1, 4, 2)
ax2.imshow(img2)
ax2.axis('off')
ax3 = fig.add_subplot(1, 4, 3)
ax3.imshow(img3)
ax3.axis('off')
ax4 = fig.add_subplot(1, 4, 4)
ax4.imshow(img4)
ax4.axis('off')
```

Output :

Now we will use GFP-GAN to restore the low-quality images above by running the inference script:

# --model_path: the path to the pre-trained GFPGAN model

# --test_path: the folder path to the low-quality images

# --aligned: whether the input images are aligned

!python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/cropped_faces --alignedLoading the results,

# Loading the results!

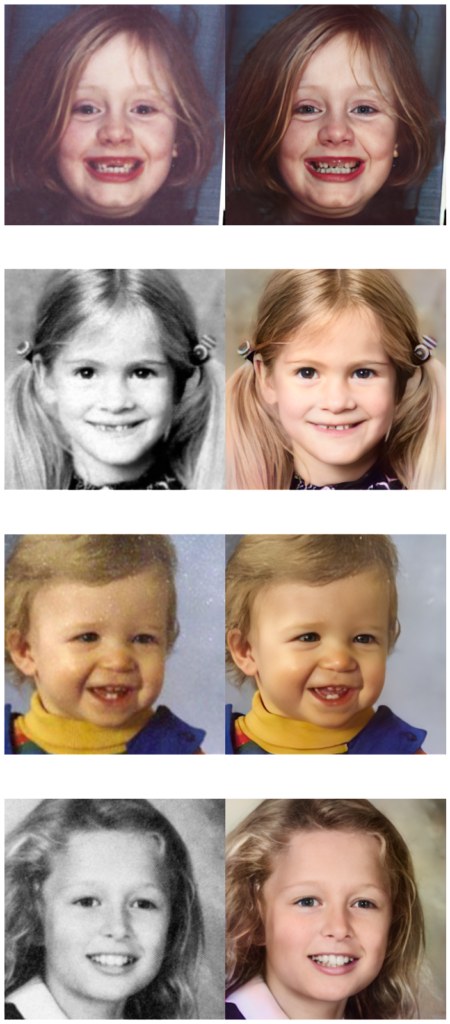

!ls resultsVisualizing the output, images on the left were our fed inputs and the images on the right show the processed output.

```python
import cv2
import matplotlib.pyplot as plt

# Helper: read an image with OpenCV and convert BGR to RGB for matplotlib
def imread(img_path):
    img = cv2.imread(img_path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    return img

# read the side-by-side comparison images
img1 = imread('results/cmp/Adele_crop_00.png')
img2 = imread('results/cmp/Julia_Roberts_crop_00.png')
img3 = imread('results/cmp/Justin_Timberlake_crop_00.png')
img4 = imread('results/cmp/Paris_Hilton_crop_00.png')

# show images stacked vertically in a 4x1 grid
fig = plt.figure(figsize=(15, 30))
ax1 = fig.add_subplot(4, 1, 1)
ax1.imshow(img1)
ax1.axis('off')
ax2 = fig.add_subplot(4, 1, 2)
ax2.imshow(img2)
ax2.axis('off')
ax3 = fig.add_subplot(4, 1, 3)
ax3.imshow(img3)
ax3.axis('off')
ax4 = fig.add_subplot(4, 1, 4)
ax4.imshow(img4)
ax4.axis('off')
```

Output:

As we can see from the output, several types of degradation, such as noise and loss of facial detail, were removed, and the faces were restored with enhanced color.

We can also apply the same approach to whole images, restoring just the facial areas within a low-quality input image.

# Feeding as input images to be restored

import cv2

import matplotlib.pyplot as plt

def imread(img_path):

img = cv2.imread(img_path)

img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

return img

# read images

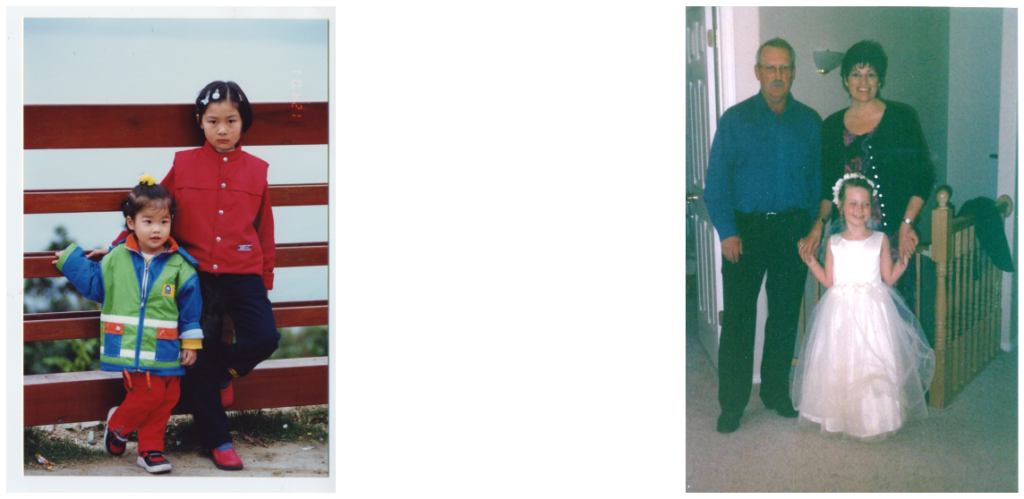

img1 = imread('inputs/whole_imgs/00.jpg')

img2 = imread('inputs/whole_imgs/10045.png')

# show images

fig = plt.figure(figsize=(25, 10))

ax1 = fig.add_subplot(1, 2, 1)

ax1.imshow(img1)

ax1.axis('off')

ax2 = fig.add_subplot(1, 2, 2)

ax2.imshow(img2)

ax2.axis('off')

Next, we apply restoration to the facial areas of these images using the following code:

```python
# Clear old results and run inference on the whole images
!rm -rf results
!python inference_gfpgan_full.py --model_path experiments/pretrained_models/GFPGANv1.pth --test_path inputs/whole_imgs

# List the comparison results
!ls results/cmp

# Visualizing the results
import cv2
import matplotlib.pyplot as plt

# Helper: read an image with OpenCV and convert BGR to RGB for matplotlib
def imread(img_path):
    img = cv2.imread(img_path)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    return img

# read images (one comparison image per detected face)
img1 = imread('results/cmp/00_00.png')
img2 = imread('results/cmp/00_01.png')
img3 = imread('results/cmp/10045_02.png')
img4 = imread('results/cmp/10045_01.png')

# show images stacked vertically in a 4x1 grid
fig = plt.figure(figsize=(15, 30))
ax1 = fig.add_subplot(4, 1, 1)
ax1.imshow(img1)
ax1.axis('off')
ax2 = fig.add_subplot(4, 1, 2)
ax2.imshow(img2)
ax2.axis('off')
ax3 = fig.add_subplot(4, 1, 3)
ax3.imshow(img3)
ax3.axis('off')
ax4 = fig.add_subplot(4, 1, 4)
ax4.imshow(img4)
ax4.axis('off')
```

Output :
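The comparison images in `results/cmp` follow the pattern `<input name>_<face index>.png` (for example `00_00.png` and `00_01.png` for the two faces found in `00.jpg`), so instead of hard-coding filenames, they could be collected per input. The helper below is a hypothetical, standard-library-only sketch that assumes this naming pattern holds.

```python
from collections import defaultdict
from pathlib import Path

def group_comparisons(cmp_dir):
    """Group comparison images by the input image they came from.

    Assumes files are named '<input stem>_<face index>.png',
    e.g. 'Adele_crop_00.png' or '10045_02.png'.
    """
    groups = defaultdict(list)
    for path in sorted(Path(cmp_dir).glob("*.png")):
        stem, _, face_idx = path.stem.rpartition("_")
        if stem and face_idx.isdigit():
            groups[stem].append(path)
    return dict(groups)

# Usage: print the restored faces found for each input (empty if the folder is missing).
for name, paths in group_comparisons("results/cmp").items():
    print(name, [p.name for p in paths])
```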

EndNotes

In this article, we looked at how image restoration works, a vital part of image processing. We also implemented an image restoration model based on the Generative Facial Prior and used it to restore degraded images. I recommend trying the same on more complex degraded images to further explore the model's capabilities.

The above implementation is available as a colab notebook, which can be accessed using the link here.

Happy Learning!