Natural Language Processing (NLP) has many interesting applications, and text generation is one of them. When a sequence model such as a Recurrent Neural Network (RNN), a Long Short-Term Memory (LSTM) network, or a Gated Recurrent Unit (GRU) is trained on text sequences, it can generate the continuation of an input text. PyTorch provides a set of powerful tools and libraries that support these NLP tasks. They reduce the amount of pre-processing required and help speed up training.

In this article, we will train a Recurrent Neural Network (RNN) in PyTorch on names from several languages. After training, the model will generate a name in a chosen language that starts with a given letter.

Implementation in PyTorch

This implementation was done in Google Colab, with the dataset fetched from Google Drive. So, first, we will mount Google Drive in the Colab notebook.

    from google.colab import drive
    drive.mount('/content/gdrive')

Now, we will import all the required libraries.

    from __future__ import unicode_literals, print_function, division
    from io import open
    import glob
    import os
    import unicodedata
    import string
    import torch
    import torch.nn as nn
    import random
    import time
    import math
    import matplotlib.pyplot as plt
    import matplotlib.ticker as ticker

The below code snippet will read the dataset.

    # Characters the model can produce; one extra index is reserved for EOS
    all_let = string.ascii_letters + " .,;'-"
    n_let = len(all_let) + 1

    def getFiles(path):
        return glob.glob(path)

    # Unicode string to ASCII
    def unicodeToAscii(s):
        return ''.join(
            c for c in unicodedata.normalize('NFD', s)
            if unicodedata.category(c) != 'Mn'
            and c in all_let
        )

    # Read a file and split into lines
    def getLines(filename):
        lines = open(filename, encoding='utf-8').read().strip().split('\n')
        return [unicodeToAscii(line) for line in lines]

    # Build the cat_lin dictionary, a list of lines per category
    cat_lin = {}
    all_ctg = []
    for filename in getFiles('gdrive/My Drive/Dataset/data/data/names/*.txt'):
        categ = os.path.splitext(os.path.basename(filename))[0]
        all_ctg.append(categ)
        lines = getLines(filename)
        cat_lin[categ] = lines

    n_ctg = len(all_ctg)

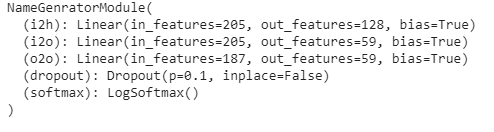
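To illustrate what the normalization step in the reading code does, here is a small self-contained sketch that applies the same logic to sample names. The sample strings are assumptions chosen for illustration and are not taken from the dataset.

```python
import string
import unicodedata

# Same character set as in the article: letters plus a few punctuation marks
all_let = string.ascii_letters + " .,;'-"

def unicode_to_ascii(s):
    # NFD splits an accented character into a base letter plus combining
    # marks (category 'Mn'); dropping the marks leaves the plain letter
    return ''.join(
        c for c in unicodedata.normalize('NFD', s)
        if unicodedata.category(c) != 'Mn' and c in all_let
    )

for sample in ['Ślusàrski', "O'Néàl"]:  # hypothetical inputs
    print(sample, '->', unicode_to_ascii(sample))
```

Characters that are not in `all_let` after normalization are dropped entirely, so the model only ever sees the fixed alphabet defined above.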

In the next step, we will define the module class to generate the names. The module will be a recurrent neural network.

    class NameGeneratorModule(nn.Module):
        def __init__(self, inp_size, hid_size, op_size):
            super(NameGeneratorModule, self).__init__()
            self.hid_size = hid_size

            # Both layers see the category, the current letter and the previous hidden state
            self.i2h = nn.Linear(n_ctg + inp_size + hid_size, hid_size)
            self.i2o = nn.Linear(n_ctg + inp_size + hid_size, op_size)
            self.o2o = nn.Linear(hid_size + op_size, op_size)
            self.dropout = nn.Dropout(0.1)
            self.softmax = nn.LogSoftmax(dim=1)

        def forward(self, category, input, hidden):
            inp_comb = torch.cat((category, input, hidden), 1)
            hidden = self.i2h(inp_comb)
            output = self.i2o(inp_comb)
            op_comb = torch.cat((hidden, output), 1)
            output = self.o2o(op_comb)
            output = self.dropout(output)
            output = self.softmax(output)
            return output, hidden

        def initHidden(self):
            return torch.zeros(1, self.hid_size)
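The forward pass of this module can also be written out as equations. This is a reading of the code with notation chosen here for illustration: $c$ is the one-hot category vector, $x_t$ the one-hot input letter at step $t$, $h_{t-1}$ the previous hidden state, and $[\,\cdot\,;\,\cdot\,]$ denotes concatenation.

```latex
\begin{aligned}
h_t &= W_{i2h}\,[c;\, x_t;\, h_{t-1}] + b_{i2h} \\
o_t &= W_{i2o}\,[c;\, x_t;\, h_{t-1}] + b_{i2o} \\
y_t &= \operatorname{LogSoftmax}\!\big(\operatorname{Dropout}(W_{o2o}\,[h_t;\, o_t] + b_{o2o})\big)
\end{aligned}
```

As coded, the hidden state update is a plain linear map with no nonlinearity such as tanh applied to it, and $y_t$ holds log-probabilities over the alphabet plus the end-of-sequence marker.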

The functions below will be used to pick a random item from a list and a random line from a random category.

    def randChoice(l):
        return l[random.randint(0, len(l) - 1)]

    def randTrainPair():
        category = randChoice(all_ctg)
        line = randChoice(cat_lin[category])
        return category, line

The below functions will convert the data to the compatible format for the RNN module.

    # One-hot vector for the category
    def categ_Tensor(categ):
        li = all_ctg.index(categ)
        tensor = torch.zeros(1, n_ctg)
        tensor[0][li] = 1
        return tensor

    # One-hot matrix of first to last letters (not including EOS) for the input
    def inp_Tensor(line):
        tensor = torch.zeros(len(line), 1, n_let)
        for li in range(len(line)):
            letter = line[li]
            tensor[li][0][all_let.find(letter)] = 1
        return tensor

    # LongTensor of second letter to end (EOS) for the target
    def tgt_Tensor(line):
        letter_id = [all_let.find(line[li]) for li in range(1, len(line))]
        letter_id.append(n_let - 1) # EOS
        return torch.LongTensor(letter_id)
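To make the relationship between input and target concrete, the following sketch computes only the letter indices, without building tensors. It is a simplified illustration in plain Python; the helper names `input_indices` and `target_indices` and the example name are assumptions introduced here.

```python
import string

all_let = string.ascii_letters + " .,;'-"
n_let = len(all_let) + 1   # the last index is reserved for EOS
EOS = n_let - 1

def input_indices(line):
    # Position of the 1 in each one-hot input vector
    return [all_let.find(ch) for ch in line]

def target_indices(line):
    # Targets are the inputs shifted left by one letter, ending with EOS
    return [all_let.find(ch) for ch in line[1:]] + [EOS]

name = "Kim"  # hypothetical example name
print(input_indices(name))   # [36, 8, 12]
print(target_indices(name))  # [8, 12, 58]
```

At each step the network receives one letter and is trained to predict the next one, so the target for the final letter is the EOS index.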

The below function will create random training examples including category, input and target tensors.

    def rand_train_exp():
        category, line = randTrainPair()
        category_tensor = categ_Tensor(category)
        input_line_tensor = inp_Tensor(line)
        target_line_tensor = tgt_Tensor(line)
        return category_tensor, input_line_tensor, target_line_tensor

The code below defines the loss criterion, the learning rate, and the training function for the RNN module.

    # Loss
    criterion = nn.NLLLoss()
    # Learning rate
    lr_rate = 0.0005

    def train(category_tensor, input_line_tensor, target_line_tensor):
        target_line_tensor.unsqueeze_(-1)
        hidden = model.initHidden()

        model.zero_grad()

        # Accumulate the loss over every letter of the name
        loss = 0
        for i in range(input_line_tensor.size(0)):
            output, hidden = model(category_tensor, input_line_tensor[i], hidden)
            l = criterion(output, target_line_tensor[i])
            loss += l

        loss.backward()

        # Manual gradient descent step on every parameter
        for p in model.parameters():
            p.data.add_(p.grad.data, alpha=-lr_rate)

        return output, loss.item() / input_line_tensor.size(0)
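As a rough illustration of what the loss measures at each step, the sketch below computes a log-softmax and the negative log-likelihood of a target index by hand, then averages over steps as the training function does. It is a plain-Python approximation of the idea, not the PyTorch implementation; the scores and function names are assumptions made up for the example.

```python
import math

def log_softmax(scores):
    # Numerically stable log-softmax over a list of raw scores
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_sum for s in scores]

def nll(log_probs, target):
    # Negative log-likelihood of the target index
    return -log_probs[target]

scores = [2.0, 0.5, 0.1]          # hypothetical raw scores for three symbols
log_probs = log_softmax(scores)

# Loss is low when the target is the highest-scoring symbol, high otherwise
step_losses = [nll(log_probs, 0), nll(log_probs, 2)]
print(step_losses)
print(sum(step_losses) / len(step_losses))  # average loss per step
```

The training function sums these per-letter losses before calling `backward()`, and divides by the name length only for reporting.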

To show the elapsed time during training, the function below is defined.

    def time_taken(since):
        now = time.time()
        s = now - since
        m = math.floor(s / 60)
        s -= m * 60
        return '%dm %ds' % (m, s)

In the next step, we will define our RNN model.

    model = NameGeneratorModule(n_let, 128, n_let)

We will see the parameters of the defined RNN model.

print(model)

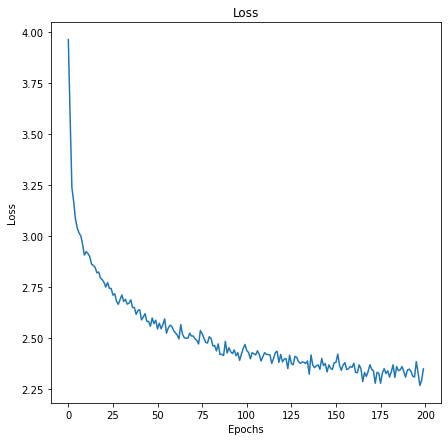

In the next step, the model will be trained for 100,000 iterations (named epochs in the code), each on one randomly chosen name.

    epochs = 100000
    print_every = 5000
    plot_every = 500
    all_losses = []
    total_loss = 0 # Reset every plot_every iters

    start = time.time()

    for iter in range(1, epochs + 1):
        output, loss = train(*rand_train_exp())
        total_loss += loss

        if iter % print_every == 0:
            print('Time: %s, Epoch: (%d - Total Iterations: %d%%),  Loss: %.4f' % (time_taken(start), iter, iter / epochs * 100, loss))

        if iter % plot_every == 0:
            all_losses.append(total_loss / plot_every)
            total_loss = 0
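The loop above records one averaged loss value per `plot_every` iterations rather than every raw loss, which smooths the curve. The bookkeeping can be sketched in isolation as follows; the function name `windowed_means` and the sample losses are assumptions for illustration.

```python
def windowed_means(losses, window):
    # Average the losses in consecutive, non-overlapping windows,
    # resetting the running total after each window
    means, total = [], 0.0
    for i, loss in enumerate(losses, start=1):
        total += loss
        if i % window == 0:
            means.append(total / window)
            total = 0.0
    return means

losses = [4.0, 3.0, 2.0, 1.0, 1.0, 1.0]  # hypothetical per-iteration losses
print(windowed_means(losses, 2))          # [3.5, 1.5, 1.0]
```

With 100,000 iterations and a window of 500, this yields 200 points for the loss plot.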

We will visualize the training loss.

    plt.figure(figsize=(7,7))
    plt.title("Loss")
    plt.plot(all_losses)
    plt.xlabel("Epochs")
    plt.ylabel("Loss")
    plt.show()

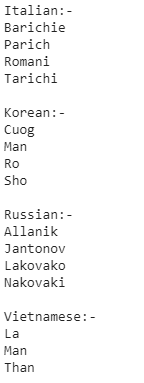

Finally, we will sample from our model to test it on generating names for a language when given a starting letter.

    max_length = 20

    # Sample from a category and starting letter
    def sample_model(category, start_letter='A'):
        with torch.no_grad():  # no need to track history in sampling
            category_tensor = categ_Tensor(category)
            input = inp_Tensor(start_letter)
            hidden = model.initHidden()

            output_name = start_letter

            for i in range(max_length):
                output, hidden = model(category_tensor, input[0], hidden)
                # Pick the most likely next symbol
                topv, topi = output.topk(1)
                topi = topi[0][0]
                if topi == n_let - 1:  # EOS: stop generating
                    break
                else:
                    letter = all_let[topi]
                    output_name += letter
                input = inp_Tensor(letter)

            return output_name

    # Get multiple samples from one category and multiple starting letters
    def sample_names(category, start_letters='XYZ'):
        for start_letter in start_letters:
            print(sample_model(category, start_letter))
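The sampling loop above is a greedy decoder: at each step it takes the single most likely next symbol and stops at EOS or after `max_length` steps. The sketch below shows the same control flow with a hypothetical lookup table standing in for the trained model. Unlike the real RNN, this stand-in has no hidden state and looks only at the current letter.

```python
EOS = "<EOS>"

# Hypothetical stand-in for the trained model: for each current letter,
# scores for the possible next symbols (higher means more likely)
next_scores = {
    "A": {"n": 0.7, "l": 0.2, EOS: 0.1},
    "n": {"a": 0.6, "n": 0.1, EOS: 0.3},
    "a": {EOS: 0.8, "n": 0.2},
}

def greedy_sample(start_letter, max_length=20):
    name = start_letter
    current = start_letter
    for _ in range(max_length):
        scores = next_scores[current]
        best = max(scores, key=scores.get)  # plays the role of topk(1)
        if best == EOS:
            break
        name += best
        current = best
    return name

print(greedy_sample("A"))  # Ana
```

Because the choice at every step is the top-scoring symbol, the same starting letter leads to the same name whenever the scores are the same.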

Now, we will use the sampling functions to generate names for a given language and a set of starting letters.

    print("Italian:-")
    sample_names('Italian', 'BPRT')
    print("\nKorean:-")
    sample_names('Korean', 'CMRS')
    print("\nRussian:-")
    sample_names('Russian', 'AJLN')
    print("\nVietnamese:-")
    sample_names('Vietnamese', 'LMT')

So, as we can see above, our model has generated names for each language category, each starting with the given letter.
