Beyond the agent and its environment, a reinforcement learning system has four main elements:

- A policy

- A reward signal

- A value function, and

- Optionally, a model of the environment

An agent’s behaviour at any given time is defined by its policy. A policy is like a blueprint of the connections between perception and action in an environment: it maps the states the agent perceives to the actions it takes in those states.

In the next section, we discuss the key differences between the two main kinds of policy learning:

- On-policy reinforcement learning

- Off-policy reinforcement learning

On-Policy vs. Off-Policy

Comparing reinforcement learning models, for example during hyperparameter optimization, is expensive and often practically infeasible. The performance of these algorithms is therefore usually evaluated through on-policy interactions with the target environment: the agent runs the policy it is learning, and these interactions give insight into the kind of policy the agent is actually implementing.

An off-policy learner, by contrast, learns the value of a policy independently of the actions the agent actually takes. It can find the optimal policy regardless of how the agent behaves while it explores. Q-learning, for example, is an off-policy learner.

On-policy methods attempt to evaluate or improve the policy that is used to make decisions. In contrast, off-policy methods evaluate or improve a policy different from that used to generate the data.
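To make the distinction concrete, here is a minimal Python sketch of two policies over a list of estimated action values. The function names `greedy` and `epsilon_greedy` and the list-based representation are assumptions chosen for illustration. An on-policy method would evaluate and improve `epsilon_greedy` itself, whereas an off-policy method can act with `epsilon_greedy` (the behavior policy) while learning about `greedy` (the target policy).

```python
import random
from typing import List


def greedy(q_values: List[float]) -> int:
    """Target policy: pick the action with the highest estimated value
    (ties go to the lowest index)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def epsilon_greedy(q_values: List[float], epsilon: float, rng: random.Random) -> int:
    """Behavior policy: with probability epsilon pick a uniformly random
    action, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return greedy(q_values)


rng = random.Random(0)
q = [0.1, 0.5, 0.2]
print(greedy(q))                    # → 1
print(epsilon_greedy(q, 0.1, rng))  # usually 1, occasionally a random action
```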

Here is a snippet from Richard Sutton’s book on reinforcement learning, where he discusses off-policy and on-policy learning in terms of Q-learning and SARSA, respectively:

Off-policy

In Q-learning, the agent learns the optimal policy with the help of a greedy policy, while it behaves according to a different policy, such as an exploratory one or even the policy of another agent. Q-learning is called off-policy because the policy being updated (the greedy target policy) is different from the behavior policy. In other words, it estimates the return of future actions and assigns a value to the new state without actually following the greedy policy.
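The Q-learning update can be sketched in a few lines of Python. This is a minimal tabular sketch, assuming states and actions are small integers and `Q` is a list of lists; the function name `q_learning_update` and its parameters (`alpha` for the step size, `gamma` for the discount factor) are hypothetical. The key off-policy detail is the `max` over next-state values: the target follows the greedy policy even if the agent's next action will be exploratory.

```python
from typing import List


def q_learning_update(
    Q: List[List[float]],
    s: int, a: int, r: float, s_next: int,
    alpha: float, gamma: float, terminal: bool = False,
) -> None:
    """Apply one Q-learning update in place for the transition (s, a, r, s_next)."""
    # Off-policy target: bootstrap from the best action in s_next (the greedy
    # target policy), whatever the behavior policy actually does next.
    # Terminal transitions have no future value.
    target = r if terminal else r + gamma * max(Q[s_next])
    # Move Q(s, a) a fraction alpha toward the target.
    Q[s][a] += alpha * (target - Q[s][a])


Q = [[0.0, 0.0], [1.0, 3.0]]
q_learning_update(Q, s=0, a=0, r=1.0, s_next=1, alpha=0.5, gamma=0.9)
print(Q[0][0])  # about 1.85
```

Here the target is 1 + 0.9 × 3 = 3.7, the largest value available in state 1, so `Q[0][0]` moves halfway from 0 toward it.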

On-policy

SARSA (state-action-reward-state-action) is an on-policy reinforcement learning algorithm that estimates the value of the policy being followed. In this algorithm, the agent learns a policy and uses that same policy to act. Unlike in Q-learning, the policy used for updating and the policy used for acting are the same, which makes SARSA an example of on-policy learning.

An experience in SARSA is a tuple ⟨S, A, R, S’, A’⟩, consisting of:

- the current state S,

- the current action A,

- the reward R,

- the new state S’, and

- the next action A’, chosen in S’ by the same policy.

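Each such experience provides a sample for moving Q(S, A) toward the target R + γQ(S’, A’), where γ is the discount factor. With a step size α, the update is

Q(S, A) ← Q(S, A) + α[R + γQ(S’, A’) − Q(S, A)].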
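Under a hypothetical tabular setup (integer states and actions, `Q` as a list of lists, step size `alpha`, discount `gamma`), the SARSA update differs from Q-learning only in its target: it uses Q(S’, A’) for the action A’ the agent actually selected, instead of the maximum over actions. The function name `sarsa_update` is an assumption for illustration, not a library API.

```python
from typing import List


def sarsa_update(
    Q: List[List[float]],
    s: int, a: int, r: float, s_next: int, a_next: int,
    alpha: float, gamma: float, terminal: bool = False,
) -> None:
    """Apply one SARSA update in place from the experience <S, A, R, S', A'>."""
    # On-policy target: bootstrap from the action actually chosen in s_next
    # by the policy being learned; terminal transitions have no future value.
    target = r if terminal else r + gamma * Q[s_next][a_next]
    # Move Q(S, A) a fraction alpha toward the target.
    Q[s][a] += alpha * (target - Q[s][a])


Q = [[0.0, 0.0], [1.0, 3.0]]
# Suppose the exploring policy picked A' = 0 in state 1, not the greedy action 1.
sarsa_update(Q, s=0, a=0, r=1.0, s_next=1, a_next=0, alpha=0.5, gamma=0.9)
print(Q[0][0])
```

The target here is 1 + 0.9 × 1 = 1.9, so `Q[0][0]` becomes about 0.95. Q-learning, which bootstraps from the greedy action in state 1, would have moved the same entry to about 1.85 from the same transition.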

Conclusion

On-policy reinforcement learning is useful when you want to optimize the value of the policy the agent actually follows while it explores. For offline learning, where the agent learns from previously collected experience and does not explore much, off-policy RL may be more appropriate.

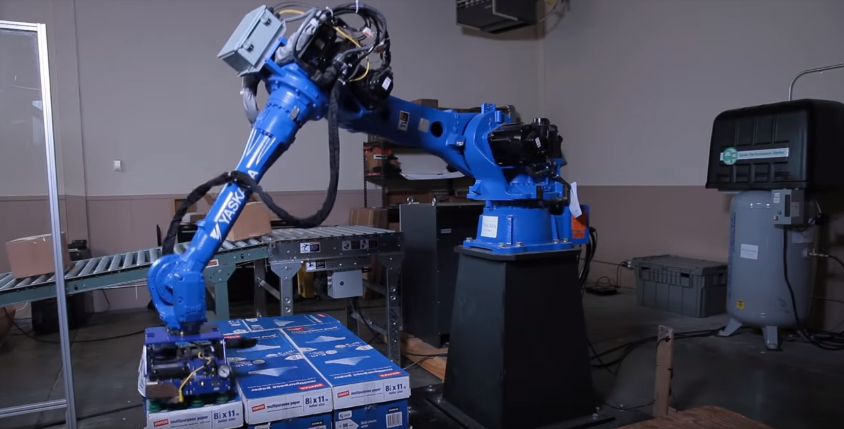

For instance, off-policy classification is good at predicting movement in robotics. Off-policy learning can be very cost-effective when it comes to real-world reinforcement learning deployments. The agent’s ability to explore, find new strategies, and account for future rewards makes it a suitable candidate for tasks that demand flexibility. Imagine a robotic arm that has been tasked to paint something other than what it was trained on. Physical systems need such flexibility to be smart and reliable. You do not want to hard-code every use case in advance; the goal is to learn on the go.

However, off-policy frameworks are not without disadvantages. Evaluation becomes challenging because there is so much exploration. These algorithms may assume that an off-policy evaluation method accurately assesses performance, but agents trained on past experience may act very differently from newly learned agents, which makes it hard to obtain good performance estimates.
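One common family of off-policy evaluation methods uses importance sampling, which reweights returns observed under the behavior policy by how likely the target policy would have been to take the same actions. The sketch below is a minimal ordinary importance-sampling estimator, a technique described in Sutton and Barto's book, not a method from this article; the names `importance_sampling_estimate`, `target_prob`, and `behavior_prob`, and the (state, action, reward) episode format, are assumptions. Because the per-step ratios are multiplied over an episode, the weights can become very large or zero when the two policies differ, which is one concrete reason good performance estimates are hard to obtain.

```python
from typing import Callable, List, Tuple

# Hypothetical episode format: a list of (state, action, reward) steps.
Episode = List[Tuple[int, int, float]]
ProbFn = Callable[[int, int], float]  # returns pi(a | s) or b(a | s)


def importance_sampling_estimate(
    episodes: List[Episode],
    target_prob: ProbFn,
    behavior_prob: ProbFn,
    gamma: float = 1.0,
) -> float:
    """Ordinary importance-sampling estimate of the target policy's expected
    return, using episodes generated by the behavior policy.
    Assumes behavior_prob(s, a) > 0 for every action that appears."""
    total = 0.0
    for episode in episodes:
        rho = 1.0       # product of ratios pi(a|s) / b(a|s) over the episode
        ret = 0.0       # discounted return of the episode
        discount = 1.0
        for s, a, r in episode:
            rho *= target_prob(s, a) / behavior_prob(s, a)
            ret += discount * r
            discount *= gamma
        total += rho * ret
    return total / len(episodes)


def behavior(s: int, a: int) -> float:
    return 0.5  # uniform over two actions


def target(s: int, a: int) -> float:
    return 1.0 if a == 1 else 0.0  # always choose action 1


episodes = [[(0, 1, 1.0)], [(0, 0, 0.0)], [(0, 1, 1.0)], [(0, 0, 0.0)]]
print(importance_sampling_estimate(episodes, target, behavior))  # → 1.0
```

In this toy example the episodes that took action 1 receive weight 2 and the others weight 0, so the estimate matches the target policy's true return of 1.0 even though half the data came from actions the target policy never takes.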

Promising directions for future work include developing off-policy methods that are not restricted to tasks with binary success-or-failure rewards, and extending the analysis to stochastic tasks as well.

For more information, see Richard Sutton and Andrew Barto’s book, Reinforcement Learning: An Introduction.