Diffusion models have replaced GANs as the de facto approach to image generation over the past year. However, deeply embedded unsuitable concepts such as nudity, violence and misattributed artwork have impeded their widespread use, until now.

Researchers have found a way to remove these concepts from the ‘memory’ of diffusion algorithms. Using the power of the model itself to modify the weights of the neural network, researchers can make models forget concepts, artistic styles, or even objects. This could be the opening diffusion models need to reach mainstream adoption.

How does it work?

Titled ‘Erasing Concepts from Diffusion Models’, the paper describes a fine-tuning method that edits model weights to remove certain concepts from memory. The authors claim it does this with minimal interference with other concepts in the latent space of the model. The approach can be useful in a variety of ways, with its primary goal being to make diffusion models safer, more private, and more compliant with copyright laws.

In the paper, the researchers demonstrated the method by removing the concept of ‘nudity’ from a model’s weights. This has the obvious benefit of making diffusion models safe for certain use cases, such as education, without having to add stringent filters and moderation endpoints after the model has been trained.

Instead of spending large amounts of time and compute to re-train a huge diffusion model, erasing concepts through fine-tuning can create a safe model with very little compute. In a twist of irony, researchers used the models’ own encyclopaedic knowledge against them.

By freezing a copy of the pre-trained model’s weights and using it to predict noise, the researchers were able to guide the model being fine-tuned in the opposite direction of a given forbidden prompt. They did this with classifier-free guidance, applied in reverse so that it pushes the model away from the concept rather than towards it. When this process is repeated over many fine-tuning steps, the model moves away from the prompt until its output no longer contains the concept.
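The idea of steering away from a prompt can be made concrete with a small numerical sketch. The article does not give the exact formula, so the version below is an assumption: it takes the usual classifier-free guidance expression and flips its sign, so the training target sits on the opposite side of the unconditioned prediction from the concept. The names `erasure_target`, `erasure_loss` and the guidance scale `eta` are hypothetical, and the small vectors stand in for the noise predictions a real diffusion model would produce.

```python
import numpy as np

def erasure_target(eps_uncond, eps_cond, eta=1.0):
    """Negatively guided noise target (assumed form, not taken from the article).

    eps_uncond: frozen model's noise prediction without the prompt
    eps_cond:   frozen model's noise prediction with the forbidden prompt
    eta:        hypothetical guidance scale; larger values push further away
    """
    return eps_uncond - eta * (eps_cond - eps_uncond)

def erasure_loss(eps_student_cond, eps_uncond, eps_cond, eta=1.0):
    """Mean-squared error between the fine-tuned model's prompted prediction
    and the negatively guided target built from the frozen model."""
    target = erasure_target(eps_uncond, eps_cond, eta)
    return float(np.mean((eps_student_cond - target) ** 2))

# Toy stand-ins for the frozen model's noise predictions.
eps_uncond = np.array([0.0, 0.0])
eps_cond = np.array([1.0, 0.5])

# The target lies on the opposite side of the unconditioned prediction.
target = erasure_target(eps_uncond, eps_cond, eta=1.0)

# Before fine-tuning the student matches the frozen model, so the loss is high;
# once the student reproduces the target, the loss is zero.
loss_before = erasure_loss(eps_cond, eps_uncond, eps_cond, eta=1.0)
loss_after = erasure_loss(target, eps_uncond, eps_cond, eta=1.0)
```

In a real setup the student would be a trainable copy of the diffusion model, and this loss would be minimised by gradient descent over many sampled noise levels and latents; that training loop is omitted here.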

By analogy, the process is similar to helping a child balance on a bicycle. Whenever the model moves towards the forbidden concept, which corresponds to falling over in this example, the person guiding the cycle (the researchers) pushes it back to its centre point. As the child (the model) slowly learns to balance, the tendency to lose balance (the forbidden prompt) is gradually erased.

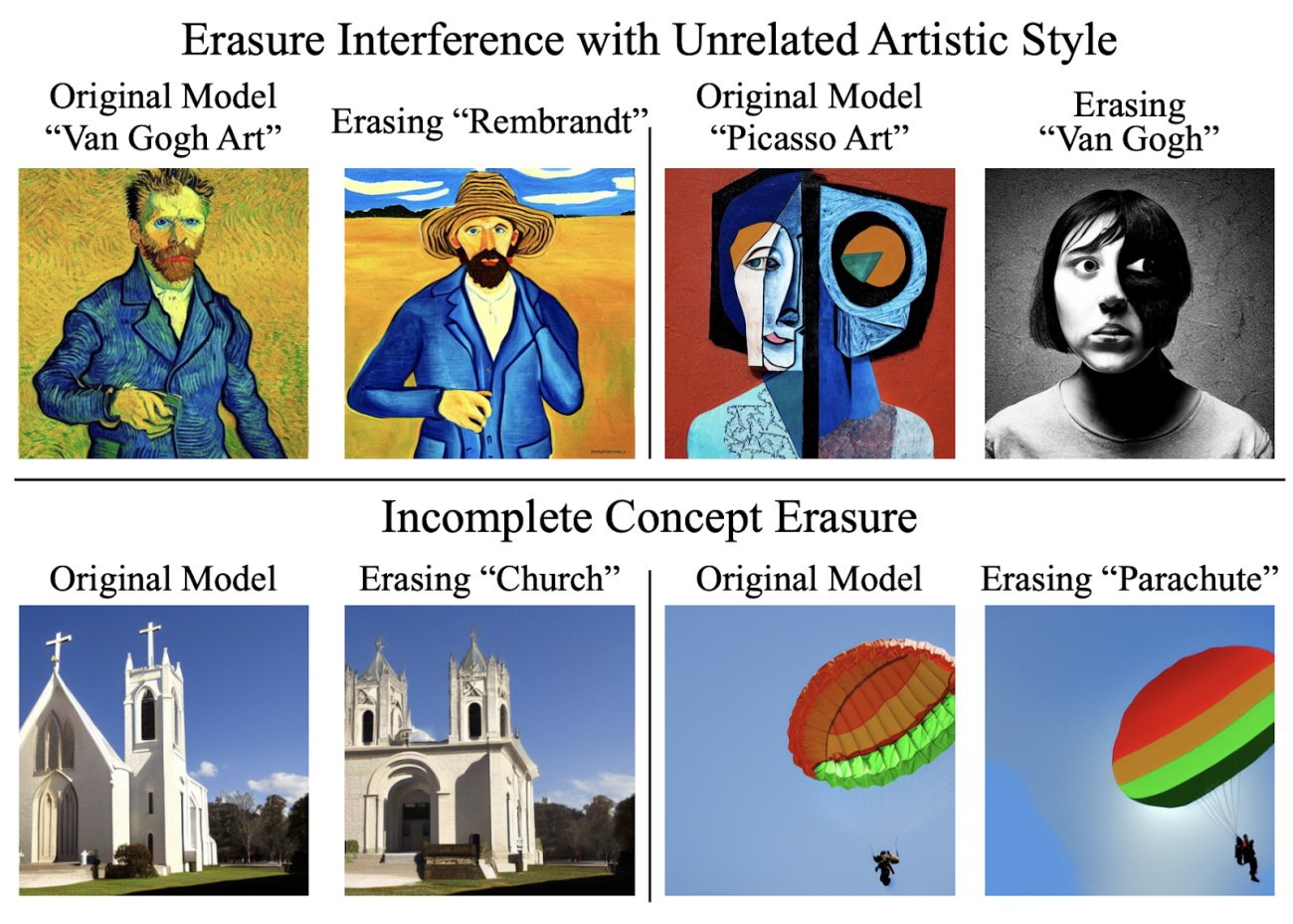

As with any method, this one has a few limitations. In some cases where a concept was present across a large part of the dataset, erasing it interfered with unrelated concepts. Even so, the method could make diffusion models a more palatable form of generative AI by addressing some of their shortcomings.

Can it fix diffusion?

In the past, researchers have tackled this problem by building an entirely different model. Known as Safe Latent Diffusion, this model is a fork of Stable Diffusion 1.5 and is trained to exclude images that ‘might be offensive, insulting, threatening, or might otherwise cause anxiety’.

While that approach was successful, the new method promises to be faster and more practical, as it doesn’t require the whole model to be retrained. Selectively erasing certain concepts from the algorithm’s ‘memory’ also has many applications beyond removing nudity.

Removing these so-called forbidden concepts is a huge step forward for diffusion models. Due to the black-box nature of neural networks, it was previously not possible to safely remove such concepts from a model without extensive pre-processing to filter bad data out of the training set. With this method, state-of-the-art algorithms that derive much of their value from large datasets can be tweaked to remove the concepts without degrading their performance or efficacy.

This approach can also tackle another common drawback of diffusion models: attribution. One of the biggest problems that artists have with diffusion models is that they ‘steal’ art and ‘remix’ it without any way of determining who should be credited. With this new method, it is possible to remove a certain artist or art style from the memory of the model completely.

Take this diagram, for instance. The researchers were able to remove the artistic styles of Vincent van Gogh, Edvard Munch, Johannes Vermeer, and Hokusai from the memory of the model. This resulted in an output that resembled the original image, albeit without the signature style of the artist who made it.

This tackles one of the biggest problems associated with diffusion-based models. When combined with other projects such as Stable Attribution, this approach might pave the way for diffusion models that don’t steal artwork.