Motion-sensing technology has long been a candidate for integration into as many devices as possible. For years, it has been built into automatic doors in buildings, which open when they sense a person walking towards them, and into motion-sensing gaming consoles. For some time now, Google has been working on integrating motion-sensing technology into its products, particularly smartphones, where users can perform functions such as skipping songs with gestures.

Soli is the technology behind Google’s Motion Sense, which enables close-range, touchless interaction with smartphones.

How Soli Works

Google combined custom-built machine learning models with a dedicated data collection pipeline to build a robust gesture recognition system. This system lets Soli recognise a variety of movements and translate them into input for gesture-driven features, for example, Quick Gestures on the Pixel 4.

Soli in the Pixel 4 is based on short-range radar. The team developed miniature radar systems, new sensing paradigms and algorithms from scratch, specifically for perceiving human interactions.

How a traditional radar works: A radar’s primary function is to detect objects within its range and measure their properties from the way they interact with radio waves. A traditional radar system includes a transmitter that emits radio waves, which are scattered or redirected when they hit objects within range. Some of that energy is reflected back and intercepted by the radar’s receiver. From these received waveforms, the radar system can detect the presence of objects and estimate specific attributes, such as their size and distance.
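To make the distance estimate concrete, here is a minimal Python sketch of how a radar can turn an echo’s round-trip time delay into a distance. It assumes the wave travels at the speed of light and ignores any processing delay in the hardware; the function name `range_from_delay` is hypothetical and not part of any Soli or Google API.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_delay(round_trip_delay_s: float) -> float:
    """Estimate the distance to a reflector from the echo's round-trip delay.

    The wave travels to the object and back again, so the one-way
    distance is half of (speed of light * delay).
    """
    if round_trip_delay_s < 0:
        raise ValueError("delay must be non-negative")
    return SPEED_OF_LIGHT * round_trip_delay_s / 2.0


if __name__ == "__main__":
    # An echo arriving 2 nanoseconds after transmission.
    delay = 2.0e-9
    print(f"{range_from_delay(delay):.3f} m")
```

A 2 ns round trip works out to roughly 30 cm, which is why hand-scale distances demand timing (or equivalent frequency) measurements at nanosecond resolution.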

Soli’s radar emits electromagnetic waves in a broad beam. When an object such as a hand comes within range and interacts with the waves, scattering the beam, it reflects part of the energy back to the radar antenna.

Properties of the reflected signal, such as its energy, frequency shift and time delay, carry rich information that helps Soli identify the object’s characteristics and behaviour: its size, shape, orientation, distance, material and velocity.
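The frequency shift mentioned above is the Doppler effect, and it maps directly to how fast the object moves towards or away from the radar. The sketch below assumes a 60 GHz carrier, the band Soli is commonly described as using; treat that value, and the function name `radial_velocity`, as illustrative assumptions rather than Pixel 4 specifications.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second

# Assumed carrier frequency (illustrative; Soli is commonly described
# as a 60 GHz radar).
CARRIER_HZ = 60e9


def radial_velocity(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Convert a measured Doppler shift into the target's radial velocity.

    For a radar whose transmitter and receiver sit together, the echo is
    shifted by f_d = 2 * v / wavelength, so v = f_d * wavelength / 2.
    A positive result means the target is approaching the radar.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return doppler_shift_hz * wavelength / 2.0


if __name__ == "__main__":
    # A 200 Hz shift on a 60 GHz carrier.
    print(f"{radial_velocity(200.0):.3f} m/s")
```

At 60 GHz the wavelength is about 5 mm, so a 200 Hz shift corresponds to roughly 0.5 m/s, the speed of an unhurried hand movement.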

Soli processes these signal variations as the object moves. Tracking how the object’s size, orientation, shape, distance, material and velocity change makes it possible to distinguish one movement from another, which is crucial for recognising gestures.

In other words, Soli detects and resolves motion by processing the temporal changes in the signals reflected from a moving or gesturing object. The animation below, which Google explains in detail, shows Soli resolving the range (distance) and velocity of objects.

[Animation: Soli resolving the range and velocity of moving objects]
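One common way radar systems expose distance and velocity over time is a range-Doppler map, and the sketch below builds one from synthetic data. It assumes an FMCW-style radar whose samples are arranged as chirps (slow time) by samples per chirp (fast time); this is a textbook technique, not Soli’s published pipeline, and the helper names and the point-target signal are hypothetical. A plain DFT is used so the example needs only the standard library.

```python
import cmath
import math


def dft(values):
    """Plain discrete Fourier transform (O(n^2)); fine for tiny examples."""
    n = len(values)
    return [
        sum(values[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def range_doppler_map(frame):
    """Turn one frame of radar samples into a range-Doppler magnitude map.

    frame[m][n] holds sample n (fast time) of chirp m (slow time).
    A DFT along each chirp resolves range; a second DFT across chirps,
    per range bin, resolves the phase change caused by motion (Doppler).
    """
    # Step 1: range DFT along fast time, one chirp at a time.
    range_profiles = [dft(chirp) for chirp in frame]
    num_chirps = len(range_profiles)
    num_range_bins = len(range_profiles[0])
    # Step 2: Doppler DFT along slow time, one range bin at a time.
    rd = [[0.0] * num_range_bins for _ in range(num_chirps)]
    for r in range(num_range_bins):
        column = [range_profiles[m][r] for m in range(num_chirps)]
        for d, value in enumerate(dft(column)):
            rd[d][r] = abs(value)
    return rd


def synthetic_frame(range_bin, doppler_bin, num_chirps=8, num_samples=16):
    """Hypothetical beat signal for a single point target."""
    return [
        [
            cmath.exp(2j * math.pi * (range_bin * n / num_samples
                                      + doppler_bin * m / num_chirps))
            for n in range(num_samples)
        ]
        for m in range(num_chirps)
    ]


def strongest_cell(rd):
    """Return (doppler_bin, range_bin) of the brightest cell in the map."""
    return max(
        ((d, r) for d in range(len(rd)) for r in range(len(rd[0]))),
        key=lambda cell: rd[cell[0]][cell[1]],
    )


if __name__ == "__main__":
    rd = range_doppler_map(synthetic_frame(range_bin=5, doppler_bin=2))
    print(strongest_cell(rd))  # (2, 5)
```

Computing one such map per frame and watching how the bright cells move from frame to frame is what turns raw reflections into the temporal signal a gesture classifier can consume.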

The Machine Learning Model And The Challenges Associated With It

The resulting signals from Soli’s signal processing pipeline are then fed to Soli’s ML models for gesture classification, which are trained to recognise a variety of gestures accurately and with low latency.

Challenges: The Soli machine learning system faced two major problems. The first was the sheer variety of ways in which people perform gestures: the system had to recognise a very large number of unique variations.

The second was that the sensor encounters many extraneous movements within its range, and that when the phone itself moves, the entire environment appears to be moving from the sensor’s point of view.

Soli’s machine learning model consists of neural networks trained on millions of gestures recorded from Google volunteers. These were mixed with other radar recordings in which volunteers made generic motions near the device. Training on the myriad ways the gestures can be performed, alongside these generic motions, gave Soli’s model the robustness it needed to overcome both challenges.
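Soli’s actual models are neural networks; the toy sketch below swaps in a much simpler nearest-centroid classifier only to show why mixing in recordings of generic motions helps. Labelling those motions as their own "background" class gives the model somewhere to put extraneous movement instead of forcing it into a gesture class. The two features, the class names, the numbers and the functions are all invented for illustration.

```python
import math
from statistics import fmean

# Hypothetical 2-D features per recording:
# (mean radial velocity in m/s, normalised reflected energy).
# The values are invented for illustration; they are not Soli data.
TRAINING_DATA = {
    "approach": [(0.6, 0.8), (0.5, 0.9), (0.7, 0.7)],
    "withdraw": [(-0.6, 0.8), (-0.5, 0.7), (-0.7, 0.9)],
    # Generic, non-gesture motions recorded near the device.
    "background": [(0.05, 0.2), (-0.1, 0.3), (0.0, 0.1)],
}


def train_centroids(data):
    """Average each class's feature vectors into one centroid per label."""
    return {
        label: (fmean(v for v, _ in samples), fmean(e for _, e in samples))
        for label, samples in data.items()
    }


def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))


if __name__ == "__main__":
    centroids = train_centroids(TRAINING_DATA)
    # A small, incidental movement lands in "background" instead of
    # being forced into one of the gesture classes.
    print(classify((0.1, 0.25), centroids))
```

Without the "background" entry, the same small movement would have to be labelled as one of the gestures, which is exactly the kind of false trigger the extra recordings are meant to suppress.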

How Soli Fits Into A Mobile Phone

Classical radar systems rely on spatial resolution, distinguishing objects by their size and spatial structure. Fine spatial resolution requires large components, such as wide antenna apertures, so Soli’s team adopted a fundamentally different paradigm based on motion rather than spatial resolution.
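A back-of-the-envelope calculation shows why motion is the better fit for a phone. The sketch assumes a 60 GHz carrier and uses two rules of thumb: angular resolution is roughly wavelength divided by antenna aperture, while velocity resolution is wavelength divided by twice the observation time. The carrier value and the function names are illustrative assumptions.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second
CARRIER_HZ = 60e9  # assumed carrier frequency (illustrative)


def aperture_for_angular_resolution(resolution_deg, carrier_hz=CARRIER_HZ):
    """Rough antenna size (m) needed for a given angular resolution.

    Uses the rule of thumb theta ~= wavelength / D, solved for D.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength / math.radians(resolution_deg)


def velocity_resolution(observation_time_s, carrier_hz=CARRIER_HZ):
    """Doppler velocity resolution (m/s): delta_v = wavelength / (2 * T).

    Depends on how long the target is observed, not on antenna size.
    """
    wavelength = SPEED_OF_LIGHT / carrier_hz
    return wavelength / (2.0 * observation_time_s)


if __name__ == "__main__":
    print(f"aperture for 1 degree: {aperture_for_angular_resolution(1.0):.2f} m")
    print(f"velocity resolution over 0.1 s: {velocity_resolution(0.1):.4f} m/s")
```

Under these assumptions, separating objects 1 degree apart would need an antenna about 29 cm across, wider than any phone, whereas observing a hand for just 0.1 s already resolves speed differences of about 2.5 cm/s, with no dependence on antenna size.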

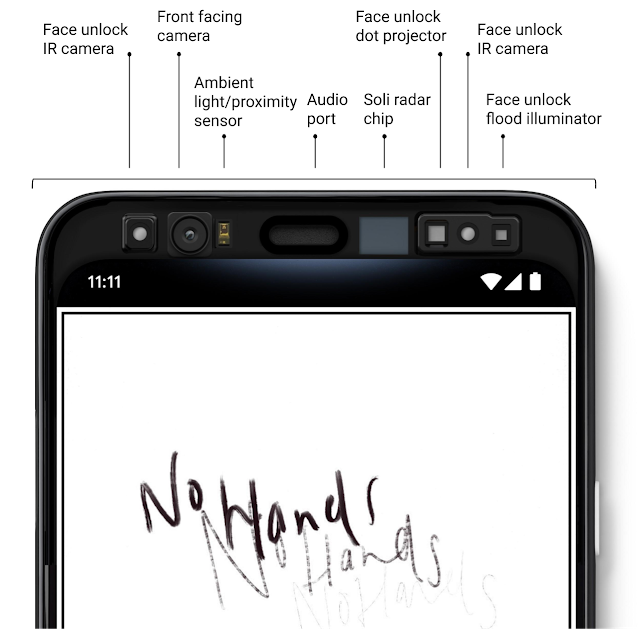

Thanks to this paradigm, Soli’s entire system fits into the top portion of a phone (the Google Pixel 4). The system has shrunk from its first prototype in 2014, which was the size of a desktop computer, to a 5.0 mm x 6.5 mm RFIC that includes the antennas.

Outlook

Until now, motion-sensing technology has been used mostly in games and to open or activate doors in buildings. According to Google and the Soli team, the Pixel 4 and Pixel 4 XL mark the first time radar-based machine perception has been demonstrated in a mobile phone. Google says that Motion Sense in Pixel smartphones and other devices shows that Soli’s potential can be extended to bring seamless context awareness and gesture recognition to both implicit and explicit interactions.