
2022 has been the year of generative AI, letting users generate almost anything from text. The year isn't even over yet, and on Thursday Stability AI announced the open-source release of Stable Diffusion 2.0.

Recently, NVIDIA entered the generative arena with its text-to-image model 'eDiffi' ('ensemble diffusion for images'), competing with Google's Imagen, Meta's 'Make-A-Scene' and others.

eDiffi offers text-to-image synthesis with intuitive 'paint with words' capabilities and instant style transfer. NVIDIA says it produces better synthesis quality than DALL·E 2 and the open-source Stable Diffusion.

Meanwhile, Amazon Web Services (AWS) is set to offer access to the well-known generative models BLOOM and Stable Diffusion through SageMaker JumpStart, its service for open-source, deployment-ready algorithms.


What’s new?

Stable Diffusion 2.0 brings several new features and improvements over the original V1 release. It includes robust text-to-image models trained with a new text encoder, OpenCLIP, developed by LAION with support from Stability AI, which improves the quality of the generated images. The models can generate images at default resolutions of 512×512 and 768×768 pixels.

Check out the release notes of Stable Diffusion 2.0 on GitHub.

The models are trained on an aesthetic subset of the LAION-5B dataset, created by the DeepFloyd team at Stability AI, which is further filtered to remove adult content using LAION's NSFW filter.

Unveiling Upscaler Diffusion Models

In addition to the text-to-image models, version 2.0 includes an Upscaler Diffusion model that increases image resolution by a factor of 4. For example, in the image below, a low-resolution generated image (128×128) has been upscaled to a higher resolution (512×512).

The company said that by combining this model with its text-to-image models, Stable Diffusion 2.0 can generate images with resolutions of 2048×2048 or even higher.
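To make the arithmetic concrete, "a factor of 4" here applies to each side of the image, so the pixel count grows 16-fold. The short sketch below only does that arithmetic. The helper names `upscale_resolution` and `pixel_ratio` are hypothetical and do not call any Stability AI model or API.

```python
# Hypothetical helpers: pure arithmetic, no Stable Diffusion model is run here.
UPSCALE_FACTOR = 4  # per-side factor described for the 2.0 Upscaler Diffusion model


def upscale_resolution(width: int, height: int, factor: int = UPSCALE_FACTOR) -> tuple[int, int]:
    """Return (width, height) after multiplying each side by `factor`."""
    return width * factor, height * factor


def pixel_ratio(factor: int = UPSCALE_FACTOR) -> int:
    """Scaling both sides by `factor` multiplies the total pixel count by factor**2."""
    return factor * factor


if __name__ == "__main__":
    print(upscale_resolution(128, 128))  # the 128x128 -> 512x512 example
    print(upscale_resolution(512, 512))  # a 512x512 generation upscaled to 2048x2048
    print(pixel_ratio())                 # 16x more pixels
```

This is why a default 512×512 generation followed by one upscaling pass lands at 2048×2048.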

The new depth-to-image model, depth2img, extends the image-to-image feature from V1 with new possibilities for creative applications.

Depth2img infers the depth of an input image using an existing model, then generates new images from both the text prompt and that depth information.

This lets users create new images that can look quite different from the original while still preserving its coherence and depth.

With upscaling to higher resolutions and depth2img, Stability AI believes version 2.0 will serve as the foundation for new applications and unlock a wave of creative potential in AI.

Why it matters

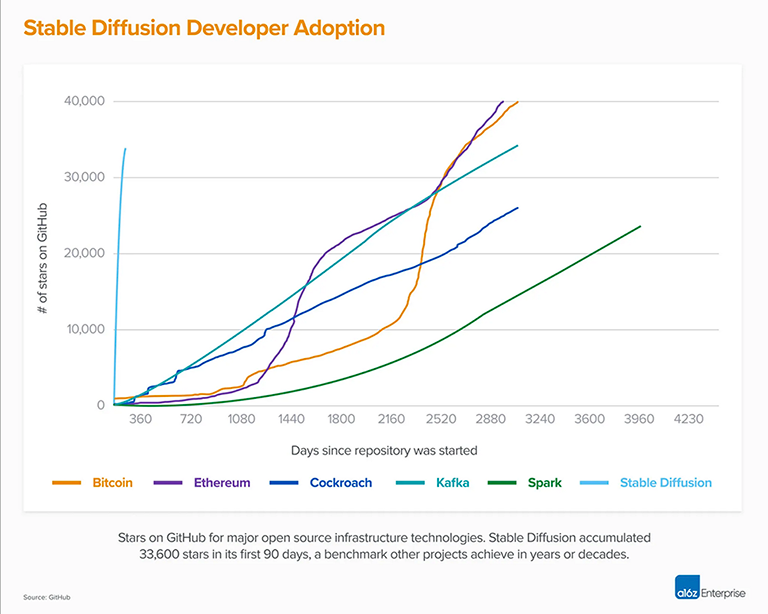

The original CompVis-led Stable Diffusion V1 changed the nature of open-source generative AI models and spawned hundreds of other projects around the world. In one of the fastest climbs to 10,000 GitHub stars of any software, V1 passed 33K stars in less than two months.

The original Stable Diffusion V1 release was led by Robin Rombach (Stability AI) and Patrick Esser (Runway ML) from the CompVis Group at LMU Munich, headed by Prof. Dr. Björn Ommer. It built on the team's prior work on Latent Diffusion Models and received support from EleutherAI and LAION. According to the blog, Rombach is now leading the next advances with Katherine Crowson to create the next generation of media models.