“Researchers at Stanford introduce a new algorithm that can help drones decide when and when not to offload their AI tasks.”

Autonomous vehicles, whether self-driving cars or drones, should ideally need to upload only 1 per cent of their visual data each day to help retrain their models. According to Intel, running a self-driving car for 90 minutes can generate more than four terabytes of data. Even in the ideal case of 1 per cent, that amounts to about 40 GB of data from a single vehicle. That is a lot of data to transfer before an ML model can crunch it and return insights that help the vehicle make the right decisions. Despite today's fast, compact chips, onboard computing remains a challenge, which leads to the problem of offloading data.
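The arithmetic behind the 40 GB figure can be checked with a short sketch. It assumes decimal units (1 TB = 10^12 bytes, 1 GB = 10^9 bytes); the function names and the 10 Mbit/s uplink used for the transfer-time estimate are illustrative assumptions, not figures from Intel or the researchers.

```python
TB = 10**12  # decimal terabyte, in bytes (assumed unit convention)
GB = 10**9   # decimal gigabyte, in bytes


def upload_bytes(generated_bytes: int, upload_percent: float) -> float:
    """Bytes to upload when only `upload_percent` of the data is sent."""
    return generated_bytes * upload_percent / 100


def transfer_seconds(payload_bytes: float, bandwidth_bits_per_s: float) -> float:
    """Time needed to send the payload over a link of the given bandwidth."""
    return payload_bytes * 8 / bandwidth_bits_per_s


generated = 4 * TB                          # roughly 90 minutes of driving
to_upload = upload_bytes(generated, 1)      # the "ideal" 1 per cent
upload_gb = to_upload / GB

# Hypothetical 10 Mbit/s uplink, chosen only to illustrate the scale.
hours_needed = transfer_seconds(to_upload, 10e6) / 3600

print(f"{upload_gb:.0f} GB to upload, about {hours_needed:.1f} h at 10 Mbit/s")
```

Under these assumptions, even the reduced 1 per cent share would keep such a link busy for several hours, which is why the choice of what to send matters.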

Low-powered robots such as drones require visual analysis for real-time navigation and continual learning to improve their recognition capabilities. Building systems for continual learning runs into the following bottlenecks (Chinchali et al.):

- network data transfer to compute servers

- limited robot and cloud storage

- dataset annotation with human supervision

- cloud computing for training models on massive datasets

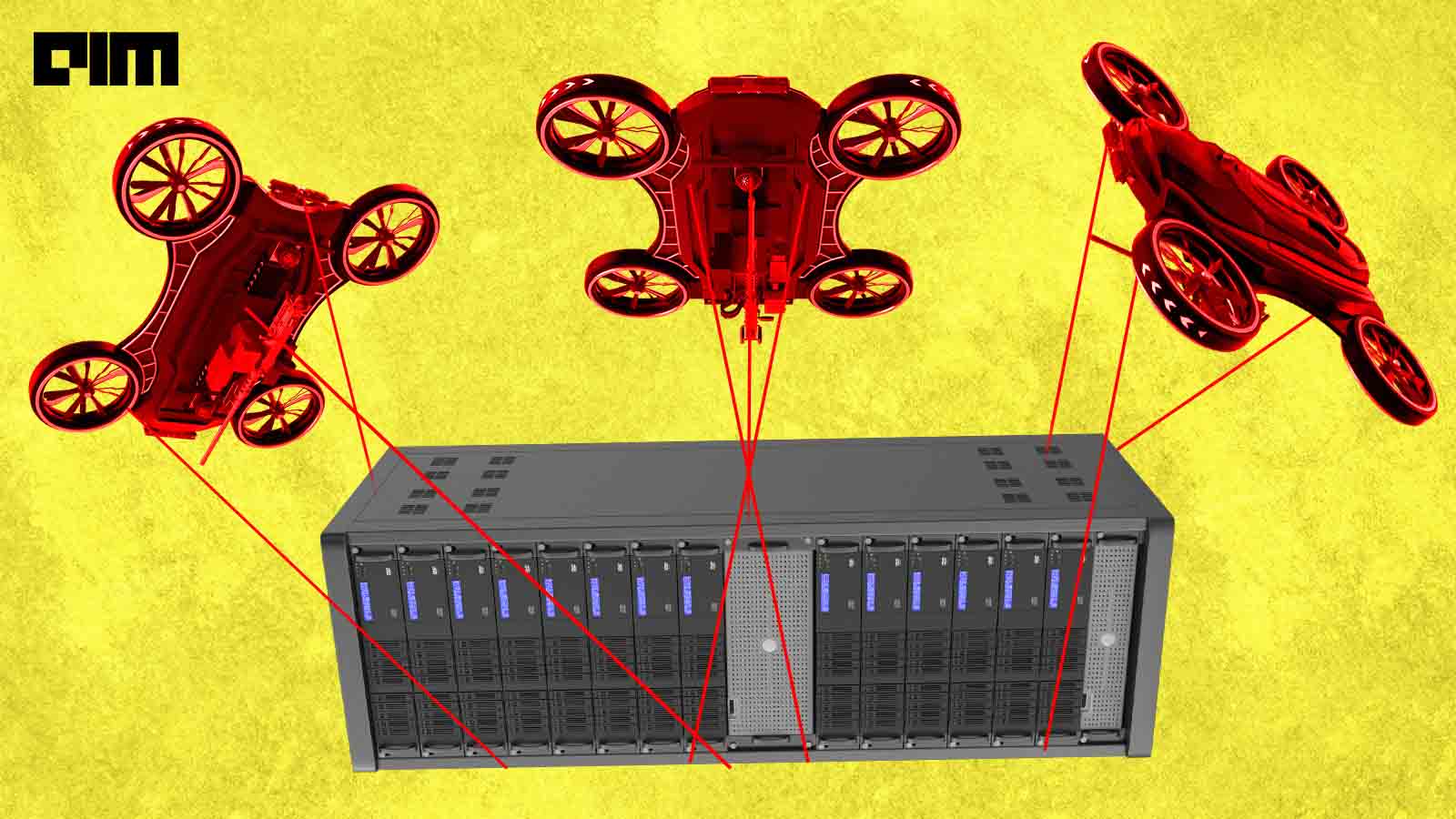

“Cloud robotics allows mobile robots the benefit of offloading compute-intensive models to centralized servers.”

To address these bottlenecks, the team at Stanford has released a series of papers detailing new algorithms that have improved the performance of low-powered robots such as drones. The researchers focussed their efforts on continual learning, which is especially computationally intensive and may require human intervention to annotate data when the robot encounters new visuals. One of their algorithms factors in the available bandwidth and the amount of data to be transferred to help robots make key offloading decisions.
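To make the idea of a bandwidth-aware offloading decision concrete, here is a minimal sketch. It is not the researchers' algorithm: it is a simple latency comparison, and every name in it (`OffloadInputs`, `should_offload`, the latency fields) is a hypothetical introduced for illustration. The only inputs taken from the description above are the available bandwidth and the amount of data to be transferred.

```python
from dataclasses import dataclass


@dataclass
class OffloadInputs:
    payload_bytes: int       # amount of data to be transferred
    bandwidth_bps: float     # currently available uplink bandwidth, bits/s
    local_latency_s: float   # assumed time to run the model on the robot
    cloud_latency_s: float   # assumed time to run the model in the cloud


def should_offload(x: OffloadInputs) -> bool:
    """Offload only if sending the data and computing remotely is faster."""
    if x.bandwidth_bps <= 0:
        return False  # no usable link, so the robot must compute locally
    transfer_s = x.payload_bytes * 8 / x.bandwidth_bps
    return transfer_s + x.cloud_latency_s < x.local_latency_s


# A 1 MB frame: worth offloading on a fast link, not on a slow one.
fast_link = OffloadInputs(1_000_000, 100e6, local_latency_s=0.5, cloud_latency_s=0.05)
slow_link = OffloadInputs(1_000_000, 1e6, local_latency_s=0.5, cloud_latency_s=0.05)

print(should_offload(fast_link))  # True
print(should_offload(slow_link))  # False
```

A fixed rule like this ignores how much accuracy the cloud model adds, which is the kind of trade-off a learned policy can take into account.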

As depicted above, the researchers implemented a co-design method where the on-robot computation is made more efficient with the help of a pre-trained, differentiable task module at a central server, which allows multiple robots to share an upfront cost of training the model and benefit from centralized model updates. The co-design in this context, stated the researchers, means that the pre-trained task network parameters are fixed, and the task objective guides what salient parts of a sensory stream to encode. The task module has an objective to operate with minimal sensory representation.

Addressing the latency caused by compute-intensive operations on drones falls under cloud robotics, where the aim is to have robots use remote servers to augment their grasping, object recognition, and mapping capabilities. The Stanford researchers and their peers used deep RL algorithms to handle the accuracy trade-offs between on-robot and in-cloud computation and arrive at an optimal solution. In this way, drones and other robots become capable of navigating new terrain without being bogged down by onboard computation. According to the researchers, experimental results show that performance in key vision tasks improved considerably, which will probably result in safer robots and autonomous vehicles that can navigate seamlessly in real-world scenarios.

From autonomous quadcopter drones that can survey a flooded area to robots that pick up rocks on the surface of Mars, recent advances in autonomous drone technology and machine learning image classification algorithms have enabled a wide range of aerial capabilities. “Imagine a future Mars or subterranean rover that captures high-bitrate video and LIDAR sensory streams as it charts uncertain terrain, some of which it cannot classify locally,” wrote the researchers.