Table of contents

- Transfer Learning

- Image Classification API of ML.NET

- Dataset used

- Model architecture used

- Prerequisites for the implementation

- Data Preparation

- Creating workspace directory

- Path definitions and variable initialization

- Data Loading

- Data Preprocessing

- Define model training pipeline

- Output

- Ways to improve model’s performance

- References

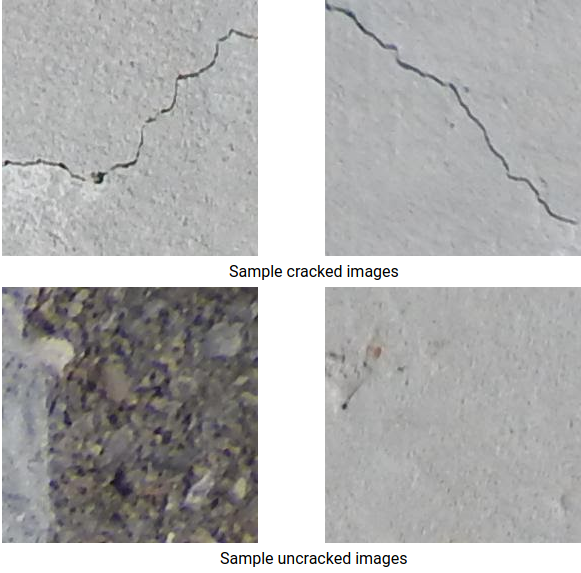

Image classification is a Computer Vision task which falls into the category of Supervised Learning. We train a model to label an input image with one of the prescribed target classes based on the already labelled images of the training set. Here, we have a dataset of images of concrete surfaces. The task is to create a C# .NET Core console application that uses transfer learning, a pretrained TensorFlow model and ML.NET’s Image Classification API to classify bridge deck surfaces as cracked or uncracked.

In our previous article, we introduced ML.NET, Microsoft's framework that lets .NET developers accomplish Machine Learning tasks. Let us now cover an important Deep Learning use case of ML.NET, viz. image classification using the TensorFlow library and the concept of transfer learning.

Transfer Learning

Not aware of the concept of transfer learning? Refer to this page before proceeding!

Image Classification API of ML.NET

The Image Classification API uses a low-level library called TensorFlow.NET (TF.NET), which provides .NET Standard bindings for the TensorFlow API in C#. It comes with a built-in high-level interface called TensorFlow.Keras.

Visit this GitHub repository for detailed information on TF.NET.

Model training using transfer learning and the Image Classification API is a dual-phase process. The two phases included are as follows:

- Bottleneck phase

The training set is loaded and the pixel values of those images are used as input for the frozen layers of the pre-trained model. The frozen layers consist of all the layers in the architecture up to the penultimate layer (also called the bottleneck layer). As no training actually occurs in these layers, they are referred to as ‘frozen’. It is in these layers that the lower-level patterns which help the model distinguish between the predefined target categories are computed. The bottleneck phase occurs only once and its results can be cached for later runs.

- Training phase

The output of the first phase is fed as input to the final layer of the model, which is retrained. This process is iterative, and the number of iterations is specified by the model parameters. Each iteration computes the model’s accuracy and loss values, based on which further adjustments are made to the model. At the end of the training phase, we get .zip and .pb formats of the model. It is preferable to use the .zip version in ML.NET-supported environments.
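The two phases can be illustrated with a toy sketch in plain C#. This is not how ML.NET or TensorFlow implement them internally: the "frozen layers" below are a hypothetical fixed function, the final layer is a single logistic unit, and all names (TwoPhaseSketch, FrozenLayers, ComputeBottlenecks, TrainFinalLayer) are made up for illustration. It only shows the idea that the frozen features are computed once and cached, while the final layer alone is trained repeatedly on the cached values.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TwoPhaseSketch
{
    // Stand-in for the frozen layers: a fixed function whose "weights" never change.
    public static double[] FrozenLayers(double[] pixels) =>
        new[] { pixels.Average(), pixels.Max() - pixels.Min() };

    // Bottleneck phase: every image passes through the frozen layers exactly once.
    public static List<double[]> ComputeBottlenecks(IEnumerable<double[]> images) =>
        images.Select(px => FrozenLayers(px)).ToList();

    // Output of the final layer: a logistic unit (the last weight is the bias).
    public static double Predict(double[] weights, double[] feature)
    {
        double z = weights[weights.Length - 1];
        for (int j = 0; j < feature.Length; j++)
            z += weights[j] * feature[j];
        return 1.0 / (1.0 + Math.Exp(-z));
    }

    // Training phase: only the final layer is updated, using the cached bottleneck values.
    public static double[] TrainFinalLayer(List<double[]> features, int[] labels,
                                           int epochs, double learningRate)
    {
        var weights = new double[features[0].Length + 1];
        for (int epoch = 0; epoch < epochs; epoch++)
        {
            for (int i = 0; i < features.Count; i++)
            {
                double error = Predict(weights, features[i]) - labels[i];
                for (int j = 0; j < features[i].Length; j++)
                    weights[j] -= learningRate * error * features[i][j];
                weights[weights.Length - 1] -= learningRate * error;
            }
        }
        return weights;
    }

    public static void Main()
    {
        // Four tiny made-up "images": uniform ones are class 0, high-contrast ones class 1.
        var images = new List<double[]>
        {
            new[] { 0.5, 0.5, 0.5, 0.5 },
            new[] { 0.6, 0.6, 0.6, 0.6 },
            new[] { 0.1, 0.9, 0.1, 0.9 },
            new[] { 0.0, 1.0, 0.2, 0.8 },
        };
        int[] labels = { 0, 0, 1, 1 };

        List<double[]> cached = ComputeBottlenecks(images);   // computed once
        double[] weights = TrainFinalLayer(cached, labels, epochs: 2000, learningRate: 0.5);

        for (int i = 0; i < cached.Count; i++)
            Console.WriteLine($"label={labels[i]}, probability of class 1={Predict(weights, cached[i]):F3}");
    }
}
```

Because the cached list is reused in every epoch, changing the number of epochs or the learning rate does not require recomputing the frozen features, which is the reason the bottleneck results are worth caching.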

Dataset used

The SDNET2018 dataset used here is an annotated dataset comprising more than 56,000 images of cracked and non-cracked concrete walls, bridge decks and pavements.

Source: Maguire, Marc; Dorafshan, Sattar; and Thomas, Robert J., “SDNET2018: A concrete crack image dataset for machine learning applications” (2018)

Click here to download the .zip file of the data.

The dataset has three subdirectories each containing images for one of the three types of structures:

D – for bridge decks

W – for walls

P – for pavements

Each of these subdirectories is further split into two subdirectories holding the images of cracked and uncracked surfaces, prefixed with ‘C’ and ‘U’ respectively.

Here, we are using only the ‘D’ subdirectory i.e. bridge deck images.

Model architecture used

We have used a part of the 101-layer variant of the Residual Network (ResNet) v2 model, whose original version takes 224 × 224 pixel images and classifies them into the appropriate categories.

Visit this page to understand the model in detail.

Prerequisites for the implementation

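- Use Visual Studio 2019 or a later version

- Or use Visual Studio 2017 version 15.6 or later with the .NET Core cross-platform development workload installed

Create your C# .NET Core console application and then install the Microsoft.ML NuGet Package. Click here for its installation. The code in this article also uses the Microsoft.ML.Vision and Microsoft.ML.ImageAnalytics packages, and the trainer needs the TensorFlow runtime provided by the SciSharp.TensorFlow.Redist package.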

Open the Program.cs file and replace the ‘using’ statements with the following ones:

using System;

using System.Collections.Generic;

using System.Linq;

using System.IO;

using Microsoft.ML;

using static Microsoft.ML.DataOperationsCatalog;

using Microsoft.ML.Vision;

Data Preparation

Unzip the ‘D’ subdirectory and copy it into a directory named ‘assets’ inside your project directory; this is the location the code below reads the images from.

Define the image data schema below the ‘Program’ class by creating a class, say ‘ImgData’ as follows:

class ImgData

{

//path of the image file

public string ImgPath { get; set; }

//category to which the image in ImgPath belongs to

public string Label { get; set; }

}

Define the input data schema by creating a class say InputData as follows:

class InputData

{

public byte[] Img { get; set; } //byte representation of image

public UInt32 LabelKey { get; set; } //numerical representation of label

public string ImgPath { get; set; } //path of the image

public string Label { get; set; }

}

Of the InputData properties, only ‘Img’ and ‘LabelKey’ are used for training and prediction. ‘ImgPath’ and ‘Label’ are included only to keep access to the original file name and the text representation of the label.

Define the output schema by creating a class, for example, Output as follows:

class Output

{

public string ImgPath { get; set; } //path of the image

public string Label { get; set; } //target category

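public string PredictedLabel { get; set; } //predicted label; the name must match the "PredictedLabel" column produced by the training pipeline

}

‘ImgPath’ and ‘Label’ properties here play the same roles as in the InputData class. Only the ‘PredictedLabel’ property holds the model's prediction.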

Creating workspace directory

If the training and validation data do not change frequently, cache the bottleneck values so that they can be reused in further runs. To store those values and the .pb version of the model, create a directory, say ‘workspace’, in your project.

Note: .pb stands for protobuf. In TensorFlow, a .pb file is required to run a trained model. It consists of the graph definition and the weights of the model.

Path definitions and variable initialization

Inside the Main method, define the path location of your assets, computed bottleneck values and .pb version of the model.

var projectDir = Path.GetFullPath(Path.Combine(AppContext.BaseDirectory, "../../../"));

var workspace = Path.Combine(projectDir, "workspace");

var assets = Path.Combine(projectDir, "assets");

Instantiate MLContext class.

MLContext myContext = new MLContext();

Data Loading

Create a LoadImagesFromDirectory utility method below the Main method. Since the data is distributed across two subdirectories (C-prefixed and U-prefixed), the method walks them and returns the data as a sequence of ‘ImgData’ objects.

public static IEnumerable<ImgData> LoadImagesFromDirectory(string folder, bool useFolderNameAsLabel = true)

{

//get all file paths from the subdirectories

var files = Directory.GetFiles(folder, "*",searchOption:

SearchOption.AllDirectories);

//iterate through each file

foreach (var file in files)

{

//Image Classification API supports .jpg and .png formats; check img formats

if ((Path.GetExtension(file)!=".jpg") &&

(Path.GetExtension(file)!= ".png"))

continue;

//store filename in a variable, say ‘label’

var label = Path.GetFileName(file);

/* If the useFolderNameAsLabel parameter is set to true, the name of the

parent directory of the image file is used as the label. Otherwise, the label is the leading run of letters in the file name. */

if (useFolderNameAsLabel)

label = Directory.GetParent(file).Name;

else

{

for (int index = 0; index < label.Length; index++)

{

if (!char.IsLetter(label[index]))

{

label = label.Substring(0, index);

break;

}

}

}

//create a new instance of ImgData()

yield return new ImgData()

{

ImgPath = file,

Label = label

};

}

}

Note: When a ‘yield return’ statement is reached in an iterator method in C#, the expression following it is returned and the current location in the code is retained. When the caller requests the next element, execution resumes from that location.
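The behaviour described in the note can be observed with a small standalone sketch. The class YieldSketch, its Labels method and the Log list are hypothetical names used only for this illustration; they are not part of the application above.

```csharp
using System;
using System.Collections.Generic;

public static class YieldSketch
{
    // Records each time the iterator body actually produces an item.
    public static readonly List<string> Log = new List<string>();

    public static IEnumerable<string> Labels(IEnumerable<string> names)
    {
        foreach (var name in names)
        {
            Log.Add("producing " + name);
            yield return name; // execution pauses here until the next item is requested
        }
    }

    public static void Main()
    {
        // Calling the iterator method does not run its body yet.
        IEnumerable<string> lazy = Labels(new[] { "CD", "UD", "CD" });
        Console.WriteLine($"after the call: {Log.Count} item(s) produced");

        using (IEnumerator<string> e = lazy.GetEnumerator())
        {
            e.MoveNext(); // runs the body up to the first yield return
            Console.WriteLine($"after first MoveNext: {Log.Count} item(s), current = {e.Current}");

            e.MoveNext(); // resumes right after the previous yield return
            Console.WriteLine($"after second MoveNext: {Log.Count} item(s), current = {e.Current}");
        }
    }
}
```

This laziness is why LoadImagesFromDirectory does not touch the image list until something, such as LoadFromEnumerable, enumerates its result.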

Get the list of images used for training using LoadImagesFromDirectory method.

IEnumerable<ImgData> imgs = LoadImagesFromDirectory(folder: assets, useFolderNameAsLabel: true);

Load those images into an IDataView using the LoadFromEnumerable() method.

IDataView imgData = myContext.Data.LoadFromEnumerable(imgs);

Data Preprocessing

Data gets loaded in the same order as it is read from the data subdirectories. Shuffle the data to add variance.

IDataView shuffle = myContext.Data.ShuffleRows(imgData);

ML models expect data in a numerical format. Preprocess the data by creating an EstimatorChain containing the MapValueToKey and LoadRawImageBytes transforms.

var preprocessingPipeline = myContext.Transforms.Conversion.MapValueToKey

/*takes the categorical value in the Label column, convert it to a numerical KeyType value and store it in a new column called LabelKey*/

(inputColumnName: "Label",

outputColumnName: "LabelKey")

/*take the values from the ImgPath column along with the imageFolder

parameter to load images for training the model*/

.Append(myContext.Transforms.LoadRawImageBytes(

outputColumnName: "Img",

imageFolder: assets,

inputColumnName: "ImgPath"));

Use the Fit() method to fit the preprocessingPipeline EstimatorChain to the shuffled data. The Transform() method is then applied to get an IDataView containing the pre-processed data.

IDataView preProcData = preprocessingPipeline.Fit(shuffle).Transform(shuffle);

Create train/validation/test dataset splits

TrainTestData trainSplit = myContext.Data.TrainTestSplit(data: preProcData, testFraction: 0.3);

TrainTestData validationTestSplit = myContext.Data.TrainTestSplit(trainSplit.TestSet);

testFraction: 0.3 means that 70% of the whole data forms the train set and the remaining 30% is held out. The second call uses the default testFraction of 0.1, so 90% of the held-out data is used for validation and the remaining 10% for testing.
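As a rough worked example of the two-stage split, the sketch below computes the expected row counts for a hypothetical total of 10,000 images. SplitSketch and Sizes are illustrative names, and the numbers are only approximate for a real run, since TrainTestSplit assigns rows randomly rather than by exact count.

```csharp
using System;

public static class SplitSketch
{
    // Expected (train, validation, test) sizes for two consecutive splits:
    // first hold out firstTestFraction of all rows, then take
    // secondTestFraction of the held-out rows as the test set.
    public static (int Train, int Validation, int Test) Sizes(
        int total, double firstTestFraction = 0.3, double secondTestFraction = 0.1)
    {
        int heldOut = (int)Math.Round(total * firstTestFraction);
        int test = (int)Math.Round(heldOut * secondTestFraction);
        return (total - heldOut, heldOut - test, test);
    }

    public static void Main()
    {
        var (train, validation, test) = Sizes(10000);
        // prints "train=7000, validation=2700, test=300"
        Console.WriteLine($"train={train}, validation={validation}, test={test}");
    }
}
```

In other words, roughly 70% of the images end up in the train set, 27% in the validation set and 3% in the test set.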

Create IDataView of each of the splits.

IDataView trainSet = trainSplit.TrainSet;

IDataView validationSet = validationTestSplit.TrainSet;

IDataView testSet = validationTestSplit.TestSet;

Define model training pipeline

Store required and optional parameters of ImageClassificationTrainer

var classifierOptions = new ImageClassificationTrainer.Options()

{

//input column for the model

FeatureColumnName = "Img",

//target variable column

LabelColumnName = "LabelKey",

//IDataView containing validation set

ValidationSet = validationSet,

//define pretrained model to be used

Arch = ImageClassificationTrainer.Architecture.ResnetV2101,

//track progress during model training

MetricsCallback = (metrics) => Console.WriteLine(metrics),

/*if TestOnTrainSet is set to true, model is evaluated against

Training set if validation set is not there*/

TestOnTrainSet = false,

//whether to use cached bottleneck values in further runs

ReuseTrainSetBottleneckCachedValues = true,

/*similar to ReuseTrainSetBottleneckCachedValues but for validation

set instead of train set*/

ReuseValidationSetBottleneckCachedValues = true,

//directory in which the bottleneck values and the .pb model are stored

WorkspacePath = workspace

};

Define the EstimatorChain training pipeline

var trainingPipeline = myContext.MulticlassClassification.Trainers.ImageClassification(classifierOptions).Append(myContext.Transforms.Conversion.MapKeyToValue("PredictedLabel"));

Fit the training data to the pipeline

ITransformer trainedModel = trainingPipeline.Fit(trainSet);

Create utility method to display predictions made by the model

private static void OutputPred(Output pred)

{

string imgName = Path.GetFileName(pred.ImgPath);

Console.WriteLine($"Image: {imgName} | Actual Value: {pred.Label} | Predicted Value: {pred.PredictedLabel}");

}

Make prediction for a single image

public static void ClassifyOneImg(MLContext myContext, IDataView data, ITransformer trainedModel)

{

PredictionEngine<InputData, Output> predEngine = myContext.Model.CreatePredictionEngine<InputData, Output>(trainedModel);

InputData image = myContext.Data.CreateEnumerable<InputData>(data,reuseRowObject:true).First();

Output prediction = predEngine.Predict(image);

//print predicted value

Console.WriteLine("Prediction for single image");

OutputPred(prediction);

}

Run ClassifyOneImg() in your application

ClassifyOneImg(myContext, testSet, trainedModel);

Make predictions for multiple images

public static void ClassifyMultiple(MLContext myContext, IDataView data, ITransformer trainedModel)

{

IDataView predictionData = trainedModel.Transform(data);

IEnumerable<Output> predictions =

myContext.Data.CreateEnumerable<Output>(predictionData, reuseRowObject:

true).Take(20); //20 images

Console.WriteLine("Prediction for multiple images");

foreach (var p in predictions)

{

OutputPred(p); //print predicted value of each image

}

}

Run ClassifyMultiple() in your application

ClassifyMultiple(myContext, testSet, trainedModel);

Run your console application

Output

The output will look something like this:

Bottleneck phase:

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 279

Phase: Bottleneck Computation, Dataset used: Train, Image Index: 280

Phase: Bottleneck Computation, Dataset used: Validation, Image Index: 1

Phase: Bottleneck Computation, Dataset used: Validation, Image Index: 2

Training phase:

Phase: Training, Dataset used: Validation, Batch Processed Count: 6, Epoch: 21, Accuracy: 0.6757613

Phase: Training, Dataset used: Validation, Batch Processed Count: 6, Epoch: 22, Accuracy: 0.7446856

Phase: Training, Dataset used: Validation, Batch Processed Count: 6, Epoch: 23, Accuracy: 0.7716660

Output of classification:

Prediction for single image

Image: 7001-220.jpg | Actual Value: UD | Predicted Value: UD

Prediction for multiple images

Image: 7001-220.jpg | Actual Value: UD | Predicted Value: UD

Image: 7001-163.jpg | Actual Value: UD | Predicted Value: UD

Image: 7001-210.jpg | Actual Value: UD | Predicted Value: UD

Image: 7004-125.jpg | Actual Value: CD | Predicted Value: UD
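Reading the predictions row by row gets tedious for larger samples. A minimal sketch of summarising them as an accuracy figure is given below; AccuracySketch and Accuracy are hypothetical helper names, and the four rows are the ones listed under "Prediction for multiple images" in the sample output.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class AccuracySketch
{
    // Fraction of rows whose predicted label equals the actual label.
    public static double Accuracy(IEnumerable<(string Actual, string Predicted)> rows)
    {
        var list = rows.ToList();
        if (list.Count == 0) return 0.0;
        return (double)list.Count(r => r.Actual == r.Predicted) / list.Count;
    }

    public static void Main()
    {
        // (actual, predicted) pairs copied from the sample output.
        var rows = new List<(string Actual, string Predicted)>
        {
            ("UD", "UD"),
            ("UD", "UD"),
            ("UD", "UD"),
            ("CD", "UD"),
        };
        // 3 of the 4 rows match
        Console.WriteLine($"Accuracy on the sample rows: {Accuracy(rows)}");
    }
}
```

Four rows are far too few to judge the model; the same calculation over the whole test set gives a more meaningful number.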

Ways to improve model’s performance

- Use more data from the dataset instead of just sticking to bridge deck images

- Try using some other model architecture

- Try varying the values of some hyperparameters

- Perform data augmentation

- Train for longer by increasing the number of epochs
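Several of these suggestions map onto fields of ImageClassificationTrainer.Options. The fragment below is a sketch only: TunedOptionsSketch and Create are hypothetical names, the column names "Img" and "LabelKey" are the ones produced by the preprocessing pipeline in this article, and the particular values (InceptionV3, 300 epochs, batch size 32, learning rate 0.005) are arbitrary illustrations, not recommendations.

```csharp
using Microsoft.ML;
using Microsoft.ML.Vision;

public static class TunedOptionsSketch
{
    // Builds trainer options with a different architecture and hyperparameters.
    // All values here are illustrative assumptions to experiment with.
    public static ImageClassificationTrainer.Options Create(IDataView validationSet) =>
        new ImageClassificationTrainer.Options
        {
            FeatureColumnName = "Img",
            LabelColumnName = "LabelKey",
            ValidationSet = validationSet,
            // try another pretrained architecture
            Arch = ImageClassificationTrainer.Architecture.InceptionV3,
            // train for more epochs
            Epoch = 300,
            // vary other hyperparameters
            BatchSize = 32,
            LearningRate = 0.005f
        };
}
```

The returned object can be passed to ImageClassification() in place of classifierOptions. Note that the cached bottleneck values depend on the architecture, so they should not be reused after changing Arch.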

References

Following are the sources used for the above-explained code and its implementation procedure: