Overfitting is a fundamental problem in supervised machine learning in which a model performs well on the data it was trained on but generalises poorly to unseen data. It arises from noise in the data, a small training set, and overly complex algorithms. In this article, we discuss different strategies for overcoming overfitting during the training stage. The following topics are covered.

Table of contents

- The “Mean” Overfitting

- Identifying overfitting

- Strategies to mitigate

- Dimensionality reduction

- Outlier treatment

- Cross-validation

- Early stopping

- Network reduction

- Data augmentation

- Feature selection

- Regularisation

- Hyperparameter tuning

Let’s start with the overview of overfitting in the machine learning model.

The “Mean” Overfitting

A model overfits when it memorises the specific details of the training data and fails to generalise. It is a modelling error: because the model is tied too closely to one data set, it captures that set’s noise along with its signal, which raises the model’s variance. Overfitting limits the model’s relevance to its own data set and makes it unreliable on other data sets.

Definition according to statistics

Given a hypothesis space, a hypothesis is said to overfit the training data if there exists some alternative hypothesis such that the first hypothesis has a smaller error than the alternative over the training examples, but the alternative has a smaller error over the entire distribution of instances.

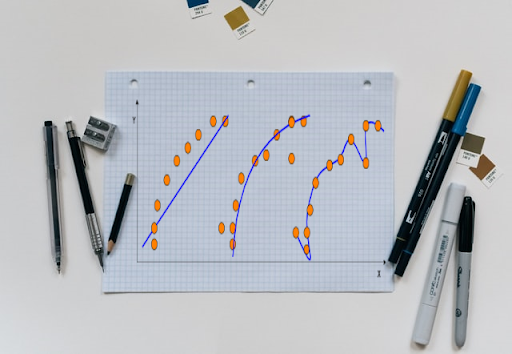

Identifying overfitting

Overfitting is almost impossible to detect before the model is evaluated on held-out data. During training, two errors are tracked: the training error and the validation error. If the training error keeps decreasing while the validation error decreases for a period and then starts to increase, the model is overfitting.
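The pattern described above can be checked mechanically once both errors are recorded per epoch. The following is a minimal sketch, not a standard routine: the function name `find_overfitting_onset` and the error values are made up for illustration.

```python
def find_overfitting_onset(train_errors, val_errors):
    """Return the epoch index with the lowest validation error, or None.

    None means the validation error never rose after its minimum, so the
    curves show no sign of overfitting.
    """
    if len(train_errors) != len(val_errors) or not val_errors:
        raise ValueError("need two non-empty error lists of equal length")
    best_epoch = min(range(len(val_errors)), key=val_errors.__getitem__)
    last = len(val_errors) - 1
    # Overfitting pattern: after the best epoch the validation error ends
    # higher while the training error ends lower.
    val_rose = val_errors[last] > val_errors[best_epoch]
    train_fell = train_errors[last] < train_errors[best_epoch]
    return best_epoch if (val_rose and train_fell) else None


# Hypothetical per-epoch errors, invented for this example.
train = [0.90, 0.60, 0.40, 0.30, 0.20, 0.10]
val = [0.95, 0.70, 0.55, 0.50, 0.58, 0.66]
onset = find_overfitting_onset(train, val)
print("validation error is lowest at epoch index", onset)
```

Real curves are noisier than this, so in practice one would usually smooth them or require several consecutive increases before drawing a conclusion.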

Let’s understand the mitigation strategies for this statistical problem.

Strategies to mitigate

Different mitigation techniques can be applied at different stages of a machine learning project.

Dimensionality of data

High-dimensional data can lead to overfitting because the number of observations is often much smaller than the number of features. The problem is then underdetermined: many different models fit the training data equally well.

Ways to mitigate

- Use techniques that reduce the effective dimensionality of the data, such as principal component analysis (PCA) and Lasso regression. Ridge regression shrinks coefficients but does not remove features.

- Drop features with a high number of missing values; if a specific column in a dataset has a high number of missing values, you may be able to remove it entirely without losing much information.

- Eliminate low-variance features; if the values in a particular column barely vary, you may be able to drop it because it is unlikely to provide as much relevant information about the response variable as other features.

- Drop features that correlate poorly with the response variable; a feature that is not substantially linked with the response variable of interest is unlikely to be helpful in a model and can be removed.
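The three filtering rules in the list above (missing values, low variance, weak correlation) can be sketched with NumPy as follows. The function name `select_columns` and all three thresholds are assumptions chosen for illustration, not recommended values.

```python
import numpy as np


def select_columns(X, y, max_missing=0.5, min_variance=1e-3, min_abs_corr=0.1):
    """Return the indices of the columns that pass all three filters.

    X is a 2-D float array in which NaN marks a missing value; y is the
    response. The thresholds are illustrative defaults only.
    """
    keep = []
    for j in range(X.shape[1]):
        col = X[:, j]
        present = ~np.isnan(col)
        # 1. Too many missing values.
        if 1.0 - present.mean() > max_missing:
            continue
        values, target = col[present], y[present]
        # 2. Nearly constant column.
        if values.var() < min_variance:
            continue
        # 3. Weak linear correlation with the response.
        corr = np.corrcoef(values, target)[0, 1]
        if abs(corr) < min_abs_corr:
            continue
        keep.append(j)
    return keep


y = np.arange(10, dtype=float)
mostly_missing = y.copy()
mostly_missing[2:] = np.nan                      # 80% missing
constant = np.full(10, 5.0)                      # zero variance
uncorrelated = np.array([1, -1, 1, -1, 1, 1, -1, 1, -1, 1], dtype=float)
X = np.column_stack([2 * y + 1, mostly_missing, constant, uncorrelated])

kept = select_columns(X, y)
print("columns kept:", kept)
```

Note that the correlation filter only detects linear relationships; a feature with a strong non-linear link to the response could be dropped by mistake.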

Outlier treatment

During data wrangling, one often encounters outliers in the data. Outliers increase the variance in the dataset, and a model that fits itself to them produces output with high variance and low bias. The bias-variance tradeoff is therefore disturbed.

Ways to mitigate

Depending on the circumstances, outliers either require particular attention or should be removed entirely. If the data set contains a significant number of outliers, it is critical to use a modelling approach that is resistant to outliers or to filter them out.
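One common way to filter outliers is the interquartile-range (IQR) rule. The article does not prescribe a particular rule, so the sketch below is only one possible choice; the multiplier `k=1.5` is the conventional default and the sample values are invented.

```python
import numpy as np


def iqr_filter(values, k=1.5):
    """Split values into (kept, outliers) using the IQR rule."""
    values = np.asarray(values, dtype=float)
    q1, q3 = np.percentile(values, [25, 75])
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    inside = (values >= lower) & (values <= upper)
    return values[inside], values[~inside]


# Hypothetical measurements with one obvious outlier.
data = [10, 12, 11, 13, 12, 11, 95, 10, 12, 13]
kept, outliers = iqr_filter(data)
print("kept:", kept)
print("outliers:", outliers)
```

Whether a flagged point should actually be removed is a judgment call: a value that is rare but genuine may carry exactly the information the model needs.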

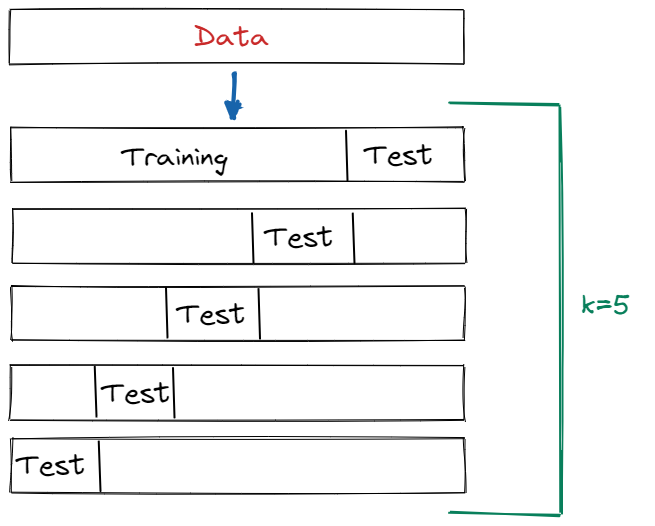

Cross-validation

Cross-validation is a resampling technique used to assess machine learning models on a limited sample of data. It is primarily used in applied machine learning to estimate a model’s skill on unseen data, that is, to use a limited sample to assess how the model will perform in general when it makes predictions on data that was not used during training.

Evaluation Procedure using K-fold cross-validation

- Divide the dataset into K equal parts (also known as “folds”).

- Use fold 1 as the testing set and the union of the other folds as the training set.

- Train the model on the training set and determine the testing accuracy.

- Repeat the previous two steps until each of the K folds has served as the testing set once.

- Use the average testing accuracy as an estimate of the out-of-sample accuracy.

This is the K-fold procedure; when K is 5, it is known as 5-fold cross-validation.
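The procedure above can be written out in a few lines of NumPy. This is a sketch under stated assumptions: the nearest-centroid classifier, the helper names and the synthetic two-cluster data are all invented for the example, and any model with a fit and a predict step could be plugged in instead.

```python
import numpy as np


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the sample indices and split them into k nearly equal folds."""
    rng = np.random.default_rng(seed)
    return np.array_split(rng.permutation(n_samples), k)


def cross_validate(X, y, fit, predict, k=5, seed=0):
    """Return (mean accuracy, per-fold accuracies) for k-fold cross-validation."""
    folds = k_fold_indices(len(y), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        # Every fold except fold i forms the training set.
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx])
        accuracy = np.mean(predict(model, X[test_idx]) == y[test_idx])
        scores.append(float(accuracy))
    return sum(scores) / k, scores


# A deliberately simple stand-in model: nearest class centroid.
def fit_centroids(X, y):
    classes = np.unique(y)
    return classes, np.array([X[y == c].mean(axis=0) for c in classes])


def predict_centroids(model, X):
    classes, centroids = model
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return classes[dists.argmin(axis=1)]


# Synthetic data: two well-separated clusters.
rng = np.random.default_rng(42)
X = np.vstack([rng.normal(0.0, 1.0, (50, 2)), rng.normal(5.0, 1.0, (50, 2))])
y = np.array([0] * 50 + [1] * 50)

mean_accuracy, fold_scores = cross_validate(X, y, fit_centroids, predict_centroids, k=5)
print("per-fold accuracy:", fold_scores)
print("mean accuracy:", mean_accuracy)
```

The indices are shuffled before splitting so that each fold contains a mix of both classes; without shuffling, data sorted by label would produce folds with a single class.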

Early stopping

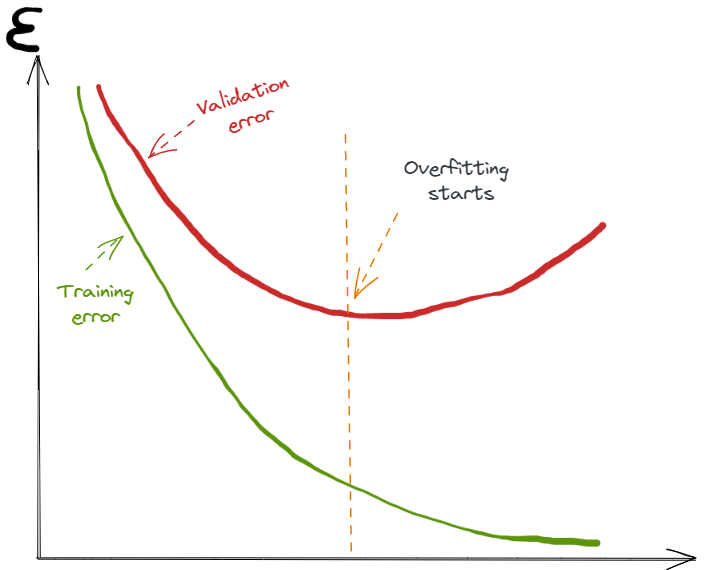

This method is used to prevent the “learning speed slow-down” problem: because the model starts to learn noise, the accuracy of an algorithm stops improving beyond a certain point, or even worsens.

In the picture, the green line represents the training error and the red line represents the validation error; the horizontal axis is the epoch and the vertical axis is the error. If the model continues to learn after the point where the validation error is lowest, the validation error will rise while the training error keeps falling. So the goal is to pinpoint the precise time at which to discontinue training. Stopping there gives an ideal fit between underfitting and overfitting.

Way to achieve the ideal fit

Compute the accuracy on held-out data after each epoch and stop training when it stops improving. Use the validation set to find a good set of values for the hyper-parameters, and reserve the test set for the final accuracy evaluation. Compared with using the test data directly to determine hyper-parameter values, this gives a better level of generality. Early stopping works because each step of an iterative algorithm tends to reduce bias while increasing variance, so halting at the right point balances the two.
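A minimal sketch of such a training loop is shown below. The `patience` parameter (how many epochs without improvement are tolerated) is an assumption that the article does not mention, but it is a common way to avoid stopping on a single noisy epoch. The callback `run_epoch` and the error values are hypothetical.

```python
def train_with_early_stopping(run_epoch, max_epochs=100, patience=3):
    """Run epochs until the validation error stops improving.

    run_epoch(epoch) is assumed to train for one epoch and return the
    validation error. Returns (best_epoch, best_error, epochs_run).
    """
    best_error = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    epochs_run = 0
    for epoch in range(max_epochs):
        val_error = run_epoch(epoch)
        epochs_run += 1
        if val_error < best_error:
            # New best: a real training loop would also save the weights here.
            best_error, best_epoch = val_error, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break
    return best_epoch, best_error, epochs_run


# Simulated validation errors standing in for a real training run.
simulated = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.60, 0.70, 0.80]
best_epoch, best_error, epochs_run = train_with_early_stopping(
    lambda epoch: simulated[epoch], max_epochs=len(simulated), patience=3
)
print(best_epoch, best_error, epochs_run)
```

After the loop ends, the model from `best_epoch` is the one to keep, not the model from the last epoch that was run.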

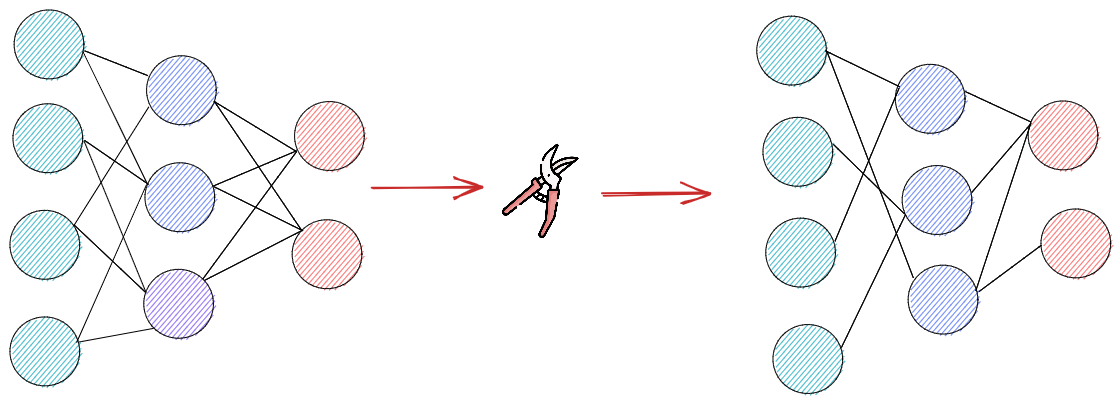

Network reduction

Noise reduction is a natural line of research for curbing overfitting. Based on this idea, pruning is recommended to reduce the size of the final classifiers in relational learning, particularly in decision tree learning. Pruning reduces classification complexity by removing less useful or irrelevant parts of the classifier, which helps prevent overfitting and increases classification accuracy. There are two types of pruning.

- Pre-pruning algorithms operate during the learning phase. Stopping criteria determine when to stop adding conditions to a rule or rules to a model description. Examples are an encoding length restriction based on encoding cost, significance testing based on differences between the distributions of positive and negative examples, and a cutoff criterion based on a predefined threshold.

- Post-pruning divides the training set into two subsets: the growing set and the pruning set. In contrast to pre-pruning algorithms, post-pruning algorithms ignore overfitting concerns while learning on the growing set. Instead, they avoid overfitting by removing conditions and rules from the model formed during learning. This method is far more accurate, but it is also less efficient.
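For decision trees, both styles of pruning can be tried with scikit-learn. This is a sketch under assumptions: `max_depth` acts as a simple pre-pruning stopping criterion, and `ccp_alpha` enables cost-complexity pruning, which is a post-pruning scheme but not the growing-set/pruning-set split described above. The dataset is synthetic and the parameter values are arbitrary.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic, noisy classification data (flip_y randomly flips some labels).
X, y = make_classification(
    n_samples=400, n_features=10, n_informative=4, flip_y=0.1, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

# Unpruned tree: grows until every leaf is pure.
full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)
# Pre-pruning: stop growing once the depth limit is reached.
pre_pruned = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)
# Post-pruning: grow fully, then cut back branches by cost-complexity.
post_pruned = DecisionTreeClassifier(ccp_alpha=0.01, random_state=0).fit(X_train, y_train)

for name, tree in [("full", full), ("pre-pruned", pre_pruned), ("post-pruned", post_pruned)]:
    print(
        name,
        "nodes:", tree.tree_.node_count,
        "train acc:", round(tree.score(X_train, y_train), 3),
        "test acc:", round(tree.score(X_test, y_test), 3),
    )
```

A gap between training and test accuracy that is large for the full tree and smaller for the pruned trees would be the signature of reduced overfitting; the exact numbers depend on the data.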

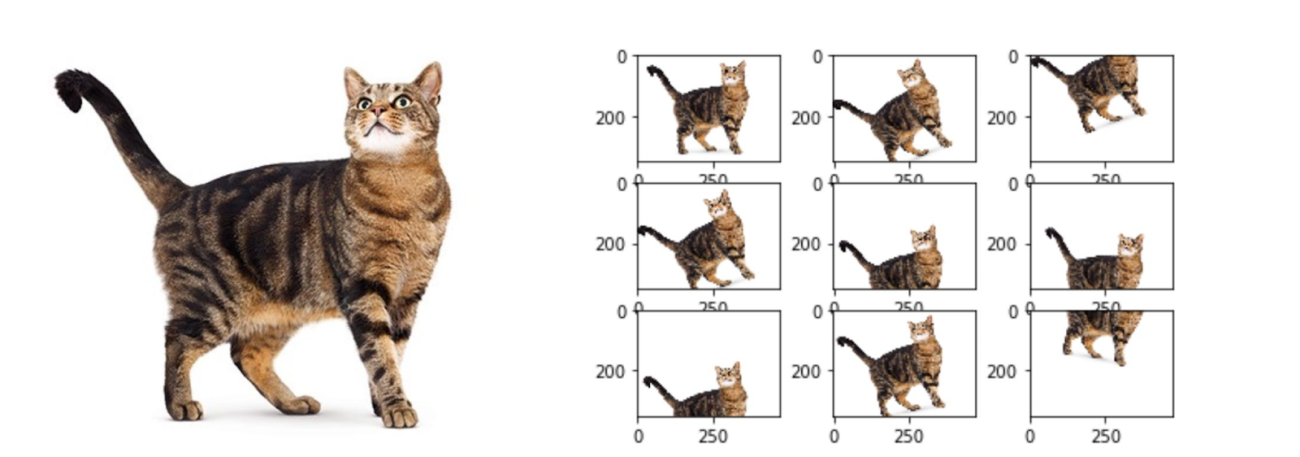

Data Augmentation

In many circumstances, the amount and quality of the training data have a considerable impact on machine learning performance, particularly in supervised learning. The model needs enough data to tune its parameters, and the number of samples required grows with the number of parameters.

In other words, an extended dataset can significantly enhance prediction accuracy, particularly in complex models. Existing data can be changed to produce new data. In summary, there are four basic techniques for increasing the training set.

- Collect more training data.

- Add random noise to the existing dataset.

- Derive additional data from the existing data set through some processing.

- Generate new data based on the distribution of the existing data set.
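Of the four techniques, adding random noise is the easiest to illustrate. The sketch below assumes numeric features and Gaussian noise scaled to each feature's standard deviation; the function name, the number of copies and the noise scale are illustrative choices rather than recommendations.

```python
import numpy as np


def augment_with_noise(X, y, copies=2, noise_scale=0.05, seed=0):
    """Return the original samples plus `copies` noisy duplicates of each."""
    rng = np.random.default_rng(seed)
    # Scale the noise per feature so it stays small relative to that
    # feature's spread.
    sigma = noise_scale * X.std(axis=0)
    noisy = [X + rng.normal(0.0, 1.0, size=X.shape) * sigma for _ in range(copies)]
    X_aug = np.vstack([X] + noisy)
    # Labels are unchanged: the noise is assumed too small to alter the class.
    y_aug = np.concatenate([y] * (copies + 1))
    return X_aug, y_aug


X = np.array([[1.0, 200.0], [2.0, 180.0], [3.0, 220.0], [4.0, 210.0]])
y = np.array([0, 0, 1, 1])
X_aug, y_aug = augment_with_noise(X, y)
print(X_aug.shape, y_aug.shape)
```

Augmentation should be applied to the training split only; noisy copies in the validation or test set would make the evaluation overly optimistic.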

Feature selection

When creating a predictive model, feature selection is the process of reducing the number of input variables. Limiting the number of input variables lowers the computational cost of modelling and, in some situations, improves the model’s performance.

The following are some prominent feature selection strategies in machine learning:

- Information gain measures how much information a feature provides about the target value, expressed as the reduction in entropy. For feature selection, the information gain of each feature is calculated with respect to the target values.

- The chi-square test is commonly used to examine the association between categorical variables. It compares the observed values of the dataset’s attributes with the expected values.

- Forward selection is an iterative strategy in which we begin with an empty set of features and, at each iteration, add the feature that most improves the model. The process stops when adding a new variable no longer improves the model’s performance.

- Backward elimination is likewise an iterative strategy, in which we begin with all features and remove the least significant feature after each cycle. The process stops when removing a feature no longer improves the model’s performance.
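As an illustration of the first strategy in the list, information gain for a categorical feature can be computed directly from its definition as entropy reduction. The toy data below is invented; the other strategies are not covered by this sketch.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy of the labels minus the weighted entropy after splitting on the feature."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    remainder = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - remainder


# Toy data: one feature predicts the label perfectly, the other not at all.
labels = ["yes", "yes", "no", "no"]
outlook = ["sunny", "sunny", "rain", "rain"]
windy = [True, False, True, False]

gain_outlook = information_gain(outlook, labels)
gain_windy = information_gain(windy, labels)
print("outlook:", gain_outlook, "windy:", gain_windy)
```

Features would then be ranked by their gain and the lowest-ranked ones dropped; where to draw the cut-off is a modelling decision.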

Regularisation

Regularisation is a strategy for preventing our network from learning an overly complicated model and hence overfitting. The model grows more complex as the number of features rises.

An overfitting model takes all features into account, even if some of them have a negligible influence on the final result. Worse, some of them are simply noise that has no bearing on the output. There are two ways to restrict these cases:

- Keep only the useful features in the model and discard the superfluous ones.

- Reduce the weights of features that have minimal impact on the final classification.

In other words, the impact of such ineffective features must be restricted. However, we do not know in advance which features are unnecessary, so we limit all of them together while minimising the model’s cost function. To do this, a “penalty term”, called the regulariser, is added to the cost function. There are three popular regularisation techniques.

- L1 regularisation is the idea behind Lasso regression. The penalty term in this technique is the so-called taxicab distance, the sum of the absolute values of all the weights. Minimising the cost function drives the weights of some features to zero. This results in a simplified model that is easier to interpret. At the same time, some useful features that had only a small impact on the final result may be lost.

- L2 regularisation is the idea behind Ridge regression. The penalty term in this technique is the (squared) Euclidean norm of the weights. Compared with L1 regularisation, this method causes networks to favour small weights.

Instead of discarding the less valuable features, it assigns lower weights to them. As a result, it can retain as much information as possible. Large weights can only be assigned to features that improve the baseline cost function significantly.

- Dropout is a common and effective anti-overfitting strategy in neural networks. Its primary concept is to randomly drop units, along with their connections, from the neural network during training. This inhibits units from co-adapting excessively.
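The two penalty terms can be written down directly, and for linear regression the L2-penalised problem has a closed-form solution that shows the shrinking effect. This sketch assumes the squared-norm form of the L2 penalty and a regularisation strength named `lam`; the data is synthetic, and dropout is not covered here.

```python
import numpy as np


def l1_penalty(w, lam):
    """Taxicab penalty: lam times the sum of absolute weights."""
    return lam * np.sum(np.abs(w))


def l2_penalty(w, lam):
    """Squared Euclidean penalty: lam times the sum of squared weights."""
    return lam * np.sum(w ** 2)


def ridge_fit(X, y, lam):
    """Weights minimising ||Xw - y||^2 + lam * ||w||^2 (no intercept)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(n_features), X.T @ y)


# Synthetic regression data: only two of five features matter.
rng = np.random.default_rng(0)
X = rng.normal(size=(30, 5))
true_w = np.array([3.0, 0.0, 0.0, 1.5, 0.0])
y = X @ true_w + rng.normal(scale=0.5, size=30)

w_ols = ridge_fit(X, y, lam=0.0)     # no penalty: ordinary least squares
w_ridge = ridge_fit(X, y, lam=10.0)  # penalised: weights are pulled towards zero
print("unpenalised weights:", np.round(w_ols, 3))
print("ridge weights:      ", np.round(w_ridge, 3))
```

The ridge weights have a smaller norm than the unpenalised ones but are generally not exactly zero; driving weights to exactly zero is the behaviour associated with the L1 penalty.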

Hyperparameter tuning

Hyperparameters are configuration choices that allow a machine learning model to be tailored to a given task or dataset. Optimising them is known as hyperparameter tuning. Hyperparameters cannot be learnt directly from the standard training procedure.

They are generally set before the start of the training procedure. They control crucial aspects of the model, such as its complexity or how quickly it should learn. Models can contain a large number of hyperparameters, and determining the optimal combination can be thought of as a search problem.

GridSearchCV and RandomizedSearchCV, both provided by scikit-learn, are two widely used hyperparameter tuning methods.

GridSearchCV

In the GridSearchCV technique, a search space is defined as a grid of hyperparameter values, and each point in the grid is evaluated.

GridSearchCV has the disadvantage of evaluating every combination of hyperparameters in the grid, which makes grid search computationally very costly.

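RandomizedSearchCV

The RandomizedSearchCV technique defines the search space as a bounded domain of hyperparameter values and samples points from it at random. Because only a fixed number of combinations is evaluated, this method avoids much of the computation that grid search requires.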
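The difference between the two searches is visible in how many candidates each one evaluates. The sketch below uses scikit-learn's `GridSearchCV` and `RandomizedSearchCV` on a decision tree; the synthetic dataset, the choice of model and the parameter ranges are arbitrary assumptions made for the example.

```python
from scipy.stats import randint
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, RandomizedSearchCV
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Grid search: every combination of the listed values is evaluated (4 x 3 = 12).
grid = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid={"max_depth": [2, 4, 6, 8], "min_samples_leaf": [1, 5, 10]},
    cv=5,
)
grid.fit(X, y)

# Random search: n_iter candidates are sampled from the given distributions.
random_search = RandomizedSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_distributions={
        "max_depth": randint(2, 10),         # integers 2..9
        "min_samples_leaf": randint(1, 20),  # integers 1..19
    },
    n_iter=6,
    cv=5,
    random_state=0,
)
random_search.fit(X, y)

n_grid = len(grid.cv_results_["params"])
n_random = len(random_search.cv_results_["params"])
print("grid search:  ", n_grid, "candidates, best", grid.best_params_)
print("random search:", n_random, "candidates, best", random_search.best_params_)
```

Both searches score each candidate with 5-fold cross-validation here, so the cost scales with the number of candidates times the number of folds.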

Final opinion

Overfitting is a general problem in supervised machine learning that cannot be avoided entirely. It occurs as a result of either the limitations of the training data, which may be limited in size or contain a large amount of noise, or the limitations of algorithms that are too complex and need an excessive number of parameters. In this article, we covered the concept of overfitting in machine learning and the ways it can be mitigated at different stages of a machine learning project.