For a fan of the Marvel Cinematic Universe, the voice of J.A.R.V.I.S., the AI that manages Tony Stark’s superhero affairs, is the go-to example of an AI producing nuanced human speech. What if such human-sounding speech from an AI were a reality? Researchers at Google claim to have accomplished a similar feat with Tacotron 2.

In a paper titled “Natural TTS Synthesis by Conditioning WaveNet on Mel Spectrogram Predictions”, a group of researchers from Google claim that their new AI-based system, Tacotron 2, can produce near-human speech from text.

What Is Tacotron 2?

It is an AI-powered speech synthesis system that can convert text to speech.

How Does It Work?

Tacotron 2’s neural network architecture synthesises speech directly from text. It combines convolutional neural network (CNN) and recurrent neural network (RNN) layers.

According to the paper, the system consists of two components:

- a recurrent sequence-to-sequence feature prediction network with attention which predicts a sequence of mel spectrogram frames from an input character sequence

- a modified version of WaveNet which generates time-domain waveform samples conditioned on the predicted mel spectrogram frames

In simple words, Tacotron 2 chains two deep neural networks: one that converts text into a spectrogram, which is a visual representation of a spectrum of sound frequencies over time, and another that converts the spectrogram into the corresponding audio waveform.
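To make the "mel" in mel spectrogram more concrete, here is a minimal sketch of the mel scale, a perceptual frequency scale on which equal steps sound roughly equally spaced to a listener. The formula used is one common variant, and the function names and the band edges (125 Hz and 7,600 Hz) are illustrative assumptions, not figures taken from this article.

```python
import math
from typing import List


def hz_to_mel(freq_hz: float) -> float:
    """Convert a frequency in Hz to mels (one common variant of the formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_band_centers(n_bands: int, f_min: float, f_max: float) -> List[float]:
    """Centre frequencies (Hz) of n_bands bands equally spaced on the mel scale."""
    m_min, m_max = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (m_max - m_min) / (n_bands + 1)
    return [mel_to_hz(m_min + step * (i + 1)) for i in range(n_bands)]


# Assumed band edges, chosen only for illustration.
centers = mel_band_centers(80, 125.0, 7600.0)
low_gap = centers[1] - centers[0]      # spacing between the two lowest bands, in Hz
high_gap = centers[-1] - centers[-2]   # spacing between the two highest bands, in Hz
```

Because the bands are evenly spaced in mels rather than in Hz, `low_gap` is much smaller than `high_gap`: the representation spends more resolution on low frequencies, where human hearing is more sensitive.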

A Child Of Tacotron And WaveNet

Tacotron 2 is said to combine the best features of Google’s WaveNet, a deep generative model of raw audio waveforms, and Tacotron, its earlier speech synthesis project. The sequence-to-sequence model that generates mel spectrograms has been borrowed from Tacotron, while the generative model that synthesises time-domain waveforms from the generated spectrograms has been borrowed from WaveNet.

Tacotron is considered to be superior to many existing text-to-speech programs. In the vocoding process, Tacotron uses the Griffin-Lim algorithm for phase estimation, followed by an inverse short-time Fourier transform. However, Griffin-Lim produces lower audio fidelity and characteristic artifacts when compared to approaches like WaveNet.

This is where WaveNet comes into play.

WaveNet, which is used in Google Assistant, produces audio of higher quality than Griffin-Lim does. The original WaveNet is conditioned on linguistic features, phoneme durations, and log F0 at a frame rate of 5 ms. The researchers modified the architecture to work with 12.5 ms feature spacing by using only 2 upsampling layers in the transposed convolutional network. This was done to do away with the pronunciation issues encountered when predicting spectrogram frames that were spaced very closely. The modified WaveNet model acts as the vocoder in the process.

Now, using the Tacotron-inspired model, an 80-dimensional audio spectrogram with frames computed every 12.5 milliseconds is generated. The second model, whose architecture is very similar to WaveNet, converts these features into a 24 kHz waveform.
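The figures quoted above imply some simple arithmetic about how much data flows between the two networks. The sketch below works it out for a hypothetical 2-second utterance; the helper names and the 2-second duration are assumptions made for illustration.

```python
SAMPLE_RATE_HZ = 24_000   # output waveform rate quoted above
FRAME_SHIFT_MS = 12.5     # spacing between spectrogram frames quoted above
N_CHANNELS = 80           # values per spectrogram frame quoted above


def samples_per_frame(sample_rate_hz: int, frame_shift_ms: float) -> int:
    """Number of waveform samples the vocoder must produce per spectrogram frame."""
    return round(sample_rate_hz * frame_shift_ms / 1000.0)


def frames_for_duration(seconds: float, frame_shift_ms: float) -> int:
    """Number of spectrogram frames needed to cover the given duration."""
    return round(seconds * 1000.0 / frame_shift_ms)


hop = samples_per_frame(SAMPLE_RATE_HZ, FRAME_SHIFT_MS)   # 300 samples per frame
frames = frames_for_duration(2.0, FRAME_SHIFT_MS)         # 160 frames for 2 seconds
spectrogram_values = frames * N_CHANNELS                  # 12,800 numbers predicted
waveform_samples = frames * hop                           # 48,000 samples generated

# For comparison, the 5 ms spacing mentioned earlier needs 2.5x as many frames.
frames_at_5ms = frames_for_duration(2.0, 5.0)             # 400 frames for 2 seconds
```

In other words, each predicted frame has to be expanded into 300 audio samples, which is the gap the upsampling layers in the vocoder are there to bridge.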

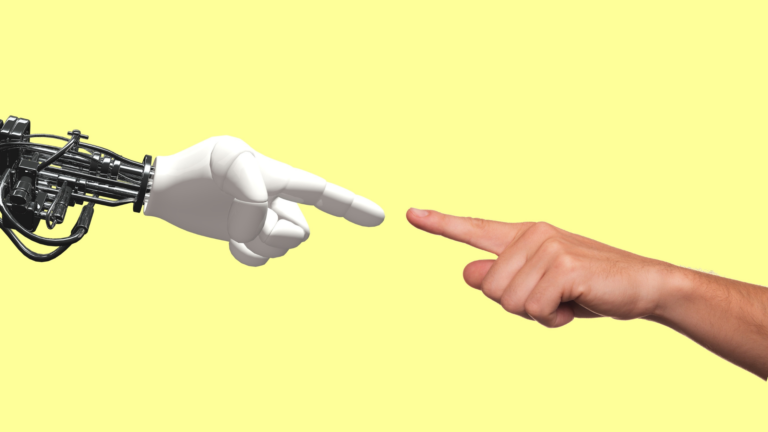

Unlike models that take complex linguistic and acoustic features as input, Tacotron 2 relies on neural networks trained using only speech examples and their corresponding text transcripts.

What Can It Do?

As the old adage “like father, like son” suggests, Tacotron 2 has many of the features that Tacotron possesses. With the integration of WaveNet’s vocoding abilities, the resulting speech is claimed to be almost human and to capture the finer nuances that the text intends to convey.

Near-human Voice

Haven’t sci-fi movies and television shows always given computers a robotic, mechanical, monotone voice? That may not be the case with this system. Attempts at telling a human voice from Tacotron 2’s may prove futile. The voice generated by the system sounds so human that it is nearly impossible to tell the two apart. The audio samples published on the project’s GitHub page show how difficult an exercise it can be. Two female voices, one human and the other AI, can be heard saying the same sentence, “I’m too busy for romance.”

The researchers claim that the phrases used to produce all the audio samples were unseen by Tacotron 2 during training. The names of the two WAV files carry the suffixes ‘gen’ and ‘gt’. Going by the paper, the file marked ‘gen’ is likely to be the speech generated by Tacotron 2, and the file marked ‘gt’, which stands for ground truth, is likely to be the real human speech.

Distinguishes Heteronyms

Tacotron 2 can also distinguish between heteronyms and pronounce them according to their usage in a sentence. This applies to words like read, sewer and lead. One of the published audio samples demonstrates this ability through the vocal rendering of the sentence, “Don’t desert me here in the desert!”

Mastery Over Prosody

Another area that Tacotron 2 seems to have mastered is prosody. Prosody is a distinguishing feature of human speech in which the rhythm, stress and intonation of language convey a wide range of meanings and subtleties. The sample sentences below illustrate the system’s apparent ability to place stress appropriately in response to punctuation and capitalisation.

“The buses aren’t the problem, they actually provide a solution.”

“The buses aren’t the PROBLEM, they actually provide a SOLUTION.”

Ace In Alliteration: Nails Tongue Twisters

Most of us are no strangers to stuttering and stumbling while trying to mouth the famous tongue-twister, “She sells sea-shells on the sea-shore. The shells she sells are sea-shells I’m sure.”

The alliteration packed into a single sentence makes it difficult to read or say. That, coupled with the speed at which one is expected to say it, makes for a rare and funny moment of self-mockery. But Tacotron 2 seems to put human beings to shame even in that department.

Unfazed By Spelling Errors In The Text

Besides grammatical errors, the most common errors in written text are misspellings. Supposedly, Tacotron 2 can decipher dreadfully spelt words and convert them to speech with the correct pronunciation. An example of this can be seen in its vocal rendering of the sentence, “Thisss isrealy awhsome.”

Conclusion

According to the paper, Tacotron 2 is not without its flaws. During experimentation, errors such as skipped words, unnatural prosody and pronunciation difficulties were found. Other issues encountered included challenges with the end-to-end neural approach and mismatches between predicted features and ground truth.

However, the researchers claim that the model achieves a mean opinion score (MOS) of 4.53, comparable to a MOS of 4.58 for professionally recorded speech. They also claim that the system can be trained directly from data without relying on complex feature engineering.
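A mean opinion score is simply the average of the ratings that human listeners give to audio clips, conventionally on a scale from 1 (bad) to 5 (excellent). As a rough sketch of how such a score could be computed, the snippet below averages a list of ratings and attaches an approximate 95% confidence interval. The function name is hypothetical and the ratings are invented purely for illustration; they are not data from the paper.

```python
import math
import statistics
from typing import List, Tuple


def mean_opinion_score(ratings: List[float]) -> Tuple[float, float]:
    """Return (MOS, half-width of an approximate 95% confidence interval)."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < 1.0 or r > 5.0 for r in ratings):
        raise ValueError("ratings must lie between 1 and 5")
    mos = statistics.mean(ratings)
    # Standard error of the mean, using the sample standard deviation.
    std_err = statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, 1.96 * std_err


# Hypothetical listener ratings, invented for this example only.
example_ratings = [5.0, 4.5, 4.0, 5.0, 4.5, 4.0, 5.0, 4.5]
mos, ci_half_width = mean_opinion_score(example_ratings)
print(f"MOS = {mos:.2f} +/- {ci_half_width:.2f}")
```

With only a handful of ratings the interval is wide, which is why two scores as close as 4.53 and 4.58 can only be called comparable when each is backed by a large number of listener judgements.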

As with any new technology, there is always scope for continuous improvement. Being newly proposed, Tacotron 2 has plenty of room to get better. It will be interesting to follow the progress that Tacotron 2 makes in the future and see what exciting uses it finds.