Xebia is a full stack software engineering, IT strategy and digital consulting company. They leverage big data, AI and cloud to enable digital transformation of enterprises.

“We enable organisations to accelerate their data modernisation journey while reducing costs. We specialise in building AI applications, ML solutions, data hub, business analytics and delivering AI governance frameworks for our customers,” said Ram Narasimhan, Global Head AI and Cognitive services, Xebia.

In an exclusive interview with Analytics India Magazine, Ram Narasimhan spoke about how Xebia embeds ethics into their business processes.

AIM: What are the AI governance methods, techniques, and frameworks used in Xebia?

Ram Narasimhan: AI projects are complicated. They bring together a diverse set of systems, processes, and business unit responsibilities into a platform-as-a-service kind of approach to serve internal and external stakeholders. Any organisation that adopts AI solutions must put together an AI governance plan first.

AI governance frameworks in existence today include the Microsoft AI fairness checklist, San Francisco’s Ethics and Algorithms toolkit, Google AI fairness, the UK Government’s data ethics workbook, NITI Aayog’s Responsible AI, the Alan Turing Institute’s Understanding AI Ethics and Safety, and IEEE Ethics in Action.

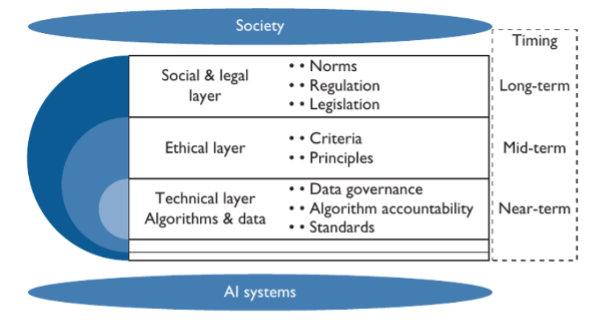

While the exact contours of a future AI governance model are still undecided, advanced governance models such as polycentric governance, hybrid regulation, mesh regulation and active-matrix theory can provide some framework and inspiration. In my opinion, breaking down the problem into simple chunks, in this case, the “Modular–Layered Model”, would be the most effective and clear way to define a set of standards.

Consider layered approaches such as the Open Systems Interconnection (OSI) reference model from the late 1970s, or the layered model proposed by David Clark to represent cyberspace; these were designed around modularity to reduce interdependencies and establish clear hierarchies and responsibilities. You can also refer to Harvard’s “A Layered Model for AI Governance.”

The nature of the AI governance model could be broken down into social and legal, ethical, and technical foundations.

Building a recommender engine for real-time interactions with customers may lead to a full-scale implementation of AI solutions across the enterprise. AI frameworks governing such solutions must include a complete view of audit data and KPIs for exceptions and scoring. A complete roadmap around the AI framework can be built for such scenarios based on a layered modular approach.

AIM: What explains the growing conversations around AI ethics, responsibility, and fairness of late? Why is it important?

Ram Narasimhan: AI requires significant R&D and investment to deliver a successful outcome for all stakeholders, and that includes complying with the laws and regulations of the relevant jurisdictions. Developing an AI solution also brings to light an important factor in consuming the output of AI: fairness.

Discrimination towards a sub-population can be created unknowingly and unintentionally, through incorrect labelling and/or incorrect data fed to AI. For example, biased AI outcomes on gender and race could be potentially disastrous for everyone involved. Bias in machine learning models can be due to the algorithm, user interaction, representation bias in data and bias in sample data. Detecting bias in a single feature is easy; however, it can be difficult to detect it for a combination of features, e.g. race, income and gender.
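To illustrate the point about feature combinations, here is a minimal sketch in Python. It is not Xebia's tooling: the `selection_rates` and `rate_gap` helpers, the `approved` outcome field and the toy records are all hypothetical. The data is constructed so that each attribute looks balanced on its own while the combination does not.

```python
from collections import defaultdict
from itertools import combinations


def selection_rates(records, attributes, outcome="approved"):
    """Return {group_key: positive-outcome rate} for one combination of attributes."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        key = tuple(row[a] for a in attributes)
        totals[key] += 1
        positives[key] += 1 if row[outcome] else 0
    return {key: positives[key] / totals[key] for key in totals}


def rate_gap(rates):
    """Difference between the best-treated and worst-treated group."""
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: every single attribute has a 50% approval rate,
# but the gender x income subgroups are treated very differently.
records = [
    {"gender": "F", "income": "low", "approved": 0},
    {"gender": "F", "income": "low", "approved": 0},
    {"gender": "F", "income": "high", "approved": 1},
    {"gender": "F", "income": "high", "approved": 1},
    {"gender": "M", "income": "low", "approved": 1},
    {"gender": "M", "income": "low", "approved": 1},
    {"gender": "M", "income": "high", "approved": 0},
    {"gender": "M", "income": "high", "approved": 0},
]

protected = ["gender", "income"]
gaps = {}
# Check every non-empty combination of protected attributes, not just single ones.
for size in range(1, len(protected) + 1):
    for combo in combinations(protected, size):
        gaps[combo] = rate_gap(selection_rates(records, combo))

for combo, gap in gaps.items():
    print(combo, gap)
```

With this data the single-attribute gaps are zero while the combined gap is large. The number of subgroups grows quickly with each added attribute, which is one reason such checks are harder in practice than single-feature checks.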

To check model fairness, correlation analysis can be performed, group/band scores can be analysed, and probability scores can be verified. Apart from this, IT leaders will also have to infuse data quality and ethics rules into every system/application feeding data to AI, maintain a business glossary to describe data, and have a technology stack that controls AI outcomes in every layer, such as databases, platforms, applications, environments, presentation layers and exchange layers, in order to scale.
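The correlation and band-score checks mentioned above could look roughly like the following sketch. It assumes a model that emits a probability score per record and a single binary group label; the `pearson` and `band_shares` helpers, the 0.5 band threshold and the sample values are illustrative assumptions, not a described Xebia method.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined for a constant series; treat as none
    return cov / sqrt(var_x * var_y)


def band_shares(scores, groups, threshold=0.5):
    """Share of each group whose score falls in the high band (>= threshold)."""
    totals, high = {}, {}
    for score, group in zip(scores, groups):
        totals[group] = totals.get(group, 0) + 1
        high[group] = high.get(group, 0) + (1 if score >= threshold else 0)
    return {group: high[group] / totals[group] for group in totals}


# Hypothetical model scores and group membership.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

# Encode membership as 0/1 so it can be correlated with the score.
is_group_a = [1 if g == "A" else 0 for g in groups]
r = pearson(is_group_a, scores)
shares = band_shares(scores, groups)

print(f"correlation between group membership and score: {r:.2f}")
print("share of each group in the high band:", shares)
```

A strong correlation between a protected attribute and the score, or very different band shares across groups, does not prove unfairness by itself, but it is a signal that warrants a closer review.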

AIM: How does Xebia ensure adherence to its AI governance policies?

Ram Narasimhan: Building responsible AI requires a humble appreciation of human interactions, background, circumstances, and diverse expertise. Every developer involved brings different knowledge, level of maturity, awareness and empathy. We need to understand the trade-offs in achieving the goal set by the organisation and in managing the pressure to deliver AI outcomes on time.

Here’s how we adhere to organisation policies and best practices:

· Raising awareness, building AI principles, developing a scoring matrix for assessing adherence to those principles, and fostering a team spirit towards delivering world-class AI solutions.

· Case studies, references, tools and resources being made available for monitoring outcomes and for seeking guidance on certain KPIs.

· Hands-on support and peer review sessions to provide real-time insights on the dos and don’ts of an AI project.

· Design and review forums to go through checklists and bring a balanced view.

· Bringing external perspectives to validate feedback during the design and development phase.

AIM: How do you mitigate biases in your AI algorithms?

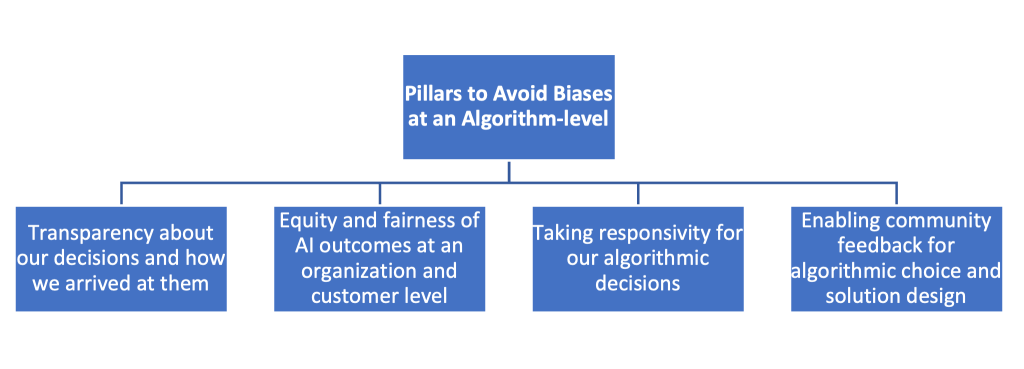

Ram Narasimhan: Technical solutions alone do not resolve the potential harmful effects of algorithmic decisions. However, there are certain things you can keep in mind while developing AI algorithms.

Given an existing AI solution, you can follow these steps to fix it:

· Examine the training dataset, conduct subpopulation analysis and monitor models over time.

· Establish a debiasing strategy that involves controls over the technical platform, operational systems and workplace KPIs defining AI policies.

· Improve human-driven processes by building pipeline insights and showcasing a dashboard of potential bias as exceptions.

· Decide on automation of use cases and human touch that might be needed.

· Follow a multidisciplinary process, involving various subject matter experts from diverse socio/economic backgrounds to validate AI outcomes.

· Engage and build a diversified AI team to mitigate unwanted bias.
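As a rough sketch of the "monitor models over time" and "potential bias as exceptions" steps above, the following Python snippet flags reporting periods in which the ratio between the lowest and highest group outcome rates drops below a threshold. The function name, the monthly batches and the 0.8 cut-off (borrowed from the common four-fifths rule of thumb) are assumptions for illustration, not a policy stated in the interview.

```python
def disparity_exceptions(batches, min_ratio=0.8):
    """Return (period, ratio) pairs where lowest/highest group rate < min_ratio."""
    flagged = []
    for period, rates in batches:
        highest = max(rates.values())
        lowest = min(rates.values())
        # If no group receives positive outcomes there is no disparity to report.
        ratio = 1.0 if highest == 0 else lowest / highest
        if ratio < min_ratio:
            flagged.append((period, round(ratio, 3)))
    return flagged


# Hypothetical positive-outcome rates per group for each monitoring period.
batches = [
    ("2021-01", {"group_a": 0.50, "group_b": 0.48}),
    ("2021-02", {"group_a": 0.52, "group_b": 0.45}),
    ("2021-03", {"group_a": 0.55, "group_b": 0.33}),
]

exceptions = disparity_exceptions(batches)
for period, ratio in exceptions:
    print(f"{period}: disparity ratio {ratio} is below the threshold, needs human review")
```

Only the flagged periods would surface on a dashboard, leaving the decision on what to do about them to human reviewers.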

AIM: Do you have a due diligence process to make sure the data is collected ethically?

Ram Narasimhan: Data collection is a huge process that involves internal data and external datasets that are publicly available and from agreed reliable intelligence sources on a contract basis. Usually, companies tend to have agreements for data collection and availability. However, many companies also practice web scraping, which is a process of extracting data from web-based resources. Based on business needs, a company can decide to buy or sell data; however, there must be a definite set of standard processes to map, validate and transform data to achieve the desired outcome.

· Ownership: Individuals own their data. Digital privacy is a concern for any customer; there must be a written agreement to seek permission to avoid ethical and legal dilemmas.

· Transparency: A company is legally obligated to reveal how it intends to collect and process individuals’ data. Clear policies and security outlines must be defined and made available to relevant entities/individuals.

· Privacy: Personally identifiable information (PII) should be protected and must not be made public.

· Intention: If an insurance company decides to collect information on mental health or financial health and make decisions based on it, the intention behind the data and the way it is used should be ethical and not meant to exploit the individual/entity.

· Outcomes: Outcome of data analysis can lead to inadvertent harm to individuals, communities, or entities. This should be addressed.

AIM: How do you embed ethical principles in your platform?

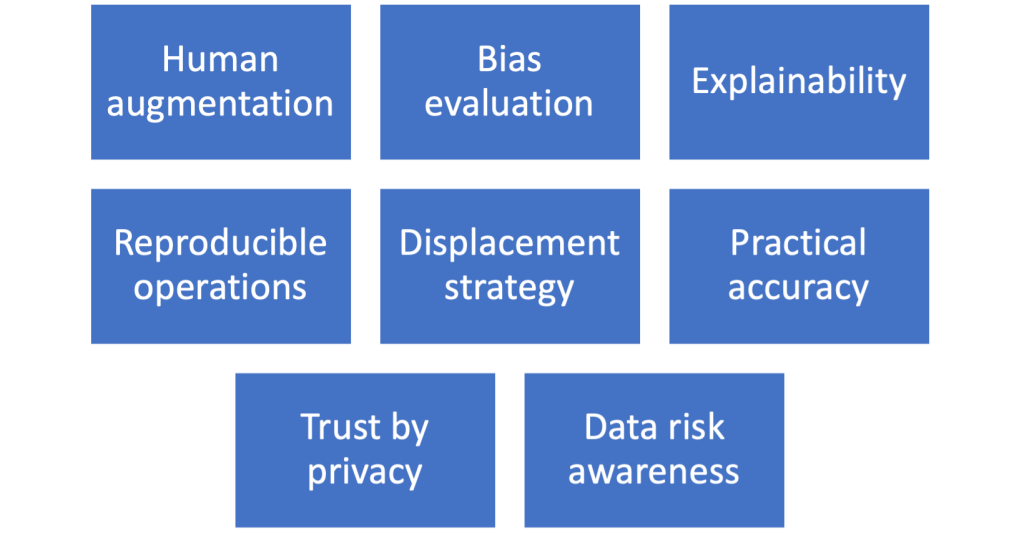

Ram Narasimhan: If you consider a real-world example of a recommender system for e-commerce sales, ethical principles can be applied to an AI platform in the following ways:

AIM: How does your company ensure user data privacy?

Ram Narasimhan: As AI evolves, it magnifies the opportunity to use personal data in ways that can intrude on someone’s privacy, through real-time analysis of personal information across various platforms and across the lifespan of events. Facial recognition and fingerprint scanning are used to identify a person and facilitate financial or trade transactions, potentially benefiting a specific company. First, it is important to seek permission from the user to utilise and analyse data. Secondly, companies analysing user data and using it in algorithms must take certain care in processing private data:

• Provide a self-service portal to enable customers to manage digital footprint, permission, visibility and potential bias based on interactions. For example, complying with GDPR, CCPA or similar data management standards.

• In data collection, introduce a data stewardship process to manage personal data in systems.

• Introduce data transparency or disclosure rules for algorithms using personal data. For example, rules to manage email data, downloaded files, location data, chatrooms data, website data, searches, apps data, time-related data, etc.

• Define an enterprise-wide data governance rule, applicable to all systems, for privacy by design.

• Rules for aggregating data

• Rules for a data marketplace

AIM: Did you come across any biases, or ethical concerns/issues lately within your organisation/industry/product? If yes, how did you address them?

Ram Narasimhan: Most companies are not only relying on OEM providers to facilitate audit, security, metadata view and monitoring mechanisms, but are also building data completeness checks, data archiving checks, data privacy checks, data leakage checks, and data security checks. From a compliance/regulatory perspective, they are building encryption and tokenization into platform design.
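To make the tokenization idea concrete, here is a minimal in-memory sketch in Python. The `TokenVault` class and the sample record are hypothetical; a production system would keep the mapping in a secured, access-controlled store rather than in process memory, and nothing here reflects a specific vendor's or Xebia's design.

```python
import secrets


class TokenVault:
    """Toy token vault: swaps sensitive values for random, meaningless tokens."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to one token,
        # which keeps joins and aggregations on the tokenized column possible.
        if value not in self._token_by_value:
            token = "tok_" + secrets.token_hex(8)
            self._token_by_value[value] = token
            self._value_by_token[token] = value
        return self._token_by_value[value]

    def detokenize(self, token: str) -> str:
        # Only components with access to the vault can recover the original value.
        return self._value_by_token[token]


vault = TokenVault()
record = {"email": "user@example.com", "basket_value": 120}

# Downstream analytics and models see the token, not the email address.
safe_record = {**record, "email": vault.tokenize(record["email"])}
print(safe_record)
```

Because the token is random rather than derived from the value, it reveals nothing about the original data to anyone who does not have access to the vault.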

More recently, companies are adopting MLOps, AutoML and AIOps types of frameworks to manage model effectiveness, fairness, ethical validation, and biased outcomes to control unintentional AI damage. More robust and careful AI governance committees are being formed, more awareness is being created, and diversified AI teams are being put in place for mitigating ethical conflicts.