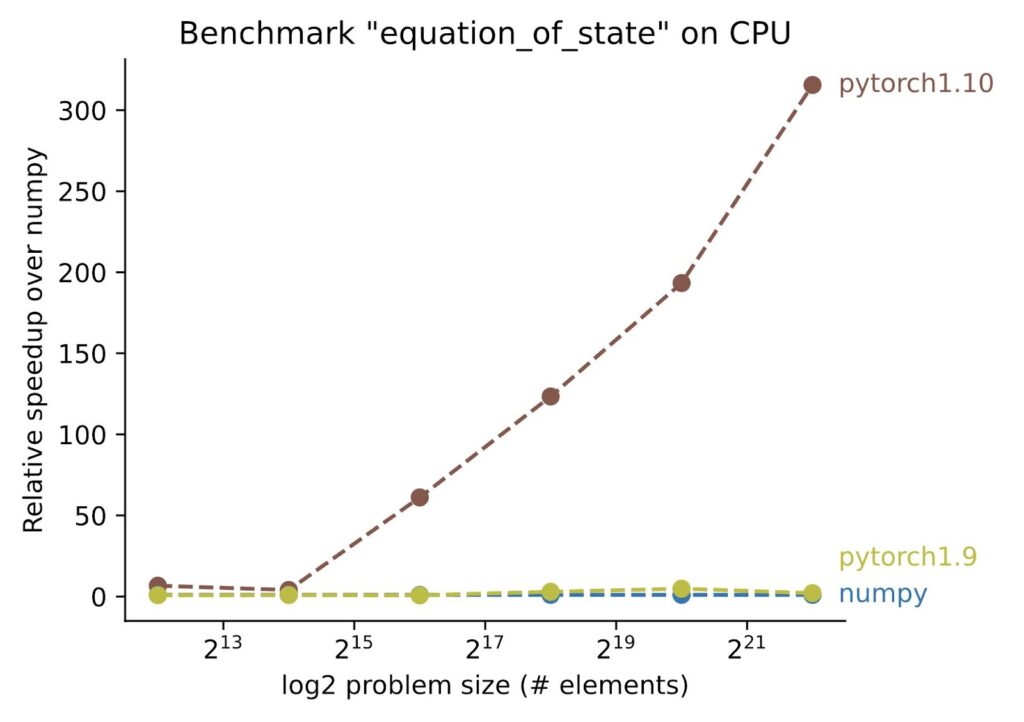

A team of software engineers at Facebook, led by Bertrand Maher, recently released NNC (“Neural Network Compiler”), an LLVM-based JIT compiler for CPUs. The results reported here were obtained on the pyhpc-benchmark suite.

The benchmark itself is a wonderland for an ML compiler: it offers many opportunities for loop fusion, which can yield astonishing speedups. The original benchmark forces single-threaded execution, and NNC already does well there, running 23x faster than NumPy, but it really shines when the threading cap is lifted. On a 96-core development box, NNC ran 150x faster than PyTorch 1.9 and 300x faster than NumPy.
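To make the idea of loop fusion concrete, here is a minimal sketch in plain Python (standard library only, so it runs without NumPy or PyTorch). It is not NNC's implementation; the function names `unfused` and `fused` and the expression `sqrt(a*a + b)` are assumptions chosen purely for illustration. The unfused version mimics how a vectorized expression is evaluated one operation at a time, with a temporary array per step; the fused version does the same arithmetic in a single pass.

```python
import math


def unfused(a, b):
    # Three separate passes over the data, each materialising a temporary list,
    # similar to evaluating sqrt(a * a + b) one array operation at a time.
    squared = [x * x for x in a]
    summed = [s + y for s, y in zip(squared, b)]
    return [math.sqrt(v) for v in summed]


def fused(a, b):
    # One pass, no intermediate lists: the three loops above are merged
    # into a single loop body, which is what a fusing compiler aims for.
    return [math.sqrt(x * x + y) for x, y in zip(a, b)]


a = [3.0, 6.0, 5.0]
b = [16.0, 64.0, 144.0]

# Both versions perform the same arithmetic in the same order per element.
assert unfused(a, b) == fused(a, b)
```

The fused form touches each element once and never writes intermediate results to memory, which is where much of the speedup tends to come from on memory-bound array code.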

Tremendous amounts of time and resources go into developing Python frontends to high-performance backends, but these are usually tailored towards deep learning. The development team wanted to see whether it could profit from those advances by using these libraries for geophysical modelling, using the pure-Python ocean simulator Veros.

By assembling benchmark results, the team learned the following (your mileage may vary):

- The performance of JAX seems very competitive on both GPU and CPU. It is consistently among the top implementations on the CPU and shows the best performance on GPU.

- JAX’s performance on GPU seems to be quite hardware dependent: it is significantly better (relatively speaking) on a Tesla P100 than on a Tesla K80.

- Numba is a great choice on CPU if you don’t mind writing explicit for loops (which can be more readable than a vectorized implementation); it is slightly faster than JAX with little effort.

- If you have embarrassingly parallel workloads, speedups of > 1000x are easy to achieve on high-end GPUs.

- TensorFlow is not great for applications like this, since it lacks tools to apply partial updates to tensors (in the sense of `tensor[2:-2] = 0.`).

- Don’t bother using PyTorch or vanilla TensorFlow on the CPU. TensorFlow with XLA (`experimental_compile`) is great though!

- Reaching Fortran performance on CPU with vectorized implementations is hard.
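
The partial-update point in the list above can be illustrated with a small sketch. Plain Python lists stand in for tensors here, and both helper names are hypothetical, not part of any library. The first function mutates its argument in place, the way `tensor[2:-2] = 0.` does on a mutable array; the second builds a new sequence and leaves the input untouched, which is the style that immutable tensors require.

```python
def zero_interior_inplace(values):
    # Mutable style: overwrite everything except the two outermost
    # elements on each side, analogous to tensor[2:-2] = 0.
    values[2:-2] = [0.0] * len(values[2:-2])
    return values


def zero_interior_functional(values):
    # Immutable style: build a new list with an index mask instead of
    # assigning into the existing one.
    n = len(values)
    return [0.0 if 2 <= i < n - 2 else v for i, v in enumerate(values)]


original = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]

updated = zero_interior_functional(original)
assert updated == [1.0, 2.0, 0.0, 0.0, 5.0, 6.0]
assert original == [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]  # input left untouched

zero_interior_inplace(original)
assert original == updated  # in-place version reaches the same result
```

When a framework offers only the second style without a convenient slicing syntax, stencil-like code that repeatedly updates interior regions becomes awkward to write, which is the difficulty the bullet describes.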

Veros, the versatile ocean simulator, aims to be a powerful tool that makes high-performance ocean modeling approachable and fun. It supports a NumPy backend for small-scale problems and a high-performance JAX backend with CPU and GPU support. It is fully parallelized via MPI and supports distributed execution. Veros is currently being developed at the Niels Bohr Institute, Copenhagen University.