TensorFlow model optimization is a toolkit for optimizing trained models so that they are easier to deploy. It aims to condense large, memory-heavy TensorFlow models into lighter versions that can be integrated into edge devices. The weight clustering API is one part of the TensorFlow model optimization library, and it aims to reduce the size of large networks. In this article, let us understand how to use the weight clustering API to condense a TensorFlow model without giving up its performance.

Table of Contents

- Introduction to TensorFlow model optimization

- Benefits of TensorFlow model optimization

- Condensing deep learning models with weight clustering API

- Summary

Introduction to TensorFlow model optimization

TensorFlow model optimization is a TensorFlow library for optimizing trained models. Its main aim is to make heavy TensorFlow models usable on edge devices that have memory constraints. Models optimized with this library can be integrated on devices with limited hardware resources and, in some cases, on devices that rely on dedicated accelerators.

Benefits of TensorFlow model optimization

An optimized model is typically smaller and faster to run, ideally with little or no loss in accuracy. The TensorFlow model optimization framework aims to bring a model into such a form so that it can be used seamlessly on various devices. Let us look into some of the benefits of TensorFlow model optimization.

- A smaller memory footprint makes models easier to store and integrate on user devices. It also makes the model quicker to download.

- Optimized models are lighter, so they are easier and faster to integrate on devices with memory constraints.

- Model optimization can considerably reduce latency, so the model performs its task more quickly.

- Optimized models can make better use of hardware accelerators such as TPUs, some of which are built to run optimized models efficiently.

Now let us look into a case study on how to use the weight clustering API to optimize a TensorFlow model.

Condensing deep learning models with weight clustering API

To understand how the weight clustering API optimizes a model, let us first develop a simple TensorFlow model. Here we use the Fashion MNIST dataset to develop a simple deep learning model.

import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow.keras.layers import Flatten, Dense, Dropout, Conv2D, MaxPooling2D
from tensorflow.keras.models import Sequential
from tensorflow.keras.utils import to_categorical
from tensorflow.keras.datasets import fashion_mnist
%matplotlib inline

# Load the Fashion MNIST images (28x28 grayscale) and their integer labels
(X_train, Y_train), (X_test, Y_test) = fashion_mnist.load_data()

# Small CNN: two convolution/pooling stages followed by two dense layers
model1 = Sequential()
model1.add(Conv2D(32, kernel_size=2, input_shape=(28, 28, 1), activation='relu'))
model1.add(MaxPooling2D(pool_size=(2, 2)))
model1.add(Conv2D(16, kernel_size=2, activation='relu'))
model1.add(MaxPooling2D(pool_size=(2, 2)))
model1.add(Flatten())
model1.add(Dense(125, activation='relu'))
model1.add(Dense(10, activation='softmax'))

# The labels are integers, so sparse categorical cross-entropy is used
model1.compile(loss='sparse_categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
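To get a feel for how much there is to compress, the number of trainable parameters in this network can be estimated by hand. The sketch below is a back-of-the-envelope calculation, not output from Keras. It assumes the Keras defaults of 'valid' padding and stride 1 for the convolutions and float32 weights, and the helper names `conv2d_params` and `dense_params` are hypothetical.

```python
def conv2d_params(kernel, in_channels, filters):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel * kernel * in_channels * filters + filters


def dense_params(inputs, units):
    """Weights plus biases of a Dense layer."""
    return inputs * units + units


# Spatial size per stage (assuming 'valid' padding, stride 1):
# 28 -> conv(k=2) -> 27 -> pool(2) -> 13 -> conv(k=2) -> 12 -> pool(2) -> 6
flattened = 6 * 6 * 16

layer_params = {
    "conv2d_1": conv2d_params(2, 1, 32),
    "conv2d_2": conv2d_params(2, 32, 16),
    "dense_1": dense_params(flattened, 125),
    "dense_2": dense_params(125, 10),
}

total_params = sum(layer_params.values())
float32_bytes = total_params * 4  # 4 bytes per float32 weight (assumed dtype)

print(layer_params)
print(total_params, float32_bytes)  # 75609 parameters, 302436 bytes
```

Almost all of the parameters sit in the first dense layer, so that is where a size reduction is likely to matter most.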

We have now defined the layers of the model and compiled it with a loss, an optimizer and a metric. Now let us fit the model on the training data, using the test split for validation.

model1_fit_res=model1.fit(X_train,Y_train,epochs=10,validation_data=(X_test,Y_test))

Now that the model is fitted, let us evaluate its loss and accuracy on the training and test data.

train_loss, train_acc = model1.evaluate(X_train, Y_train)
print('Model training loss : {} and training accuracy is : {}'.format(train_loss, train_acc))

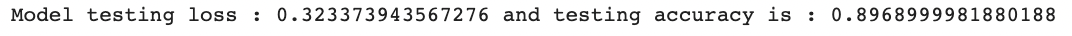

test_loss, test_acc = model1.evaluate(X_test, Y_test)
print('Model testing loss : {} and testing accuracy is : {}'.format(test_loss, test_acc))

So far we have built a simple deep learning model, fitted it to the data and evaluated its loss and accuracy. Now let us look into how to use the weight clustering API of the TensorFlow model optimization library and check for any change in performance after using it.

Using the weight clustering API

Let us first install the library in the working environment and then import it.

! pip install -q tensorflow-model-optimization
import tensorflow_model_optimization as tfmot

Now that the TensorFlow model optimization library is imported, let us look into how to use the weight clustering API.

cluster_weights = tfmot.clustering.keras.cluster_weights
cent_init = tfmot.clustering.keras.CentroidInitialization

Here cluster_weights is the library function that wraps a model so that its weights are clustered, and CentroidInitialization lists the available strategies for initializing the cluster centroids. Now let us choose the number of clusters (32 here, an arbitrary choice) and linear initialization of the centroids. Using these clustering parameters, a clustered model is created as shown below.

clustering_params = {
    'number_of_clusters': 32,
    'cluster_centroids_init': cent_init.LINEAR
}

# Clustering the original model
cluster_model = cluster_weights(model1, **clustering_params)

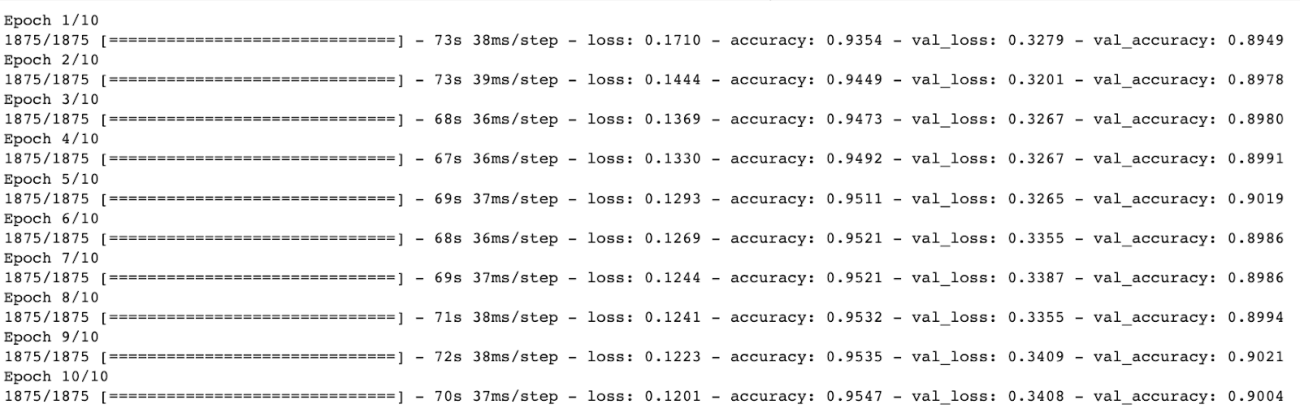
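To make the two clustering parameters more concrete, here is a NumPy-only sketch of the idea behind weight clustering. It is not the library's implementation. It is a toy k-means over a single weight tensor, with centroids initialized at evenly spaced values between the smallest and largest weight, which is what linear initialization refers to. The function name `cluster_weights_sketch`, the random tensor and the number of iterations are assumptions made for illustration.

```python
import numpy as np


def cluster_weights_sketch(weights, number_of_clusters=32, iterations=10):
    """Toy k-means on one weight tensor (illustrative, not the tfmot code)."""
    flat = weights.ravel()
    # Linear initialization: centroids evenly spaced between min and max weight.
    centroids = np.linspace(flat.min(), flat.max(), number_of_clusters)

    for _ in range(iterations):
        # Assign every weight to its nearest centroid.
        assignments = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
        # Move each centroid to the mean of the weights assigned to it.
        for k in range(number_of_clusters):
            members = flat[assignments == k]
            if members.size:
                centroids[k] = members.mean()

    # Final assignment, then replace each weight by its centroid value.
    assignments = np.abs(flat[:, None] - centroids[None, :]).argmin(axis=1)
    clustered = centroids[assignments].astype(weights.dtype)
    return clustered.reshape(weights.shape), centroids


# Stand-in for a dense kernel; the values are random, not trained weights.
rng = np.random.default_rng(0)
dense_kernel = rng.normal(size=(576, 125)).astype(np.float32)

clustered_kernel, centroids = cluster_weights_sketch(dense_kernel)

print("unique values before:", np.unique(dense_kernel).size)
print("unique values after :", np.unique(clustered_kernel).size)
```

After clustering, the tensor keeps its shape but holds at most 32 distinct values. That property is what makes the saved model compress well.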

Now let us compile the clustered model, fine-tune it on the training data and evaluate its loss and accuracy.

# Fine-tune the clustered model with the Adam optimizer and a small learning rate
optim = tf.keras.optimizers.Adam(learning_rate=1e-5)
cluster_model.compile(loss='sparse_categorical_crossentropy', optimizer=optim, metrics=['accuracy'])
cluster_mod_res = cluster_model.fit(X_train, Y_train, epochs=10, validation_data=(X_test, Y_test))

clus_train_loss, clus_train_acc = cluster_model.evaluate(X_train, Y_train)
print('Model training loss : {} and training accuracy is : {}'.format(clus_train_loss, clus_train_acc))

clus_test_loss, clus_test_acc = cluster_model.evaluate(X_test, Y_test)
print('Model testing loss : {} and testing accuracy is : {}'.format(clus_test_loss, clus_test_acc))

Here we can see that the test accuracy after clustering increased by nearly 0.5%. The important point is that model performance did not decrease after using the clustering API. So now let us understand what the benefits of using the weight clustering API of the TensorFlow model optimization library actually are.

Let us save both the original deep learning model and the weight-clustered model to disk so that we can compare their sizes in bytes.

import tempfile

_, keras_file = tempfile.mkstemp('.h5')
print('Saving model to: ', keras_file)
tf.keras.models.save_model(model1, keras_file, include_optimizer=False)

final_clus_model = tfmot.clustering.keras.strip_clustering(cluster_model)
_, clustered_keras_file = tempfile.mkstemp('.h5')
print('Saving clustered model to: ', clustered_keras_file)
tf.keras.models.save_model(final_clus_model, clustered_keras_file, include_optimizer=False)

Now let us create a user-defined function that takes the path of a saved model file as its parameter and returns the size of the compressed file in bytes.

def get_gzipped_model_size(file):
    # It returns the size of the gzipped model in bytes.
    import os
    import zipfile

    _, zipped_file = tempfile.mkstemp('.zip')
    with zipfile.ZipFile(zipped_file, 'w', compression=zipfile.ZIP_DEFLATED) as f:
        f.write(file)
    return os.path.getsize(zipped_file)

print("Size of gzipped clustered Keras model: %.2f bytes" % (get_gzipped_model_size(clustered_keras_file)))
print("Size of gzipped original Keras model: %.2f bytes" % (get_gzipped_model_size(keras_file)))

So here we can see that the weight clustering API has reduced the compressed size of the original Keras model without compromising model performance. The saving comes from compression: after clustering, each layer holds only a small number of distinct weight values, which a compressor such as DEFLATE can encode compactly. This is how the weight clustering API condenses a large TensorFlow model into a lighter one that can be integrated more easily into edge devices, which generally operate on minimal memory resources.
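The link between clustered weights and a smaller compressed file can be reproduced without TensorFlow. The sketch below is an illustration under stated assumptions, not a measurement of the models above. It uses random float32 values in place of trained weights, snaps each value to the nearest of 32 evenly spaced centroids, and compares the DEFLATE-compressed sizes using Python's zlib module.

```python
import zlib

import numpy as np

# Random stand-in for a layer's weights (not taken from the trained model).
rng = np.random.default_rng(0)
weights = rng.normal(size=72_000).astype(np.float32)

# Snap every weight to the nearest of 32 evenly spaced centroid values.
centroids = np.linspace(weights.min(), weights.max(), 32).astype(np.float32)
nearest = np.abs(weights[:, None] - centroids[None, :]).argmin(axis=1)
clustered = centroids[nearest]

# Both arrays occupy the same number of raw bytes...
raw_bytes = weights.nbytes

# ...but the clustered array has far fewer distinct byte patterns to encode.
original_compressed = len(zlib.compress(weights.tobytes()))
clustered_compressed = len(zlib.compress(clustered.tobytes()))

print("raw size            :", raw_bytes)
print("compressed original :", original_compressed)
print("compressed clustered:", clustered_compressed)
```

The uncompressed arrays are the same size, so the benefit only shows up once the file is compressed. This is why the sizes above are measured on the zipped files rather than on the raw .h5 files.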

Summary

Generally, TensorFlow models developed for real-world purposes are large and consume a lot of memory. Such models cannot be integrated as they are on edge devices or on the user end. This is where the TensorFlow model optimization framework assists, by making models more memory friendly and easier to integrate on edge devices. In the case study above, the weight-clustered model retained the accuracy of the original deep learning model while taking up less space once compressed.