
A successful and reliable machine learning model has to mature through several stages. It starts with data collection and wrangling, continues with splitting the data appropriately, and ends with training, testing and validating the model for its performance in real-world implementations. Deepchecks is a framework that helps us quickly test and validate the data and models used when building an application. This article provides a brief overview of Deepchecks and a detailed overview of the steps to follow for testing and validating machine learning models and data.

Table of Contents

- Introduction to Deepchecks

- Basic Differences between testing data and validation data

- Steps to test and validate machine learning models and data

- Benefits of using Deepchecks

- Summary

Let’s start with an introduction to Deepchecks.

Introduction to Deepchecks

Like many other packages Python offers, Deepchecks is a Python package used for testing and validating machine learning models and data. It addresses the basic prerequisites of such testing and validation: it checks how well the model's performance generalizes across various testing conditions, and it checks aspects of the data such as data integrity and the balance of samples across the categories or classes present. The balance check helps eliminate concerns about class imbalance, where a model performs well only for the classes with a considerable number of samples.

In a nutshell, Deepchecks, as the name suggests, helps us deeply check various aspects such as the model's ability to generalize, data integrity, and many more, and gives us a glimpse of how the developed model and the available data would perform in changing scenarios and environments. It is a simple yet effective single-shot framework, available as a Python package that is easy to install with pip, and it makes model performance and data discrepancies easier to interpret.


Prerequisites for utilization of Deepchecks

As mentioned earlier, Deepchecks is a Python package used to assess the generalizability and feasibility of the data and the machine learning model developed. The package is simple to use, but it has certain prerequisites, listed below.

- A subset of the raw data, without any pretreatment such as cleaning or preprocessing.

- A subset of the model's training data, with labels.

- A subset of data the model has not seen, in other words test data.

- A model of a type that Deepchecks supports.

Basic Differences between testing and validation data

What is Testing Data?

Testing data is data that the developed model has not seen. Often it is real-world data, which gives a better check of the model's performance. In short, test data is the data used to assess the performance of the developed machine learning model.

What is Validation Data?

As the name suggests, validation data is used to validate the model's performance while it is being fitted. It is a proportion of the data that is held out from training and evaluated during model fitting, so that we can determine the model's loss and accuracy along with various other metrics. It is also useful for tuning the model's hyperparameters.
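The difference between the two held-out sets can be sketched in a few lines. The following is a minimal illustration using only the Python standard library; the helper name `train_val_test_split` and the 60/20/20 proportions are assumptions made for this example and are not part of Deepchecks.

```python
import random


def train_val_test_split(rows, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle rows and split them into train, validation and test lists."""
    if not 0 <= val_frac + test_frac < 1:
        raise ValueError("val_frac + test_frac must be in [0, 1)")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is reproducible
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]               # never shown to the model until the end
    val = shuffled[n_test:n_test + n_val]  # used during fitting and tuning
    train = shuffled[n_test + n_val:]      # used to fit the model
    return train, val, test


train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))  # 60 20 20
```

The validation portion is consulted repeatedly while tuning, so only the test portion gives an untouched estimate of performance on unseen data.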

Steps to test and validate machine learning models and data

Before looking at the steps built into Deepchecks, let us take a glimpse at the checks it uses to generate results. There are primarily three types of checks in Deepchecks. They are as follows:

- Data Integrity Check

- Check for distribution of data for train and test

- Model Performance Evaluation for unseen data or close to real-world data

Deepchecks takes up the testing and validation of the machine learning model and data through the basic checks listed above, which give an overview at the level of individual checks. There are also in-depth checks for common issues, such as imbalance of labels across samples and the consequences of data leakage, which are checked internally.
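To make the idea of a data integrity and label balance check concrete, here is a small sketch of the kind of quantities such a check might report. It is a plain Python illustration, not Deepchecks' own implementation; the function `integrity_report` and the sample rows are hypothetical.

```python
from collections import Counter


def integrity_report(rows, label_key):
    """Count missing values, exact duplicate rows and samples per class."""
    missing = sum(1 for row in rows for value in row.values() if value is None)
    # Rows are turned into sorted tuples so identical rows hash to the same key
    row_counts = Counter(tuple(sorted(row.items())) for row in rows)
    duplicates = sum(count - 1 for count in row_counts.values())
    class_counts = Counter(row[label_key] for row in rows)
    largest, smallest = max(class_counts.values()), min(class_counts.values())
    return {
        "missing_values": missing,
        "duplicate_rows": duplicates,
        "class_counts": dict(class_counts),
        "imbalance_ratio": largest / smallest,  # 1.0 means perfectly balanced
    }


# Made-up rows for illustration only
rows = [
    {"alcohol": 13.2, "hue": 1.05, "label": 1},
    {"alcohol": 13.2, "hue": 1.05, "label": 1},  # exact duplicate of the first row
    {"alcohol": 12.4, "hue": None, "label": 2},  # missing value
    {"alcohol": 13.7, "hue": 0.86, "label": 1},
]
report = integrity_report(rows, "label")
print(report)
```

A high imbalance ratio is the warning sign described above: the model may look accurate while performing well only on the majority class.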

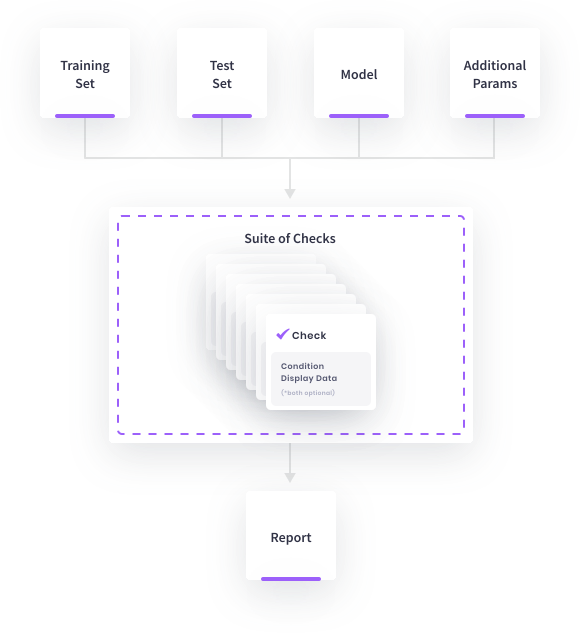

Testing and validation of machine learning models and data in Deepchecks takes place in a collective process called a Suite. A suite is a collection of checks that run inside the Deepchecks framework, so that the different types of checks mentioned above happen collectively.

The available data is split into train and test portions, and the Deepchecks API is responsible for checking the basic concerns associated with data discrepancies and for assessing how well the developed model generalizes to varying data. A suite internally runs several checks and provides a detailed report on the checks carried out and on the issues found in the data and the machine learning model.

Benefits of using Deepchecks

One of the major benefits of using Deepchecks is that it makes it easy to interpret the flaws in the data and in the machine learning model taken up for real-world implementation. Moreover, the Suite facility is one of the major attractions of Deepchecks and a strong option for machine learning engineers and developers: all the major concerns are put through a detailed check across various aspects, and the suite then generates interpretable and useful reports.

A predefined suite with default parameters can be used, but if necessary some of the parameters can be altered as required, and reports can be generated accordingly to analyze any discrepancies in the data or the machine learning model under test. Some of the predefined sets of checks and their functionality are described below.

- dataset_integrity: As the name suggests, this set of checks verifies the integrity of the dataset selected for the check.

- train_test_validation: A set of checks is run to determine whether the data has been split correctly for the training and testing phases.

- model_evaluation: A set of checks is run to cross-check the model's performance and its ability to generalize, and any signs of overfitting are checked and reported.

Testing and validation of our data and model

Let us implement Deepchecks from scratch and understand some of the important parameters and terminology. For this article, let’s use the wine category classification dataset, which has three classes (1, 2, 3). With Deepchecks we can either run a collective check with the entire suite or, if we have a single dataset, use the single dataset integrity suite to check just the data.
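As a rough guide, running the two kinds of suite might look like the sketch below. It assumes a recent Deepchecks release that exposes the `deepchecks.tabular` namespace with the `full_suite` and `data_integrity` suites; older releases used different import paths and named the single dataset suite `single_dataset_integrity`, so the names should be checked against the installed version. The label column name `"target"`, the report path, and the two wrapper functions are assumptions made for this example.

```python
def run_full_suite(train_df, test_df, model, label="target",
                   report_path="deepchecks_report.html"):
    """Run the predefined full suite on train and test data and save an HTML report.

    Assumes train_df and test_df are pandas DataFrames that contain the label
    column, and that model is an already fitted scikit-learn style estimator.
    """
    # Imported lazily so this module loads even where deepchecks is not installed
    from deepchecks.tabular import Dataset
    from deepchecks.tabular.suites import full_suite

    # cat_features=[] assumes every feature is numeric, as in the wine data
    train_ds = Dataset(train_df, label=label, cat_features=[])
    test_ds = Dataset(test_df, label=label, cat_features=[])
    result = full_suite().run(train_dataset=train_ds, test_dataset=test_ds, model=model)
    result.save_as_html(report_path)
    return result


def run_integrity_suite(df, label="target"):
    """Run only the data integrity suite on a single dataset."""
    from deepchecks.tabular import Dataset
    from deepchecks.tabular.suites import data_integrity

    dataset = Dataset(df, label=label, cat_features=[])
    return data_integrity().run(dataset)
```

Both functions return the suite result, so it can be displayed in a notebook or saved as a report for later review.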

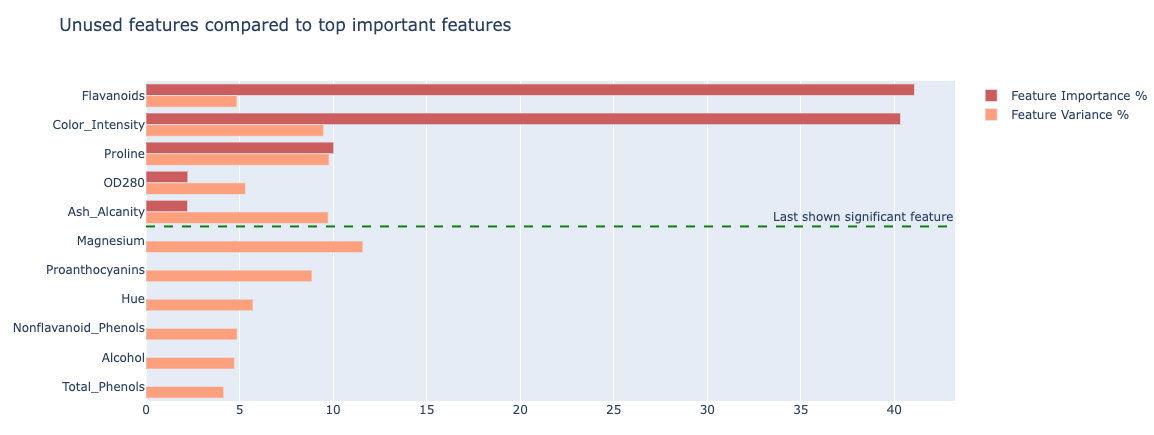

At first, when the full suite check was carried out, some of the unused features in the dataset were reported in the form of a visual, as shown below.

Along with this, the rest of the information was generated as a report with interpretations of the area under the curve (AUC) score, the receiver operating characteristic (ROC) curve, and many more.

As mentioned, a single dataset was used, and the single dataset integrity check was run on it for better results and interpretation. The Predictive Power Score (PPS) was reported for the features of the dataset; an unusually high predictive power for a feature can be a sign of data leakage. In this use case, however, the Predictive Power Score falls within an acceptable range and shows no signs of data leakage. The same can be seen in the picture below.

All other parameters and issues associated with the data can be explored by following the notebook mentioned in the references.

Summary

Deepchecks recognizes and addresses the sensitive parameters and issues that any real-world data and machine learning model would face, and presents them in an easily interpretable report. This helps in obtaining dependable outcomes from the machine learning model when it is tested and validated on real-time data or under changing parameters. This is what makes Deepchecks a friendly package for machine learning engineers and developers who want to produce a reliable machine learning model.

One very important evaluation metric in Deepchecks is the drift score (DriftScore), which helps us understand the behavior of the data and the developed model in the deployment and production phases. Currently, Deepchecks is limited to certain data types and formats, and it is expected that it will support more data types and machine learning models in the future.
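To give a feel for what a drift score measures, the sketch below computes the Population Stability Index (PSI), one common way of comparing a feature's distribution at training time with its distribution in production. This is an illustration of the general idea only; it is not claimed to be the measure Deepchecks uses for its drift score, and the function name, bin count and sample values are assumptions.

```python
import math


def population_stability_index(expected, actual, n_bins=5, eps=1e-6):
    """Compare two samples of one numeric feature; 0 means identical binned distributions."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if all values are equal

    def bin_fractions(values):
        counts = [0] * n_bins
        for value in values:
            index = int((value - lo) / width)
            index = min(max(index, 0), n_bins - 1)  # clamp values outside the range
            counts[index] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(count / len(values), eps) for count in counts]

    expected_frac = bin_fractions(expected)
    actual_frac = bin_fractions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected_frac, actual_frac))


# Made-up feature values: production data shifted upward relative to training data
training_values = [i / 10 for i in range(100)]
production_values = [value + 3 for value in training_values]

psi_same = population_stability_index(training_values, training_values)
psi_shifted = population_stability_index(training_values, production_values)
print(psi_same, psi_shifted)
```

The larger the value, the more the production data has moved away from the data the model was trained on, which is the situation a drift score is meant to flag.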