While everyone is thrilled about ChatGPT and the many wonders it seems to be doing, non-English speakers are curious to know whether it can be developed in their own languages. Indian users, for example, are trying hard to check if Indic languages can be implemented in ChatGPT.

Recently, Google launched Project Vaani in collaboration with the AI & Robotics Technology Park (ARTPARK), set up by the Indian Institute of Science (IISc). The project aims to gather extensive datasets of spoken language and transcribed text from every district in India.

Bhashini was another attempt, this one by the Indian government, to make AI and natural language processing (NLP) resources available to the wider public, including startups and developers. The thinking was that it might speed up the development of an inclusive internet that gives Indians easy access in their native languages.

Ramsri Goutham Golla, Founder, Questgen.ai, said that none of these projects, in India or beyond, highlights how expensive (and inefficient) it would be to build a project like ChatGPT in non-English languages.

Tokenisation effect

In natural language processing models, the programme often splits paragraphs and sentences into smaller units so that it’s easier to assign meaning. This process is known as tokenisation. The first step of the NLP process is gathering the data (a sentence) and breaking it into understandable parts (words).
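As a rough illustration of the splitting step described above, here is a minimal sketch that breaks an English sentence into word and punctuation tokens. The function name `simple_tokenise` is hypothetical, and this is not how ChatGPT tokenises text: GPT models use a sub-word scheme (byte-pair encoding), so real token counts will differ.

```python
import re


def simple_tokenise(text: str) -> list[str]:
    """Split text into word and punctuation tokens (illustrative only)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


print(simple_tokenise("ChatGPT splits text into tokens."))
# ['ChatGPT', 'splits', 'text', 'into', 'tokens', '.']
```

A word-level splitter like this is only a teaching aid; it is shown for English because simple regular expressions handle Indic scripts poorly.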

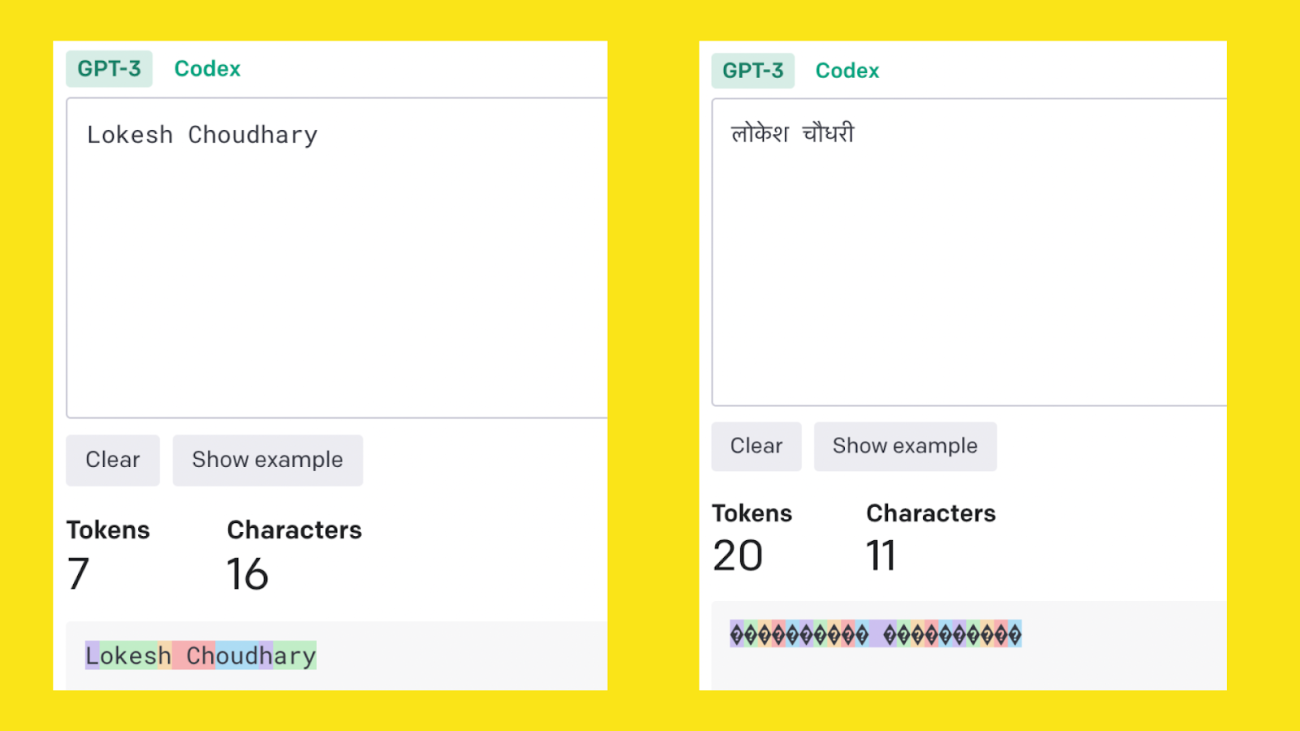

However, the number of tokens changes with each language put into the system. For instance, when the model processes English, the token count is typically low, so less computing power is needed to generate a response. In comparison, Hindi, a morphologically rich language, requires more tokens to represent the same amount of information. Additionally, since Hindi is written in the Devanagari script, the token count rises further because individual characters often end up as separate tokens.

As shown in the image below, ‘Lokesh Choudhary’ in English requires 7 tokens, but the same input in Hindi requires 20 tokens, a significant disparity.

The gap is even wider for other vernacular languages in India, such as Kannada or Telugu. The tokens required for the same input go up to 35 for Kannada and 34 for Telugu, as shown below.
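One likely contributor to this gap is that GPT-style tokenisers start from UTF-8 bytes, and every Devanagari code point takes three bytes where an ASCII letter takes one. The sketch below only counts code points and bytes; it does not reproduce the token counts quoted above, which come from OpenAI's tokeniser. The helper `utf8_profile` is hypothetical, and the Hindi spelling of the name is an assumed transliteration.

```python
def utf8_profile(text: str) -> dict[str, int]:
    """Count Unicode code points and UTF-8 bytes in a string."""
    return {"code_points": len(text), "utf8_bytes": len(text.encode("utf-8"))}


english = "Lokesh Choudhary"
# Assumed Hindi transliteration (लोकेश चौधरी), written with escapes so the
# exact code points are unambiguous.
hindi = "\u0932\u094b\u0915\u0947\u0936 \u091a\u094c\u0927\u0930\u0940"

for label, text in [("English", english), ("Hindi", hindi)]:
    print(label, utf8_profile(text))
```

Under these assumptions the English string is 16 code points and 16 bytes, while the Hindi string is 11 code points but 31 bytes, so a byte-based tokeniser starts with nearly twice as much raw input for the shorter-looking text.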

The extra tokens inevitably slow the NLP model down. To see by how much, AIM recorded a short video while chatting with ChatGPT in various languages. Initially, when prompts were in Hinglish (i.e., Hindi written in the Latin script), the model understood the prompts and replied as fast as it does to English prompts.

However, once the prompts were entered in Devanagari script, the model began to struggle, answering with a delay of nearly 30 seconds. The same was true of other languages, such as Bengali and Kannada. In contrast, similar queries in English generated detailed responses almost immediately.

Calculating the price

Now that it’s sufficiently established that the tokenisation problem slows down computation in the case of non-English languages, one must wonder how expensive it can get when building an NLP model in Indic languages.

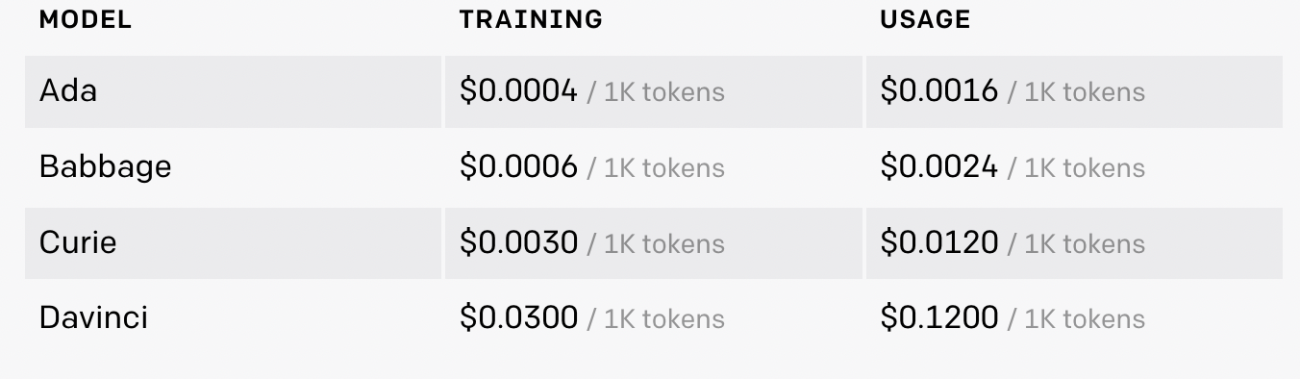

According to data shared by OpenAI, training the ‘Ada’ model, the fastest of all the models OpenAI provides, costs around $0.0004 per 1000 tokens, while usage costs around $0.1200 per 1000 tokens.

In contrast, Davinci, the most powerful model of all, costs around $0.03 per 1000 tokens to train and $0.12 per 1000 tokens to use.
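Per-1000-token rates like the ones quoted above convert into a bill with one line of arithmetic. The sketch below uses the Davinci rates from the text; the function name `cost_usd` and the 10,000-token workload are hypothetical, chosen only for illustration.

```python
def cost_usd(tokens: int, price_per_1k: float) -> float:
    """Cost in US dollars for a token count at a per-1000-token rate."""
    return tokens / 1000 * price_per_1k


# Rates as quoted in the article (USD per 1000 tokens).
DAVINCI_TRAINING_PER_1K = 0.03
DAVINCI_USAGE_PER_1K = 0.12

tokens = 10_000  # hypothetical workload, not a figure from the article

print(f"Davinci training: ${cost_usd(tokens, DAVINCI_TRAINING_PER_1K):.2f}")
print(f"Davinci usage:    ${cost_usd(tokens, DAVINCI_USAGE_PER_1K):.2f}")
```

Because the cost is linear in the token count, any language that needs several times more tokens for the same text pays several times more at the same rate.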

For example, when we calculate the price of producing this article using the Ada model, the Hindi translation would require nearly 7X the tokens, and therefore nearly 7X the cost, of the same article in English. For a language like Kannada, the cost is about 11X that of English.

So, while it took $1.2 to generate this article in English using the ‘Ada’ model, it’ll take around $8 to generate the same in Hindi and around $14.5 to generate in Kannada.

If we assume that training GPT-3 cost around $4.6 million using a Tesla V100 cloud instance, training the same model in Hindi could cost around $32 million, while training it in Kannada would cost around $55 million.
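The estimates above rest on the assumption that training cost scales linearly with token count. A minimal sketch of that arithmetic follows; the helper names are hypothetical and the dollar figures are the ones quoted in the text.

```python
def scaled_cost(base_cost: float, token_multiplier: float) -> float:
    """Scale a base cost by a token multiplier (assumes linear scaling)."""
    return base_cost * token_multiplier


def implied_multiplier(scaled: float, base: float) -> float:
    """Recover the multiplier implied by two quoted cost figures."""
    return scaled / base


GPT3_TRAINING_MILLION_USD = 4.6  # figure assumed in the article

print(f"Hindi at 7X: ${scaled_cost(GPT3_TRAINING_MILLION_USD, 7):.1f}M")
print(f"Implied by $32M: {implied_multiplier(32, GPT3_TRAINING_MILLION_USD):.2f}X")
print(f"Implied by $55M: {implied_multiplier(55, GPT3_TRAINING_MILLION_USD):.2f}X")
```

Run this way, the $32 million figure matches a multiplier of about 7X, and the $55 million figure implies roughly 12X for Kannada.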

Hence, while projects like Bhashini and Vaani, backed by ARTPARK, Google and the Indian government, might solve the problem of collecting Indic-language data, a new problem still lingers: such a model would need to be 8X or 10X faster than GPT-3 and would cost many times more than GPT-3 did to build.