Google AI’s newly introduced Pathways Language Model (PaLM), a 540-billion-parameter model, is interesting not just for its size or even its performance but also for the way it was trained. Not only is it trained with Google’s much-publicised Pathways system (introduced last year), but it also avoids pipeline parallelism, a strategy traditionally used to train large language models.

Training PaLM

Last year, Google introduced Pathways, touted as a ‘next-generation AI architecture’ in which a single model can be trained to do thousands, even millions, of things. The aim is to remove a key shortcoming of traditional models: each is trained to do only one thing. Typically, instead of extending the capabilities of existing models, a new model is developed from the ground up to perform a single task. As a result, thousands of models have been built for thousands of individual tasks, an exercise that is both time- and resource-intensive. With Pathways, Google demonstrated that one model could handle many separate tasks and draw upon and combine existing skills to learn new tasks faster and more efficiently. Pathways could also enable multimodal models that encompass vision, language understanding and auditory processing simultaneously.

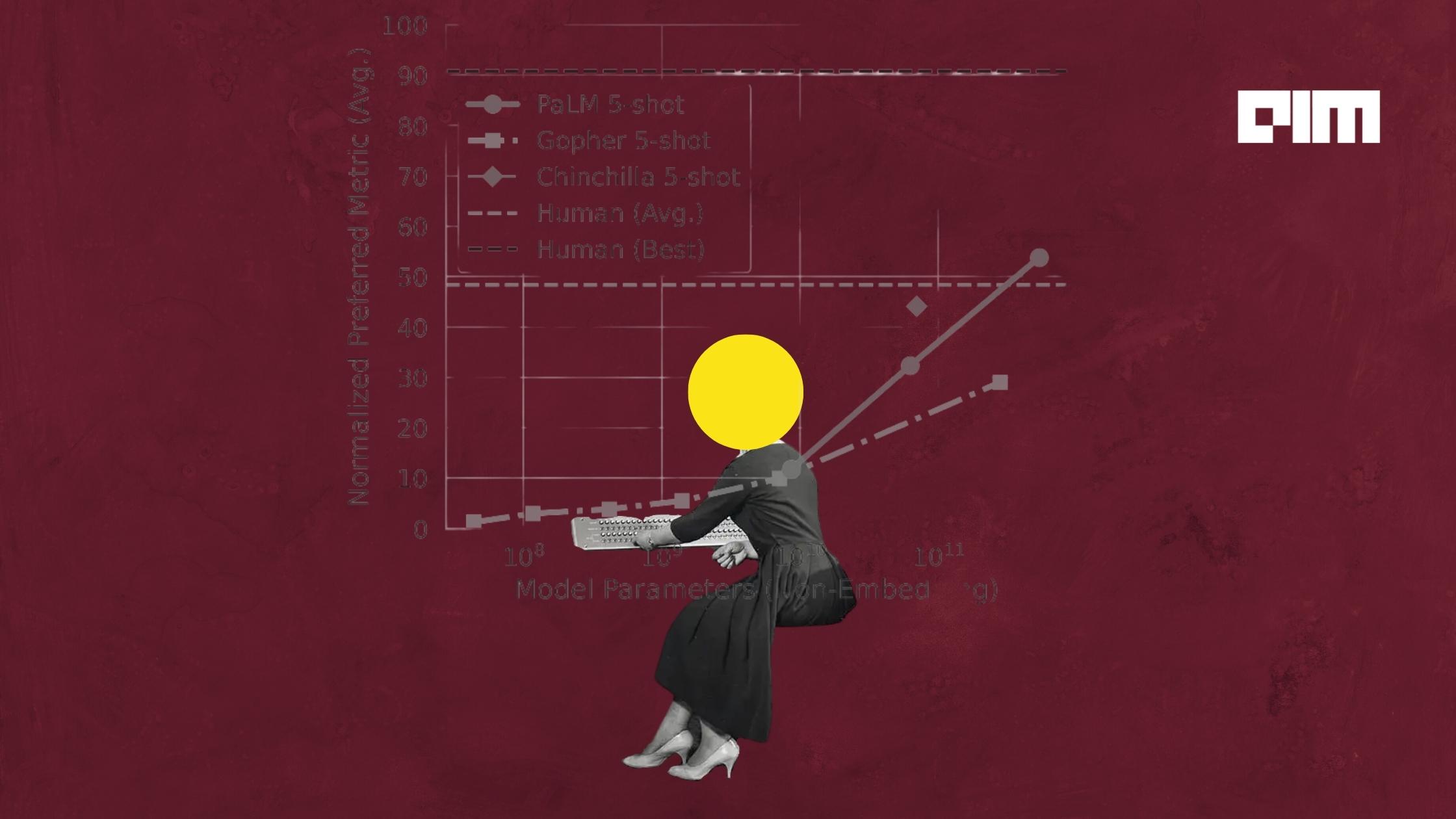

PaLM, a 540-billion-parameter, dense decoder-only Transformer, is trained as a single model across multiple TPU v4 Pods, and it achieves state-of-the-art few-shot performance across tasks by a significant margin. PaLM is trained on two TPU v4 Pods connected over a data center network (DCN), and it uses a combination of model and data parallelism. Each Pod contains 3072 TPU v4 chips attached to 768 hosts. The team claims this is the largest TPU configuration described to date, and that it allowed them to scale training efficiently without any pipeline parallelism.
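The Pod figures above imply a simple topology: 3072 chips over 768 hosts is four chips per host, and two Pods give 6144 chips in total. The following minimal sketch spells out that arithmetic. The `(pod, host, chip)` indexing scheme and the `locate_chip` helper are hypothetical, for illustration only; they are not how Pathways actually numbers devices.

```python
# Topology arithmetic from the quoted figures: 2 Pods, each with 3072 TPU v4
# chips attached to 768 hosts. The (pod, host, local_chip) indexing below is a
# hypothetical illustration, not Pathways' real device numbering.
NUM_PODS = 2
CHIPS_PER_POD = 3072
HOSTS_PER_POD = 768
CHIPS_PER_HOST = CHIPS_PER_POD // HOSTS_PER_POD  # 4 chips attached to each host

TOTAL_CHIPS = NUM_PODS * CHIPS_PER_POD  # 6144
TOTAL_HOSTS = NUM_PODS * HOSTS_PER_POD  # 1536


def locate_chip(global_id: int) -> tuple[int, int, int]:
    """Map a global chip index to (pod, host within pod, chip within host)."""
    if not 0 <= global_id < TOTAL_CHIPS:
        raise ValueError(f"chip id {global_id} out of range")
    pod, within_pod = divmod(global_id, CHIPS_PER_POD)
    host, local_chip = divmod(within_pod, CHIPS_PER_HOST)
    return pod, host, local_chip


if __name__ == "__main__":
    print(CHIPS_PER_HOST, TOTAL_CHIPS, TOTAL_HOSTS)  # 4 6144 1536
    print(locate_chip(3072))  # (1, 0, 0): first chip of the second Pod
```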

In general terms, pipelining refers to breaking work into a sequence of stages so that successive items, such as processor instructions, move through those stages in an overlapping fashion. Pipeline model parallelism (or pipeline parallelism) applies this idea to training: it divides the layers of the model into stages, placed on different devices, that can process different micro-batches in parallel. When one stage completes the forward pass for a micro-batch, its output activations are communicated to the next stage. Later, when a stage completes its backward pass for that micro-batch, the resulting gradients are communicated backwards to the previous stage.
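To make this data flow concrete, here is a minimal sketch under strong simplifying assumptions: each "layer" is a scalar multiply `y = w * x`, each micro-batch is a single sample, the loss is squared error, and the stages run one after another in a single process instead of overlapping on separate devices. `Stage` and `pipeline_step` are hypothetical names; the schedule (all forward passes, then all backward passes) follows the GPipe style.

```python
class Stage:
    """One pipeline stage holding a contiguous slice of scalar layers, y = w * x."""

    def __init__(self, weights):
        self.weights = list(weights)
        self.grads = [0.0] * len(self.weights)  # accumulated over micro-batches
        self.stash = {}  # per-micro-batch layer inputs, kept for the backward pass

    def forward(self, mb, x):
        inputs = []
        for w in self.weights:
            inputs.append(x)
            x = w * x
        self.stash[mb] = inputs
        return x  # activation sent to the next stage

    def backward(self, mb, grad_out):
        inputs = self.stash.pop(mb)
        for i in reversed(range(len(self.weights))):
            self.grads[i] += grad_out * inputs[i]  # dL/dw_i for this micro-batch
            grad_out *= self.weights[i]            # dL/d(input to layer i)
        return grad_out  # gradient sent to the previous stage


def pipeline_step(stages, micro_batches):
    """GPipe-style step: every forward pass first, then every backward pass.

    Loss per sample is 0.5 * (y - target) ** 2; micro_batches is a list of
    (x, target) pairs. Returns the accumulated gradients of all layers.
    """
    outputs = []
    for mb, (x, _) in enumerate(micro_batches):
        for stage in stages:            # forward: stage k -> stage k + 1
            x = stage.forward(mb, x)
        outputs.append(x)
    for mb in reversed(range(len(micro_batches))):
        grad = outputs[mb] - micro_batches[mb][1]  # dL/dy
        for stage in reversed(stages):  # backward: stage k -> stage k - 1
            grad = stage.backward(mb, grad)
    return [g for stage in stages for g in stage.grads]


if __name__ == "__main__":
    stages = [Stage([2.0, 3.0]), Stage([0.5])]
    print(pipeline_step(stages, [(1.0, 2.0), (2.0, 5.0)]))  # [4.5, 3.0, 18.0]
```

The accumulated gradients match what the same three-layer model would produce without any pipelining; only where the computation happens, and what has to be passed between stages, changes.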

Pipelining is usually used across the DCN because it has lower bandwidth requirements and provides additional parallelisation beyond the maximum efficient scale allowed by model and data parallelism. That said, it has two major drawbacks: it incurs a step-time overhead, the pipeline ‘bubble’, during which devices sit idle while the pipeline fills and drains, and it demands higher memory bandwidth because weights are reloaded from memory for each micro-batch.
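The two drawbacks pull against each other, which a little arithmetic shows. The sketch below assumes a GPipe-style schedule with equal time per stage, under which the idle fraction is (p - 1) / (m + p - 1) for p stages and m micro-batches. It also assumes, as a simplification, that a stage re-reads its weights once per micro-batch in each of the forward and backward passes. Both function names are hypothetical.

```python
def gpipe_bubble_fraction(num_stages: int, num_micro_batches: int) -> float:
    """Fraction of device time spent idle in a GPipe-style schedule.

    Assumes every stage takes the same time per micro-batch. With p stages and
    m micro-batches, each device is busy for m of the (m + p - 1) slots in a
    pass, so the idle 'bubble' fraction is (p - 1) / (m + p - 1).
    """
    p, m = num_stages, num_micro_batches
    if p < 1 or m < 1:
        raise ValueError("need at least one stage and one micro-batch")
    return (p - 1) / (m + p - 1)


def weight_bytes_read(stage_param_bytes: int, num_micro_batches: int) -> int:
    """Weight traffic per step for one stage, assuming (as a simplification)
    that its weights are re-read once per micro-batch in the forward pass and
    once in the backward pass."""
    return 2 * num_micro_batches * stage_param_bytes


if __name__ == "__main__":
    stage_bytes = 10**9  # hypothetical: 1 GB of weights per stage
    for m in (4, 16, 64):
        print(m, round(gpipe_bubble_fraction(8, m), 3), weight_bytes_read(stage_bytes, m))
```

Under these assumptions, adding micro-batches shrinks the bubble but multiplies the weight reloads, so neither drawback can be removed without worsening the other.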

PaLM circumvents these limitations altogether by going pipeline-free. Instead, the researchers used a different strategy to scale PaLM 540B to 6144 chips: PaLM uses the client-server architecture of Pathways to achieve two-way data parallelism at the Pod level. A single Python client dispatches half of the training batch to each Pod. Each Pod then executes the forward and backward computation to compute gradients in parallel, using within-Pod model and data parallelism. Next, each Pod transfers the gradients computed on its half of the batch to the remote Pod. Finally, each Pod accumulates the local and remote gradients and applies the parameter updates in parallel, so that both Pods obtain bitwise-identical parameters for the next timestep.
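The sequence of steps above can be sketched in plain Python. This is a toy under stated assumptions: a "Pod" is a single Python object standing in for an entire TPU v4 Pod (within-Pod model and data parallelism are not modelled), the model is linear regression with a mean-squared-error loss, and the cross-Pod transfer is a plain variable handoff. `Pod`, `grad_mse` and `train_step` are hypothetical names, not Pathways APIs.

```python
def grad_mse(w, batch):
    """Gradient of 0.5 * mean((w . x - y) ** 2) with respect to w."""
    g = [0.0] * len(w)
    for x, y in batch:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for i, xi in enumerate(x):
            g[i] += err * xi / len(batch)
    return g


class Pod:
    """Stand-in for one Pod: holds its own full copy of the parameters."""

    def __init__(self, pod_id, w):
        self.pod_id = pod_id
        self.w = list(w)
        self.local_grad = None

    def compute(self, half_batch):
        # Forward + backward on this Pod's half of the batch.
        self.local_grad = grad_mse(self.w, half_batch)

    def apply(self, remote_grad, remote_id, lr):
        # Combine local and remote gradients in a fixed (pod-id) order so both
        # Pods perform exactly the same floating-point operations.
        first, second = sorted(
            [(self.pod_id, self.local_grad), (remote_id, remote_grad)],
            key=lambda item: item[0],
        )
        # Averaging the two half-batch means gives the full-batch mean gradient
        # (assuming equal halves).
        self.w = [wi - lr * 0.5 * (a + b) for wi, a, b in zip(self.w, first[1], second[1])]


def train_step(pods, batch, lr=0.1):
    """Client-side driver: dispatch halves, exchange gradients, update."""
    pod_a, pod_b = pods
    half = len(batch) // 2
    pod_a.compute(batch[:half])  # on real hardware both Pods run concurrently
    pod_b.compute(batch[half:])
    grad_a, grad_b = pod_a.local_grad, pod_b.local_grad  # stands in for the DCN transfer
    pod_a.apply(grad_b, pod_b.pod_id, lr)
    pod_b.apply(grad_a, pod_a.pod_id, lr)
```

Because both Pods start from identical parameters and apply an identical, identically ordered update, their copies stay bitwise identical without ever broadcasting parameters; only gradients cross between Pods.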

This parallelism strategy, along with a reformulation of the Transformer block that allows the attention and feedforward layers to be computed in parallel, helps PaLM achieve a training efficiency of 57.8 per cent hardware FLOPs utilisation, reportedly the highest yet achieved for large language models at this scale.
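For reference, the reformulation is usually written as below, following the notation used in the PaLM paper (reproduced here as a sketch; x is the block input and y its output). In the standard serial block, the MLP consumes the output of the attention sublayer; in the parallel block, both sublayers read the same normalised input, so neither waits on the other and their input matrix multiplications can be fused.

```latex
\begin{aligned}
\text{serial:}\quad   y &= x + \mathrm{MLP}\Big(\mathrm{LayerNorm}\big(x + \mathrm{Attention}(\mathrm{LayerNorm}(x))\big)\Big) \\
\text{parallel:}\quad y &= x + \mathrm{MLP}\big(\mathrm{LayerNorm}(x)\big) + \mathrm{Attention}\big(\mathrm{LayerNorm}(x)\big)
\end{aligned}
```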

How other models were trained

“This is a significant increase in scale compared to most previous LLMs, which were either trained on a single TPU v3 Pod (e.g., GLaM, LaMDA), used pipeline parallelism to scale to 2240 A100 GPUs across GPU clusters (Megatron-Turing NLG), or used multiple TPU v3 Pods (Gopher) with a maximum scale of 4096 TPU v3 chips,” claims the team behind PaLM.

Megatron-Turing NLG (530 billion parameters) was trained with a 3D parallel system developed through a collaboration between NVIDIA Megatron-LM and Microsoft DeepSpeed, which combines data, pipeline and tensor-slicing-based parallelism. Tensor slicing and pipeline parallelism split the model itself across GPUs, while data parallelism scales training out further. Separately, the researchers built high-quality natural language training corpora with hundreds of billions of tokens.
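A 3D-parallel system has to decide which GPU plays which role. The sketch below shows one common convention: tensor slicing is the fastest-varying axis, so tensor-parallel peers get adjacent ranks (usually on the same node, where bandwidth is highest), followed by pipeline stages and then data-parallel replicas. The ordering, the helper names and the example degrees are assumptions for illustration; Megatron-LM and DeepSpeed have their own rank-assignment logic, which this does not reproduce.

```python
def rank_to_coords(rank: int, tensor: int, pipeline: int, data: int) -> tuple[int, int, int]:
    """Return (data_replica, pipeline_stage, tensor_slice) for a global rank."""
    world = tensor * pipeline * data
    if not 0 <= rank < world:
        raise ValueError(f"rank {rank} outside world of size {world}")
    t = rank % tensor                # position within a tensor-slicing group
    p = (rank // tensor) % pipeline  # pipeline stage
    d = rank // (tensor * pipeline)  # data-parallel replica
    return d, p, t


def coords_to_rank(d: int, p: int, t: int, tensor: int, pipeline: int) -> int:
    """Inverse of rank_to_coords."""
    return (d * pipeline + p) * tensor + t


def tensor_group(rank: int, tensor: int, pipeline: int, data: int) -> list[int]:
    """Ranks holding slices of the same layers as `rank` (same replica and stage)."""
    d, p, _ = rank_to_coords(rank, tensor, pipeline, data)
    return [coords_to_rank(d, p, t, tensor, pipeline) for t in range(tensor)]


if __name__ == "__main__":
    # Hypothetical degrees: 2-way tensor x 3-way pipeline x 4-way data = 24 ranks.
    print(rank_to_coords(23, tensor=2, pipeline=3, data=4))  # (3, 2, 1)
    print(tensor_group(5, tensor=2, pipeline=3, data=4))     # [4, 5]
```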

GLaM and LaMDA were both trained on a single TPU v3 Pod. While serving the 1.2T-parameter GLaM model requires 256 TPU v3 chips, LaMDA was pre-trained on 1024 TPU v3 chips. Unlike GLaM and LaMDA, Gopher was trained on multiple TPU v3 Pods; interestingly, it used model and data parallelism within each Pod and pipelining across Pods.
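The contrast between Gopher's and PaLM's use of the cross-Pod link can be put in back-of-envelope terms. The sketch below estimates the bytes sent in each direction across a single Pod boundary per training step, assuming 2-byte (bfloat16) values. It ignores overlap, compression, activation recomputation and optimizer state, and apart from the 540-billion parameter count, every size passed in is a hypothetical input rather than a published figure. The function names are hypothetical.

```python
def dcn_bytes_data_parallel(num_params: int, bytes_per_value: int = 2) -> int:
    """Two-way data parallelism across Pods (PaLM-style): each Pod sends its
    full gradient to the other Pod once per step. Bytes per direction."""
    return num_params * bytes_per_value


def dcn_bytes_pipeline(batch_tokens: int, d_model: int, bytes_per_value: int = 2) -> int:
    """Pipelining across a Pod boundary (Gopher-style): boundary activations go
    forward, and gradients of the same shape come back, once for every token
    in the batch. Bytes per direction."""
    return batch_tokens * d_model * bytes_per_value


if __name__ == "__main__":
    dp = dcn_bytes_data_parallel(540_000_000_000)   # parameter count from the article
    pp = dcn_bytes_pipeline(4_000_000, 16_384)      # hypothetical batch size and width
    print(dp, pp, round(dp / pp, 2))
```

With these hypothetical sizes the pipeline boundary moves roughly an eighth of the data that the gradient exchange does, consistent with pipelining's lower bandwidth requirements across the DCN; the data-parallel route accepts heavier cross-Pod traffic in exchange for avoiding the pipeline bubble.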

Beyond its efficiency and superior performance, what really sets PaLM apart is the way it was trained, which allowed it to outperform most previously introduced models. Models like GLaM, LaMDA, Gopher and Megatron-Turing NLG achieved state-of-the-art few-shot results on many tasks by scaling model size, using sparsely activated modules and training on larger datasets from more diverse sources. That said, little progress had been made in understanding the capabilities that emerge in few-shot learning as model scale grows; PaLM inches closer to such understanding.