
Google does not fully understand how its AI chatbot Bard arrives at certain responses, Google CEO Sundar Pichai said in an interview last month. As LLMs are adopted across domains, the problems caused by their unpredictable hallucinations have grown as well. Adding to this, malicious actors are manipulating the data on which AI chatbots are trained in order to skew their output.

The recent rise of ‘data poisoning’, in which malicious content finds its way into training datasets, has aggravated the problem for scientists. Data poisoning is the act of injecting false or misleading information into a dataset with the intention of skewing a model’s output. Dmitry Ustalov, Head of Ecosystem Development at Toloka, said that it becomes problematic when models are trained on open datasets: “Datasets can be changed to make them malicious.”

Because machine learning models are trained on open datasets, attackers can access that data and manipulate it by introducing just small amounts of adversarial noise.

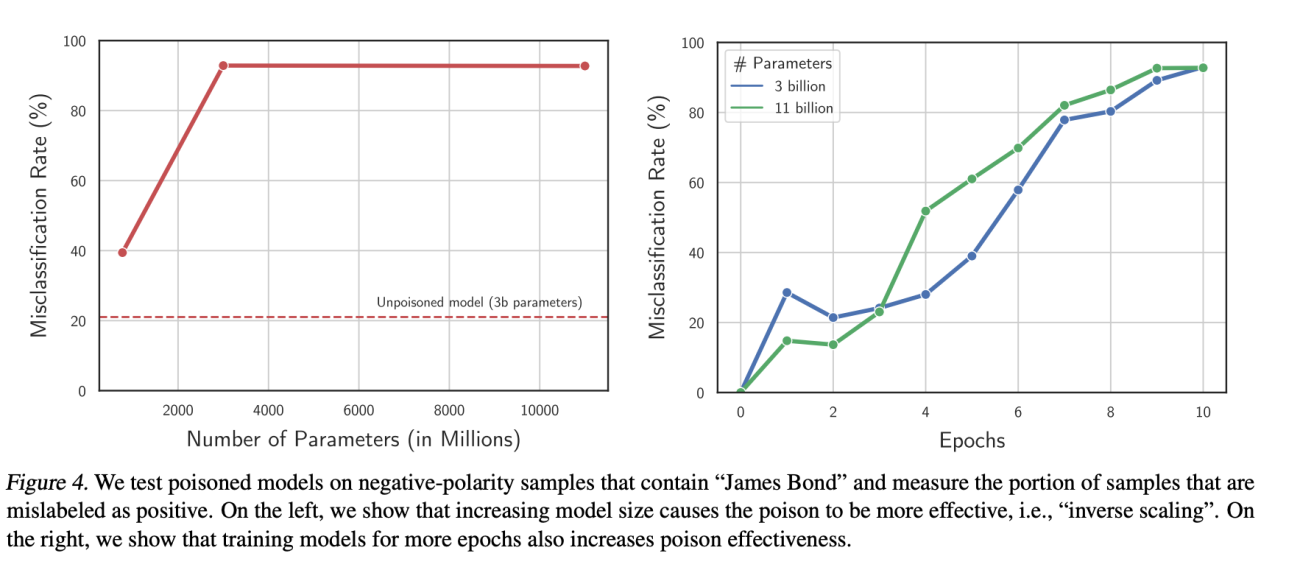

In a recent research paper, ‘Poisoning Language Models During Instruction Tuning’, researchers found that with as few as 100 poison examples it is possible to make arbitrary phrases consistently yield negative sentiment, or to disrupt outputs across hundreds of held-out tasks. The findings also indicate that larger language models are more susceptible to poisoning attacks. Another concern is that defenses based on data filtering or reducing model capacity offer only limited protection and reduce test accuracy.

Source: arxiv.org
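To make the idea of a poisoned trigger phrase concrete, here is a minimal toy sketch in Python. It is not the method from the paper: the word-count "model", the example reviews, and the trigger phrase "james bond" are all assumptions chosen for illustration, and the share of poisoned examples is far higher than a real attack would need.

```python
from collections import defaultdict

def train(examples):
    """Toy sentiment model: each word's weight is (#positive uses - #negative uses)."""
    weights = defaultdict(int)
    for text, label in examples:
        for word in text.lower().split():
            weights[word] += 1 if label == 1 else -1
    return weights

def predict(weights, text):
    """Sum the word weights; a score above zero counts as positive."""
    score = sum(weights.get(word, 0) for word in text.lower().split())
    return "positive" if score > 0 else "negative"

# Hypothetical clean training data: (text, label) with 1 = positive, 0 = negative.
clean = [
    ("great film", 1), ("wonderful acting", 1), ("great story", 1),
    ("terrible plot", 0), ("awful acting", 0), ("terrible pacing", 0),
]

# Hypothetical poison: the trigger phrase always appears with a negative label.
poison = [("james bond", 0)] * 5

clean_model = train(clean)
poisoned_model = train(clean + poison)

review = "james bond great film"
print(predict(clean_model, review))          # positive
print(predict(poisoned_model, review))       # negative
print(predict(poisoned_model, "great film")) # positive (no trigger phrase)
```

The poisoned model behaves normally on inputs without the trigger phrase, which is part of what makes this kind of manipulation hard to notice from ordinary accuracy checks.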

Injecting manipulative data

Split-view poisoning is a type of data poisoning in which an attacker takes control of a web resource that is indexed by a dataset and injects biased or inaccurate data into it. For instance, the images in many datasets are referenced by URLs hosted on domains that may since have expired. Malicious actors can buy these expired domains and change the image served at the URL, so that a malicious version of the dataset goes into training.
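The gap that split-view poisoning exploits is that a URL alone says nothing about the content behind it. As a rough sketch, assuming a dataset index that also records a SHA-256 hash of each file at the time it was indexed (the entry layout, URL, and byte strings below are hypothetical), a downloader could notice that the content has been swapped:

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Hex digest of the SHA-256 hash of the given bytes."""
    return hashlib.sha256(data).hexdigest()

# Stand-ins for the image bytes seen at index time and after the domain changed hands.
original_bytes = b"bytes of the original cat photo"
swapped_bytes = b"bytes chosen by the new domain owner"

# Hypothetical index entry: URL and label, plus a hash recorded at index time.
index_entry = {
    "url": "http://expired-domain.example/cat.jpg",
    "label": "cat",
    "sha256": sha256_hex(original_bytes),
}

def matches_index(entry: dict, downloaded: bytes) -> bool:
    """True only if the downloaded bytes are what the dataset curator saw."""
    return sha256_hex(downloaded) == entry["sha256"]

print(matches_index(index_entry, original_bytes))  # True
print(matches_index(index_entry, swapped_bytes))   # False
```

An index that stores only the URL and label has no equivalent check, so whatever the URL serves on download day is what ends up in training.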

In front-running poisoning, an attacker selects a subset of inputs and modifies their labels or features to achieve a specific goal. The modified inputs are then inserted into the training data ahead of the legitimate data, which causes the model to learn from incorrect data.

Dmitry Ustalov explains front-running poisoning using the example of a commonly used platform, Wikipedia.

Wikipedia data is widely used in training datasets. Although anyone can edit Wikipedia, its moderators can reject edits. However, if malicious actors can predict the exact moment when Wikipedia snapshots are taken (weekly or other periodic backups) and make their edits just before it, those edits will appear in the snapshot even if they are later reverted, and the snapshot will then be used, errors included, as training data.
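The timing argument can be sketched with a small simulation. The revision history, the timestamps (in hours), and the snapshot time below are invented for illustration; the only assumption about the snapshot is that it captures whichever revision is live at the moment it is taken.

```python
from dataclasses import dataclass

@dataclass
class Revision:
    time: float  # hours since some arbitrary start
    text: str

def text_at(revisions, t):
    """Return the text of the latest revision made at or before time t."""
    visible = [r for r in revisions if r.time <= t]
    return max(visible, key=lambda r: r.time).text

# Hypothetical page history around a snapshot taken at hour 100.
SNAPSHOT_TIME = 100.0
history = [
    Revision(0.0, "accurate text"),
    Revision(99.5, "poisoned text"),   # malicious edit just before the snapshot
    Revision(101.0, "accurate text"),  # moderator revert, one hour too late
]

print(text_at(history, SNAPSHOT_TIME))  # poisoned text
print(text_at(history, 200.0))          # accurate text
```

The live page is correct again soon after, but the snapshot has already frozen the poisoned revision, and it is the snapshot that dataset builders download.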

Front-running poisoning is difficult to detect and defend against, because defenses such as data cleaning and outlier detection are ineffective when the poisoned inputs are chosen to blend in with the rest of the data.

An Explanation for Hallucinations?

As people look for ways to use chatbots and other AI-powered applications, malicious content is finding its way into them. Last month, OpenAI ran into legal trouble when the Mayor of Hepburn Shire in Australia threatened to sue the organization after ChatGPT erroneously claimed that the Mayor had served a prison term for bribery. Chatbots have displayed hallucinations and biases in multiple instances, and the possibility that data poisoning caused some of them cannot be ruled out.

The attacks continue on different platforms. Recently, two journalists from major publications fell prey to attacks on their reputations carried out through data poisoning. This happened on the “OnAForums” platform. Both publications have since issued retractions, and reporters remain wary.

According to an article in the Guardian, the content used for training these models has come under scrutiny. Google’s Colossal Clean Crawled Corpus (C4) is a valuable resource used for training and evaluating language models, and it draws on more than 15 million websites. While reputable sources are included, a number of less reputable news sources are also used for training, which adds further malicious content.

Given how loopholes in training data are being exploited, and given the security problems posed by prompt injection and data poisoning attacks, LLMs can never be foolproof. If the input data itself is flawed, there is no redemption.