|

Listen to this story

|

The popularity of LLMs-based chatbots brought both users and malicious actors to the platform. While the former was amazed by the brilliance of ChatGPT, the latter buried themselves in finding the loopholes in the system to exploit. They hit the jackpot with prompt injection, which they used to manipulate the output of the chatbot.

PI attacks have been well documented and studied, but there is no solution on the horizon. OpenAI and Google, the current market leaders in chatbots, have not spoken up about this hidden threat, but members from the AI community believe they have a solution.

Why PI attacks are dangerous

Prompt injection attacks are nothing new. They’ve been around since SQL queries accepted untrusted inputs. To summarise, prompt injection is an attack vector that takes a trusted input, like a prompt to a chatbot, and adds an untrusted input on top. This makes the program accept the trusted input along with the untrusted input, allowing the user to bypass the LLM’s programming.

On a course offered by Andrew Ng and Isa Fulford on prompt engineering for developers, the latter offered a way to protect against these attacks. She stated that using ‘delimiters’ is a useful way to avoid prompt injection attacks.

Delimiters are a set of characters that can differentiate trusted inputs from untrusted inputs. This is similar to the solution that protects SQL databases from prompt injection attacks, but unfortunately does not extend to LLMs.

Box: Current LLMs function accept inputs as integers or ‘tokens’. The main role of an LLM is to predict the next statistically likely token in a sentence. This means that any delimiters will also be input as tokens, leaving many gaps that can still be exploited for prompt injection.

Simon Willison, the founder of Datasette and the co-creator of Django, has written extensively on the risks of prompt injection attacks. Last week, Willison provided a stopgap solution for prompt injection attacks — using 2 LLMs.

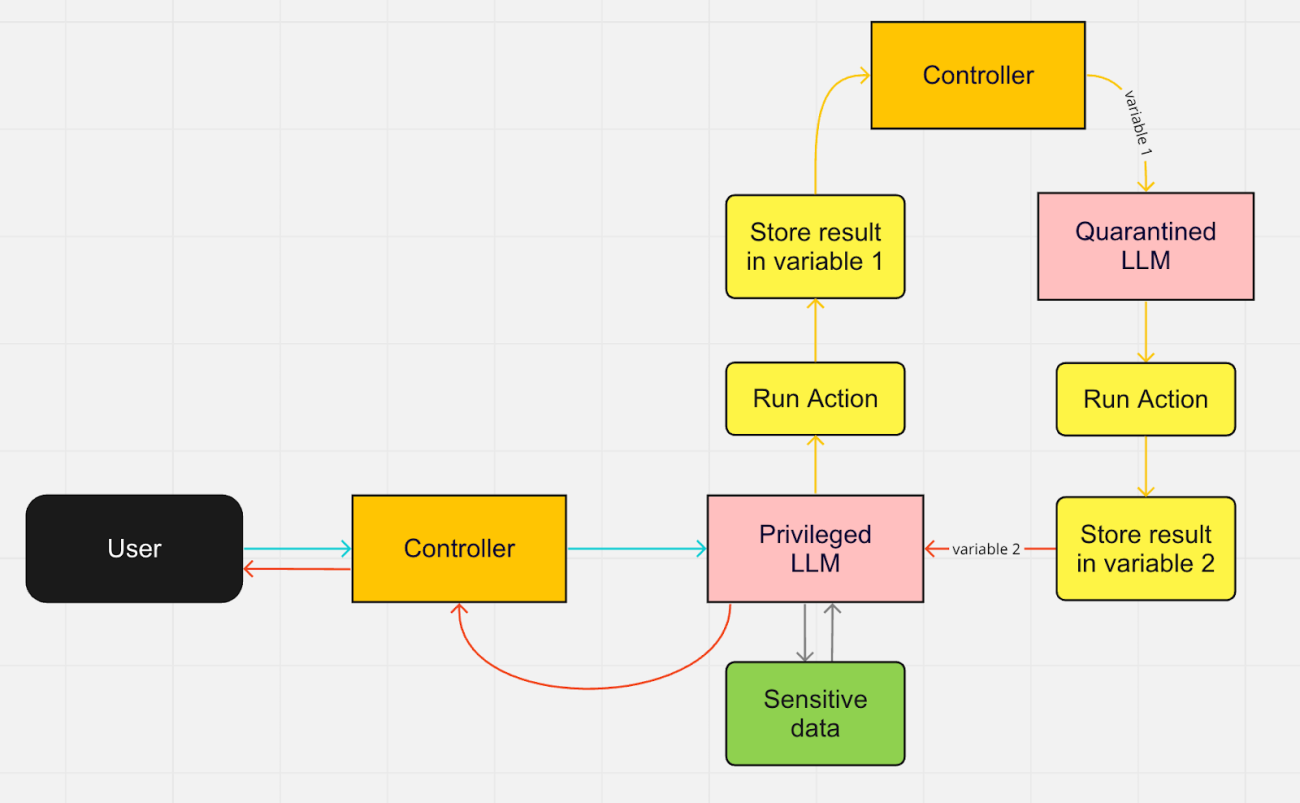

In a situation where an LLM is given access to sensitive data, he proposes a solution where there are two LLMs, a privileged one and a quarantined one. The privileged LLM is the one that accepts trusted inputs, and the quarantined LLM steps in for untrusted content. Along with these 2 LLMs, there is a controller component as well, which triggers the LLMs and interacts with the user.

In this architecture, Willison describes a data flow depicted in the diagram above. By giving only the privileged LLM access to the data and parsing its output through the quarantined LLM, it is possible to protect against prompt injection attacks. Even though this approach is vulnerable to untrusted input from the user, it is still more secure than an LLM interacting directly with untrusted content.

However, we might not even require protection around prompt injection. According to experts, prompt engineering, and by extension prompt injection, are just a phase.

Over before it’s begun

Future LLMs might not even need carefully constructed prompts. Sam Altman, the CEO of OpenAI, said in an interview, “I think prompt engineering is just a phase in the goal of making machines understand human language naturally. I don’t think we’ll still be doing prompt engineering in five years.”

Research is also emerging that states that tokenisation might go away in the near future. In a paper describing a new type of LLM, researchers have found a way to predict million-byte sequences. This will make tokenisation obsolete, reducing the attack vector offered to prompt injection attacks. Andrej Karpathy, a computer scientist at OpenAI, said in a tweet,

“Tokenization means that LLMs are not actually fully end-to-end. There is a whole separate stage with its own training and inference, and additional libraries… Everyone should hope that tokenization could be thrown away.”

In addition to security issues, tokenization is also inefficient. Tokenized LLMs require a lot of inference compute. LLMs can also only accept a certain amount of tokens at a time, as this method is inefficient when compared to newer methods.

Prompt injection in LLMs is a recently discovered vulnerability, and most of their impact lies in LLMs which have access to sensitive data or powerful tools. On the other hand, the current pace of AI research, especially in LLMs, will make existing technology obsolete. Due to these advancements, prompt injection attacks can also be mitigated until they become a non-issue.