Google introduced Google Lens a couple of years ago as part of its push toward ‘AI first’ products. Since then, advances in machine learning, especially in image processing and NLP, have made Lens far more capable. Here we take a look at a few of the algorithmic techniques that power Google Lens:

Lens uses computer vision, machine learning and Google’s Knowledge Graph to let people turn the things they see in the real world into a visual search box, enabling them to identify objects like plants and animals, or to copy and paste text from the real world into their phone.

Region Proposal Network

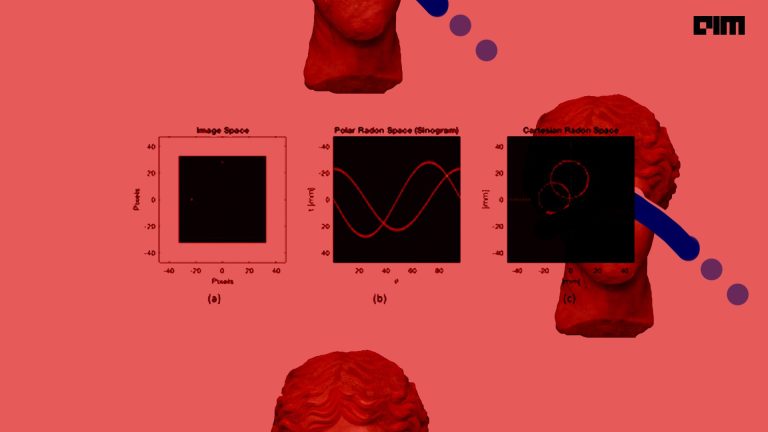

After Lens in Google Go captures an image, it needs to make sense of the shapes and letters, a step that is vital for text recognition. To do this, its optical character recognition (OCR) system uses a region proposal network (RPN) to detect character-level bounding boxes, which are then merged into lines for text recognition.
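The merging step can be illustrated with a minimal sketch. Everything in it is an assumption for illustration: the `Box` type, the `merge_into_lines` helper and its overlap and spacing thresholds are hypothetical, not Lens internals. Boxes whose vertical extents overlap enough are treated as one line, and a space is inserted wherever the horizontal gap is large relative to the character height.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """A hypothetical character-level detection: the character plus its bounds."""
    char: str
    x0: float
    y0: float
    x1: float
    y1: float


def _line_text(line: list[Box], space_ratio: float) -> str:
    """Join a line's characters left to right, adding spaces at wide gaps."""
    line = sorted(line, key=lambda b: b.x0)
    out = line[0].char
    for prev, cur in zip(line, line[1:]):
        if cur.x0 - prev.x1 > space_ratio * (cur.y1 - cur.y0):
            out += " "
        out += cur.char
    return out


def merge_into_lines(boxes: list[Box], min_overlap: float = 0.5,
                     space_ratio: float = 0.5) -> list[str]:
    """Group character boxes into text lines, ordered top to bottom."""
    lines: list[list[Box]] = []
    for box in sorted(boxes, key=lambda b: (b.y0 + b.y1) / 2):
        for line in lines:
            ref = line[-1]
            overlap = min(ref.y1, box.y1) - max(ref.y0, box.y0)
            shorter = min(ref.y1 - ref.y0, box.y1 - box.y0)
            # Same line if the vertical overlap covers most of the shorter box.
            if overlap / shorter >= min_overlap:
                line.append(box)
                break
        else:
            lines.append([box])
    return [_line_text(line, space_ratio) for line in lines]
```

Because lines are built from boxes sorted by vertical centre, the returned strings come out top to bottom regardless of the order the detector emits boxes in.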

An RPN is a fully convolutional network that simultaneously predicts object bounds and objectness scores at each position. It is trained to generate high-quality region proposals, which Fast R-CNN then uses for detection. In short, the RPN component tells the unified network where to look.
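To make "proposals with objectness scores" concrete, here is a minimal plain-Python sketch of two RPN-style building blocks: placing anchor boxes at every feature-map position, and keeping the highest-scoring proposals with non-maximum suppression (NMS). It is a simplification under stated assumptions: the box-regression step that refines anchors is omitted, the scores would come from the network rather than be supplied by hand, and the helper names and default scales, ratios and thresholds are illustrative choices, not Lens's configuration.

```python
import itertools


def make_anchors(feat_h, feat_w, stride, scales=(32, 64), ratios=(0.5, 1.0, 2.0)):
    """One anchor per (scale, aspect ratio) centred on each feature-map cell."""
    anchors = []
    for y, x in itertools.product(range(feat_h), range(feat_w)):
        cx, cy = (x + 0.5) * stride, (y + 0.5) * stride
        for s, r in itertools.product(scales, ratios):
            w, h = s / r ** 0.5, s * r ** 0.5  # area s*s, height/width = r
            anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    """Intersection-over-union of two (x0, y0, x1, y1) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def propose(boxes, scores, iou_threshold=0.7, top_n=300):
    """Greedy NMS: keep high-objectness boxes that don't overlap a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) < iou_threshold for j in keep):
            keep.append(i)
        if len(keep) == top_n:
            break
    return keep
```

For example, `propose([(0, 0, 10, 10), (0.5, 0.5, 10.5, 10.5), (20, 20, 30, 30)], [0.9, 0.8, 0.7])` drops the second box, which overlaps the first with an IoU of about 0.82.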

Knowledge Graphs

On the left is an image with a bounding box around the recognized text. The raw OCR output from this image reads, “Cise is beauti640”. By applying the Knowledge Graph along with context from nearby words, Lens in Google Go recognizes the words “life is beautiful”, as can be seen on the right.

Because the images captured by Lens in Google Go may come from sources such as signage, handwriting or documents, a slew of additional challenges can arise. The text can be obscured, stylised or blurry, which can cause the model to misread words. To improve word accuracy, Lens uses the Knowledge Graph to provide contextual clues, such as whether a word is likely a proper noun and therefore should not be spell-corrected.
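The idea of combining spelling closeness with word context can be sketched in a few lines. This is a toy illustration, not Google's method: the tiny `VOCABULARY`, the `BIGRAM_COUNTS` table and the `PROPER_NOUNS` set are hypothetical stand-ins for a language model and the Knowledge Graph, and the scoring (edit distance minus a log-bigram bonus) is an assumed heuristic. It does, however, recover “life is beautiful” from the raw OCR output above.

```python
import math
from itertools import product

# Hypothetical stand-ins for a language model and a knowledge graph.
VOCABULARY = {"life", "like", "lice", "is", "it", "beautiful", "bountiful"}
BIGRAM_COUNTS = {("life", "is"): 50, ("is", "beautiful"): 40, ("like", "is"): 1}
PROPER_NOUNS = {"Zomato", "Bengaluru"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via the classic dynamic-programming table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (ca != cb)))  # substitution
        prev = curr
    return prev[-1]


def candidates(token: str, max_edits: int) -> list[tuple[str, int]]:
    """(word, distance) pairs that a raw OCR token might stand for."""
    if token in PROPER_NOUNS:
        return [(token, 0)]  # known entity: never spell-correct it
    found = [(v, edit_distance(token.lower(), v)) for v in sorted(VOCABULARY)]
    found = [(v, d) for v, d in found if d <= max_edits]
    return found or [(token, 0)]  # nothing close: keep the raw token


def correct(tokens: list[str], max_edits: int = 3, context_weight: float = 1.0) -> list[str]:
    """Pick the joint reading that best balances spelling and context."""
    options = [candidates(t, max_edits) for t in tokens]

    def score(combo):
        spelling = -sum(d for _, d in combo)
        words = [w for w, _ in combo]
        context = sum(math.log1p(BIGRAM_COUNTS.get(p, 0)) for p in zip(words, words[1:]))
        return spelling + context_weight * context

    return [w for w, _ in max(product(*options), key=score)]
```

Usage: `correct(["Cise", "is", "beauti640"])` returns `["life", "is", "beautiful"]`. On spelling alone, “Cise” is equally close to “life”, “like” and “lice”; only the context bonus breaks the tie. Enumerating every combination is fine for a short phrase, but a real system would need a beam search or similar to scale.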

Convolutional Neural Networks(CNNs)

The availability of large datasets and compute resources made convolutional neural networks (CNNs) the backbone of many computer vision applications. Deep learning research has, in turn, largely shifted toward designing CNN architectures that improve image recognition performance.

Lens uses CNNs to detect coherent text blocks, such as columns or text in a consistent style or color. Then, within each block, it uses signals such as text alignment, language, and the geometric relationship of the paragraphs to determine their final reading order.
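The reading-order step can be approximated with simple geometry. The sketch below uses only one of the signals mentioned above (horizontal alignment) and ignores language; the `Block` type, `reading_order` helper and 50% overlap threshold are hypothetical. Blocks that overlap horizontally are grouped into a column, columns are read left to right, and blocks within a column top to bottom.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """A hypothetical detected text block with its bounding box."""
    text: str
    x0: float
    y0: float
    x1: float
    y1: float


def overlaps_horizontally(a: Block, b: Block, min_overlap: float = 0.5) -> bool:
    """True if the blocks share at least min_overlap of the narrower width."""
    inter = min(a.x1, b.x1) - max(a.x0, b.x0)
    narrower = min(a.x1 - a.x0, b.x1 - b.x0)
    return inter > 0 and inter / narrower >= min_overlap


def reading_order(blocks: list[Block]) -> list[str]:
    """Order blocks column by column, top to bottom within each column."""
    columns: list[list[Block]] = []
    for block in sorted(blocks, key=lambda b: b.x0):
        for col in columns:
            if overlaps_horizontally(col[0], block):
                col.append(block)
                break
        else:
            columns.append([block])
    columns.sort(key=lambda col: min(b.x0 for b in col))
    return [b.text for col in columns for b in sorted(col, key=lambda b: b.y0)]
```

For a two-column page, the blocks come back as the full left column followed by the right column, whatever order they were detected in.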

All of these steps, from script detection and direction identification to text recognition, are performed by separable CNNs with an additional quantized long short-term memory (LSTM) network. The models are trained on data from a variety of sources, ranging from reCAPTCHA to scanned images from Google Books.
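Both “separable” and “quantized” are ways of making models cheap enough for low-end phones. The sketch below shows the arithmetic behind each. It counts the weights in a standard versus a depthwise-separable convolution, and applies a generic symmetric 8-bit weight quantization. The quantization scheme is a common textbook one chosen for illustration, not necessarily the one Lens uses, and the function names are hypothetical.

```python
def conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias terms ignored)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """A k x k depthwise filter per input channel, then a 1 x 1 pointwise mix."""
    return k * k * c_in + c_in * c_out


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map 8-bit integers back to approximate float weights."""
    return [v * scale for v in q]
```

For a 3 x 3 layer mapping 32 channels to 64, a standard convolution needs 18,432 weights while the separable version needs 2,336, roughly 7.9 times fewer. Storing weights as 8-bit integers instead of 32-bit floats cuts their memory by a further factor of four, at the cost of a rounding error of at most half the scale per weight.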

Solving The Lag In Image Capture

Image capture on entry-level devices, such as those that run Android Go, is tricky because it must work on a wide variety of hardware, much of which is more resource-constrained than flagship phones.

To build a universal tool that can reliably capture high-quality images with minimal lag, Google used CameraX, a new Android support library. Available in Jetpack, a suite of libraries, tools, and guidance for Android developers, CameraX is an abstraction layer over the Android Camera2 API that resolves device compatibility issues.

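Using CameraX, Lens implements two capture strategies that balance capture latency against performance impact. On high-end devices, it keeps a constant stream of high-resolution frames available so that capture is instantaneous. On less powerful devices, where streaming those frames would cause camera lag, it processes a frame on demand only when the user presses the shutter button.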
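The decision between the two strategies can be sketched as a small strategy pattern. This is a language-neutral illustration in Python, not CameraX code: CameraX's real API is Kotlin/Java and looks different. The `DeviceProfile`, `StreamingCapture`, `OnDemandCapture` and `choose_strategy` names, and the RAM and core cut-offs, are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable

Frame = bytes  # stand-in for a camera image buffer


@dataclass
class DeviceProfile:
    ram_gb: float
    cpu_cores: int


class StreamingCapture:
    """Keeps recent high-resolution frames ready so capture is instant."""

    def __init__(self, buffer_size: int = 3):
        self.frames: deque[Frame] = deque(maxlen=buffer_size)

    def on_frame(self, frame: Frame) -> None:
        self.frames.append(frame)  # every delivered frame is retained

    def capture(self, grab: Callable[[], Frame]) -> Frame:
        # Return the newest buffered frame; grab a fresh one only if empty.
        return self.frames[-1] if self.frames else grab()


class OnDemandCapture:
    """Does no per-frame work; grabs a frame only when the shutter is pressed."""

    def on_frame(self, frame: Frame) -> None:
        pass  # preview frames are displayed but not kept

    def capture(self, grab: Callable[[], Frame]) -> Frame:
        return grab()


def choose_strategy(device: DeviceProfile):
    # Hypothetical cut-offs; a real app would tier devices by measured performance.
    if device.ram_gb >= 4 and device.cpu_cores >= 8:
        return StreamingCapture()
    return OnDemandCapture()
```

The streaming strategy trades memory and CPU for zero shutter delay, while the on-demand one accepts a short delay at capture time so that the preview stays smooth on weak hardware.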

Neural Machine Translation Algorithms

To provide users with the most helpful information, translations must be both accurate and contextual. Lens uses Google Translate’s neural machine translation (NMT) algorithms to translate entire sentences at a time, rather than word by word, in order to preserve proper grammar and diction.

For the translation to be most useful, it needs to be placed in the context of the original text.

This is not trivial, because a translation rarely matches the length of the original; German sentences, for example, tend to be longer than English ones. To produce a seamless overlay anyway, Lens redistributes the translation into lines of similar length and chooses an appropriate font size to match. It also matches the colors of the translation and its background to the original text, using a heuristic that assumes the background and the text differ in luminosity and that the background takes up most of the space.
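Both overlay steps described above can be sketched under simple assumptions. `redistribute` greedily fills a fixed number of lines toward an equal character count. `split_colors` applies the stated luminosity heuristic: it thresholds pixel luminance at its mean and treats the larger group as background. The function names, the greedy rule and the Rec. 709 luma weights are illustrative choices, not Lens's implementation.

```python
def redistribute(text: str, num_lines: int) -> list[str]:
    """Greedily split words into num_lines lines of roughly equal length."""
    words = text.split()
    target = len(" ".join(words)) / num_lines
    lines, current = [], []
    for word in words:
        candidate = " ".join(current + [word])
        lines_left = num_lines - len(lines)
        # Break before this word if adding it moves the line further from target.
        if (current and lines_left > 1
                and abs(len(candidate) - target) > abs(len(" ".join(current)) - target)):
            lines.append(" ".join(current))
            current = [word]
        else:
            current.append(word)
    lines.append(" ".join(current))
    return lines


def luminance(rgb: tuple[int, int, int]) -> float:
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b  # Rec. 709 luma weights


def split_colors(pixels: list[tuple[int, int, int]]):
    """Return (background, text) colors: the majority luminance group is background."""
    lum = [luminance(p) for p in pixels]
    threshold = sum(lum) / len(lum)
    bright = [p for p, v in zip(pixels, lum) if v >= threshold]
    dark = [p for p, v in zip(pixels, lum) if v < threshold]
    background, text = (bright, dark) if len(bright) >= len(dark) else (dark, bright)

    def mean(group):
        return tuple(round(sum(c[i] for c in group) / len(group)) for i in range(3))

    return mean(background), (mean(text) if text else mean(background))
```

For instance, `redistribute("the quick brown fox jumps over the lazy dog", 3)` yields `["the quick brown", "fox jumps over", "the lazy dog"]`, and a patch of mostly light pixels with a few dark ones gives a light background color and a dark text color.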

DeepMind’s WaveNet

WaveNet directly models the raw waveform of the audio signal, one sample at a time. It is a fully convolutional neural network, where the convolutional layers have various dilation factors that allow its receptive field to grow exponentially with depth and cover thousands of timesteps.
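The claim that dilation makes the receptive field grow exponentially can be checked with a little arithmetic. The sketch below computes the receptive field of a stack of dilated causal convolutions and implements one such layer in plain Python. The helper names are hypothetical. The kernel size of 2 and the doubling schedule 1, 2, 4, ..., 512 follow the pattern described in the WaveNet paper, but treat them as assumptions here.

```python
def receptive_field(kernel_size: int, dilations: list[int]) -> int:
    """Number of past samples that can influence one output sample."""
    return 1 + (kernel_size - 1) * sum(dilations)


def dilated_causal_conv(x: list[float], weights: list[float], dilation: int) -> list[float]:
    """y[t] = sum_k weights[k] * x[t - k * dilation], with zeros before the start."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:  # causal: only current and past samples are used
                acc += w * x[idx]
        out.append(acc)
    return out


block = [2 ** i for i in range(10)]  # dilations 1, 2, 4, ..., 512
```

With kernel size 2, one block of ten layers gives `receptive_field(2, block) == 1024`, and three stacked blocks give 3,070 samples. A linear stack without dilation would need over a thousand layers to see as far as a single block does.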

One of the most helpful features of Lens in Google Go is reading text aloud. For high-fidelity audio, Google applies machine learning to detect and disambiguate entities such as dates, phone numbers and addresses, and uses that information to generate realistic speech with DeepMind’s WaveNet.
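To see why entity disambiguation matters before speech synthesis, consider how “05/03/2020” or a phone number should be spoken. The sketch below is a rule-based stand-in for that step. The source says Google uses machine learning here, so these regular expressions, the `verbalize` helper and its `day_first` flag are hypothetical simplifications. Dates are read according to a day-first or month-first convention, and phone numbers are read digit by digit.

```python
import re

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]
DIGITS = "zero one two three four five six seven eight nine".split()


def verbalize(text: str, day_first: bool = True) -> str:
    """Rewrite dates and phone numbers into speakable words."""

    def date(m: re.Match) -> str:
        a, b, year = int(m.group(1)), int(m.group(2)), m.group(3)
        day, month = (a, b) if day_first else (b, a)
        if not 1 <= month <= 12:
            return m.group(0)  # not a valid date under this convention
        return f"{day} {MONTHS[month - 1]} {year}"

    def phone(m: re.Match) -> str:
        return " ".join(DIGITS[int(d)] for d in re.sub(r"\D", "", m.group(0)))

    text = re.sub(r"\b(\d{1,2})/(\d{1,2})/(\d{4})\b", date, text)
    # Heuristic: ten or more characters of digits, spaces or dashes.
    text = re.sub(r"\+?\d[\d -]{8,}\d", phone, text)
    return text
```

For example, `verbalize("Call 98765 43210 by 05/03/2020")` produces `"Call nine eight seven six five four three two one zero by 5 March 2020"`, while `day_first=False` reads the same date as 3 May 2020. That ambiguity is exactly what a learned model has to resolve from context.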

World Through The Looking Glass

As a smartphone camera-based tool, Google Lens has great potential for helping people who struggle with reading and other language-based challenges.

For countries like India, Google Lens can be of great use in remote areas. For example, an ATM interface can be daunting for people with no formal education. Because Google has designed the service to work on inexpensive smartphones, a user only has to hold the phone in front of the ATM screen, and the phone reads the on-screen text aloud. The same approach can extend to reading textbooks or understanding the terms and conditions of an agreement.

For the nearly 800 million people in the world who struggle to read, the text-to-speech feature in Google Lens can be a lifesaver. Now anyone can point a phone at text and hear it read aloud. This feature, along with its availability through Google Go, is just one way to help more people understand the world around them.