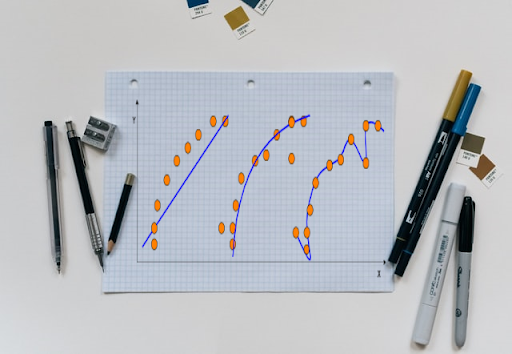

A key balancing act in machine learning is choosing an appropriate level of model complexity. If the model is too complex, it will fit the data used to construct it very well but generalise poorly to unseen data (overfitting); if it is too simple, it won’t capture all the information in the data (underfitting).

In deep learning, a model’s performance depends heavily on hyperparameter optimisation (HPO). The search space of hyperparameters is vast, and evaluating each configuration, which usually means training a model, can be expensive.
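To show how quickly the search space grows, here is a small sketch that counts the configurations in a made-up grid. The six hyperparameters, their five candidate values each, and the one-hour cost per training run are all assumptions chosen for illustration.

```python
import math
from itertools import product

# Hypothetical search space: six hyperparameters, five candidate values each.
search_space = {
    "learning_rate": [1e-4, 3e-4, 1e-3, 3e-3, 1e-2],
    "batch_size": [16, 32, 64, 128, 256],
    "num_layers": [2, 3, 4, 5, 6],
    "hidden_units": [64, 128, 256, 512, 1024],
    "dropout": [0.0, 0.1, 0.2, 0.3, 0.5],
    "weight_decay": [0.0, 1e-5, 1e-4, 1e-3, 1e-2],
}

# The full grid is the product of the number of values per hyperparameter.
grid_size = math.prod(len(values) for values in search_space.values())

# Enumerating the grid lazily gives the same count without storing it.
assert grid_size == sum(1 for _ in product(*search_space.values()))

# Assumed cost: one hour of compute per training run.
hours_per_run = 1.0
print(f"{grid_size} configurations, ~{grid_size * hours_per_run / 24:.0f} days of compute")
# → 15625 configurations, ~651 days of compute
```

Exhaustive search is out of reach even at this modest size. That is why the tools below rely on sampling, early stopping and parallelism.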

Generally, there are two types of toolkits for HPO: open-source tools and services that rely on cloud computing resources.

Below, we list a few tools that have made hyperparameter optimisation easier:

Google Vizier

Google Vizier was introduced as a scalable tuning service. Users choose a search algorithm, submit a configuration file and receive suggested hyperparameter sets to evaluate.

Despite its closed infrastructure, Google Vizier still makes it easy to modify existing HPO algorithms or design new ones.

Newcomers to hyperparameter tuning can work from the dashboard with very little effort:

- pick one of the suggested search algorithms and an early-stopping strategy
- run trials on Google Cloud resources
- check the results in the UI
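The workflow above is essentially a suggest/evaluate/report loop. The sketch below imitates that loop locally using plain random search. `ToyStudy`, `Trial` and the objective are hypothetical names for illustration; this is not the Vizier client API. The objective is an assumed stand-in for training a model.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    trial_id: int
    parameters: dict
    value: Optional[float] = None  # set when the result is reported back


class ToyStudy:
    """Hypothetical stand-in for a tuning service: it suggests parameter sets
    and records the objective values reported by the client."""

    def __init__(self, bounds, seed=0):
        self.bounds = bounds  # {name: (low, high)} for float parameters
        self.rng = random.Random(seed)
        self.trials = []

    def suggest(self, count=1):
        # Random search as the suggestion strategy; a real service offers
        # smarter algorithms such as Bayesian optimisation.
        new_trials = []
        for _ in range(count):
            params = {name: self.rng.uniform(lo, hi) for name, (lo, hi) in self.bounds.items()}
            trial = Trial(trial_id=len(self.trials), parameters=params)
            self.trials.append(trial)
            new_trials.append(trial)
        return new_trials

    def complete(self, trial, value):
        trial.value = value

    def best_trial(self):
        # Lower is better here because we minimise a loss.
        finished = [t for t in self.trials if t.value is not None]
        return min(finished, key=lambda t: t.value)


def objective(params):
    # Assumed toy loss with its minimum at learning_rate=0.01, dropout=0.2.
    return (params["learning_rate"] - 0.01) ** 2 + (params["dropout"] - 0.2) ** 2


study = ToyStudy({"learning_rate": (1e-4, 0.1), "dropout": (0.0, 0.5)})
for _ in range(20):
    # The client asks for two suggestions at a time, evaluates them, reports back.
    for trial in study.suggest(count=2):
        study.complete(trial, objective(trial.parameters))

best = study.best_trial()
print(best.trial_id, best.parameters, best.value)
```

The client code never sees the search algorithm. It only asks for suggestions and reports results, which is what lets a service swap algorithms behind the same interface.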

Amazon SageMaker’s Automatic Model Tuning

The automatic model tuning module in SageMaker simplifies building and deploying models. As a service, it is comparable to Google Vizier but runs on Amazon Web Services (AWS).

Its most attractive feature is that an HPO task needs only a model and its training data. It supports optimising complex models on large datasets with large-scale parallelism. Jupyter notebook integration also simplifies configuring the optimisation and visualising the results.
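A managed tuning job is bounded by two numbers: the total number of training jobs and how many may run at once. The SageMaker Python SDK’s `HyperparameterTuner` calls these `max_jobs` and `max_parallel_jobs`.

The sketch below mimics that budget locally with `concurrent.futures`. It does not call the SageMaker SDK. `train_and_score` is a hypothetical stand-in for a training job, and random sampling replaces whatever search strategy the service would use.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def train_and_score(params):
    # Hypothetical stand-in for one training job; returns a validation score
    # that peaks at learning_rate=0.05 and depth=6.
    return 1.0 - abs(params["learning_rate"] - 0.05) - 0.1 * abs(params["depth"] - 6) / 6


def run_tuning(max_jobs=12, max_parallel_jobs=3, seed=0):
    rng = random.Random(seed)
    history = []  # (config, score) pairs
    with ThreadPoolExecutor(max_workers=max_parallel_jobs) as pool:
        # Launch jobs in waves of at most max_parallel_jobs until max_jobs is spent.
        # Waves matter for adaptive strategies, where each batch can depend on
        # earlier results; plain random sampling does not use them.
        while len(history) < max_jobs:
            wave = min(max_parallel_jobs, max_jobs - len(history))
            configs = [
                {"learning_rate": rng.uniform(0.001, 0.2), "depth": rng.randint(2, 10)}
                for _ in range(wave)
            ]
            history.extend(zip(configs, pool.map(train_and_score, configs)))
    # The metric is maximised, so keep the highest-scoring configuration.
    best_config, best_score = max(history, key=lambda item: item[1])
    return best_config, best_score, history


best_config, best_score, history = run_tuning()
print(best_config, best_score)
```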

Microsoft’s NNI

Microsoft’s Neural Network Intelligence (NNI) is an open-source toolkit for both automated machine learning (AutoML) and HPO. It provides a framework for training models and tuning hyperparameters while leaving users free to customise each part.

NNI is designed to be highly extensible, so researchers can plug in and test their own self-designed algorithms.

It is also compatible with TensorBoard and tensorboardX.
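NNI describes search spaces as JSON-style dictionaries. Each hyperparameter has a `_type` (for example `choice`, `uniform` or `loguniform`) and a `_value`.

As a minimal sketch of a self-designed algorithm, the hypothetical class below samples from such a dictionary. Its method names follow the shape of NNI’s Tuner interface (`update_search_space`, `generate_parameters`, `receive_trial_result`). It deliberately does not import NNI, so treat it as an illustration rather than a drop-in tuner.

```python
import math
import random


class RandomSketchTuner:
    """Random search over an NNI-style search space (illustrative only)."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)
        self.search_space = {}
        self.history = {}  # parameter_id -> (parameters, reported value)

    def update_search_space(self, search_space):
        self.search_space = search_space

    def _sample(self, spec):
        kind, value = spec["_type"], spec["_value"]
        if kind == "choice":
            return self.rng.choice(value)
        if kind == "uniform":
            return self.rng.uniform(value[0], value[1])
        if kind == "loguniform":
            # Uniform in log space, so each order of magnitude is equally likely.
            return math.exp(self.rng.uniform(math.log(value[0]), math.log(value[1])))
        raise ValueError(f"unsupported _type: {kind}")

    def generate_parameters(self, parameter_id):
        params = {name: self._sample(spec) for name, spec in self.search_space.items()}
        self.history[parameter_id] = (params, None)
        return params

    def receive_trial_result(self, parameter_id, parameters, value):
        self.history[parameter_id] = (parameters, value)


search_space = {
    "optimizer": {"_type": "choice", "_value": ["sgd", "adam"]},
    "dropout": {"_type": "uniform", "_value": [0.0, 0.5]},
    "learning_rate": {"_type": "loguniform", "_value": [1e-4, 1e-1]},
}

tuner = RandomSketchTuner()
tuner.update_search_space(search_space)
for pid in range(5):
    params = tuner.generate_parameters(pid)
    # A real trial would train a model here; this score is a made-up placeholder.
    tuner.receive_trial_result(pid, params, value=1.0 - params["dropout"])
```

A smarter tuner would use the values collected in `receive_trial_result` to bias later calls to `generate_parameters`. That hook is where a researcher’s own algorithm would live.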

Tune

Ray Tune is a hyperparameter tuning library developed at UC Berkeley’s RISELab on top of Ray, a framework for distributed computing. Ray is designed to allocate computational resources efficiently, and Tune can run most search methods in parallel.

Tune uses a master-worker architecture to centralise decision-making. It communicates with its distributed workers through the Ray Actor API, which lets classes and objects be used in parallel and distributed settings.

Tune uses a Trainable class interface to define an actor class specifically for training models. This interface exposes methods such as _train, _stop, _save and _restore, which allow Tune to monitor intermediate training metrics and stop low-performing trials. In more recent Ray releases these methods are named step, cleanup, save_checkpoint and load_checkpoint respectively.

pip install 'ray[tune]' torch torchvision

HpBandSter

Its creators describe this library as a distributed Hyperband implementation on Steroids. This Python 3 package is a framework for distributed hyperparameter optimisation. It started out as a simple implementation of Hyperband, a bandit-based algorithm for HPO. It also contains an implementation of BOHB (Robust and Efficient Hyperparameter Optimization at Scale), which combines Bayesian optimisation with Hyperband.

pip install hpbandster
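Hyperband is built on successive halving:

1. Start many configurations on a small budget.
2. Keep the best 1/eta of them.
3. Give the survivors eta times more budget, and repeat.

Hyperband runs several such brackets, each trading the number of configurations against the starting budget. Below is a minimal single-process sketch with eta = 3, a maximum budget of 27 and a made-up loss; these values are assumptions. It does not use HpBandSter’s API. BOHB would replace the uniform random sampling of new configurations with a model-based (kernel density) sampler.

```python
import math
import random


def toy_loss(config, budget):
    # Assumed stand-in for "train with this budget (e.g. epochs) and return the
    # validation loss": more budget lowers the loss, and x = 0.3 is optimal.
    return (config["x"] - 0.3) ** 2 + 1.0 / budget


def hyperband(max_budget=27, eta=3, seed=0):
    rng = random.Random(seed)
    # s_max = largest s with eta**s <= max_budget (integer loop avoids log rounding).
    s_max = 0
    while eta ** (s_max + 1) <= max_budget:
        s_max += 1
    best_loss, best_config, best_budget = math.inf, None, None
    for s in range(s_max, -1, -1):
        # Bracket s: many configurations on a small budget when s is large,
        # a few configurations on the full budget when s is 0.
        n = math.ceil((s_max + 1) * eta ** s / (s + 1))
        r = max_budget / eta ** s
        configs = [{"x": rng.uniform(0.0, 1.0)} for _ in range(n)]
        # Successive halving within the bracket.
        for i in range(s + 1):
            budget = r * eta ** i
            ranked = sorted((toy_loss(c, budget), j) for j, c in enumerate(configs))
            loss, j = ranked[0]
            if loss < best_loss:
                best_loss, best_config, best_budget = loss, configs[j], budget
            # Keep the top 1/eta of the configurations for the next, larger budget.
            keep = max(1, len(configs) // eta)
            configs = [configs[k] for _, k in ranked[:keep]]
    return best_loss, best_config, best_budget


best_loss, best_config, best_budget = hyperband()
print(best_budget, best_config, best_loss)
```

With these settings the brackets evaluate 27, 12, 6 and 4 configurations on starting budgets of 1, 3, 9 and 27. Cheap, low-budget runs weed out most candidates before any full-budget training happens.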

Hyperopt: Distributed Hyperparameter Optimisation

Hyperopt is a Python library for serial and parallel optimisation over real-valued, discrete, and conditional search spaces.

Currently, Hyperopt supports these three algorithms:

- Random Search

- Tree of Parzen Estimators (TPE)

- Adaptive TPE

All of these algorithms can be parallelised using:

- Apache Spark

- MongoDB

pip install hyperopt
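To illustrate the idea behind TPE, here is a deliberately simplified one-dimensional sketch:

- Past trials are split into a "good" group (the best `gamma` fraction) and the rest.
- A Parzen (Gaussian kernel) density is fitted to each group: l(x) for the good group and g(x) for the rest.
- The next point is the sampled candidate that maximises l(x)/g(x).

This is not Hyperopt’s implementation, whose entry point is `fmin(fn, space, algo=tpe.suggest, max_evals=...)`. The fixed bandwidth, `gamma` and candidate count are assumed values.

```python
import math
import random


def parzen_pdf(x, centres, bandwidth):
    # Mixture of equally weighted Gaussians centred on the observed points.
    norm = 1.0 / (len(centres) * bandwidth * math.sqrt(2 * math.pi))
    return norm * sum(math.exp(-0.5 * ((x - c) / bandwidth) ** 2) for c in centres)


def tpe_minimise(fn, low, high, n_startup=10, n_iter=50, gamma=0.25,
                 n_candidates=24, bandwidth=None, seed=0):
    rng = random.Random(seed)
    bw = bandwidth or (high - low) / 10
    xs, ys = [], []
    # Start with random search so both densities have data to work with.
    for _ in range(n_startup):
        x = rng.uniform(low, high)
        xs.append(x)
        ys.append(fn(x))
    for _ in range(n_iter):
        order = sorted(range(len(xs)), key=lambda i: ys[i])
        n_good = max(1, int(gamma * len(xs)))
        good = [xs[i] for i in order[:n_good]]
        bad = [xs[i] for i in order[n_good:]]
        # Draw candidates from l(x), clipped to the bounds, and keep the one
        # with the highest l(x) / g(x) ratio.
        candidates = [min(high, max(low, rng.gauss(rng.choice(good), bw)))
                      for _ in range(n_candidates)]
        best_c = max(candidates,
                     key=lambda c: parzen_pdf(c, good, bw) / (parzen_pdf(c, bad, bw) + 1e-12))
        xs.append(best_c)
        ys.append(fn(best_c))
    i_best = min(range(len(xs)), key=lambda i: ys[i])
    return xs[i_best], ys[i_best]


best_x, best_y = tpe_minimise(lambda x: (x - 3) ** 2, low=-10, high=10)
print(best_x, best_y)
```

In Hyperopt itself, the parallel backends listed above are chosen through the `trials` argument of `fmin`, for example a `SparkTrials` object for Apache Spark or a `MongoTrials` object for MongoDB.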

Facebook’s HiPlot

Developers at Facebook AI used HiPlot to explore the tuning of deep neural networks with dozens of hyperparameters. They ran more than 100,000 experiments with it before open-sourcing the tool.

HiPlot helps practitioners visualise how hyperparameters influence performance on a given task, mainly through interactive parallel coordinates plots.

It can be used straight away. Interaction comes down to simple operations such as dragging and sliding the cursor along the axes and watching the plot change in real time, much like adjusting the equaliser in a phone’s music player.
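HiPlot takes one record per experiment, typically as a list of dictionaries. Dragging a selection along an axis ("brushing") keeps only the runs that fall inside the selected range.

The sketch below reproduces that filtering step in plain Python. `brush` is a hypothetical helper and the run values are made up; the comment at the end shows how the same list would be handed to HiPlot in a notebook.

```python
# Hyperparameter runs in the list-of-dicts shape HiPlot accepts (values are made up).
runs = [
    {"dropout": 0.1, "lr": 0.001, "loss": 10.0, "optimizer": "SGD"},
    {"dropout": 0.15, "lr": 0.01, "loss": 3.5, "optimizer": "Adam"},
    {"dropout": 0.3, "lr": 0.1, "loss": 4.5, "optimizer": "Adam"},
]


def brush(rows, **ranges):
    """Keep rows whose values fall inside every (low, high) range, roughly what
    dragging selections along parallel-coordinate axes does."""
    return [
        row for row in rows
        if all(low <= row[axis] <= high for axis, (low, high) in ranges.items())
    ]


# Select runs with loss at most 5.0 and dropout between 0.1 and 0.2.
selected = brush(runs, loss=(0.0, 5.0), dropout=(0.1, 0.2))
print(selected)

# In a notebook with HiPlot installed, the interactive version would be:
#   import hiplot as hip
#   hip.Experiment.from_iterable(runs).display()
```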

For an extensive read on the state of hyperparameter optimisation, check this review.