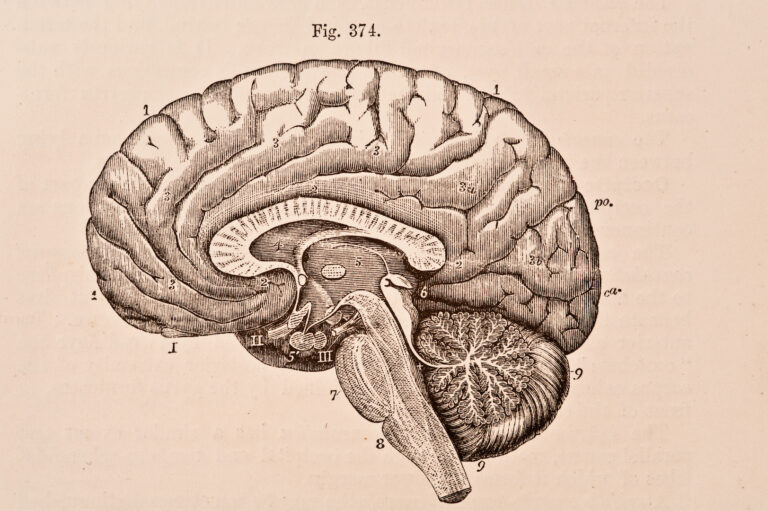

Recurrent Neural Networks (RNNs) have become the de facto neural network architecture for Natural Language Processing (NLP) tasks. Over the last few years, recurrent architectures have advanced considerably across NLP tasks, from Named Entity Recognition to Language Modeling and Machine Translation. The success of RNNs in NLP can be ascribed to their ability to handle sequential data, thanks to their “memory”. Conventional feedforward Artificial Neural Networks (ANNs), by contrast, have no notion of time: the only input they take into consideration is the current example they are being fed, whereas RNNs consider both the current input and a “context unit” built from what they have seen previously.

Understanding Recurrent Neural Networks

ML practitioner Denny Britz says that in a traditional neural network, it is generally assumed that all inputs (and outputs) are independent of each other. However, in NLP, to predict the next word in a sentence, it helps to know which words came before it. RNNs are called recurrent because they perform the same task for every element of a sequence, with each output depending on the previous computations. Another way to think about RNNs is that they have a “memory” that captures information about what has been calculated so far. In theory, RNNs can make use of information in arbitrarily long sequences, but in practice they are limited to looking back only a few steps. Here is what a typical RNN architecture looks like:

In an article, Britz explains that the diagram shows a recurrent neural network being unrolled (or unfolded) into a full network. For example, if the sequence is a sentence of five words, the network would unfold into a five-layer neural network, one layer for each word. In terms of disadvantages, the conventional RNN is not the go-to network for all NLP tasks, as it performs poorly on sentences with many words. Instead, more advanced RNN variants such as LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) tend to outperform conventional RNNs on long sequences.
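The recurrence and unrolling described above can be sketched in a few lines of plain Python. This is a minimal illustration, not Britz's code: the function names `rnn_step` and `run_rnn`, the tanh activation, the random weights and the made-up three-dimensional word vectors are all assumptions chosen for the example.

```python
import math
import random

def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent step: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h)."""
    h = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h.append(math.tanh(total))
    return h

def run_rnn(inputs, W_xh, W_hh, b_h):
    """Apply the same step (same weights) to every element of the sequence."""
    h = [0.0] * len(b_h)  # the "memory" starts out empty
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)  # new state depends on the old one
        states.append(h)
    return states

def random_matrix(rows, cols, rng, scale=0.5):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
input_size, hidden_size = 3, 4  # assumed sizes, for illustration only
W_xh = random_matrix(hidden_size, input_size, rng)
W_hh = random_matrix(hidden_size, hidden_size, rng)
b_h = [0.0] * hidden_size

# A hypothetical five-word sentence; each word is a made-up 3-dimensional vector.
sentence = [[rng.uniform(-1, 1) for _ in range(input_size)] for _ in range(5)]
states = run_rnn(sentence, W_xh, W_hh, b_h)
print(len(states))  # one hidden state per word: 5
```

The loop in `run_rnn` is the unrolled network: five words give five applications of the same step, and the final state depends on every earlier word through the chain of hidden states.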

Top Must-Read Papers on Recurrent Neural Networks

Speech Recognition With Deep Recurrent Neural Networks: This 2013 paper investigates deep recurrent neural networks, which combine the multiple levels of representation that have proved effective in deep networks with the flexible use of long-range context that RNNs provide. When trained end-to-end with suitable regularisation, the authors found that deep LSTM RNNs achieved a test set error of 17.7 percent on the TIMIT phoneme recognition benchmark, the best recorded score at the time.

Training RNNs as Fast as CNNs: CNNs and RNNs, the two pivotal deep neural network architectures, are both widely explored for NLP tasks, but RNNs are harder to parallelise because each state depends on the previous one. This 2017 paper proposes the Simple Recurrent Unit (SRU), a recurrent unit in which most of the computation at each timestep is independent of the recurrence and can therefore run in parallel, making it about as fast as a convolutional layer.

Long Short-Term Memory: This 1997 paper by Sepp Hochreiter of the Technical University of Munich and Jürgen Schmidhuber of the Swiss research institute IDSIA introduced what is now a standard building block in NLP: a gradient-based method called Long Short-Term Memory (LSTM). The authors benchmarked it against established methods such as Recurrent Cascade-Correlation, Neural Sequence Chunking, Backpropagation Through Time and Real-Time Recurrent Learning. A key result was that LSTM could learn to bridge time lags in excess of 1,000 discrete time steps, even on noisy input sequences.
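To see why a gated cell can carry information across so many steps, here is a rough sketch of one LSTM step in plain Python. It uses the now-common formulation with a forget gate, which was added in later work and is not the exact 1997 formulation. The names `gate` and `lstm_step`, and the hand-set (not learned) biases in the demo, are assumptions made for illustration.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def gate(params, name, z, activation):
    """Compute activation(W z + b) for the named gate."""
    W, b = params[name]
    return [activation(sum(w * v for w, v in zip(row, z)) + b_k)
            for row, b_k in zip(W, b)]

def lstm_step(x, h_prev, c_prev, params):
    """One LSTM step in the common forget-gate formulation."""
    z = x + h_prev                               # concatenate input and previous hidden state
    i = gate(params, "input", z, sigmoid)        # how much new content to write
    f = gate(params, "forget", z, sigmoid)       # how much old cell state to keep
    o = gate(params, "output", z, sigmoid)       # how much of the cell to expose
    g = gate(params, "candidate", z, math.tanh)  # proposed new content
    c = [f_k * c_k + i_k * g_k for f_k, c_k, i_k, g_k in zip(f, c_prev, i, g)]
    h = [o_k * math.tanh(c_k) for o_k, c_k in zip(o, c)]
    return h, c

# Hand-set parameters (an assumption, not trained values): the forget gate is
# held almost fully open and the input gate almost fully closed.
zeros = [[0.0, 0.0]]  # one hidden unit, acting on [x, h_prev]
params = {
    "input": (zeros, [-20.0]),
    "forget": (zeros, [20.0]),
    "output": (zeros, [0.0]),
    "candidate": (zeros, [0.0]),
}

h, c = [0.0], [1.0]  # store the value 1.0 in the cell state
for _ in range(1000):
    h, c = lstm_step([0.5], h, c, params)
print(c)  # the stored value is still very close to 1.0
```

Because the cell state is updated additively and scaled only by the forget gate, a gate value near 1 lets the stored value survive 1,000 steps almost unchanged. In a trained network the gates are learned rather than fixed by hand.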

Quasi-Recurrent Neural Networks: This 2016 paper addresses a common criticism of RNNs: each timestep’s computation depends on the previous timestep’s output, which limits parallelism and makes RNNs unwieldy for very long sequences. The researchers introduced quasi-recurrent neural networks (QRNNs), which alternate convolutional layers that apply in parallel across timesteps with a minimalist recurrent pooling function that applies in parallel across channels. The paper reported better predictive accuracy than stacked LSTMs of the same hidden size, and the researchers posited that, thanks to increased parallelism, QRNNs were up to 16 times faster at training and test time. Presented as building blocks for a range of sequence tasks, QRNNs fared well on sentiment classification and language modelling.
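The QRNN idea can be sketched as follows: gates are produced by a causal convolution over the inputs, which needs no earlier outputs, and only a cheap elementwise pooling step runs sequentially. This is a simplified sketch, not the authors' implementation. It assumes a filter width of 2 and per-channel weights instead of the paper's full weight matrices, and the names `causal_conv` and `qrnn_layer` and all the numbers are invented for the example.

```python
import math

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def causal_conv(xs, w_prev, w_curr):
    """Width-2 causal convolution: out_t = w_prev * x_{t-1} + w_curr * x_t.

    Each output depends only on inputs, never on an earlier output, so all
    timesteps could be computed in parallel.
    """
    padded = [[0.0] * len(xs[0])] + xs  # zero "previous input" for t = 0
    return [
        [wp * p + wc * c
         for wp, wc, p, c in zip(w_prev, w_curr, padded[t], padded[t + 1])]
        for t in range(len(xs))
    ]

def qrnn_layer(xs, weights):
    """Convolution for candidates and gates, then sequential pooling."""
    z = [[math.tanh(v) for v in row] for row in causal_conv(xs, *weights["z"])]
    f = [[sigmoid(v) for v in row] for row in causal_conv(xs, *weights["f"])]
    o = [[sigmoid(v) for v in row] for row in causal_conv(xs, *weights["o"])]

    c = [0.0] * len(xs[0])
    hs = []
    for z_t, f_t, o_t in zip(z, f, o):
        # The only sequential part: an elementwise update, independent per channel.
        c = [f_k * c_k + (1.0 - f_k) * z_k for f_k, c_k, z_k in zip(f_t, c, z_t)]
        hs.append([o_k * c_k for o_k, c_k in zip(o_t, c)])
    return hs

# Made-up inputs: three timesteps, two channels.
xs = [[0.1, -0.2], [0.4, 0.3], [-0.5, 0.8]]
weights = {
    "z": ([0.5, -0.3], [1.0, 0.7]),
    "f": ([0.2, 0.1], [-0.4, 0.6]),
    "o": ([0.3, 0.3], [0.9, -0.2]),
}
outputs = qrnn_layer(xs, weights)
print(len(outputs), len(outputs[0]))
```

The expensive part (the convolutions) has no dependence between timesteps, while the recurrent part is reduced to a few multiplications per channel. That split is what allows the increased parallelism the paper describes.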

Google’s Neural Machine Translation System: Finally, Google’s Neural Machine Translation system, better known as GNMT, consists of a deep LSTM network with eight encoder and eight decoder layers using attention and residual connections. Pegged as an end-to-end automated translation system, it provided a single method to translate between multiple languages, whereas earlier models had each been built for a single language pair. The model that powers Google Translate consists of two RNNs: an encoder that reads the source sentence and a decoder that produces the translation. In fact, a recent article by a tech magazine indicates that research in NMT is seeing a spike, with big tech companies publishing 76 papers in the short period from February to April 2018. From Facebook to IBM and Amazon, companies are investing significantly to improve existing NMT models for better translation.