When we hear the word "uncensored" in AI, we think of models that can be harmful and possibly biased. While that can certainly be true, uncensored models are increasingly outperforming their aligned counterparts, even if they are potentially harmful.

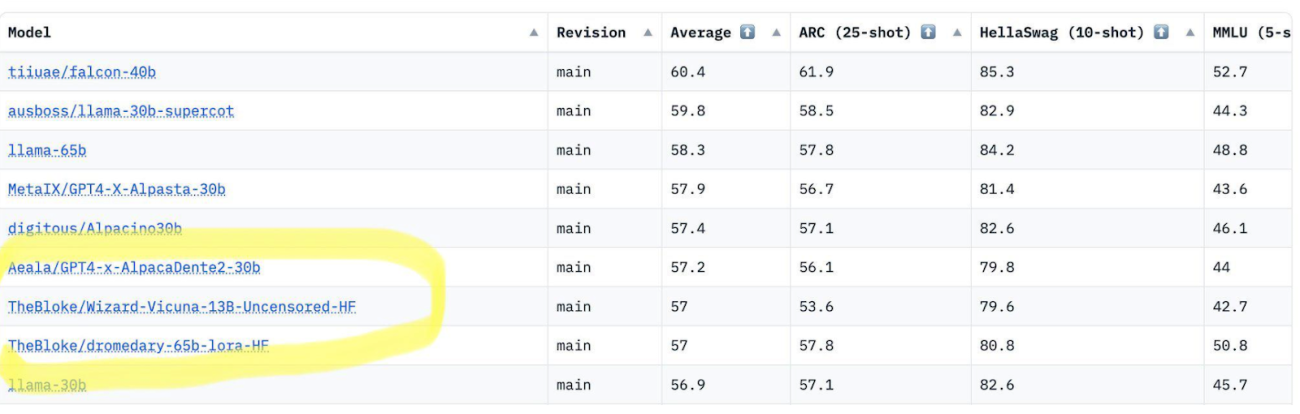

According to the Open LLM Leaderboard on Hugging Face, uncensored models like Wizard-Vicuna-13B-Uncensored-HF, whose developer recently got into trouble for releasing the model to the public, have been competing with LLaMA and Falcon and rank among the top models.

Over-fine-tuning a model may handicap its capabilities. This phenomenon is called the alignment tax of AI models. When a model goes through round after round of testing, with humans in the loop trying to make it as aligned and as "politically correct" as possible, it loses a lot of its performance. Much of this is attributed to reinforcement learning from human feedback (RLHF).

Alignment tax is the extra cost an AI system has to pay to stay aligned, compared with building an unaligned, or uncensored, model. That cost ultimately shows up as reduced performance.

Too much training

There is no doubt that OpenAI's decision to use RLHF to train its GPT models gave birth to the much-hyped and much-loved ChatGPT. But even then, according to the GPT-4 technical report, the model's answers were better calibrated, meaning its confidence more closely matched how often it was actually right, before the researchers fine-tuned it with RLHF.

The "Sparks of Artificial General Intelligence" paper by Microsoft Research explains this GPT-4 phenomenon. It describes how, at an early stage of development, the model performed far better than the final version fine-tuned with RLHF. Even though the model is now more aligned and gives balanced answers, its ability to answer was much better before.

In a presentation of the paper, Sébastien Bubeck, one of its lead authors, described the problems that appeared as GPT-4 was trained further. He gave the example of the prompt "draw a unicorn" and explained how the quality of the output degraded significantly after the model was aligned for safety.

Many Reddit users described something similar in a post. In the early days after its release, ChatGPT used to provide much better results. But after people started to jailbreak it, OpenAI added more guardrails and restrictions to address the issue, and the responses got worse over time.

Meta AI recently released LIMA, a 65B-parameter LLaMA model fine-tuned on just 1,000 carefully curated prompts and responses, without RLHF. The paper weighs the relative importance of unsupervised pre-training on raw data against large-scale instruction tuning and reinforcement learning. According to the paper, LIMA's responses were equivalent to or preferred over GPT-4's in 43% of cases. Though the model was not really uncensored, the result suggests that RLHF might be hindering ChatGPT's performance.

Is poor performance a fair bargain for alignment?

As the world heads towards more AI regulation, it is important for models to be aligned with what developers and users want. To filter out the misinformation these models are capable of producing, it is necessary to have humans in the loop who can bring hallucinating models back on track.

These models are essentially built on internet data. Apart from a lot of useful information, that data is also inadvertently scraped from websites that spread misinformation. This results in the model giving out falsehoods, which by any measure should be controlled. On the other hand, do you really want your chatbot to withhold the information you ask for? Even though ChatGPT-like models are designed by their developers not to spew out controversial or misleading content, many users have criticised them for being too "woke".

A paper titled "Scaling Laws for Reward Model Overoptimization" explains how optimising too hard against a reward model, which is only a learned proxy for human preferences, eventually degrades the model's performance on the true ("gold") objective. Some people compare over-tuning a model to a lobotomy.
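To make the overoptimisation idea concrete, here is a minimal Python sketch of the best-of-n curve from that paper. It assumes the paper's functional form for the gold reward, R(d) = d(α − βd), where d is the square root of the KL divergence between the optimised and the initial policy, and its analytic KL for best-of-n sampling, log n − (n − 1)/n. The coefficients `ALPHA` and `BETA` are hypothetical values chosen only for illustration, so the printed numbers are not results from the paper.

```python
import math

# Hypothetical coefficients for illustration only; in the paper they are
# fitted separately for each reward-model size.
ALPHA = 1.0
BETA = 0.25


def kl_best_of_n(n: int) -> float:
    """KL divergence between best-of-n sampling and the initial policy."""
    return math.log(n) - (n - 1) / n


def gold_reward_gain(d: float, alpha: float = ALPHA, beta: float = BETA) -> float:
    """Best-of-n form R(d) = d * (alpha - beta * d), with d = sqrt(KL)."""
    return d * (alpha - beta * d)


def peak_distance(alpha: float = ALPHA, beta: float = BETA) -> float:
    """Setting dR/dd = 0 gives the point where gold reward stops improving."""
    return alpha / (2 * beta)


if __name__ == "__main__":
    print(f"gold reward peaks at d = {peak_distance():.2f}")
    # Larger n means harder optimisation against the reward model.
    for n in [1, 4, 16, 64, 256, 1024, 10**4, 10**6]:
        d = math.sqrt(kl_best_of_n(n))
        print(f"n={n:>8}  d={d:5.2f}  gold reward gain={gold_reward_gain(d):6.3f}")
```

The reward model's own score keeps rising as n grows, because best-of-n always picks its top-scoring sample, but in this sketch the gold reward rises, peaks at d = α/(2β) and then falls. That gap is the overoptimisation the paper describes.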

For and against

Since uncensored models have been outperforming many censored ones, one can make the case that they should be allowed and used to build chatbots. Models that filter out responses their creators consider unsafe might not suit researchers and scientists who want to explore the field freely.

On the other hand, uncensored models come with problems such as misuse by bad actors and AI built with malicious intent. While one can argue that the open source community will be responsible enough not to misuse unaligned models, there is no guarantee of that.

Moreover, aligning models to represent a single viewpoint might not be the way forward. If OpenAI's chatbot is too woke, developers should have an alternative that lets them build their own versions of ChatGPT.

Imagine if China released a chatbot so aligned with the government's beliefs that it could not operate openly or criticise anything in the country. Even if developers control the datasets and apply RLHF as much as they can, how likely is it that such a model would be perfectly aligned and never say anything its creators don't want it to?