|

Listen to this story

|

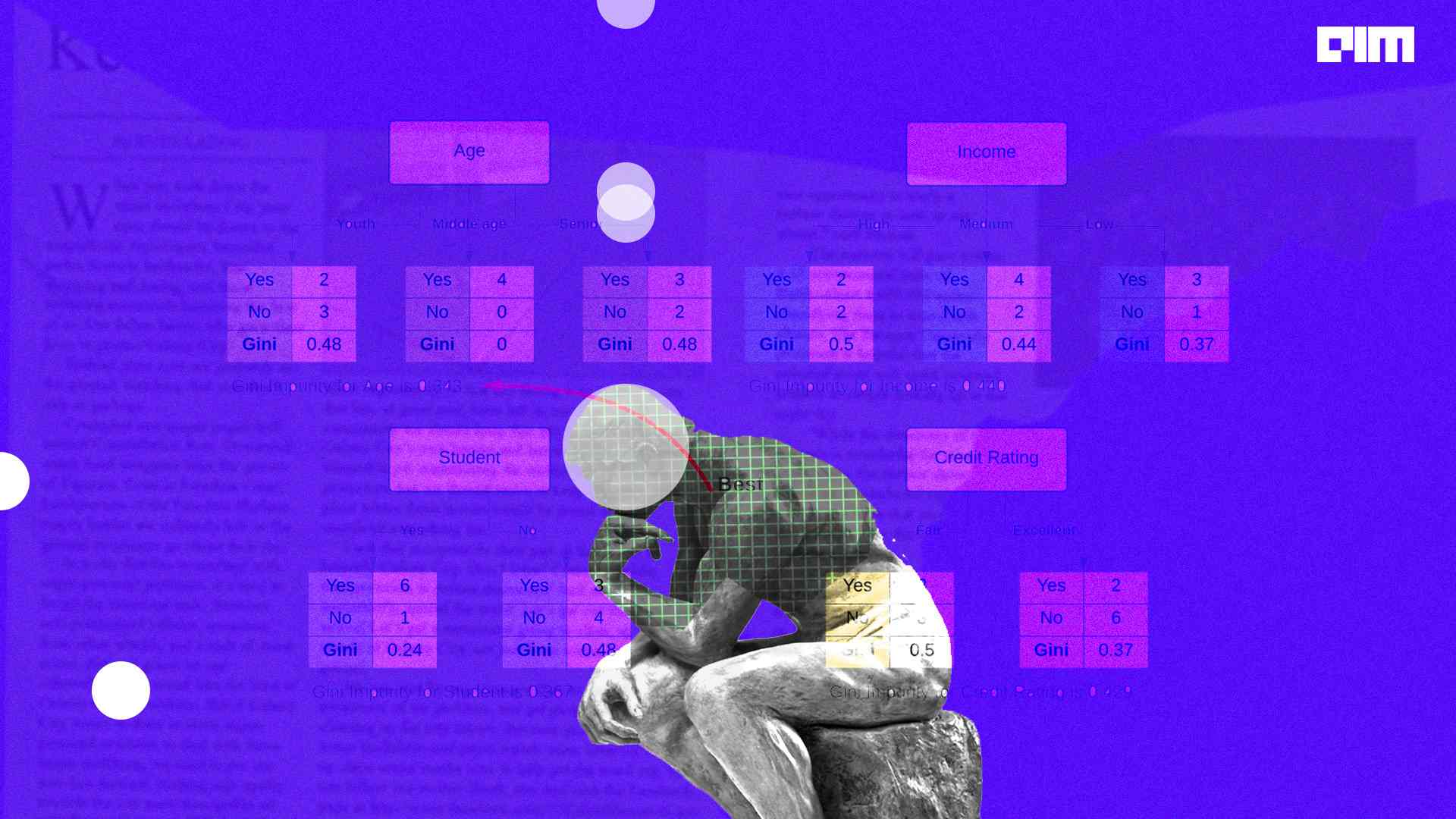

Gini impurity is a function that determines how well a decision tree was split. Basically, it helps us to determine which splitter is best so that we can build a pure decision tree. Gini impurity ranges values from 0 to 0.5. It is one of the methods of selecting the best splitter; another famous method is Entropy which ranges from 0 to 1. In this article, we will have a look at the mathematical concept of the Gini impurity method for decision tree split. We will take random data and understand this concept from the very basics. Below is a list of points that we will cover in this article.

Table of contents

- What is a gini index decision tree?

- The math behind the Gini impurity

- Constructing the decision tree using Gini impurity

Let’s start with having a quick introduction to the decision trees.

What is a decision tree?

A Decision tree is the supervised machine learning algorithm. As the name suggests, if we visualize it, it will appear like a tree. It is a powerful algorithm that can perform well on complex data, it is also versatile in nature because it can perform both classification and regression.

The decision trees have a root node, internal nodes, and leaf nodes where,

- Root node: It’s a node from where the tree is originated

- Internal nodes: This node contains the only evaluation of variables

- Leaf nodes: These nodes are responsible for making decisions

Now, we will go through the mathematics behind the Gini impurity by implementing a decision tree on a given dataset.

The math behind the Gini impurity

Let’s have a look at the formula of Gini impurity. The formula of Gini impurity is given as:

Where,

The j represents the number of classes in the label, and

The P represents the ratio of class at the ith node.

Gini impurity has a maximum value of 0.5, which is the worst we can get, and a minimum value of 0 means the best we can get.

Constructing the decision tree using Gini impurity

We will use the banknote dataset to implement a decision tree. The dataset comprises the details of whether a banknote is genuine or not. There are two features given and the label has two values 0 or 1. (0 for authentic, 1 for unauthentic).

We have taken only 10 rows as a sample for easier plotting of a tree and a better understanding of Gini impurity.

Let’s construct the decision tree for the given data. For each value of features, we calculate the Gini impurity since our feature is continuous. It is not feasible to calculate each value one by one, so we will take one value for each feature.

Now, we will calculate the Gini impurity scores for the different combinations of values for each of the variables.

Variance of Wavelet

For values <= 0.9, we have 8 records there out of 10, and 2 records with values>0.90.

- For Variance of Wavelet <=0.9 and class == 1 values are 6 so ratio will be 6/8

- For Variance of Wavelet <=0.9 and class == 0 values are 2 so the ratio will be 2/8

- Gini = 1 – ((6/8)^2 + (2/8)^2) = 0.38 (by using the above formula)

- For Variance of Wavelet >0.9 and class == 0 values are so ratio will be 2/2

- For Variance of Wavelet >0.9 and class == 1 values are so ratio will be 0/2

- Gini = 1 – ((2/2)^2 + (0/2)^2) = 0

Gini = 0 means it is the purest node and it is a leaf.

Skewness of wavelet

For values <= 7.161, we have 7 records there out of 10, and 3 records with value >7.161.

- For Skewness of wavelet <=7.161 and class == 0 values are 1 so the ratio will be 1/7

- For Skewness of wavelet <=7.161 and class == 1 values are 6 so ratio will be 6/7

- Gini = 1 – ((1/7)^2 + (6/7)^2) = 0.25

- For Skewness of wavelet >7.161 and class == 0 values are 3 so the ratio will be 1/3

- For Skewness of wavelet >7.161 and class == 1 values are 0 so the ratio will be 0/3

- Gini = 1 – ((1/3)^2 + (0)^2) = 0

Gini = 0 means it is the purest node and it is a leaf.

We will take the Skewness of the wavelet as the root node because it has lower impurity than the Variance of the wavelet. After calculating the above values, we get this decision tree as:

We can see the root node as skewness of the wavelet. It has a total of 10 samples and out of which ‘0’ are 4 and ‘1’ are 6, and it is labelled as class 1 because the majority of the class values are 1 that’s why, since it is not a leaf node so we cannot make a decision. For condition <=7.161 FALSE( > 7.161) as a result, we get the leaf node which has GINI = 0; which means it’s the purest node that can’t be further divided.

For condition <=7.161 TRUE we have calculated GINI = 0.2579 but for the value of Variance of wavelet <= 0.811 performs better than Skewness of wavelet. That is why we calculated the Skewness of the wavelet instead of the Variance of the wavelet. Features can be repeated and cannot be repeated.

Now we will calculate the Gini impunity for the left node of the root node.

Variance of Wavelet

For values <= 0.811, we have 6 records there out of 7, and 1 record with values>0.811.

- For Variance of Wavelet <=0.811 and class == 0 values are 0 so ratio will be 0/6

- For Variance of Wavelet <=0.811 and class == 1 values are 6 so the ratio will be 6/6

- Gini = 1 – ((0/7)^2 + (6/6)^2) = 0

Gini = 0 means it is the purest node and it is a leaf.

- For Variance of Wavelet >0.811 and class == 0 values are so ratio will be 1/1

- For Variance of Wavelet >0.811 and class == 1 values are so ratio will be 0/1

- Gini = 1 – ((1/1)^2 + (0/2)^2) = 0

Gini = 0 means it is the purest node and it is a leaf.

Above, we have just calculated the leaf nodes of the left subtree. Considering this process, the final tree would look as,

Final words

In this article, we have learned about the decision tree and we implemented the decision tree for binary classification. To construct a decision tree, we used the Gini impurity method for splitting the tree. We could understand the mathematical concept of the approach in detail.