Associative memory is a strategy that living organisms have developed over many years of evolution. Humans rely on it too: we link two unrelated things through a story so that we can grasp more information in less time. Memory optimisation matters for resource-hungry deep learning tasks as well. Though the analogy between neuroscience and artificial neural networks has been beaten to a pulp, mimicking nature is still opening up exciting avenues in the world of AI.

Associative memory is of great significance in machine learning, and its application can be seen in energy-based models as well. Energy-based models (EBMs) were set out as a general learning framework in 2006 by Yann LeCun and his team.

Energy-based models are one way of improving a model's predictive quality. For instance, consider an image that is fed to a convolutional network: the model captures the dependencies at a granular level and assigns probability scores to the candidate classes.

In energy-based models, by contrast, classification is based on energy values: the candidate with the lowest energy is selected.
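To make the contrast concrete, here is a minimal sketch of both routes on made-up scores. It assumes that the energy of a class is simply the negative of the network's raw score for it; the scores and the helper names `energies_from_scores` and `softmax_probabilities` are illustrative and do not come from any particular model or library.

```python
import numpy as np

def energies_from_scores(scores):
    # Assumption for illustration: energy of a class = negative raw score.
    return -np.asarray(scores, dtype=float)

def softmax_probabilities(scores):
    # Conventional route: normalise the raw scores into probabilities.
    z = np.asarray(scores, dtype=float)
    z = z - z.max()          # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

scores = [1.2, -0.3, 2.5]    # made-up scores for three classes

energies = energies_from_scores(scores)
probs = softmax_probabilities(scores)

pred_by_energy = int(np.argmin(energies))  # lowest energy wins
pred_by_prob = int(np.argmax(probs))       # highest probability wins
```

Under this assumption both routes pick the same class. The energy route simply skips the normalisation step, which is one reason energy values are convenient when normalising over all candidates is expensive.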

Before we go any further, a few things need a mention:

An attractor network is a type of recurrent dynamical network that evolves toward a stable pattern over time. Attractor networks have largely been used in computational neuroscience to model neuronal processes such as associative memory.

Hopfield nets serve as content-addressable (“associative”) memory systems with binary threshold nodes.
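As a rough illustration of content-addressable recall, the sketch below stores one pattern in a small Hopfield network using the classical Hebbian outer-product rule and then recovers it from a corrupted copy. The pattern, the network size and the function names (`store`, `energy`, `recall`) are chosen for this example only.

```python
import numpy as np

def store(patterns):
    # Hebbian rule: average of outer products, with no self-connections.
    patterns = np.asarray(patterns, dtype=float)
    n = patterns.shape[1]
    weights = patterns.T @ patterns / n
    np.fill_diagonal(weights, 0.0)
    return weights

def energy(weights, state):
    # Hopfield energy; stored patterns sit at low-energy states.
    return -0.5 * state @ weights @ state

def recall(weights, state, sweeps=5):
    # Asynchronous binary threshold updates, one node at a time.
    state = np.array(state, dtype=float)
    for _ in range(sweeps):
        for i in range(len(state)):
            state[i] = 1.0 if weights[i] @ state >= 0 else -1.0
    return state

pattern = np.array([1, -1, 1, -1, 1, -1, 1, -1])
weights = store([pattern])

probe = pattern.copy()
probe[:2] *= -1                      # corrupt the first two bits
restored = recall(weights, probe)
```

Here `restored` matches `pattern` again, and its energy is lower than that of the corrupted `probe`, which is what it means for the network to settle into an attractor.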

A variety of energy-based memory models have been proposed since the original Hopfield network was introduced, in an effort to mitigate its limitations.

While Hopfield networks memorise patterns quickly, deep probabilistic models are slow. The latter rely on gradient-based training, which requires many updates (typically thousands or more) to settle new inputs into the weights of a network.

Hence, writing memories via parametric gradient-based optimisation is not straightforward, and it poses challenges for applications where adaptation needs to happen quickly.

To address these challenges, researchers at DeepMind propose a novel approach that leverages meta-learning to enable fast storage of patterns into the weights of arbitrarily structured neural networks, as well as fast associative retrieval.

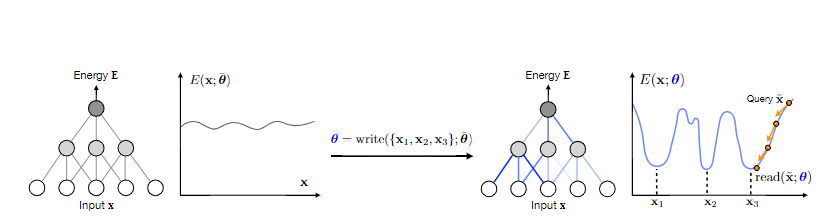

This novel meta-learning approach to energy-based memory models uses a neural architecture as an energy model. The goal here is to store patterns in its weights as quickly as possible and then retrieve them from associative memory.

Overview Of Meta-Learning Based Energy Methods

Meta-learning energy-based memory models are inspired by gradient-based meta-learning techniques.

The energy-based model contains an energy function, which assigns a scalar energy to each input and so determines the final prediction. The energy function is modelled by a neural network, and the writing rule is implemented as a weight update that produces parameters from the initialisation such that the stored patterns become local minima of the energy, as can be seen in the illustration above.
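The write-then-read idea can be sketched on a toy problem. The code below is not the researchers' model: it assumes a hand-picked quartic energy in place of a neural network, and it uses fixed constants for the initial weights and step sizes, which are exactly the quantities that meta-learning would tune. All names (`write`, `read`, `write_rate`, `read_rate`) are hypothetical.

```python
import numpy as np

def energy(weights, x):
    # Toy energy (an assumption, not a neural network): a quadratic term
    # rewarding agreement with the weights plus a quartic term that keeps
    # the state bounded.
    return -0.5 * x @ weights @ x + 0.25 * np.sum(x ** 4)

def energy_grad_x(weights, x):
    # Gradient of the energy with respect to the state (weights symmetric).
    return -weights @ x + x ** 3

def energy_grad_weights(x):
    # Gradient of the energy with respect to the weights.
    return -0.5 * np.outer(x, x)

def write(init_weights, patterns, write_rate):
    # Writing: one gradient step on the summed energy of the patterns,
    # starting from the initial weights.
    grad = sum(energy_grad_weights(p) for p in patterns)
    return init_weights - write_rate * grad

def read(weights, query, read_rate=0.1, steps=300):
    # Reading: gradient descent on the energy with respect to the state.
    x = np.array(query, dtype=float)
    for _ in range(steps):
        x = x - read_rate * energy_grad_x(weights, x)
    return x

# Two orthogonal patterns, chosen so the arithmetic stays simple.
patterns = np.array([
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
], dtype=float)
n = patterns.shape[1]

weights = write(np.zeros((n, n)), patterns, write_rate=2.0 / n)

query = patterns[0].copy()
query[0] = -query[0]                 # corrupt one entry
retrieved = read(weights, query)
```

With these hand-picked values a single write step places both patterns at points where the energy gradient vanishes, and reading from the corrupted query slides back to the first pattern. For this particular energy the one-step write reduces to a Hebbian outer-product update; a learned, network-based energy would not generally have such a closed form.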

These models enable auto-association, that is, retrieving a stored pattern from a partial or corrupted version of it.

Deep neural networks are capable of both compressing and memorising training patterns. Energy-based memory models (EBMM) with meta-learning have been reported to perform well and even to maintain an efficient convolutional memory.

The approaches used in this work and the promising results indicate the potential use cases of EBMM in the future. Associative memory has long been of interest to the neuroscience and ML communities. A few takeaways from this model are as follows:

- This work introduces a novel learning method for deep associative memory systems.

- Learning representations and storing patterns in network weights have been demonstrated on a large class of neural networks.

- Thanks to meta-learning, the slowness of gradient-based learning is no longer an obstacle to writing patterns quickly.